Key Takeaways

- Failing CI pipelines, context switching, and slow reviews create a large, hidden productivity cost for engineering teams.

- Autonomous AI tools can analyze CI logs, generate fixes, and update pull requests so developers stay focused on feature work.

- Shorter, more reliable pipelines and AI-assisted reviews help distributed teams reduce delays across time zones.

- Configurable trust models and phased rollouts let teams adopt automation gradually while maintaining control and code quality.

- Teams can use Gitar to apply autonomous fixes and review support directly in their existing GitHub workflows: Try Gitar for CI fixes and review automation.

The Productivity Drain: Why Current Version Control Solutions Fall Short

How Traditional CI/CD Disrupts Developer Flow

CI pipeline failures continue to slow delivery. Environment inconsistencies, misconfigurations, and dependency failures frequently break builds, and many teams report developers spending up to 30 percent of their time on these issues. Each failure forces a shift from focused feature work into log reading and debugging.

The High Cost of Context Switching

Even a short interruption from a failing build can cost an hour of productive time once you factor in regaining context. For a 20-developer team, this context switching can add up to thousands of lost hours and as much as one million dollars per year in wasted capacity. Fragmented attention also increases the risk of defects and rework.

The Distributed Team Delay

Distributed teams often wait a full day for a simple code review response. A few review rounds between time zones can stretch a straightforward merge into several days. These delays compound across many pull requests and slow the entire release pipeline.

Introducing Autonomous AI for Self-Healing CI

Why Autonomous AI Matters for Version Control

Autonomous AI tools extend beyond static analysis or simple suggestions. These systems can inspect failing CI runs, propose specific code changes, apply them to branches, and re-run checks. They can also implement code review feedback so developers spend less time on mechanical edits and more time on design decisions.

Key Capabilities of Autonomous AI

- Autonomous CI fixes: The AI analyzes failed logs, identifies root causes, generates code or config changes, and updates pull requests. It can address lint errors, test failures, build issues, and dependency problems while validating fixes through CI.

- Review feedback implementation: When reviewers leave comments with clear instructions, the AI applies the requested changes, pushes new commits, and explains what changed in plain language.

- Configurable trust model: Teams can begin with suggestion-only modes that require one-click approval, then move toward auto-commit modes with rollback options as confidence grows.

1. Eliminate CI Failures with Autonomous Troubleshooting and Fixes

Strategy: Treat CI Failures as Automatable Work

Engineering leaders can remove a major productivity drain by giving CI failures to an autonomous fix engine instead of individual developers. The goal is a workflow where most red builds become green without manual intervention.

Impact of Failing CI on Teams

Environment inconsistencies, misconfigurations, and dependency issues remain leading causes of broken pipelines. Every time this happens, developers must pause their current work, diagnose the issue, and rerun jobs. These interruptions increase lead time, delay releases, and inflate CI costs through repeated runs.

How an Autonomous Fix Engine Works

An autonomous fix engine reviews CI logs, maps errors to likely causes, and proposes targeted code or configuration changes. It updates the pull request branch and re-triggers checks. When the pipeline passes, the developer receives a ready-to-merge change instead of a problem to solve.
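The first step described above, mapping log errors to likely causes, can be sketched in a few lines of Python. This is only an illustration: the `FAILURE_PATTERNS` table, the category names, and the `classify_log` helper are assumptions made for this example, not any tool's actual implementation, and real CI logs vary widely by toolchain.

```python
import re
from dataclasses import dataclass

# Hypothetical patterns for illustration; a real engine would need far
# broader coverage and toolchain-specific parsing.
FAILURE_PATTERNS = [
    ("lint", re.compile(r"\b(eslint|flake8|lint(ing)? error)\b", re.IGNORECASE)),
    ("dependency", re.compile(
        r"(ModuleNotFoundError|Could not resolve dependency|No matching distribution)",
        re.IGNORECASE)),
    ("test", re.compile(r"(AssertionError|\bFAILED\b|\d+ failed)", re.IGNORECASE)),
    ("build", re.compile(r"(compilation failed|error TS\d+|undefined reference)",
                         re.IGNORECASE)),
]


@dataclass
class Finding:
    category: str      # likely cause, e.g. "lint" or "dependency"
    line_number: int   # 1-based position in the log
    line: str          # the matching log line, stripped of whitespace


def classify_log(log_text: str) -> list[Finding]:
    """Return one Finding per log line that matches a known failure pattern."""
    findings = []
    for number, line in enumerate(log_text.splitlines(), start=1):
        for category, pattern in FAILURE_PATTERNS:
            if pattern.search(line):
                findings.append(Finding(category, number, line.strip()))
                break  # first matching category wins for this line
    return findings
```

A classifier like this would only narrow down where to look. Generating and validating the actual fix is the harder part and is out of scope for the sketch.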

Tactical Implementation

- Start in approval mode, where the AI proposes fixes as pull request comments or draft commits.

- Define clear rules for what the AI may fix automatically, such as tests, linters, or documentation updates.

- Expand to auto-commit for low-risk changes once the team has reviewed several successful fixes.
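One way to picture these rollout rules is as a small policy function. The sketch below is an assumption-laden illustration: `TrustMode`, `LOW_RISK_CATEGORIES`, and `decide_action` are hypothetical names chosen for this example, and the low-risk set simply mirrors the examples in the list above.

```python
from enum import Enum


class TrustMode(Enum):
    SUGGEST_ONLY = "suggest_only"                  # every fix needs human approval
    AUTO_COMMIT_LOW_RISK = "auto_commit_low_risk"  # low-risk fixes are committed


# Hypothetical allow-list; each team would define its own.
LOW_RISK_CATEGORIES = {"test", "lint", "docs"}


def decide_action(mode: TrustMode, category: str) -> str:
    """Return 'auto_commit' or 'propose' for a candidate fix."""
    if mode is TrustMode.AUTO_COMMIT_LOW_RISK and category in LOW_RISK_CATEGORIES:
        return "auto_commit"
    # Anything outside the allow-list, or any fix in suggestion-only mode,
    # is surfaced for a human to approve.
    return "propose"
```

Defaulting to `"propose"` keeps the policy conservative: an unknown category never gets committed automatically.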

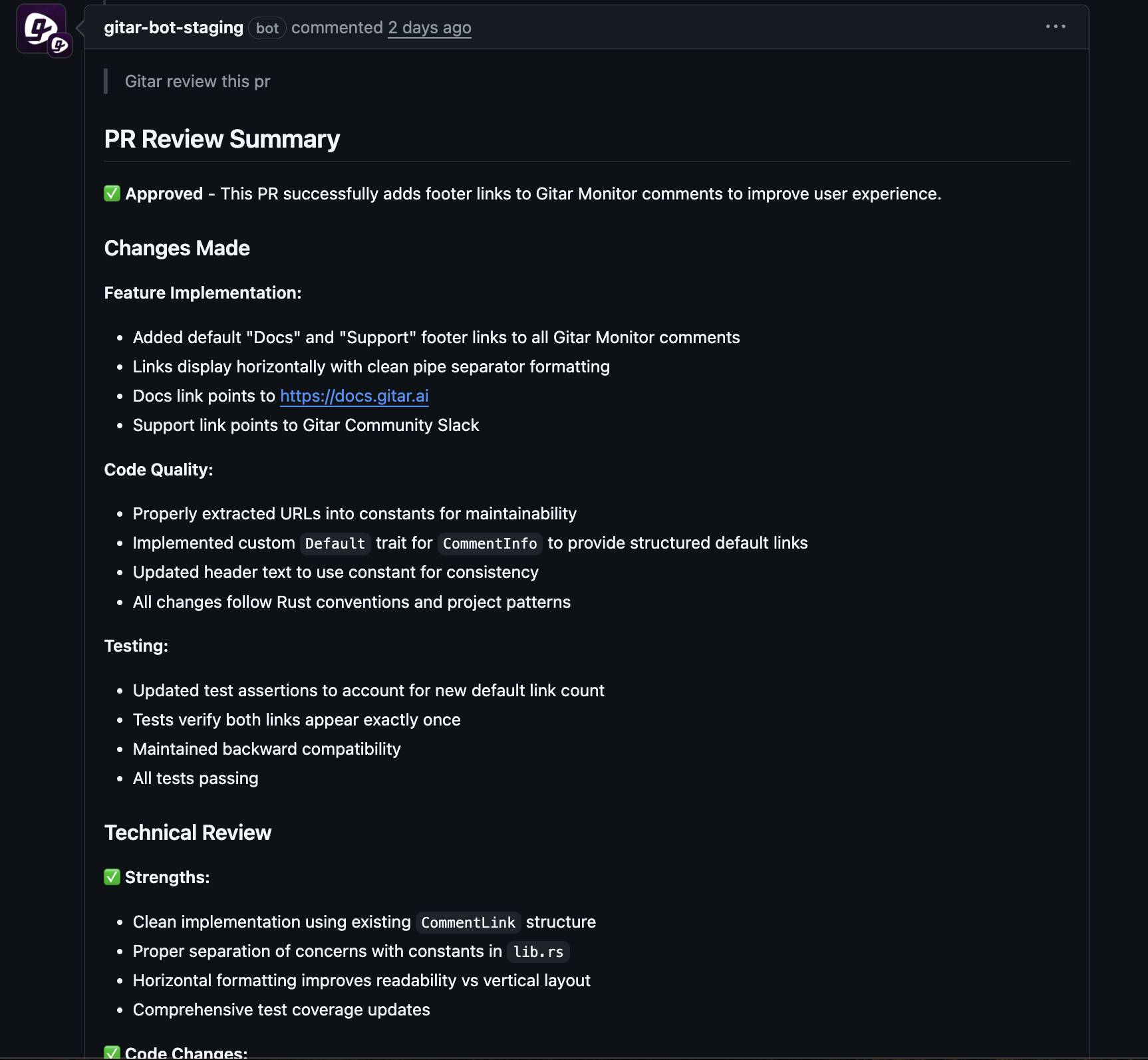

2. Accelerate Code Review Cycles with AI-Driven Feedback Implementation

Strategy: Turn Review Comments into Direct Actions

Code reviewers often spend time describing simple edits that developers must then implement and re-validate. AI that can apply feedback closes this loop more quickly, especially for distributed teams.

Code Review as a Bottleneck

Traditional reviews include multiple handoffs. Reviewers request changes, authors adjust the code, CI runs again, and reviewers re-check the updates. This cycle slows merges and encourages larger pull requests because developers try to amortize the review overhead.

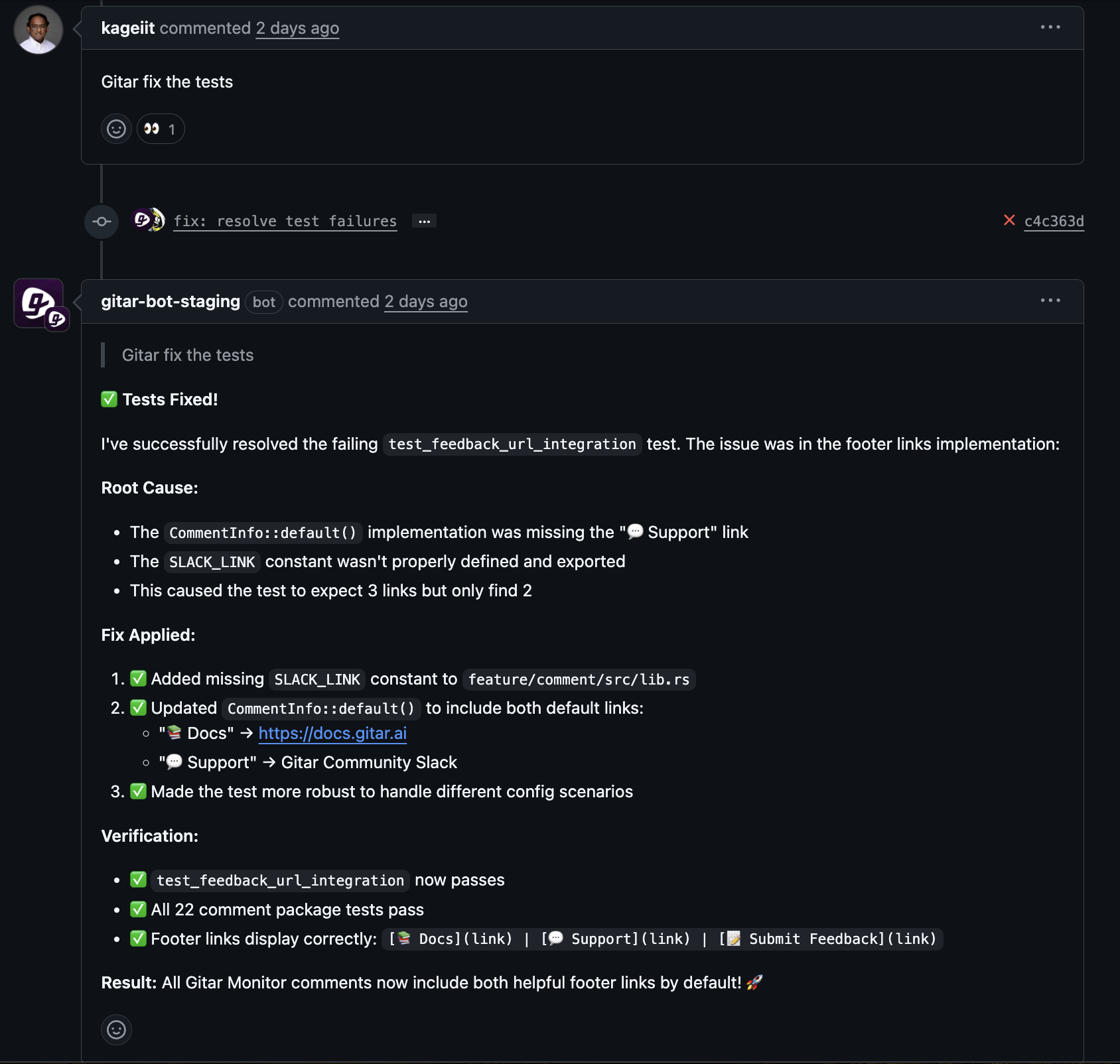

Actionable AI Review in Practice

Autonomous AI can respond to comments such as “rename this variable,” “extract this into a helper,” or “remove this Slack link” by editing the branch and explaining the change. Reviewers keep control of intent while the AI handles the mechanics.

Tactical Implementation

- Add simple AI tags to review workflows, for example comments that begin with the tool name.

- Encourage reviewers to leave precise, actionable requests the AI can apply safely.

- Use this pattern for repetitive edits first, then extend to more complex refactors once trust is established.
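The tagging convention in the first bullet could be detected with a helper along these lines. The `@fixbot` prefix and the `extract_instruction` function are hypothetical, chosen only for illustration. The actual trigger syntax depends on the tool a team adopts.

```python
from typing import Optional

BOT_PREFIX = "@fixbot"  # hypothetical tag; substitute your tool's real trigger


def extract_instruction(comment_body: str) -> Optional[str]:
    """Return the instruction text if the comment is addressed to the bot."""
    stripped = comment_body.strip()
    if not stripped.lower().startswith(BOT_PREFIX):
        return None
    rest = stripped[len(BOT_PREFIX):]
    # Reject longer names that merely share the prefix, e.g. "@fixbotter".
    if rest and not (rest[0].isspace() or rest[0] in ":,"):
        return None
    instruction = rest.strip(" \t\n:,")
    return instruction or None
```

For example, `extract_instruction("@fixbot rename this variable to user_count")` returns the instruction text, while an ordinary comment such as `"Looks good to me"` returns `None` and is left for the author.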

3. Optimize CI/CD Pipelines for Speed and Reliability

Strategy: Shorten Feedback Loops and Reduce Flakiness

Fast, stable pipelines support more frequent deploys and increase developer confidence. Pipeline design and autonomous AI each play a role in reaching this state.

Why Pipeline Speed and Stability Matter

Teams with pipelines under 10 minutes deploy twice as often as those with slower feedback. At the same time, flaky tests consume significant time and CI budget by forcing reruns and noisy investigations.

Role of Autonomous AI in Pipeline Health

Autonomous AI does not replace CI orchestration but reduces failed and flaky runs by fixing common issues before developers intervene. Fewer retries mean lower compute costs and a more predictable path to green builds.

Tactical Implementation

- Integrate AI with existing CI systems such as GitHub Actions, GitLab CI, or CircleCI.

- Log every AI change and tie it to specific CI runs for auditability.

- Combine AI-driven fixes with standard pipeline optimizations like test parallelization and caching.
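For the auditability point, a minimal sketch of an audit record is shown below. The field names and the JSON-lines output are assumptions for this example, not a required schema. The idea is simply that every AI-authored commit carries the identifier of the CI run that triggered it.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class AiChangeRecord:
    ci_run_id: str     # identifier of the failing CI run that triggered the fix
    commit_sha: str    # commit the AI pushed in response
    category: str      # e.g. "lint", "test", "dependency"
    summary: str       # plain-language explanation of the change
    recorded_at: str   # ISO 8601 timestamp in UTC


def make_record(ci_run_id: str, commit_sha: str, category: str, summary: str,
                now: Optional[datetime] = None) -> AiChangeRecord:
    """Build an audit record; `now` is injectable so the function is testable."""
    timestamp = (now or datetime.now(timezone.utc)).isoformat()
    return AiChangeRecord(ci_run_id, commit_sha, category, summary, timestamp)


def to_json_line(record: AiChangeRecord) -> str:
    """Serialize one record as a single JSON line for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)
```

Appending one line per change to a log file or log pipeline keeps the trail easy to query later, for instance when reviewing AI activity in a retrospective.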

4. Enhance Developer Flow and Reduce Context Switching

Strategy: Protect Focus Time with Background Automation

High-performing teams protect deep work. CI and review automation support this goal by handling repetitive tasks while developers stay focused on design and feature development.

The Context Switching Tax on Engineers

Frequent alerts about failing jobs or minor review comments pull engineers out of flow. Returning to a complex problem after debugging or small edits requires re-loading mental state, which slows progress and increases cognitive fatigue.

How Autonomous AI Protects Flow

Autonomous AI runs in the background, watching for failures and actionable comments. It attempts safe fixes first, reports outcomes in familiar channels, and only escalates when it cannot resolve an issue. Developers receive a summary instead of a fire to put out.

Tactical Implementation

- Configure notifications so developers see AI updates in existing tools without intrusive alerts.

- Define clear escalation rules for changes that always require human review.

- Review AI activity in retrospectives to refine policies and build confidence.
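Escalation rules like those in the second bullet are often easiest to express as protected path patterns. The sketch below assumes a hypothetical `PROTECTED_PATTERNS` list and a `requires_human_review` helper. The specific globs are examples only, and each team would choose its own.

```python
from fnmatch import fnmatch

# Hypothetical examples of areas that always need a human reviewer.
PROTECTED_PATTERNS = [
    "infra/*",
    "migrations/*",
    ".github/workflows/*",
    "*.sql",
]


def requires_human_review(changed_files: list[str]) -> bool:
    """Return True if any changed file matches a protected pattern."""
    return any(
        fnmatch(path, pattern)
        for path in changed_files
        for pattern in PROTECTED_PATTERNS
    )
```

Note that `fnmatch` treats `*` as matching any characters, including `/`, so `infra/*` also covers nested paths such as `infra/prod/main.tf`.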

5. Build a Culture of Trust and Continuous Improvement with Intelligent Automation

Strategy: Introduce Automation Gradually and Transparently

Trust is essential when any system can change code. Successful teams adopt automation with clear controls, visibility, and measurable results.

Managing Trust Concerns Around AI

Teams often worry that automated fixes might introduce subtle bugs or style drift. A configurable trust model addresses this by allowing suggestion-only modes, scoped permissions, and easy rollback. Clear explanations in pull request comments help reviewers understand why each change was made.

Tactical Implementation

- Roll out AI in three phases: conservative suggestions, supervised auto-commits, and then broader autonomous operation for low-risk areas.

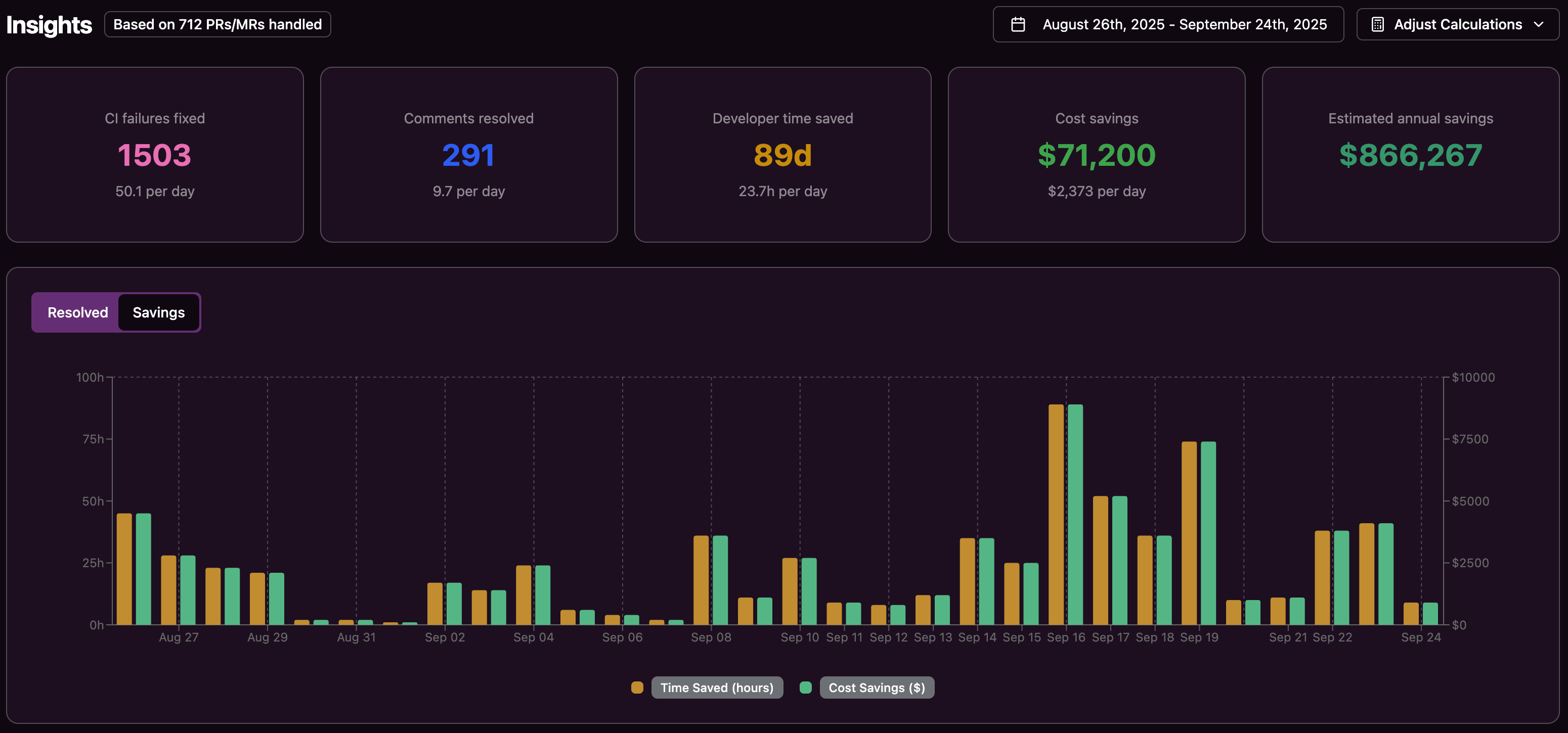

- Track metrics such as CI failures resolved, review comments addressed, and developer time saved.

- Share these metrics with stakeholders to demonstrate ROI and guide further investment.

Autonomous AI vs. Other Version Control Productivity Tools

| Feature or Tool | Manual Fixes | AI Suggestion Engines | Autonomous AI |
| --- | --- | --- | --- |
| CI failure resolution | Manual debug and fixes, full context switch | Highlights issues, requires manual edits | Applies fixes and validates against CI |
| Code review feedback | Author implements all requested changes | Suggests edits, still needs manual work | Implements reviewer instructions directly |
| Developer interruption | High, frequent task switching | Moderate, suggestions need attention | Low, runs in background and summarizes |
| Cost efficiency | High developer time and CI spend | Reduces analysis time, not execution time | Reduces both developer effort and CI retries |

Frequently Asked Questions (FAQ)

How is autonomous AI different from AI reviewers like CodeRabbit for version control productivity?

Many AI reviewers act as suggestion engines that point out problems but leave implementation to developers. Autonomous AI both identifies and applies fixes, then validates them through the full CI workflow so builds return to green with less manual effort.

How can teams reduce risk when giving AI access to their codebase?

Teams can start with a conservative mode where the AI only proposes changes. Every change requires a human approval step, and all commits remain traceable. Over time, teams can enable auto-commit for specific file types or repositories while keeping rollback options in place.

What ROI can engineering teams expect from autonomous AI for version control productivity?

If a 20-developer team spends one hour per day on CI and review issues, that adds up to about 5,000 hours per year, or roughly one million dollars in loaded costs. Even if autonomous AI removes only half of this burden, teams can save around five hundred thousand dollars annually while improving developer satisfaction and delivery speed.
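The arithmetic behind these figures can be checked with a short calculation. Two inputs are assumptions not stated in the text: roughly 250 working days per year, and a loaded cost of about 200 dollars per developer hour, which is the rate implied by 5,000 hours costing one million dollars.

```python
def annual_cost(developers: int, hours_per_day: float,
                working_days: int, hourly_rate: float) -> tuple[float, float]:
    """Return (hours lost per year, cost per year) for the given inputs."""
    hours = developers * hours_per_day * working_days
    return hours, hours * hourly_rate


# Assumed inputs: 250 working days and a 200-dollar loaded hourly rate.
hours, cost = annual_cost(developers=20, hours_per_day=1.0,
                          working_days=250, hourly_rate=200.0)
savings = cost * 0.5  # the "removes only half of this burden" scenario

print(f"{hours:,.0f} hours, ${cost:,.0f} per year, ${savings:,.0f} saved")
```

Changing the hourly rate or the share of work the AI removes shows how sensitive the estimate is to those assumptions.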

Conclusion: Move from Reactive Fixes to Autonomous Version Control in 2026

Failing pipelines, slow reviews, and constant context switching continue to limit engineering output. Manual fixes and basic suggestion tools help, but they still rely on developers to execute repetitive work.

Autonomous AI changes the balance by handling a large share of CI failures and review feedback directly in version control systems. This shift frees engineers to focus on complex problems, reduces lead time, and improves the reliability of releases.

Teams that adopt clear trust models, phased rollouts, and measurable goals can integrate autonomous AI into their existing workflows with confidence. Tools like Gitar make this practical in GitHub-based environments by applying fixes, summarizing changes, and tracking impact: Start using Gitar for autonomous CI and review support.