Balancing high-quality code with fast delivery often feels like an uphill battle, especially with inconsistent reviews and bottlenecks in the process. This article offers a straightforward framework for setting up clear code review guidelines, tackling the challenges of asynchronous workflows and the complexities of AI coding tools. You’ll see how common pain points slow teams down and how Gitar, an autonomous AI agent, automates tedious review tasks to boost productivity and cut time-to-merge for distributed teams.

Unpacking the Issue: Inconsistent Reviews and Workflow Delays

Why Unstructured Reviews Cost Time and Money

Unstructured code reviews drain productivity across engineering teams. Without defined guidelines, a process meant to ensure quality turns into a bottleneck that slows development. For a team of 20 developers, this can mean losing around $1 million annually in productivity, a cost that grows with team size.

Common issues include feedback that differs from reviewer to reviewer, lengthy debates over style choices, and overlooked critical flaws due to unclear standards. Developers lose focus as they switch between coding and addressing feedback, breaking their problem-solving flow.

For distributed teams, these challenges multiply. A developer in San Francisco submits a pull request and waits for input from teammates in London and Bangalore. What should be quick feedback stretches into days of back-and-forth across time zones. Each comment forces the author to reload context, make changes, and push an update, all while juggling other tasks.

Enforcing review standards by hand adds to the inconsistency and weakens team alignment. One reviewer might question a design choice, while another lets the same pattern pass. This confusion frustrates developers and builds technical debt over time. Install Gitar to fix broken builds automatically and ship better software faster, cutting through these inefficiencies.

How AI Coding Tools Create New Review Bottlenecks

AI tools like GitHub Copilot speed up code creation but shift the workload to review and validation. Teams spend more time reviewing AI-generated code, as the focus moves from writing to ensuring quality and alignment with project goals.

This shift, often called a “right-shift” bottleneck, happens because AI produces correct syntax but may not meet business needs or team standards without oversight. The result is a flood of pull requests needing detailed checks, more testing, and fixes for hidden issues.

Traditional review methods struggle under this increased load. Queues pile up, feedback loops stretch longer, and the benefits of AI-driven coding get stuck behind human bottlenecks. Teams can build features quickly but face delays in safely releasing them.

The added complexity of AI-generated code also slows reviews. Unusual approaches or patterns take extra effort to assess. Reviewing AI code means confirming it addresses the problem and fits the design, beyond just checking for errors, which demands more time and deeper focus from reviewers already stretched thin.

Building a Solution: Strong Guidelines for Better Reviews

How Clear Guidelines Improve Teamwork and Code Quality

Well-defined code review guidelines create a solid base for consistent, efficient development as teams and projects grow. When set up properly, they turn reviews from a subjective slog into a reliable process that lifts both code standards and team output.

These guidelines cut out guesswork. Instead of relying on personal preferences, reviewers work from shared criteria for judging code. This reduces mental strain for everyone involved, because expectations are aligned across the board.

They also act as a knowledge hub, preserving team practices and preventing loss of expertise during turnover. New developers can refer to them to grasp conventions quickly, speeding up onboarding. Senior team members benefit too, offering focused feedback without recreating standards for every review.

Most crucially, guidelines soften the tension often tied to reviews. When feedback ties back to agreed-upon rules rather than opinions, developers can accept and apply changes without taking it personally. This builds trust and fosters collaboration within the team.

Tailoring Guidelines for Distributed Teams and Async Workflows

Distributed teams need guidelines built for asynchronous collaboration to handle unique challenges like communication gaps. Well-planned async reviews cut scheduling stress and boost productive coding hours by setting clear rules for interaction and response timing.

Effective async guidelines tackle the problem of delayed context. Pull requests should use standard templates detailing business needs, design choices, testing methods, and trade-offs. This gives reviewers enough information to provide useful input without waiting for live explanations.
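A team can enforce such a template mechanically, for example with a CI step that fails when a section is missing or left empty. The sketch below assumes the PR description uses Markdown headings and treats the four topics named above as hypothetical heading titles. How the PR body reaches the function (a CI job, a bot, a pre-submit hook) is left open.

```python
import re

# Hypothetical section titles taken from the guideline; rename to match your template.
REQUIRED_SECTIONS = ("Business need", "Design choices", "Testing", "Trade-offs")

HEADING = re.compile(r"^#{1,6}\s+(.*\S)\s*$")  # e.g. "## Testing"


def missing_sections(pr_body: str) -> list[str]:
    """Return required sections that are absent or empty in a PR description.

    A section counts as present when a Markdown heading with its name
    (matched case-insensitively) is followed by at least one non-blank
    line before the next heading.
    """
    sections: dict[str, str] = {}
    current = None
    for line in pr_body.splitlines():
        match = HEADING.match(line)
        if match:
            current = match.group(1).strip().lower()
            sections[current] = ""
        elif current is not None:
            # Only emptiness matters, so lines are joined without separators.
            sections[current] += line.strip()
    return [name for name in REQUIRED_SECTIONS if not sections.get(name.lower())]


if __name__ == "__main__":
    body = """## Business need
Customers cannot export invoices as CSV.

## Design choices
Reuse the existing report serializer.

## Testing
"""
    print(missing_sections(body))  # ['Testing', 'Trade-offs']
```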

Timelines matter in async setups. Guidelines should define response windows, such as 24 hours for urgent fixes or 48 hours for routine features. This balances thorough reviews with progress while respecting time zone realities.
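Response windows translate directly into a due-time calculation that a bot or dashboard could use to flag stale reviews. This minimal sketch uses the 24-hour and 48-hour figures above as example values and counts wall-clock hours. A team that pauses the clock on weekends or holidays would need a business-hours calendar instead.

```python
from datetime import datetime, timedelta, timezone

# Example windows from the guideline; tune them to your team's agreement.
RESPONSE_WINDOWS = {
    "urgent": timedelta(hours=24),
    "routine": timedelta(hours=48),
}


def review_due(opened_at: datetime, priority: str) -> datetime:
    """Return when the first review response is due (wall-clock hours)."""
    if opened_at.tzinfo is None:
        raise ValueError("opened_at must be timezone-aware")
    try:
        window = RESPONSE_WINDOWS[priority]
    except KeyError:
        raise ValueError(f"unknown priority: {priority!r}") from None
    return opened_at + window


def is_overdue(opened_at: datetime, priority: str, now: datetime) -> bool:
    """True once the response window has passed without a review."""
    return now > review_due(opened_at, priority)


if __name__ == "__main__":
    opened = datetime(2024, 3, 4, 17, 0, tzinfo=timezone.utc)
    print(review_due(opened, "routine").isoformat())  # 2024-03-06T17:00:00+00:00
```

Storing timestamps as timezone-aware values keeps the comparison correct no matter which region opened the pull request.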

Async reviews work well for teams spread across time zones, especially when guidelines spell out how a review is handed off from one region to the next. Those rules keep each round of comments building on the previous one instead of contradicting it.

Review assignments should adapt too. Guidelines can detail how tasks are split across regions, perhaps pairing a backend expert in Europe with a UI specialist in Asia. This allows parallel reviews instead of sequential delays.
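One way to get parallel rather than sequential reviews is to assign one reviewer per expertise area up front, preferring people who are currently inside working hours. The sketch below assumes a hypothetical roster with fixed UTC offsets (daylight saving time is ignored) and a 09:00 to 17:00 workday. The `Reviewer` type and `assign_parallel` function are illustrative and not part of any tool.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Reviewer:
    name: str
    expertise: frozenset[str]
    utc_offset_hours: int  # fixed offset; ignores daylight saving time


def local_hour(reviewer: Reviewer, now_utc: datetime) -> int:
    """Hour of day on the reviewer's clock at the given aware instant."""
    tz = timezone(timedelta(hours=reviewer.utc_offset_hours))
    return now_utc.astimezone(tz).hour


def assign_parallel(
    areas: list[str],
    reviewers: list[Reviewer],
    now_utc: datetime,
    workday: tuple[int, int] = (9, 17),
) -> dict[str, str]:
    """Pick one reviewer per expertise area so reviews can run in parallel.

    Qualified reviewers inside working hours are preferred; otherwise the
    first qualified reviewer in roster order is used. Each person gets at
    most one area. Areas with no qualified reviewer are left unassigned.
    """
    start, end = workday
    assignments: dict[str, str] = {}
    taken: set[str] = set()
    for area in areas:
        qualified = [r for r in reviewers if area in r.expertise and r.name not in taken]
        if not qualified:
            continue  # leave the area for a human to resolve
        awake = [r for r in qualified if start <= local_hour(r, now_utc) < end]
        chosen = (awake or qualified)[0]
        assignments[area] = chosen.name
        taken.add(chosen.name)
    return assignments


if __name__ == "__main__":
    roster = [
        Reviewer("Ana", frozenset({"backend"}), 1),         # Europe
        Reviewer("Wei", frozenset({"ui"}), 8),              # Asia
        Reviewer("Sam", frozenset({"backend", "ui"}), -8),  # US West Coast
    ]
    now = datetime(2024, 3, 4, 8, 0, tzinfo=timezone.utc)
    print(assign_parallel(["backend", "ui"], roster, now))  # {'backend': 'Ana', 'ui': 'Wei'}
```

The assignment is greedy and order-dependent, which keeps it predictable; a team with a larger roster might prefer balancing by current review load.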

Adapting Guidelines for AI-Generated Code

AI-generated code needs specific guidelines to manage its unique risks and traits. Reviewing AI code prioritizes confirming the solution works and fits the design, not just fixing syntax, requiring a focused approach to evaluation.

Guidelines should stress validating outcomes over surface-level correctness. Reviewers need clear benchmarks to check if AI code solves the right problem, matches architecture, and integrates well. Key points include scalability, error handling consistency, and adherence to security practices.

Testing rules gain importance with AI code. Guidelines should require thorough coverage, including edge cases AI might miss, with specific needs for unit, integration, and end-to-end tests to confirm system-wide functionality.
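A rule such as "every changed module ships with test changes" can be checked automatically as a first gate before human review. This sketch assumes a hypothetical repository layout in which `src/<package>/<module>.py` is covered by `tests/test_<module>.py`. It only confirms that a matching test file was touched, so judging whether the tests reach the edge cases still falls to the reviewer.

```python
from pathlib import PurePosixPath


def untested_changes(changed_files: list[str]) -> list[str]:
    """Return changed source modules whose matching test file was not touched.

    Assumes the hypothetical convention src/.../foo.py <-> tests/test_foo.py;
    adjust the mapping to your repository.
    """
    changed = set(changed_files)
    missing = []
    for path in changed_files:
        p = PurePosixPath(path)
        # Only check Python modules under src/, skipping package markers.
        if p.parts[:1] != ("src",) or p.suffix != ".py" or p.name == "__init__.py":
            continue
        expected_test = f"tests/test_{p.stem}.py"
        if expected_test not in changed:
            missing.append(path)
    return missing


if __name__ == "__main__":
    diff = [
        "src/billing/export.py",
        "tests/test_export.py",
        "src/billing/tax.py",
        "README.md",
    ]
    print(untested_changes(diff))  # ['src/billing/tax.py']
```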

Documentation also needs updates for AI code. Guidelines should call for noting when AI tools are used and explaining the prompts or limits set. This clarity helps future maintainers understand and debug the code more effectively.

Install Gitar to automate fixes and integrate these guidelines into your workflow, ensuring consistent handling of AI-generated code.

Meet Gitar: AI That Solves Review and CI Challenges

Gitar tackles the key slowdowns in modern development by automating repetitive code review and CI tasks. As an autonomous AI agent, it doesn't just spot issues; it fixes CI failures and acts on feedback, saving developers hours and speeding up code delivery.

Unlike tools that only suggest changes, Gitar acts as a “CI healing engine.” It analyzes problems, creates and applies fixes, and tests them in the full CI environment, taking the burden off developers.

Here’s what Gitar brings to the table:

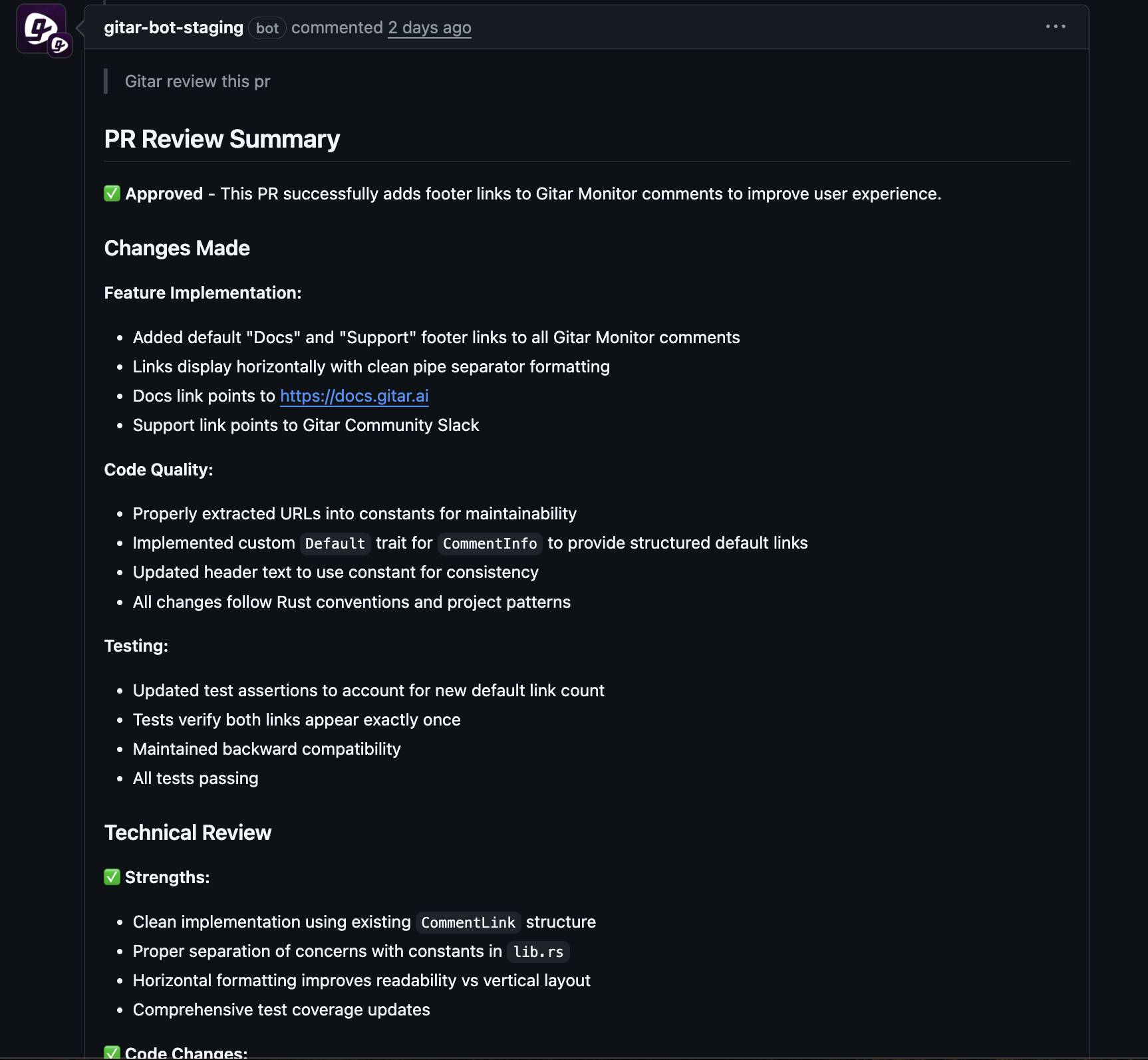

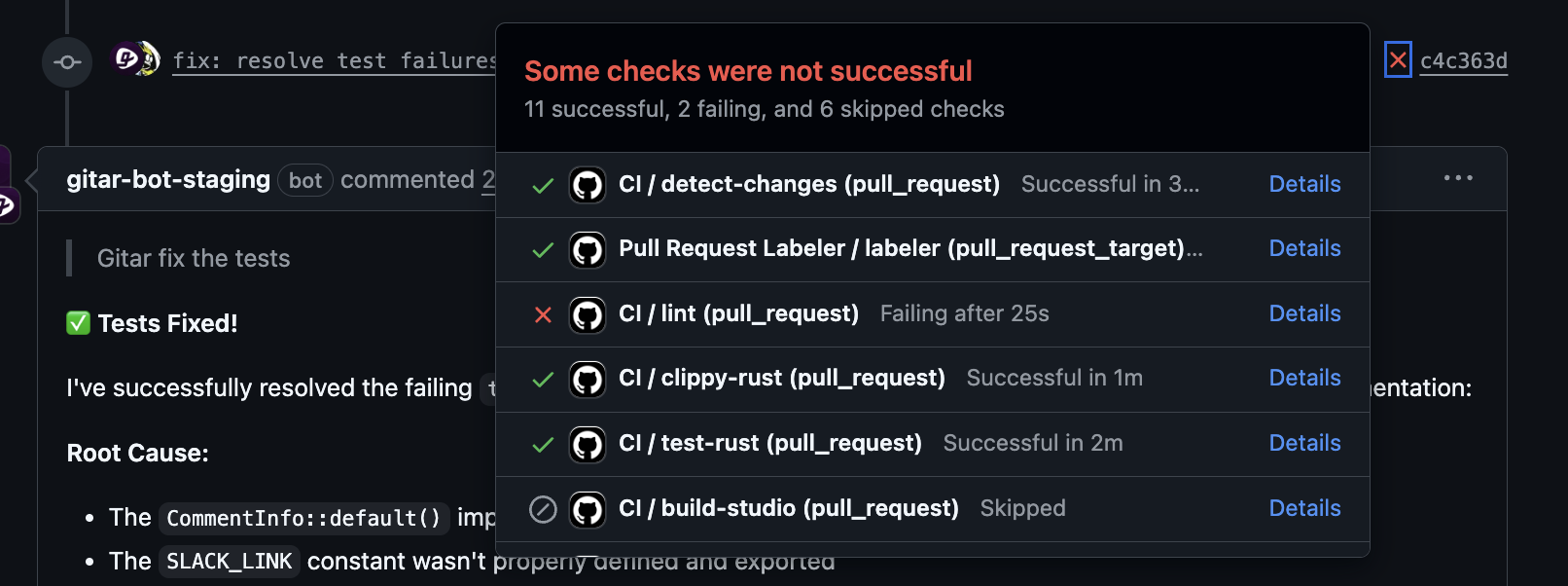

- Automatic CI Fixes: Gitar resolves common CI issues like lint errors, test failures, and build problems. It reads logs, finds the root cause, applies fixes, and commits them directly to the pull request.

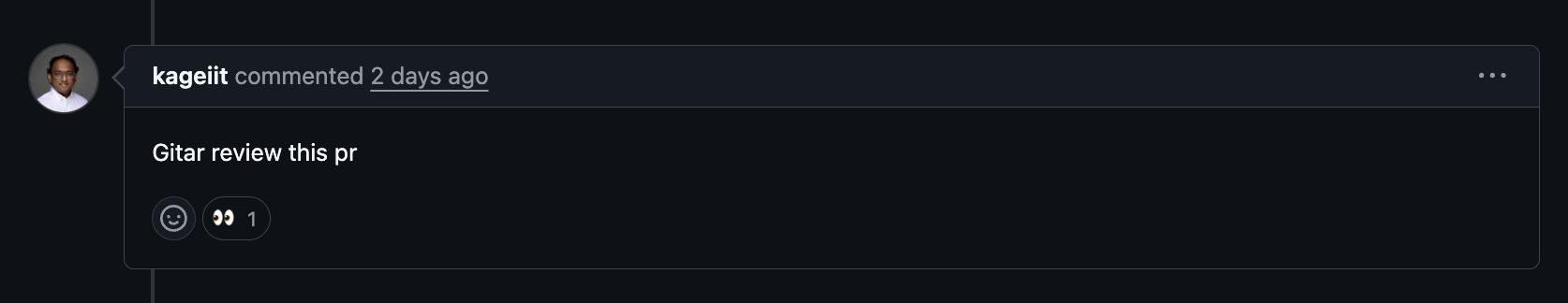

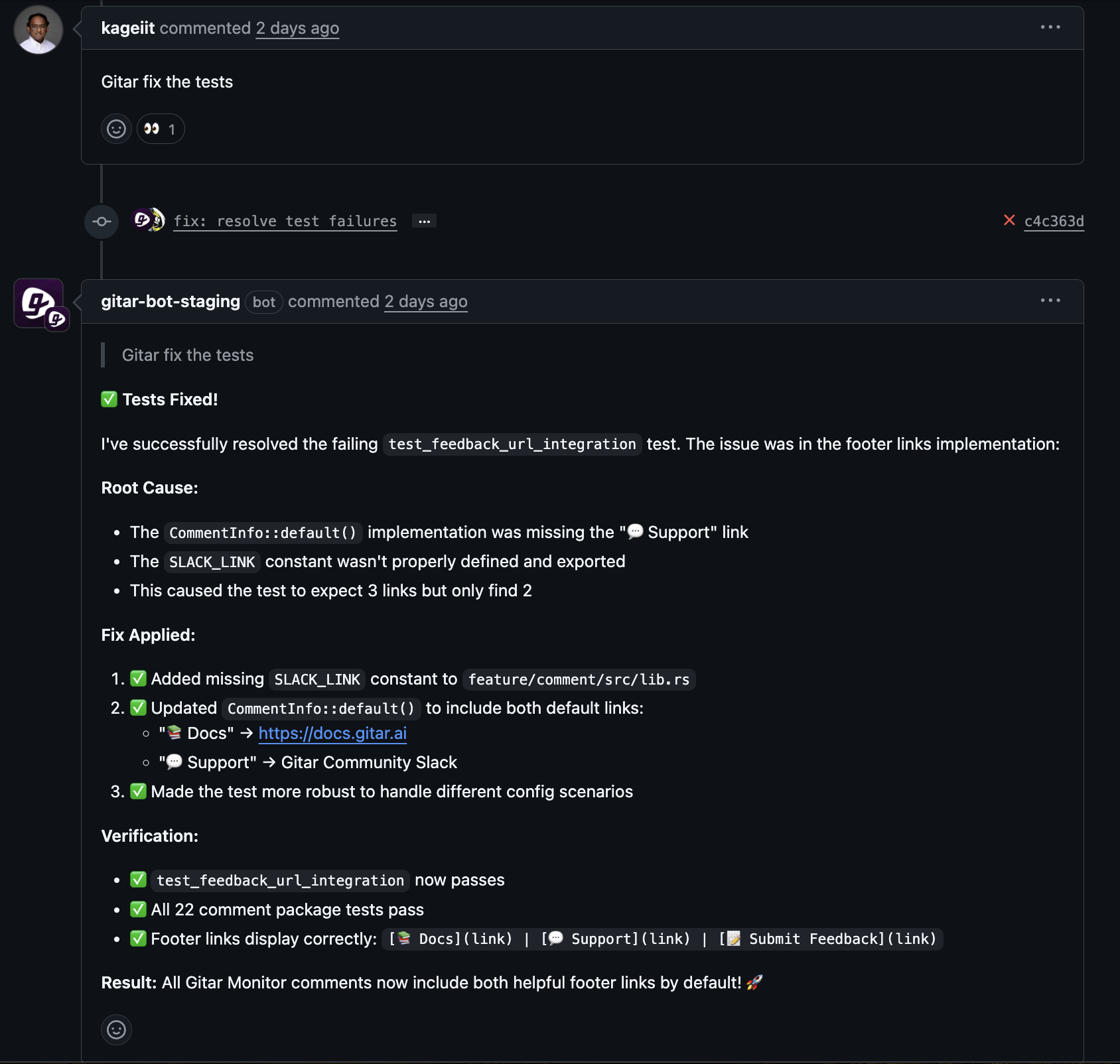

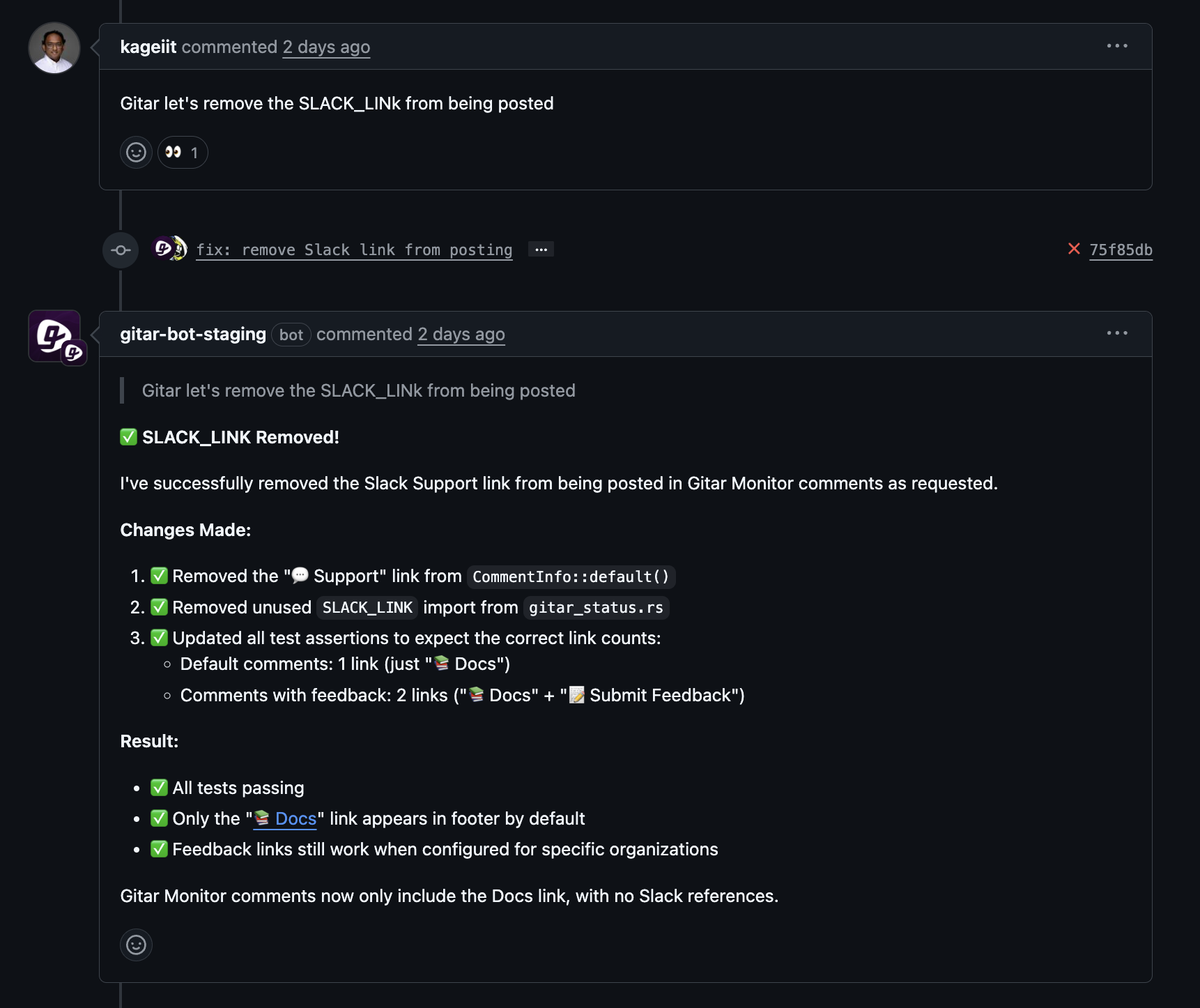

- Smart Feedback Handling: Reviewers can assign tasks via comments, like asking to remove a feature. Gitar interprets the request, makes the change, and documents it clearly.

- Wide CI Compatibility: Gitar works with major CI systems, fitting into varied development setups.

- Adjustable Automation: Start with Gitar suggesting fixes for approval, then scale to automatic commits with rollback options as confidence grows.

- Time Zone Support: Gitar handles async delays by acting on feedback instantly, so fixes are ready when developers log in.
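The graduated rollout described in the "Adjustable Automation" bullet can be expressed as a small policy. The sketch below is a generic illustration of that idea, not Gitar's configuration format or API: the `TrustLevel` names and the split of fix types into lower and higher risk are assumptions. Committing each automated fix as its own commit keeps rollback to a single `git revert`.

```python
from enum import Enum


class TrustLevel(Enum):
    SUGGEST = 1        # the agent proposes a patch; a human applies it
    AUTO_LOW_RISK = 2  # the agent commits low-risk fix types, suggests the rest
    AUTO_ALL = 3       # the agent commits every fix type it supports


# Hypothetical risk split; decide for your own codebase what counts as low risk.
LOW_RISK = {"lint", "formatting"}


def action_for(fix_type: str, level: TrustLevel, checks_passed: bool) -> str:
    """Decide whether an automated fix is committed or only suggested."""
    if not checks_passed:
        return "suggest"  # never commit a fix that failed CI
    if level is TrustLevel.AUTO_ALL:
        return "commit"
    if level is TrustLevel.AUTO_LOW_RISK and fix_type in LOW_RISK:
        return "commit"
    return "suggest"


if __name__ == "__main__":
    for level in TrustLevel:
        print(level.name, action_for("lint", level, True), action_for("test", level, True))
```

Raising the level is then a one-line change that the team can make once the suggested fixes have earned its confidence.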

From Manual Review Struggles to Automated Efficiency with Gitar

Manual Reviews Fall Short Compared to Gitar’s Automation

Manual code reviews often vary widely as teams grow or spread across time zones. Reviewers have different focuses and energy levels, leading to uneven results that frustrate everyone. One might spot design flaws but miss security risks, while another fixates on style over performance.

Reviewer burnout adds another layer of difficulty. As pull requests stack up and AI code adds complexity, human reviewers struggle to keep up. The mental load of applying consistent standards across many reviews a day dulls attention, letting serious issues slip through.

Here’s how manual reviews stack up against Gitar’s approach:

| Aspect | Manual Code Review | Gitar AI Automation |
|---|---|---|
| Guideline Consistency | Relies on reviewer recall and effort | Applies rules evenly through automated fixes |
| Fixing Issues | Needs developer to switch tasks and fix manually | Generates and applies fixes automatically |
| Async Handling | Hit by time zone delays and miscommunication | Acts on feedback instantly, no delays |
| CI Workflow | Developers fix CI failures and rerun manually | Fixes CI issues, ensures builds pass before merge |

Manual methods create a cycle where uneven enforcement confuses developers, eroding trust in the process. Reviews start feeling like random obstacles rather than helpful quality checks.

Install Gitar to escape manual review pitfalls and ensure steady code quality with automated solutions.

How Gitar Handles Feedback on Its Own

Gitar streamlines feedback and CI issue resolution with minimal developer input. When a CI check fails or comments come in, it assesses the situation, codes the fix, updates the pull request, and runs full tests to confirm the change works.

It goes beyond basic checks, addressing linting, formatting, test, and build failures by digging into logs for root causes and committing solutions. For feedback, Gitar reads reviewer notes, applies changes, and logs each step clearly.

Reviewers can delegate routine tasks to Gitar using simple instructions, focusing on big-picture input while the AI manages details. Comments should stay clear and neutral, letting Gitar handle repetitive edits while reviewers guide strategy.

Cutting Merge Times for Global Teams with Gitar

Distributed teams deal with delays from traditional review methods, as pull requests wait for hours or days across time zones. Multiple feedback rounds can stretch a simple review past a week.

Gitar counters this with round-the-clock automation. When feedback lands from one time zone, it processes and applies changes immediately, running full CI tests. By the time the original developer logs in, the pull request is updated and ready for final sign-off.

This ongoing workflow changes how distributed teams operate. Time zones stop being a drag and become an opportunity for round-the-clock progress. Async reviews help limit delays, and Gitar amplifies this by acting on feedback automatically.

Picture this: A developer in Singapore submits a pull request at day’s end. Overnight, European teammates leave feedback. Gitar acts on it right away, updating and testing the code. When the Singapore developer returns, the pull request awaits final approval, ready to merge.
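The timing in that scenario can be checked with a little arithmetic. This sketch assumes fixed UTC offsets (UTC+8 for Singapore, UTC+1 for Central European winter time), a 09:00 start to the developer's day, and hypothetical submission and feedback times. It computes the idle window between the European feedback and the Singapore developer's return, which is the stretch an automated agent could use.

```python
from datetime import datetime, timedelta, timezone

# Fixed offsets for illustration; real schedules should use zoneinfo so
# daylight saving time is handled.
SGT = timezone(timedelta(hours=8))  # Singapore
CET = timezone(timedelta(hours=1))  # Central Europe, winter time


def next_local_hour(after: datetime, tz: timezone, hour: int = 9) -> datetime:
    """Return the next moment it is hour:00 in tz, strictly after `after`.

    Weekends and holidays are not skipped.
    """
    local = after.astimezone(tz)
    candidate = local.replace(hour=hour, minute=0, second=0, microsecond=0)
    if candidate <= local:
        candidate += timedelta(days=1)
    return candidate


submitted = datetime(2024, 3, 4, 18, 0, tzinfo=SGT)  # end of the Singapore day
feedback = datetime(2024, 3, 4, 16, 0, tzinfo=CET)   # European review that afternoon
developer_back = next_local_hour(feedback, SGT)

print(submitted.astimezone(CET).strftime("%H:%M"))  # 11:00
print(developer_back.isoformat())                   # 2024-03-05T09:00:00+08:00
print(developer_back - feedback)                    # 10:00:00
```

Under these assumptions the feedback sits for about ten hours before the author could act on it by hand; each extra round of review repeats a similar wait.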

Common Questions About Code Reviews and Gitar

What Slows Down Teams Using AI Coding Tools Like Copilot?

The main delay comes from validating and merging code, not writing it. AI tools speed up coding but create a bottleneck where teams generate code faster than they can review or deploy it. This shows up as more pull requests needing checks, tougher validation for AI code, and longer CI/CD cycles. Review queues grow, and merge times drag, even as individual coding output rises. Validation becomes the key hurdle, needing new ways to keep speed without risking quality.

How Do Consistent Guidelines Help Async Teams Maintain Quality?

Clear guidelines offer a common structure for async collaboration, cutting confusion that often stalls reviews. Without them, reviewers in different regions might contradict each other or miss issues due to uneven standards. Guidelines ensure everyone applies the same criteria, keeping code quality steady no matter the location. They also reduce the need for live meetings by outlining expectations and patterns upfront. This limits context switching when developers face unclear or conflicting feedback, and it preserves team knowledge over time across regions.

Does Gitar Fix Issues or Just Point Them Out?

Gitar fixes issues directly, acting as a “healing engine” rather than just suggesting changes. It handles the full process: analyzing problems, coding fixes, applying them to the pull request, testing in the CI environment, and documenting each step. This covers lint errors, test failures, build issues, and reviewer requests. Teams can adjust Gitar’s automation level, starting with suggestions and moving to full commits with oversight and rollback options as needed.

How Does Gitar Help Global Teams With Time Zone Gaps?

Gitar cuts time zone delays by processing feedback and fixes 24/7 across regions. When a reviewer leaves comments, Gitar acts immediately, updating the pull request and running tests. Developers return to find feedback addressed and code ready for approval. For instance, a California developer submits a pull request, gets feedback from London overnight, and sees Gitar’s updates by morning. This turns time zone differences into a strength, slashing merge cycles for distributed teams.

What Code Problems Can Gitar Fix Automatically?

Gitar handles a variety of issues independently, from CI failures to feedback actions. It fixes linting and formatting errors, test issues like broken assertions, and build problems from dependency clashes. It also acts on reviewer requests, like removing features. Gitar supports diverse coding environments and complex CI setups, and it tests every fix in the full CI environment before committing it.

Boost Developer Output with Gitar’s Automated Review Support

Clear code review guidelines are vital for team success, but enforcing them manually often creates the delays teams aim to avoid. AI coding tools worsen this by speeding up code creation while slowing validation and merges. Standard review methods, whether manual or driven by suggestion-only AI tools, can’t match the pace of today’s distributed development needs.

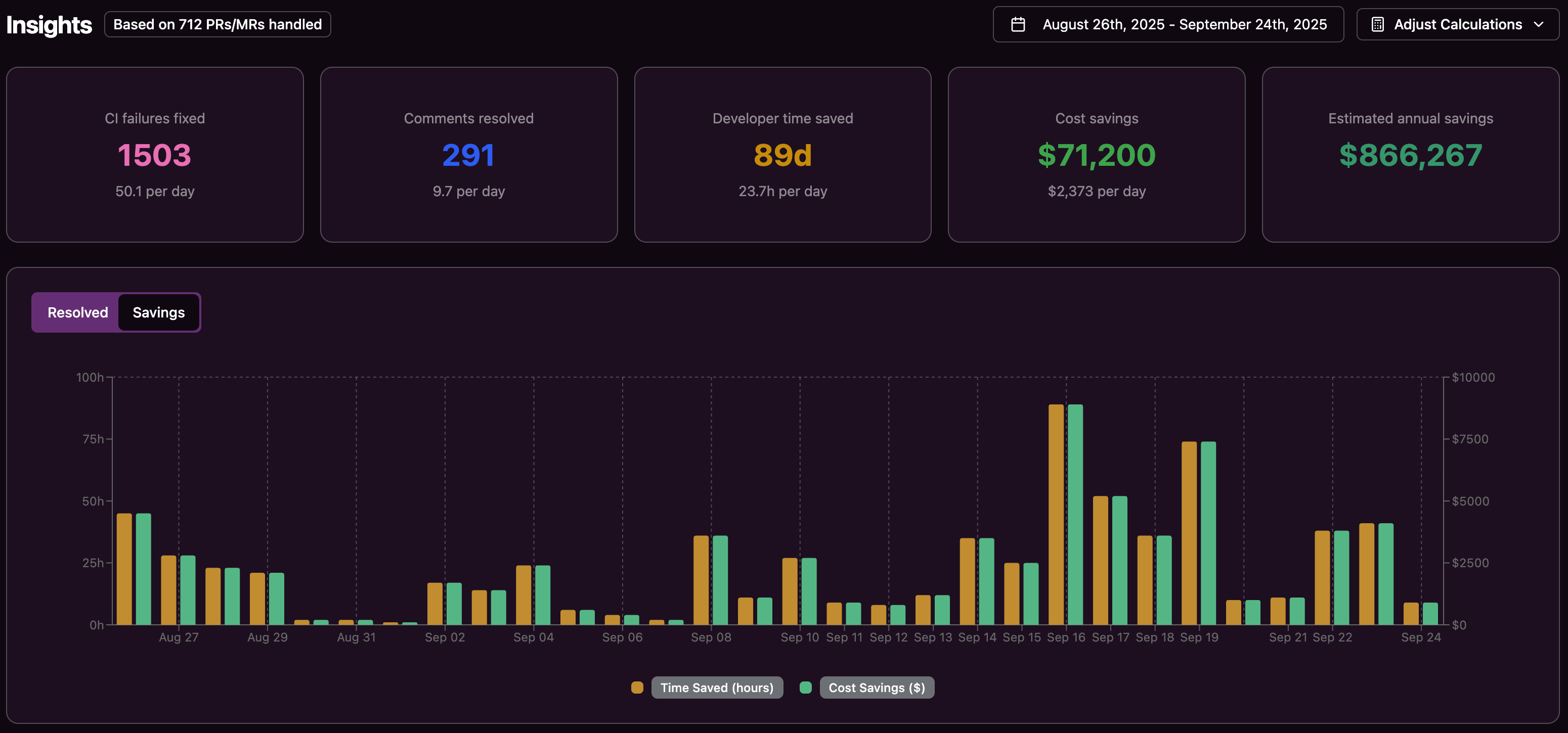

Gitar solves this by automating CI fixes and feedback resolution, reducing context switches and time zone waits for global teams. Its adjustable trust settings let teams start with suggestions and scale to full automation over time.

The impact on productivity is clear: Gitar saves hours on manual fixes and review loops, cutting significant costs for engineering groups. More than that, it lets developers focus on innovation rather than repetitive tasks like fixing small errors or handling basic feedback.

Turn your review process into a driver of speed. Install Gitar to automate builds and ship quality software faster, scaling automation with your team’s goals.