Key takeaways

- Test stability automation reduces CI noise, improves trust in test suites, and shortens feedback loops for developers.

- Flaky tests usually stem from concurrency, external dependencies, non-deterministic logic, and weak isolation in CI environments.

- A phased approach to test stability combines detection, deterministic architecture, automation, and continuous monitoring.

- Autonomous AI that can reproduce CI environments and apply validated fixes offers measurable ROI for engineering teams.

- Teams can use Gitar to automatically fix broken builds and reduce CI-related interruptions by installing it at https://gitar.ai/fix.

The critical challenge: flaky tests and CI failures in DevOps

Flaky tests erode confidence in CI pipelines and slow delivery. A flaky test passes on some runs and fails on others with no change to the code or environment, which makes results hard to trust and teaches teams to ignore failures.

The cost adds up quickly. Developers can spend up to 30% of their time handling CI problems, and unstable pipelines delay projects for most teams. For a 20-person engineering team, the resulting lost time, slower releases, and growing technical debt can approach $1 million in productivity each year.

Common root causes of test flakiness include:

- Concurrency issues such as race conditions, deadlocks, and shared state

- Dependence on external systems such as APIs, queues, or databases

- Non-deterministic logic involving time, randomness, or asynchronous behavior

- Unstable or shared test environments with poor isolation

These risks increase in parallel CI/CD pipelines, where timing and shared resources make failures harder to reproduce. Treating flaky tests as background noise or repeatedly re-running builds encourages teams to overlook real defects and increases context switching. Developers leave their current work, dig through logs, rerun tests, and manually patch issues, which disrupts flow and reduces focus.

Install Gitar to start automatically fixing broken builds and reduce time spent on CI

A practical framework for test stability automation

Phase 1: Detect and classify flaky tests

Reliable automation starts with clear visibility into test behavior. Teams need monitoring that surfaces patterns rather than isolated failures.

Effective detection typically includes:

- Tracking pass and fail rates for each test over time

- Correlating failures with environment, branch, or configuration changes

- Tagging and quarantining tests that show intermittent behavior

The goal is to identify which tests are unreliable, how often they fail, and under what conditions. This context supports more targeted fixes instead of ad hoc workarounds.
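As a rough illustration of this detection step, the Python sketch below flags a test as flaky when it both passed and failed on the same commit, then breaks its failure rate down by environment. The `RunRecord` schema, the `min_runs` threshold, and the sample data are hypothetical; real CI systems expose run history in their own formats, so treat this as a starting point rather than a finished detector.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class RunRecord:
    """One execution of one test in CI (hypothetical schema)."""
    test_id: str
    commit: str
    environment: str
    passed: bool


def find_flaky_tests(runs, min_runs=3):
    """Return {test_id: failure_rate} for tests that both passed and failed on one commit."""
    outcomes = defaultdict(set)            # (test_id, commit) -> set of observed outcomes
    totals = defaultdict(lambda: [0, 0])   # test_id -> [failures, runs]
    for run in runs:
        outcomes[(run.test_id, run.commit)].add(run.passed)
        totals[run.test_id][1] += 1
        if not run.passed:
            totals[run.test_id][0] += 1

    flaky = {}
    for (test_id, _commit), seen in outcomes.items():
        failures, count = totals[test_id]
        # Mixed results on identical code point to flakiness rather than a regression.
        if seen == {True, False} and count >= min_runs:
            flaky[test_id] = failures / count
    return flaky


def failure_rate_by_environment(runs, test_id):
    """Break one test's failure rate down by CI environment to spot correlations."""
    totals = defaultdict(lambda: [0, 0])   # environment -> [failures, runs]
    for run in runs:
        if run.test_id == test_id:
            totals[run.environment][1] += 1
            if not run.passed:
                totals[run.environment][0] += 1
    return {env: failures / count for env, (failures, count) in totals.items()}


SAMPLE_RUNS = [
    RunRecord("test_checkout", "abc123", "linux", True),
    RunRecord("test_checkout", "abc123", "linux", False),
    RunRecord("test_checkout", "abc123", "macos", True),
    RunRecord("test_login", "abc123", "linux", True),
    RunRecord("test_login", "abc123", "macos", True),
    RunRecord("test_login", "abc123", "macos", True),
]

if __name__ == "__main__":
    quarantine = find_flaky_tests(SAMPLE_RUNS)
    print("quarantine candidates:", quarantine)
    for test_id in quarantine:
        print(test_id, failure_rate_by_environment(SAMPLE_RUNS, test_id))
```

Tests returned by `find_flaky_tests` are natural candidates for a quarantine tag, and the per-environment breakdown shows whether a failure clusters on one runner or configuration.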

Phase 2: Design for deterministic, isolated tests

After locating flaky tests, teams can harden their test architecture. A deterministic test produces the same result every time it runs against the same code, which means controlling every input it depends on, including time, randomness, shared state, and external services.

Key practices include:

- Isolating state with database sandboxing and clean test data setup and teardown

- Replacing external dependencies with mocks or stubs

- Removing shared global state and hidden coupling across tests

- Using explicit wait conditions for asynchronous operations instead of arbitrary sleep calls

- Abstracting time and randomness behind test-friendly interfaces
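The explicit-wait and time/randomness practices above can be sketched as follows. This is a minimal example under assumed names (`wait_until`, `FakeClock`, and `backoff_delays` are illustrative, not from any particular library): production code passes in the real clock and random source, while tests pass in a fake clock and a seeded `random.Random`, so the same inputs always give the same result.

```python
import random
import time
from typing import Callable


def wait_until(condition: Callable[[], bool], timeout: float = 5.0, interval: float = 0.05,
               clock: Callable[[], float] = time.monotonic,
               sleep: Callable[[float], None] = time.sleep) -> None:
    """Poll `condition` until it is true; raise TimeoutError once `timeout` elapses."""
    deadline = clock() + timeout
    while not condition():
        if clock() >= deadline:
            raise TimeoutError(f"condition not met within {timeout}s")
        sleep(interval)


class FakeClock:
    """Test double: time advances only when sleep() is called, so tests never really wait."""

    def __init__(self, start: float = 0.0) -> None:
        self.now = start

    def __call__(self) -> float:
        return self.now

    def sleep(self, seconds: float) -> None:
        self.now += seconds


def backoff_delays(attempts: int, base: float, rng: random.Random) -> list[float]:
    """Jittered exponential backoff; the caller injects the random source."""
    return [base * (2 ** i) * rng.uniform(0.5, 1.5) for i in range(attempts)]
```

Compared with a fixed `time.sleep(2)`, polling returns as soon as the condition holds and fails with a clear timeout instead of silently racing the system under test.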

Clear testing guidelines and reusable patterns help keep new tests stable as the codebase grows.
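One reusable pattern for state isolation and dependency stubbing is a shared base test case that builds a fresh in-memory database and a mocked client for every test. `PaymentClient`, `place_order`, and the `orders` table below are hypothetical stand-ins; the sketch uses only the standard library's `unittest`, `unittest.mock`, and `sqlite3`.

```python
import sqlite3
import unittest
from unittest import mock


class PaymentClient:
    """Hypothetical wrapper around an external payments API."""

    def charge(self, order_id: str, cents: int) -> str:
        raise RuntimeError("tests must not call the real payments API")


def place_order(conn: sqlite3.Connection, client: PaymentClient, order_id: str, cents: int) -> str:
    """Charge the order, then record the resulting status."""
    status = client.charge(order_id, cents)
    conn.execute("INSERT INTO orders (id, status) VALUES (?, ?)", (order_id, status))
    conn.commit()
    return status


class IsolatedTestCase(unittest.TestCase):
    """Reusable base: every test gets its own database and its own stubbed client."""

    def setUp(self) -> None:
        self.conn = sqlite3.connect(":memory:")
        self.conn.execute("CREATE TABLE orders (id TEXT PRIMARY KEY, status TEXT)")
        # autospec keeps the stub's signature in sync with the real class.
        self.client = mock.create_autospec(PaymentClient, instance=True)
        self.client.charge.return_value = "approved"

    def tearDown(self) -> None:
        self.conn.close()


class PlaceOrderTest(IsolatedTestCase):
    def test_records_status(self) -> None:
        status = place_order(self.conn, self.client, "o-1", 500)
        self.assertEqual(status, "approved")
        self.client.charge.assert_called_once_with("o-1", 500)
        rows = self.conn.execute("SELECT id, status FROM orders").fetchall()
        self.assertEqual(rows, [("o-1", "approved")])

    def test_starts_with_empty_table(self) -> None:
        count = self.conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
        self.assertEqual(count, 0)
```

Run it with `python -m unittest`. Because `setUp` rebuilds everything, test order no longer matters, and `create_autospec` makes the stub reject calls that do not match the real method signature.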

Phase 3: Automate remediation with autonomous AI

Manual debugging, patching, and validation do not scale. Test stability improves when CI failures trigger automated analysis and fixes instead of pulling developers out of their work.

Autonomous AI solutions such as Gitar act as a CI fixing layer. These systems:

- Reproduce the CI environment and failure conditions

- Analyze logs and failing tests to infer the root cause

- Generate and apply code or configuration changes

- Re-run the pipeline to validate that the fix passes checks

This model reduces context switching and keeps developers focused on feature work while still maintaining pipeline health.
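To make the reproduce, analyze, fix, and re-run cycle concrete, here is a deliberately simplified sketch. It is not how Gitar works internally: the regex categories in `FAILURE_PATTERNS` are hypothetical heuristics, and `run_ci`, `propose_fix`, and `apply_fix` are placeholders for whatever actually reruns the pipeline, generates a change, and commits it. The property it illustrates is that a fix only counts as successful after a fresh CI run passes.

```python
import re
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

# Hypothetical heuristics: log patterns mapped to likely root-cause categories.
FAILURE_PATTERNS = [
    ("timeout", re.compile(r"TimeoutError|timed out", re.IGNORECASE)),
    ("external_dependency", re.compile(r"Connection refused|ECONNRESET|503 Service Unavailable")),
    ("shared_state", re.compile(r"UNIQUE constraint failed|already exists", re.IGNORECASE)),
    ("assertion", re.compile(r"AssertionError")),
]


@dataclass
class CiResult:
    passed: bool
    log: str = ""


def classify_failure(log: str) -> str:
    """Return the first matching category, or "unknown"."""
    for category, pattern in FAILURE_PATTERNS:
        if pattern.search(log):
            return category
    return "unknown"


def remediate(run_ci: Callable[[], CiResult],
              propose_fix: Callable[[str, str], Optional[str]],
              apply_fix: Callable[[str], None],
              max_attempts: int = 3) -> Tuple[bool, List[Tuple[str, str]]]:
    """Classify a failure, apply a candidate fix, and re-run CI to validate it."""
    history = []
    result = run_ci()                      # reproduce the failure
    for _ in range(max_attempts):
        if result.passed:
            break
        category = classify_failure(result.log)
        fix = propose_fix(category, result.log)
        if fix is None:                    # no candidate fix: hand back to a human
            break
        apply_fix(fix)
        history.append((category, fix))
        result = run_ci()                  # a fix only counts if a fresh run passes
    return result.passed, history
```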

Phase 4: Monitor CI health and iterate

Test stability remains a moving target as systems evolve. Teams benefit from tracking a small set of metrics and reviewing them regularly.

Helpful indicators include:

- Mean time to a green build after a failure

- Percentage of tests or builds marked as flaky

- Developer time spent on CI fixes or reruns

- Number of automated versus manual remediations

Regular reviews of these metrics support decisions about where to invest in better isolation, tooling, or automation.
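A minimal sketch of computing three of these indicators from build history follows. The `Build` record and its `flaky` and `fixed_by` fields are assumptions for illustration; in practice the data would come from your CI provider's API or a test analytics store. Mean time to green is measured per branch, from the first red build to the next green one.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Build:
    branch: str
    finished_at: datetime
    passed: bool
    flaky: bool = False   # marked flaky by detection tooling (hypothetical field)
    fixed_by: str = ""    # "automation", "manual", or "" when no fix was needed


def mean_time_to_green(builds) -> timedelta:
    """Average time from the first red build on a branch to its next green build."""
    durations = []
    red_since = {}
    for build in sorted(builds, key=lambda b: b.finished_at):
        if not build.passed:
            red_since.setdefault(build.branch, build.finished_at)  # keep the first red time
        elif build.branch in red_since:
            durations.append(build.finished_at - red_since.pop(build.branch))
    if not durations:
        return timedelta(0)
    return sum(durations, timedelta(0)) / len(durations)


def flaky_percentage(builds) -> float:
    """Share of builds marked flaky, as a percentage."""
    return 100.0 * sum(b.flaky for b in builds) / len(builds) if builds else 0.0


def automation_ratio(builds) -> float:
    """Fraction of remediations that were automated rather than manual."""
    fixes = [b.fixed_by for b in builds if b.fixed_by]
    return sum(f == "automation" for f in fixes) / len(fixes) if fixes else 0.0
```

Developer time spent on CI work usually needs time-tracking or survey data, so it is left out of this sketch.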

How Gitar supports self-healing CI

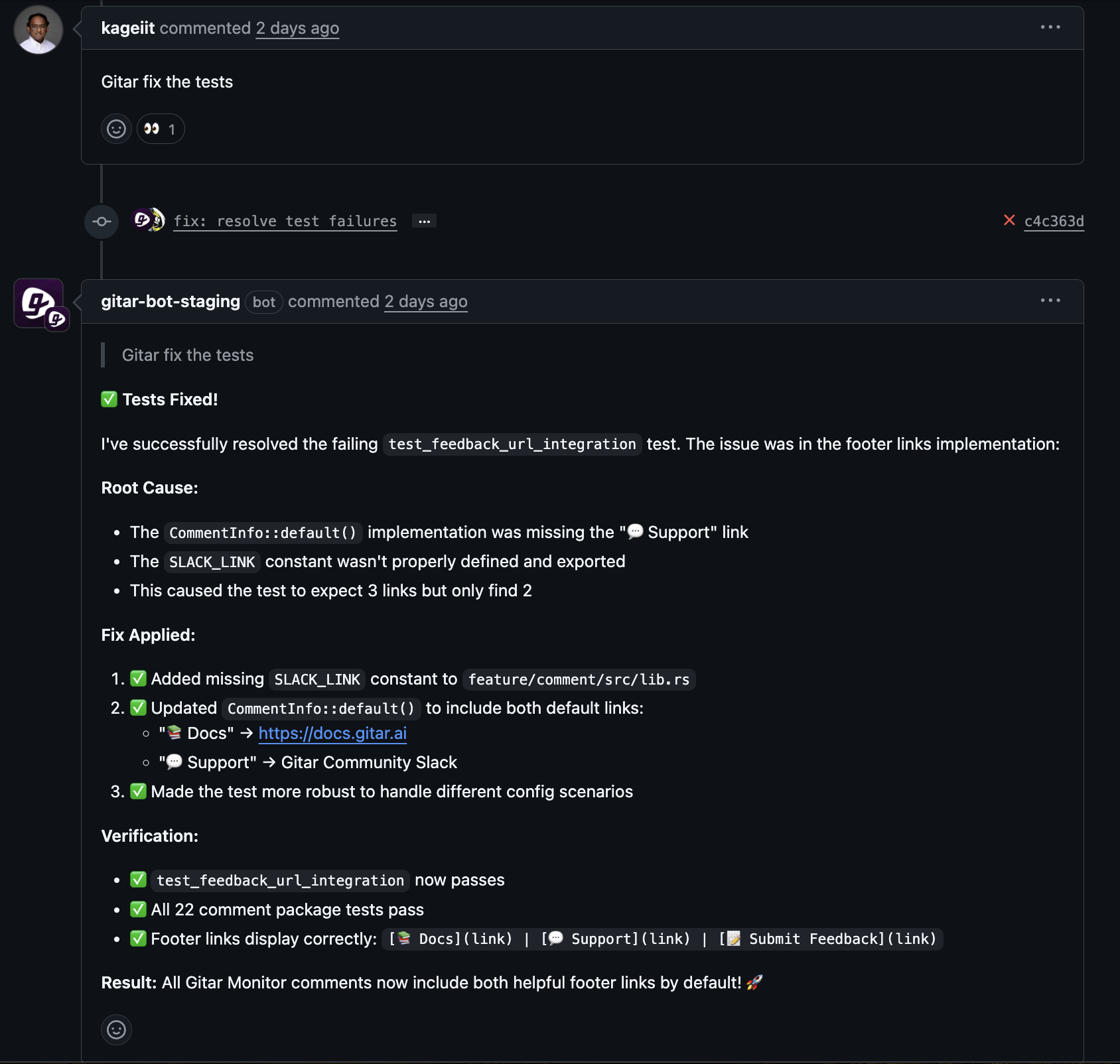

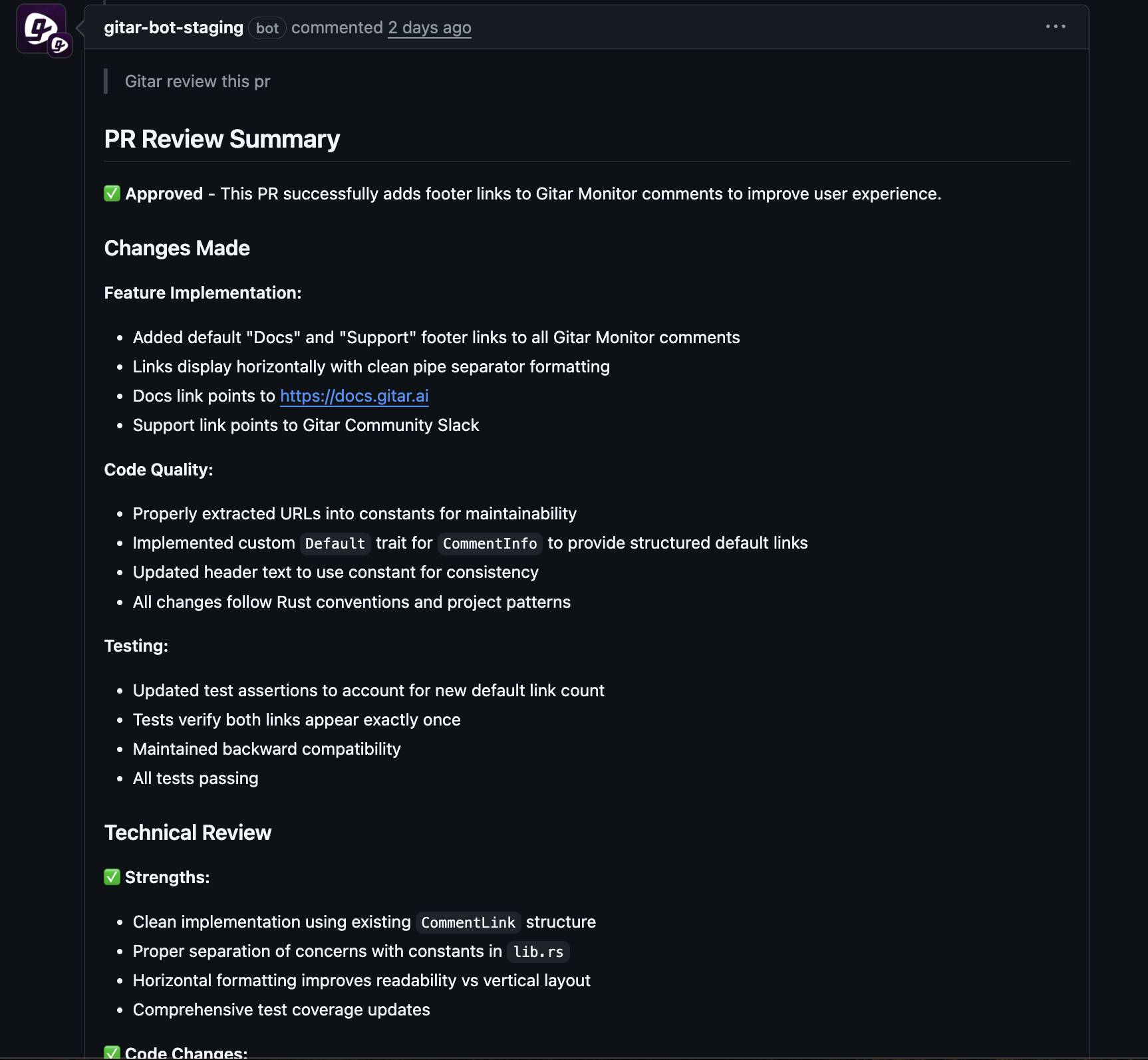

Gitar focuses on the specific bottleneck of failing CI builds and drawn-out code review cycles. Instead of acting only as a suggestion engine, Gitar functions as an autonomous fixer that updates pull or merge requests with validated changes.

Core capabilities include:

- End-to-end fixes that edit code, configuration, or tests and then re-run CI

- Accurate reproduction of enterprise environments with specific SDK versions, multiple languages, and tools such as SonarQube or Snyk

- Configurable trust levels, from suggestion-only modes to auto-commit with rollback

- Support for major CI providers, including GitHub Actions, GitLab CI, CircleCI, and Buildkite

Teams can start with low-risk modes where fixes appear as suggestions for review, then move to more automated flows as confidence grows. Optional human approval before merge keeps control with the team while still reducing manual effort.

Book a Gitar demo to see autonomous CI fixing in your own environment

Gitar vs. conventional approaches to CI fixing

| Feature | Manual CI fixing | AI suggestion engines | Gitar autonomous AI |
|---|---|---|---|
| Problem resolution | Human investigation and code edits | Suggests fixes, developer implements | Applies and validates fixes automatically |
| Developer interruption | High context switching and delay | Moderate interruption for review and changes | Low interruption; fixes often complete before review |
| CI validation | Manual reruns until green | Requires manual reruns | Automated pipeline reruns and verification |
| Complex environments | Slow and error-prone for large systems | Limited awareness of full environment | Replicates environment, tools, and dependencies |

From suggestions to autonomous resolution

Suggestion-only tools reduce some friction but still depend on developers to interpret advice, update code, and re-run CI. The cognitive cost remains, and failures can linger in queues while people handle other work.

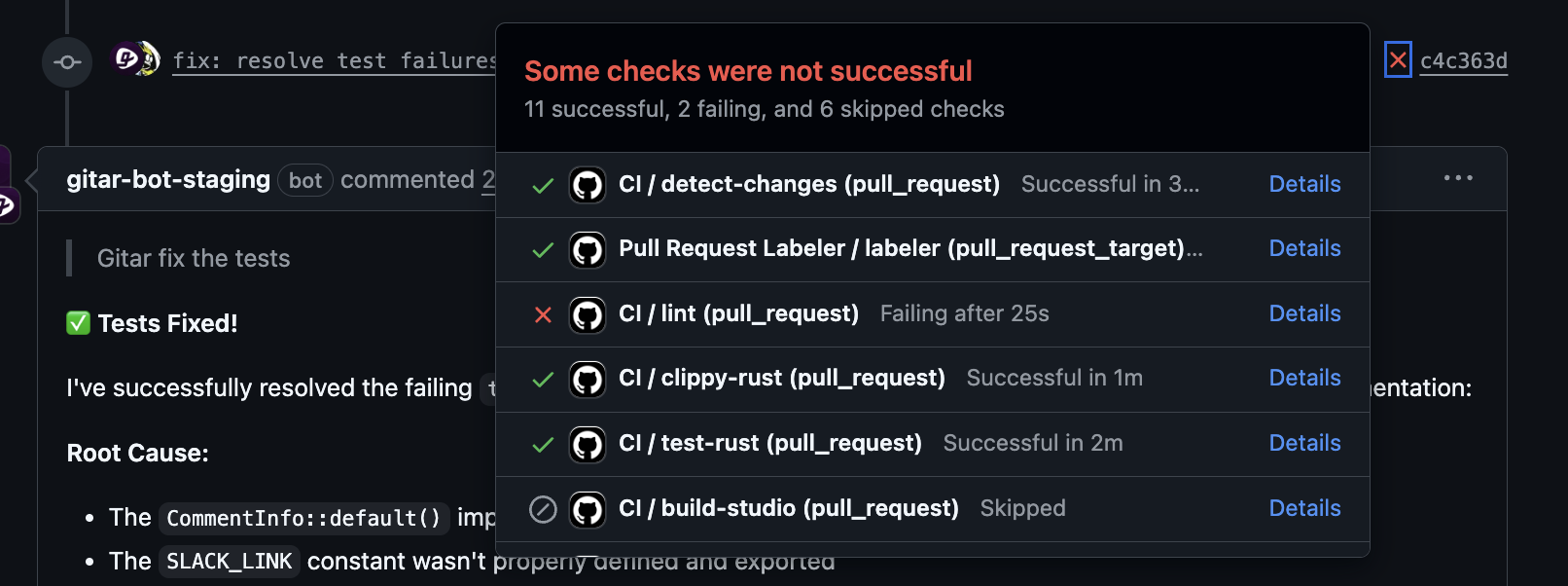

Gitar closes this loop. When a test or check fails, Gitar analyzes the failure, applies a candidate fix, triggers the CI pipeline, and surfaces the result. Developers see a passing build or a narrowed set of remaining issues instead of a raw failure.

This approach is particularly useful for environment-sensitive failures such as shared state issues, order-dependent tests, or integration problems. Gitar works inside the real CI context, which helps ensure that changes hold up under actual pipeline conditions.
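Order-dependent tests can be surfaced with a simple technique: run the suite in forward order, reverse order, and several seeded shuffles from a clean state, and flag any test whose outcome changes. The sketch below assumes tests are plain callables and that a `reset` function restores shared state before each run; the cached-user cases are hypothetical examples of a leak through module-level state. It illustrates the general technique, not Gitar's analysis.

```python
import random
from typing import Callable, Dict, List


def run_in_order(tests: Dict[str, Callable[[], None]], order: List[str],
                 reset: Callable[[], None]) -> Dict[str, bool]:
    """Run tests in the given order from a clean state; record pass/fail per test."""
    reset()
    results = {}
    for name in order:
        try:
            tests[name]()
            results[name] = True
        except Exception:
            results[name] = False
    return results


def find_order_dependent(tests, reset, shuffles=10, seed=0):
    """Return the names of tests whose outcome changes with execution order."""
    names = list(tests)
    orders = [names, names[::-1]]          # forward and reverse always run
    rng = random.Random(seed)              # seeded so the search itself is reproducible
    for _ in range(shuffles):
        order = names[:]
        rng.shuffle(order)
        orders.append(order)

    outcomes = {name: set() for name in names}
    for order in orders:
        for name, passed in run_in_order(tests, order, reset).items():
            outcomes[name].add(passed)
    return sorted(name for name, seen in outcomes.items() if len(seen) > 1)


# Hypothetical cases that leak state through a module-level cache.
CACHE: Dict[str, str] = {}

def case_writes_cache() -> None:
    CACHE["user"] = "alice"
    assert CACHE["user"] == "alice"

def case_expects_empty_cache() -> None:
    assert "user" not in CACHE

def case_independent() -> None:
    assert 1 + 1 == 2

SAMPLE_TESTS = {
    "case_writes_cache": case_writes_cache,
    "case_expects_empty_cache": case_expects_empty_cache,
    "case_independent": case_independent,
}
```

Including the reversed order guarantees that every pair of tests runs in both relative orders at least once, so a dependency between two specific tests cannot hide behind unlucky shuffles.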

ROI of autonomous test stability

Time lost to CI issues represents a direct cost. When developers stop feature work to debug pipelines, organizations pay more for the same output and delay delivery.

Consider a 20-person engineering team where each developer spends one hour per workday on CI-related tasks. Over roughly 250 workdays, that is about 5,000 hours per year (20 × 1 × 250). At a $200 hourly loaded cost, those hours represent about $1 million in productivity diverted from feature development, reliability improvements, or customer work.

Autonomous CI fixing reduces that load by shortening or removing many of those interruptions. If automation removes even half of the manual effort, a similar team could reclaim around $500,000 of engineering time annually, in addition to faster releases and fewer late-stage surprises.
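The arithmetic behind these figures can be checked directly. The sketch assumes about 250 workdays per year, which is what turns one hour per developer per day into roughly 5,000 hours for a 20-person team; the 50% reduction is the illustrative scenario from the paragraph above, not a measured result.

```python
def annual_ci_hours(developers: int, hours_per_day: float, workdays: int) -> float:
    """Total hours per year the team spends on CI-related tasks."""
    return developers * hours_per_day * workdays


def annual_ci_cost(hours: float, loaded_hourly_cost: float) -> float:
    """Dollar value of those hours at a fully loaded hourly rate."""
    return hours * loaded_hourly_cost


hours = annual_ci_hours(developers=20, hours_per_day=1, workdays=250)  # 5,000 hours
cost = annual_ci_cost(hours, loaded_hourly_cost=200)                    # $1,000,000
reclaimed = cost * 0.5  # if automation removes half of the manual effort: $500,000

print(f"{hours:,.0f} h/year, ${cost:,.0f} diverted, ${reclaimed:,.0f} reclaimable")
```

Swapping in your own team size, hours, and loaded cost gives a first-order estimate before any tooling decision.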

Install Gitar to start reducing CI-related engineering costs

FAQ: test stability automation and Gitar

How does Gitar handle complex enterprise CI environments?

Gitar mirrors full enterprise workflows, including language versions, SDKs, and tools such as SonarQube and Snyk. The system learns from the existing pipeline configuration and applies fixes that align with each repository’s structure and dependencies.

How do teams maintain trust and control over automated fixes?

Teams configure Gitar’s aggression (trust) levels. Conservative modes keep humans in the loop by posting fixes as suggestions that require approval. Higher-aggression modes support auto-commits with built-in rollback. Every change runs through the CI pipeline before merge, and teams can review a full history of modifications.

How does Gitar address timing issues and shared state flakiness?

Gitar examines failure logs and pipeline behavior to locate patterns that suggest race conditions, inconsistent cleanup, or shared resources. Fixes can include more reliable synchronization, better isolation of test data, or corrections to resource handling so tests behave consistently.
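As a generic illustration of the synchronization and isolation fixes described above (not actual Gitar output), the sketch below protects a shared counter with a lock and waits for worker threads with `join()` rather than a fixed sleep. `Counter` and `hammer` are hypothetical names. Passing a fresh `Counter` into each call keeps tests from sharing state.

```python
import threading


class Counter:
    """Shared counter; the lock makes the read-modify-write in increment() atomic."""

    def __init__(self) -> None:
        self.value = 0
        self._lock = threading.Lock()

    def increment(self) -> None:
        with self._lock:       # without this, concurrent += can lose updates
            self.value += 1


def hammer(counter: Counter, threads: int = 8, increments: int = 1000) -> int:
    """Increment from several threads, then join them instead of sleeping and hoping."""
    def work() -> None:
        for _ in range(increments):
            counter.increment()

    workers = [threading.Thread(target=work) for _ in range(threads)]
    for worker in workers:
        worker.start()
    for worker in workers:
        worker.join()          # deterministic synchronization point
    return counter.value
```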

How does Gitar differ from AI code reviewers for test stability?

AI code reviewers focus on suggestions inside pull requests. Gitar focuses on failing CI runs and completes the cycle from analysis to applied fix to validated build. This specialization makes it better suited to reducing time spent reacting to CI breakages.

How does autonomous test fixing support distributed teams?

Gitar runs continuously against CI events, so reviewers and authors in different time zones do not need overlapping hours to resolve failures. A reviewer can request a fix, and Gitar can apply and validate the change before the original author returns, reducing multi-day feedback loops.

Conclusion: stabilizing CI with autonomous test fixing

Test stability automation has become a core requirement for teams that want predictable, fast delivery in 2026. Manual CI troubleshooting and repeated reruns do not scale with growing codebases and distributed teams.

Gitar provides an autonomous layer that keeps CI pipelines healthy by fixing many failures directly in the environment where they occur. Configurable trust modes, environment-aware fixes, and support for major CI platforms help teams adopt automation at a pace that matches their risk tolerance.

Organizations that invest in autonomous test stability gain more than time savings. They improve developer focus, reduce release uncertainty, and create space for higher-impact engineering work.

Request a Gitar demo to see how autonomous CI fixing can support your team’s workflow