Key Takeaways

- Selenium test suites often create hidden maintenance costs, including infrastructure, specialist talent, and time spent fixing brittle UI tests instead of building features.

- Autonomous and AI-assisted testing changes the model from reactive “fix after failure” work to proactive, self-healing validation that reduces context switching and flakiness.

- Modern alternatives range from low-code platforms and newer frameworks to AI-driven test healing and fully autonomous CI agents, each with different tradeoffs for maintenance overhead.

- Successful adoption of autonomous solutions depends on clear ROI analysis, careful change management, and phased rollout that builds trust and fits existing workflows.

- Gitar provides an autonomous CI agent that fixes failing builds and review feedback automatically, helping teams reclaim developer time and reduce CI/CD friction; try Gitar to start reducing your CI maintenance load.

Why Selenium Maintenance Overhead Keeps Growing

Selenium maintenance often extends far beyond simple test updates. Many teams invest in grids, containers, and orchestration just to keep large suites running, which increases total cost of ownership. The framework itself may be free, but infrastructure, configuration, and ongoing care rarely are.

Talent requirements add another layer of cost. Teams frequently need experienced SDETs or developers to keep tests stable. Minor UI or CSS changes can break locators, and engineers may spend hours diagnosing failures that add little direct business value. Combined with context switching, this becomes a recurring productivity tax.

Modern development practices intensify this effect. Tools like GitHub Copilot and Cursor can increase code volume and pull requests, which expands test coverage needs and CI load. Writing and maintaining robust end-to-end UI tests can become a bottleneck when teams are already under pressure to ship faster.

Install Gitar to start reducing time spent on broken CI builds and repetitive maintenance work.

A New Mental Model: From Reactive Fixing to Autonomous Validation

Traditional Selenium workflows follow a fail-first pattern. Tests run, failures appear, and developers must stop their work, read logs, reproduce issues, patch locators or flows, and rerun pipelines. Even small fixes can stretch into long interruptions when context switching and queue times are included.

Autonomous and AI-assisted approaches promote a heal-first mindset. Systems adapt to expected changes, resolve common failures automatically, and only escalate edge cases. The goal shifts from writing more tests to reducing the time teams spend keeping existing tests and pipelines healthy.

Different categories of modern solutions address this shift in distinct ways:

- Low-code and no-code platforms simplify test authoring but usually do not remove maintenance overhead when the UI evolves.

- AI-driven healing solutions adjust locators and flows when elements change, which directly targets brittle UI tests.

- Autonomous CI agents operate across the full pipeline, diagnosing failures, applying fixes, and validating results without manual intervention.

This mental model focuses on lowering maintenance effort as the primary outcome, not just increasing test coverage or adding new reporting layers.

Modern Alternatives to Selenium: What They Solve and What They Do Not

The current tool ecosystem offers several paths away from manual Selenium maintenance. Evaluating them clearly helps leaders match tools to the problems they actually need to solve.

Low-Code and No-Code Platforms: Faster Authoring, Similar Upkeep

Low-code and no-code tools reduce the skill barrier for writing tests and speed up early automation work. Product managers, QA analysts, or support staff can often contribute flows through visual editors. However, when the UI changes, those tests still need care. Teams may trade script editing for test model updates, but the underlying maintenance obligation remains.

Next-Generation Frameworks: Better DX, Still Manual Fixes

Frameworks like Playwright and Cypress improve reliability, speed, and developer ergonomics compared with legacy Selenium setups. They simplify waits, provide richer debugging, and integrate well with modern CI. They still rely on hand-authored code, though, so engineers must update selectors and flows when the application evolves. Maintenance improves but does not disappear.

AI-Driven Test Healing: Direct Relief for Brittle UI Tests

AI-powered test healing platforms adjust tests when the UI changes, update locators automatically, and reduce flakiness by using multiple signals to find elements. Vendors of intelligent test maintenance solutions report potential maintenance reductions of more than 80 percent through self-healing and predictive capabilities. These tools concentrate on UI brittleness rather than broader CI or code review friction.
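To make the "multiple signals" idea concrete, here is a minimal sketch in Python. It assumes a simplified element model: the `Element` fields, the signal weights, and the threshold of 50 are illustrative choices, not the behavior of any particular healing product.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Element:
    """Simplified stand-in for a DOM element (hypothetical model)."""
    tag: str
    element_id: str = ""
    text: str = ""
    css_class: str = ""


# Illustrative integer weights: how much each signal contributes to a match.
WEIGHTS = {"element_id": 40, "text": 30, "tag": 20, "css_class": 10}


def similarity(expected: Element, candidate: Element) -> int:
    """Sum the weights of every non-empty signal on which both elements agree."""
    score = 0
    for field, weight in WEIGHTS.items():
        expected_value = getattr(expected, field)
        if expected_value and expected_value == getattr(candidate, field):
            score += weight
    return score


def heal_locator(expected: Element, page: Sequence[Element],
                 threshold: int = 50) -> Optional[Element]:
    """Return the best-matching element, or None if nothing is close enough."""
    if not page:
        return None
    best = max(page, key=lambda candidate: similarity(expected, candidate))
    return best if similarity(expected, best) >= threshold else None


# The test recorded a button with id "submit-btn"; a redesign renamed its id and class.
recorded = Element(tag="button", element_id="submit-btn",
                   text="Submit", css_class="btn-primary")
current_page = [
    Element(tag="a", element_id="help", text="Help", css_class="link"),
    Element(tag="button", element_id="checkout-submit",
            text="Submit", css_class="btn-cta"),
]
match = heal_locator(recorded, current_page)
```

In this sketch the renamed button is still found because its tag and visible text agree with the recording, while an unrelated page yields `None` so the failure can be escalated instead of silently mis-healed.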

Autonomous AI Agents for CI/CD: Closing the Loop from Failure to Fix

Autonomous CI agents sit inside the pipeline itself. They read logs, understand failures, propose and apply code changes, rerun the relevant jobs, and only surface results when the build returns to green or an issue needs human input. This approach reduces the manual attention that failing tests, lint errors, and configuration issues typically demand.
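The loop described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not any vendor's implementation: `run_checks`, `propose_fix`, and `apply_fix` are hypothetical callables standing in for the CI system and the agent, and the attempt limit is arbitrary.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class CheckResult:
    passed: bool
    log: str


def autonomous_fix_loop(
    run_checks: Callable[[], CheckResult],
    propose_fix: Callable[[str], Optional[str]],
    apply_fix: Callable[[str], None],
    max_attempts: int = 3,
) -> str:
    """Diagnose, fix, and rerun until the build is green, or escalate."""
    result = run_checks()
    for _ in range(max_attempts):
        if result.passed:
            return "green"
        patch = propose_fix(result.log)  # diagnose from the failure log
        if patch is None:
            return "escalate"  # no confident fix: hand over to a human
        apply_fix(patch)
        result = run_checks()  # validate the change before reporting
    return "green" if result.passed else "escalate"


# Stubbed demo: a lint failure that one patch resolves.
state = {"lint_fixed": False}


def fake_run_checks() -> CheckResult:
    if state["lint_fixed"]:
        return CheckResult(passed=True, log="")
    return CheckResult(passed=False, log="lint: unused import 'os'")


def fake_propose_fix(log: str) -> Optional[str]:
    return "remove unused import" if "unused import" in log else None


def fake_apply_fix(patch: str) -> None:
    state["lint_fixed"] = True


outcome = autonomous_fix_loop(fake_run_checks, fake_propose_fix, fake_apply_fix)
```

The important design choice is the two exits: the loop reports success only after a validating rerun, and it escalates as soon as no fix can be proposed or the attempt budget runs out.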

How Gitar Reduces CI and Review Maintenance Work

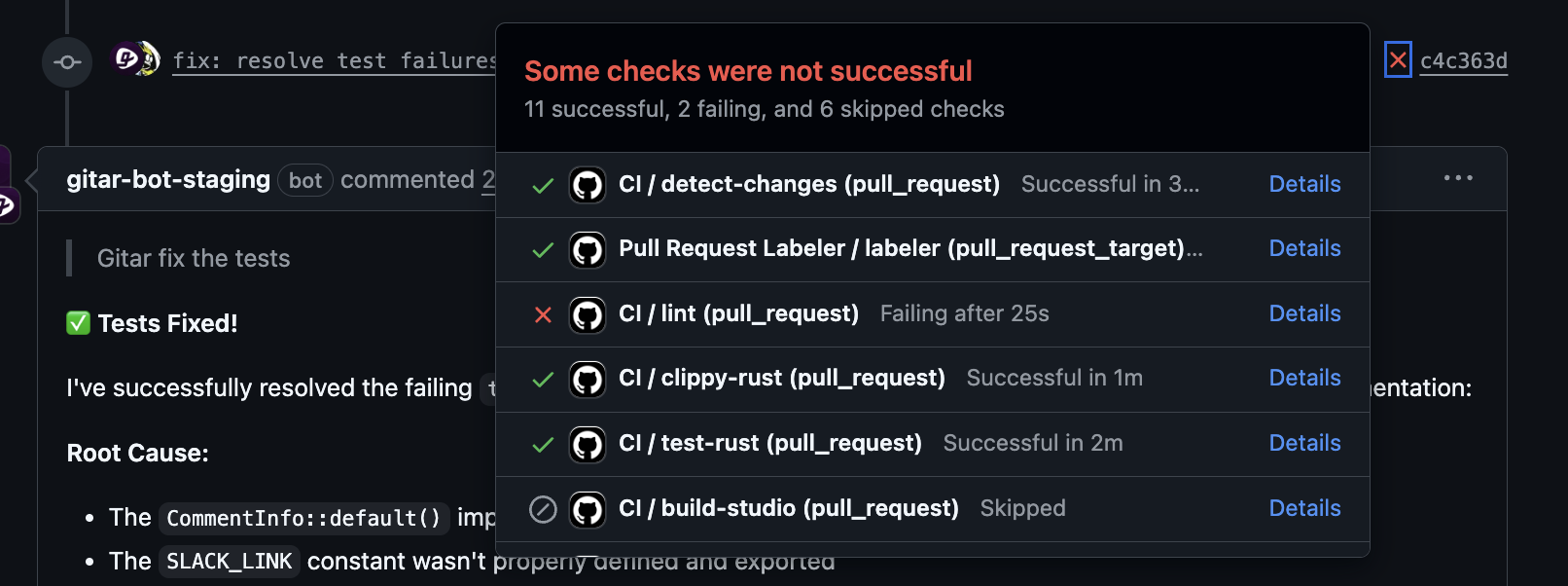

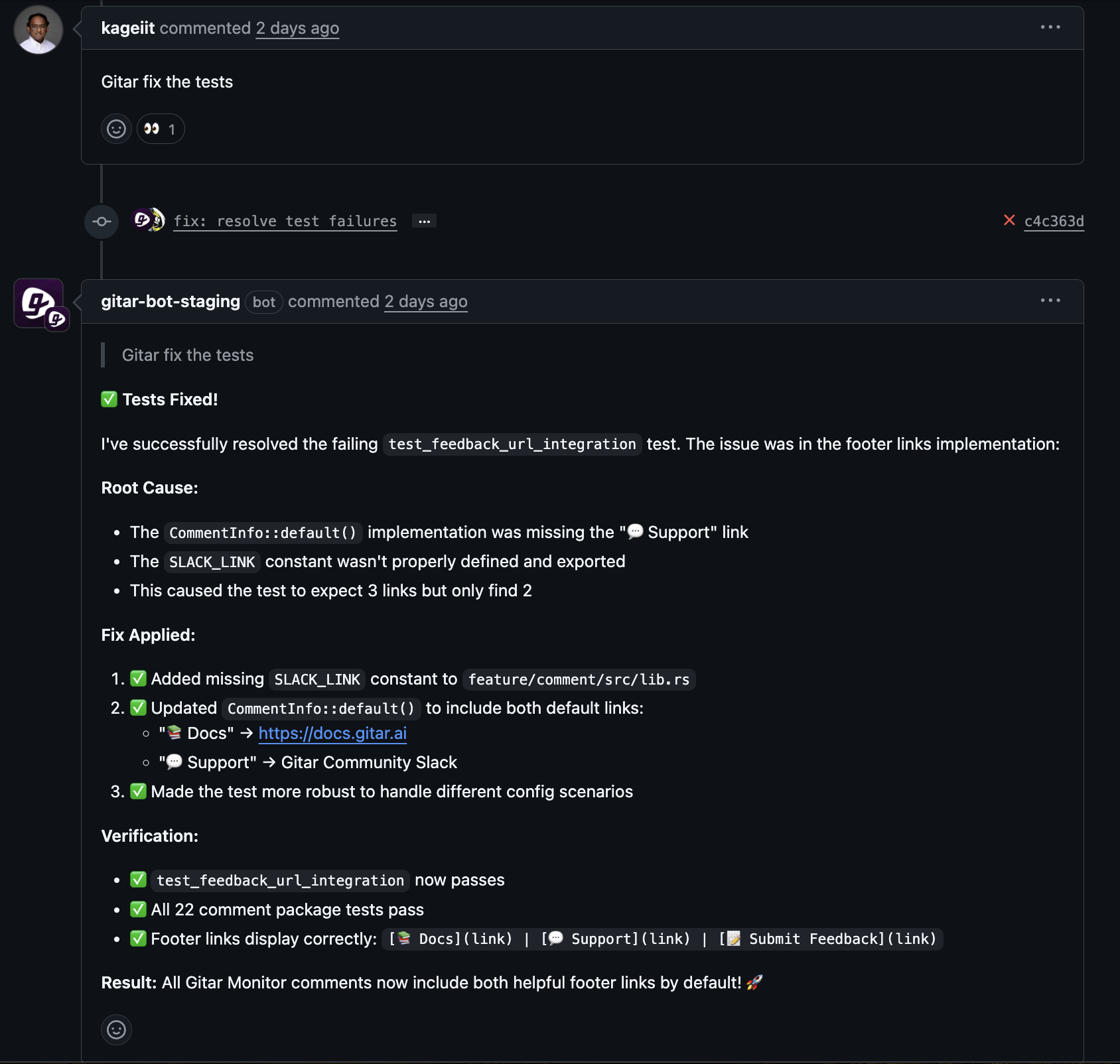

Gitar provides an autonomous AI agent focused on CI failures and code review follow-ups. It handles issues such as lint violations, test failures, and build errors, then pushes validated fixes back to the pull request. Engineers gain time to focus on design and feature work instead of pipeline triage.

When a check fails, Gitar analyzes logs, identifies the root cause, creates a change, runs the appropriate workflow, and only then updates the pull request. The result feels like self-healing CI, where routine failures resolve without a human jumping into logs or local repro steps.

Key capabilities that support this behavior include:

- End-to-end fixing, where Gitar applies changes, validates them in CI, and only surfaces green pull requests or clearly documented edge cases.

- Full environment replication for complex setups, including specific JDK or SDK versions, multi-language builds, and tools such as SonarQube or Snyk.

- A configurable trust model, with conservative modes that suggest commits for review and aggressive modes that commit directly with safety controls.

Distributed teams benefit from this during code review as well. A reviewer can leave comments at the end of their day, and Gitar implements the requested changes so that the author can focus on high-value review follow-up instead of mechanical edits.

Experience autonomous CI fixes with Gitar and reduce repetitive CI/CD overhead.

Strategic Considerations for Adopting Autonomous Automation

Adopting autonomous tools is a strategic decision, not just a tooling swap. Clear thinking about build versus buy, organizational readiness, and measurement makes success more likely.

Build vs. Buy: Clarifying ROI and Opportunity Cost

Internal builds require investment in AI infrastructure, environment modeling, and integrations. Open-source stacks can reduce licensing costs while increasing long-term ownership costs through maintenance and support. Purchased platforms shift some of that burden to vendors but introduce subscription or usage fees. Leaders should compare these options using expected hours saved on CI triage, test maintenance, and code review churn, not just license line items.
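One way to frame that comparison is as net annual value: the hours saved, priced at a loaded hourly cost, minus what the option costs to own. The sketch below uses placeholder inputs only; every figure is an assumption to be replaced with your own data, and the function name and parameters are illustrative.

```python
def net_annual_value(
    hours_saved_per_week: float,
    hourly_cost: float,
    annual_tool_cost: float,
    annual_upkeep_hours: float = 0.0,
    working_weeks: int = 48,
) -> float:
    """Value of engineering hours saved minus the cost of owning the option."""
    savings = hours_saved_per_week * working_weeks * hourly_cost
    ownership = annual_tool_cost + annual_upkeep_hours * hourly_cost
    return savings - ownership


# Placeholder inputs: both options save the same hours but cost different things.
build_value = net_annual_value(
    hours_saved_per_week=30, hourly_cost=100,
    annual_tool_cost=0, annual_upkeep_hours=800,  # internal maintenance time
)
buy_value = net_annual_value(
    hours_saved_per_week=30, hourly_cost=100,
    annual_tool_cost=40_000,  # subscription or usage fees
)
```

With different upkeep or fee assumptions the ranking can flip, which is the point of running the numbers rather than comparing license line items alone.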

Change Management: Building Trust in Automation

Teams adopt autonomous systems more smoothly when trust grows in stages. Many organizations begin in suggestion-only mode, where tools propose fixes and humans approve them, then expand to more autonomous modes as quality and safety prove out. Training, clear escalation paths, and fast feedback channels all help.
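Staged trust can be expressed as a small policy that starts every failure category in suggestion-only mode and promotes a category once reviewers have accepted enough of its suggestions. The category names, the sample-size floor, and the 95 percent acceptance bar below are hypothetical and do not reflect any product's configuration.

```python
from enum import Enum
from typing import Dict


class Mode(Enum):
    SUGGEST = "suggest"   # the tool proposes a fix; a human approves it
    AUTO_COMMIT = "auto"  # the tool commits the fix directly


# Every category starts in the conservative mode.
DEFAULT_POLICY: Dict[str, Mode] = {
    "lint": Mode.SUGGEST,
    "formatting": Mode.SUGGEST,
    "test_failure": Mode.SUGGEST,
}


def promote(policy: Dict[str, Mode], category: str, accepted: int, total: int,
            min_samples: int = 20, min_acceptance: float = 0.95) -> Dict[str, Mode]:
    """Return a copy of the policy, promoting the category if trust is earned."""
    updated = dict(policy)
    if total >= min_samples and accepted / total >= min_acceptance:
        updated[category] = Mode.AUTO_COMMIT
    return updated


def mode_for(policy: Dict[str, Mode], category: str) -> Mode:
    """Unknown categories fall back to the conservative mode."""
    return policy.get(category, Mode.SUGGEST)


# 48 of 50 lint suggestions were accepted, so lint is promoted.
policy = promote(DEFAULT_POLICY, "lint", accepted=48, total=50)
# Only 8 test-failure suggestions so far: too few samples to promote.
policy = promote(policy, "test_failure", accepted=5, total=8)
```

Keeping promotion per category lets low-risk fixes such as lint become autonomous while riskier categories stay under human review.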

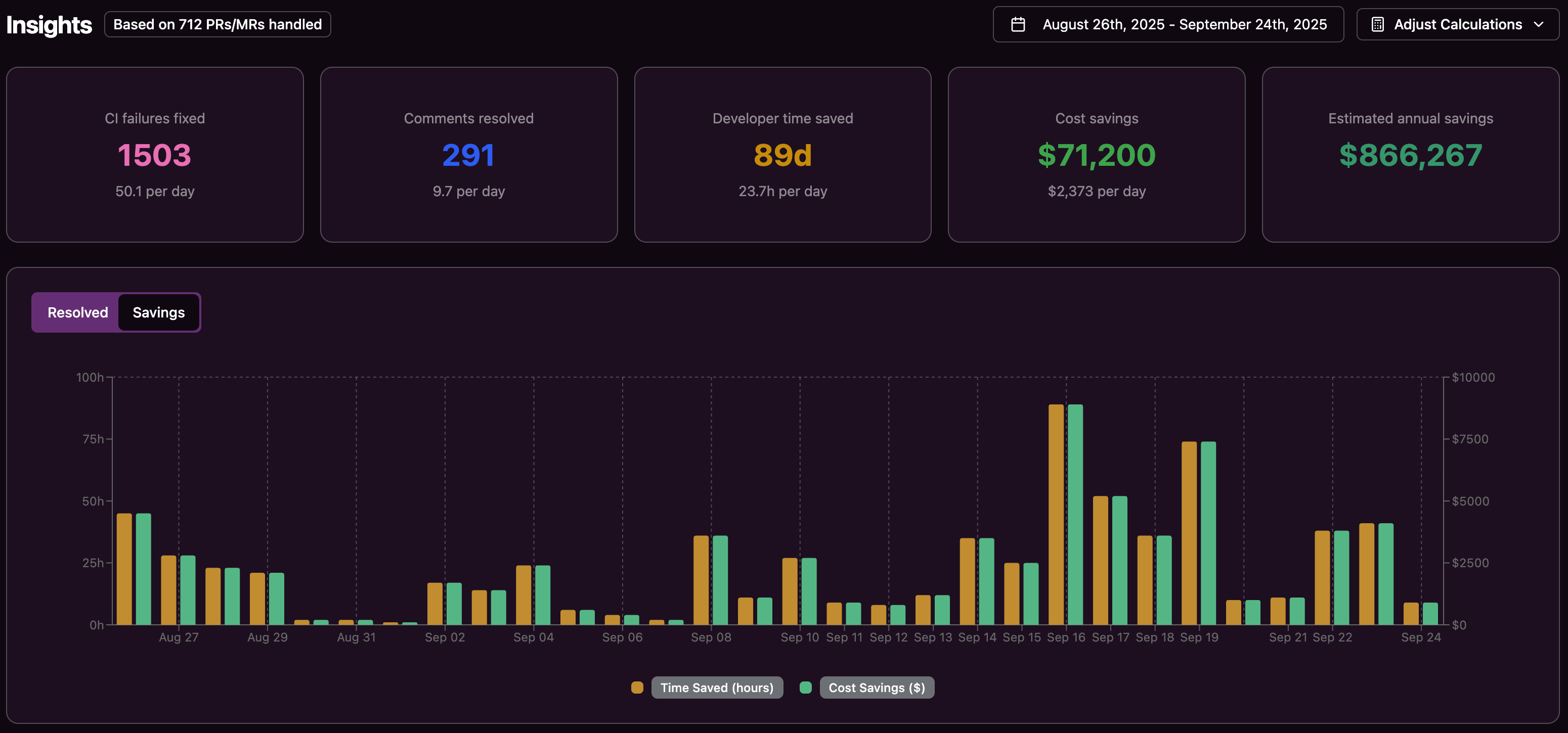

Measuring Success: Tracking the Impact on Delivery

Useful metrics include the time from when a pull request is first opened to when it merges, mean time to resolve CI failures, number of context switches per engineer per day, and the share of CI failures resolved without human intervention. For a 20-developer team, small time savings on each pull request can add up to meaningful annual cost reductions and faster release cycles.
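Two of these metrics, mean time to resolve CI failures and the share resolved without human intervention, are straightforward to compute once failures are recorded. The sketch below assumes a hypothetical `CiFailure` record shape and made-up sample data; real numbers would come from your CI provider's export or API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean
from typing import Sequence


@dataclass
class CiFailure:
    failed_at: datetime
    resolved_at: datetime
    resolved_by_human: bool


def mean_time_to_resolve(failures: Sequence[CiFailure]) -> timedelta:
    """Average time between a failure and its resolution (needs >= 1 record)."""
    seconds = mean(
        [(f.resolved_at - f.failed_at).total_seconds() for f in failures]
    )
    return timedelta(seconds=seconds)


def autonomous_resolution_share(failures: Sequence[CiFailure]) -> float:
    """Fraction of failures resolved without a human (needs >= 1 record)."""
    autonomous = sum(1 for f in failures if not f.resolved_by_human)
    return autonomous / len(failures)


# Made-up sample data for illustration.
start = datetime(2026, 1, 5, 9, 0)
failures = [
    CiFailure(start, start + timedelta(minutes=10), resolved_by_human=False),
    CiFailure(start, start + timedelta(minutes=50), resolved_by_human=True),
    CiFailure(start, start + timedelta(minutes=30), resolved_by_human=False),
    CiFailure(start, start + timedelta(minutes=30), resolved_by_human=False),
]
mttr = mean_time_to_resolve(failures)
share = autonomous_resolution_share(failures)
```

Capturing these values before a rollout gives a baseline against which later improvements can be judged.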

Implementation Tactics: Phased Rollout

Many teams start with a limited scope, such as one service or repository with frequent CI pain. They gather baseline metrics, enable conservative automation, and expand coverage only after seeing concrete improvements. This approach reduces risk and gives stakeholders real data for further investment decisions.

Common Pitfalls in Modernizing Test and CI Automation

Leaders can avoid costly missteps by recognizing a few common patterns.

- Underestimating the cost of free tools, including infrastructure, maintenance time, and talent needed to keep them running.

- Optimizing for authoring speed alone, which can create large suites that are expensive to maintain later.

- Expecting suggestion-only tools to deliver healing-level benefits, which leaves most of the manual work in place.

- Ignoring team skills and change fatigue, which can stall adoption even when tools are technically sound.

- Overlooking the merge and validation stages as potential bottlenecks, even as coding speed increases.

Use Gitar to address CI bottlenecks that often remain after test framework upgrades.

Frequently Asked Questions (FAQ)

How much financial impact can Selenium maintenance have on an enterprise?

Mid-size teams often dedicate several engineer-days each week to keeping tests and CI stable. Direct labor costs combine with indirect costs from slower releases, more context switching, and reduced morale. Over a year, this can represent a significant share of engineering capacity that does not create new product value.

Are frameworks like Playwright or Cypress enough to solve the problem?

Newer frameworks reduce flakiness and improve developer experience, but they still rely on manual edits when the application changes. They are a strong foundation, but they do not remove the need for human attention in CI and test maintenance in the way autonomous tools aim to.

How does an autonomous AI agent like Gitar differ from AI tools that only suggest changes?

Suggestion tools analyze code and leave comments that developers must implement and revalidate. Gitar analyzes failures, applies changes, runs CI, and returns results automatically, so developers mainly review the final outcome.

What is a good starting approach for teams unsure about automated fixes?

A practical approach uses a conservative mode first, where Gitar posts proposed changes as suggestions or separate commits for review. Teams review outcomes, tune policies, and then enable more autonomous behavior for low-risk categories of failures once they feel confident.

Conclusion: Turning Selenium Maintenance From Burden to Managed Overhead

Selenium-based testing remains valuable, but its maintenance overhead can limit how quickly teams move. Autonomous and AI-assisted solutions, especially at the CI layer, offer a way to reduce this drag without abandoning existing investments.

Gitar gives engineering leaders a concrete path to reclaim time from failing builds and repetitive review edits, while keeping existing workflows and tools in place. In 2026, organizations that treat maintenance as a problem to automate, not just to staff, will be better positioned to ship quickly and maintain quality.

Explore Gitar to reduce CI/CD maintenance overhead and help your team focus on shipping product work.