Key Takeaways

- Platform teams in 2026 operate under a “prove it or lose it” mandate, where operational efficiency and clear ROI determine budget and headcount.

- The largest overhead drivers include CI/CD pipeline toil, slow code reviews, complex infrastructure management, and growing governance requirements for AI systems.

- Autonomous AI that fixes CI failures and applies code changes reduces manual debugging, context switching, and review delays more effectively than suggestion-only tools.

- Leaders gain stronger executive support by combining build-vs-buy decisions, FinOps practices, and clear metrics that tie platform work to financial outcomes.

- Gitar provides an autonomous CI and code review assistant that fixes failures and implements feedback for you, so teams can reduce overhead and ship faster; get started with Gitar here.

The Operational Overhead Crisis in Platform Engineering: Why Today’s Challenges Demand New Solutions

Persistent Complexity

The emerging 2026 IT operating model is product-centric, AI-native, and platform-driven, designed explicitly to combat complexity. Hybrid cloud environments, microservices architectures, and AI-native applications expand the operational surface area. Each additional service or provider increases the risk of failures, configuration drift, and manual interventions that platform teams must absorb.

The Developer Productivity Drain

Developers currently spend around 42% of their week dealing with technical debt. A large share of that time goes to:

- Investigating and fixing CI failures

- Responding to code review feedback and re-running tests

- Recovering focus after context switching between incidents, PRs, and feature work

A short CI fix often expands into an hour of lost flow once interruptions and re-orientation time enter the picture.

Hidden Costs Beyond the Cloud Bill

AI initiatives incur significant hidden operational costs beyond cloud infrastructure bills, mainly in the form of governance, compliance, and risk management activities. Senior experts spend time on model approvals, explainability, and audits. These activities introduce recurring operational overhead that often sits outside traditional cloud cost dashboards.

The Right-Shift Bottleneck

Generative AI speeds up code creation, but it pushes the bottleneck to validation and merging. More code means more pull requests, more tests, and more opportunities for CI to fail. The primary challenge has shifted from producing code to validating and merging it through complex quality gates at scale.

A Strategic Framework for Operational Overhead Reduction

Identifying Overhead Hotspots

Platform teams benefit from auditing operational burden across four areas:

- CI/CD pipeline toil: Manual debugging, reruns, and flaky tests, often consuming 6–8 hours per developer each week.

- Code review delays: Latency from time zones, reviewer bandwidth, and multiple feedback cycles before approval.

- Infrastructure management: Custom platform maintenance, cloud cost management, and configuration drift control.

- Governance and compliance: AI model governance, security reviews, and regulatory checks for every change.

Quantifying the Impact: Beyond DORA Metrics

Traditional engineering metrics like DORA are insufficient to justify platform investments to finance leaders because they do not directly show business value. Platform teams gain support faster when they map work to business-oriented outcomes:

- Revenue enabled through faster time-to-market

- Costs avoided through fewer incidents and lower infrastructure spend

- Profit center contribution where platforms directly support new products or services

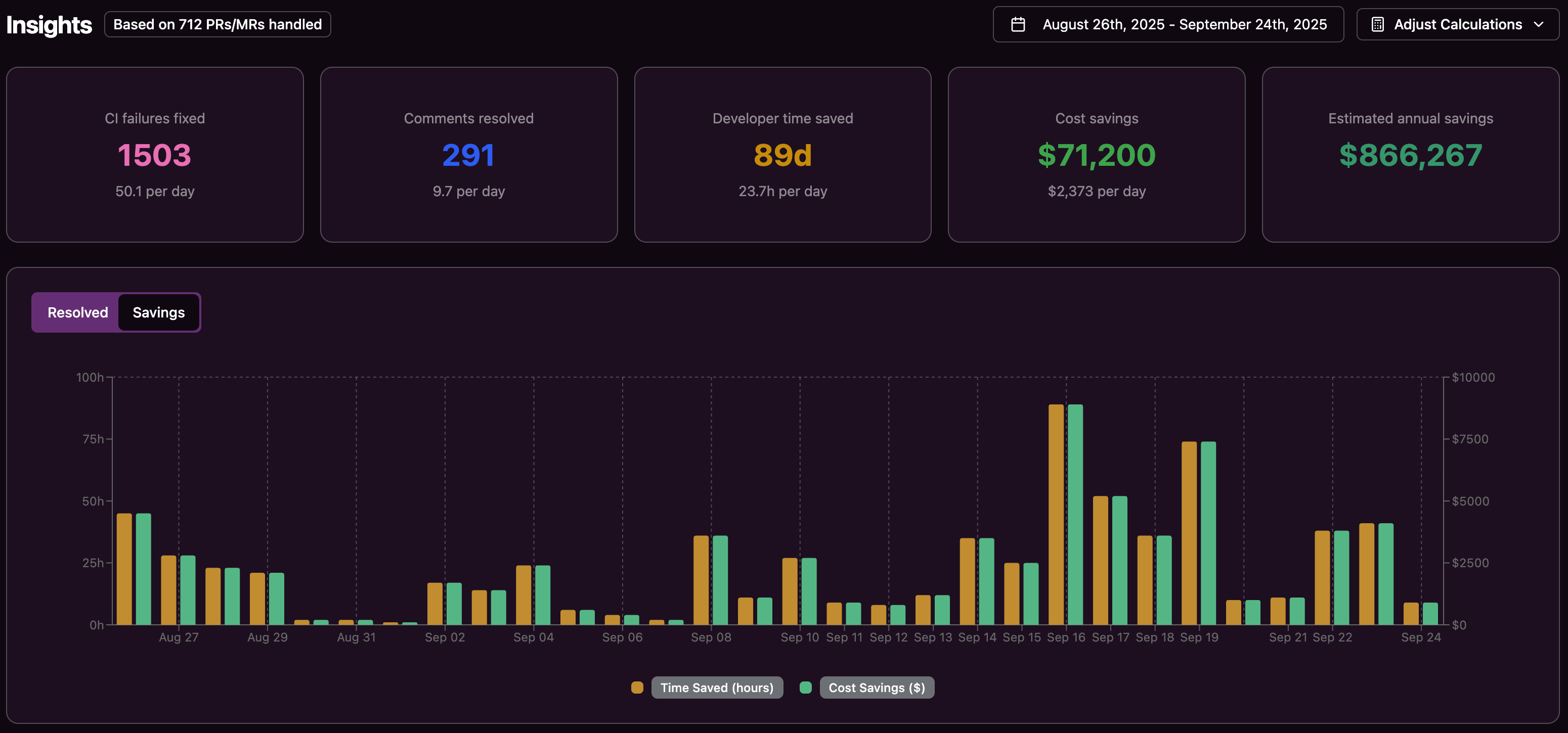

For a 20-developer team that spends one hour per day on CI and review issues, the result is roughly 5,000 hours annually (20 developers × 1 hour × about 250 working days). At a loaded cost of $200 per hour, that equals about $1 million in lost productivity. High-maturity platform teams report 40–50% developer productivity gains and 20–30% infrastructure cost reductions, which builds a clear case for more autonomous approaches.
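The arithmetic behind that estimate can be reproduced with a few lines of Python. This is a minimal sketch: the function names are illustrative, and the 250 working days per year is an assumption chosen because it matches the roughly 5,000-hour figure; substitute your own team's numbers.

```python
def annual_lost_hours(developers: int, hours_per_day: float, working_days: int = 250) -> float:
    """Hours lost per year across the whole team.

    working_days=250 is an assumption, not a figure from the article.
    """
    return developers * hours_per_day * working_days


def annual_cost(lost_hours: float, loaded_hourly_rate: float) -> float:
    """Convert lost hours into a loaded-cost figure in dollars."""
    return lost_hours * loaded_hourly_rate


# The article's example: 20 developers, 1 hour per day, $200 per loaded hour.
hours = annual_lost_hours(developers=20, hours_per_day=1.0)
cost = annual_cost(hours, loaded_hourly_rate=200.0)
print(f"{hours:,.0f} hours, ${cost:,.0f}")  # prints "5,000 hours, $1,000,000"
```

Keeping the inputs as parameters makes it easy to rerun the estimate for a different team size or rate when presenting to finance.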

Advanced Automation and AI: The Path to Autonomous Platform Operations

The Shift from Suggestion to Healing

Traditional AI reviewers identify issues and suggest edits, but developers still implement changes, re-run CI, and handle failures. This suggestion-only model keeps manual toil in the loop.

Autonomous systems change the approach. These systems analyze failures, propose and apply fixes, and validate results in CI before asking humans to review. The focus moves from highlighting problems to delivering working solutions that pass pipeline checks.
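The analyze, fix, and validate cycle described above can be sketched as a small retry loop. This is an illustration under stated assumptions, not Gitar's implementation: `propose_fix` and `run_ci` are hypothetical callables standing in for a fix generator and a CI runner, and `max_attempts` is an assumed safety limit.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple


@dataclass
class HealResult:
    fixed: bool
    attempts: int
    patch: Optional[str] = None


def heal(
    failure_log: str,
    propose_fix: Callable[[str], Optional[str]],  # hypothetical: log -> patch, or None if stuck
    run_ci: Callable[[str], Tuple[bool, str]],    # hypothetical: patch -> (passed, new log)
    max_attempts: int = 3,                        # assumed safety limit
) -> HealResult:
    """Propose a patch, validate it in CI, and retry with the new log on failure."""
    log = failure_log
    for attempt in range(1, max_attempts + 1):
        patch = propose_fix(log)
        if patch is None:
            # Nothing left to try: hand the failure back to a human.
            return HealResult(fixed=False, attempts=attempt)
        passed, log = run_ci(patch)
        if passed:
            # Only a CI-validated patch is surfaced for review.
            return HealResult(fixed=True, attempts=attempt, patch=patch)
    return HealResult(fixed=False, attempts=max_attempts)


# Toy stand-ins so the loop can be exercised without a real CI system.
def fake_propose(log: str) -> Optional[str]:
    return "fix-lint" if "lint" in log else "fix-test"


def fake_ci(patch: str) -> Tuple[bool, str]:
    return (patch == "fix-test", "test failure")


result = heal("lint error", fake_propose, fake_ci)
```

In this toy run the first patch fails CI, the loop feeds the new log back into the proposer, and the second patch passes, so the human reviewer only sees a change that is already green.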

Autonomous CI Fixes: How Gitar Changes CI/CD Workflows

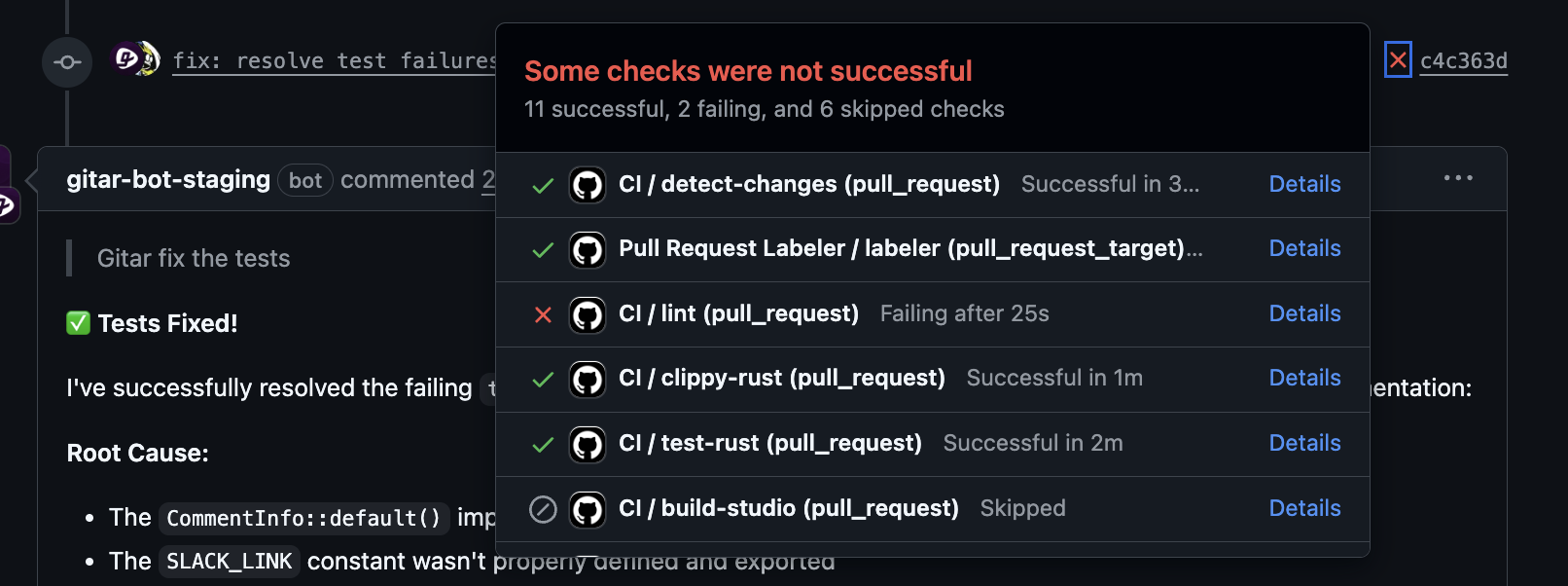

Gitar provides an autonomous AI agent that focuses on failing CI pipelines and code review feedback. When checks fail, such as lint errors, test failures, or build issues, Gitar analyzes logs, identifies likely root causes, generates code changes, and commits fixes back to the pull request branch once CI passes.

Teams can tune Gitar’s behavior with configurable modes. Conservative mode posts suggested changes that require one-click approval. More aggressive modes commit fixes automatically, with options for rollback if needed. This approach lets teams build trust gradually while still reducing CI toil.

| Feature | Gitar (healing engine) | Traditional AI reviewers | DIY model integrations |
| --- | --- | --- | --- |
| Core function | Fixes and validates CI failures autonomously | Suggests fixes and provides analysis | Requires custom engineering work |
| Validation against CI | Yes, targets passing builds before handoff | No, depends on manual validation | Requires custom validation pipelines |
| Manual intervention | Low and configurable | High, developers must implement suggestions | High, orchestration and prompt design |
| Environmental awareness | Works against the full enterprise CI environment | Often limited to code context | Needs extensive custom context wiring |

See how Gitar automates CI fixes across your existing pipelines.

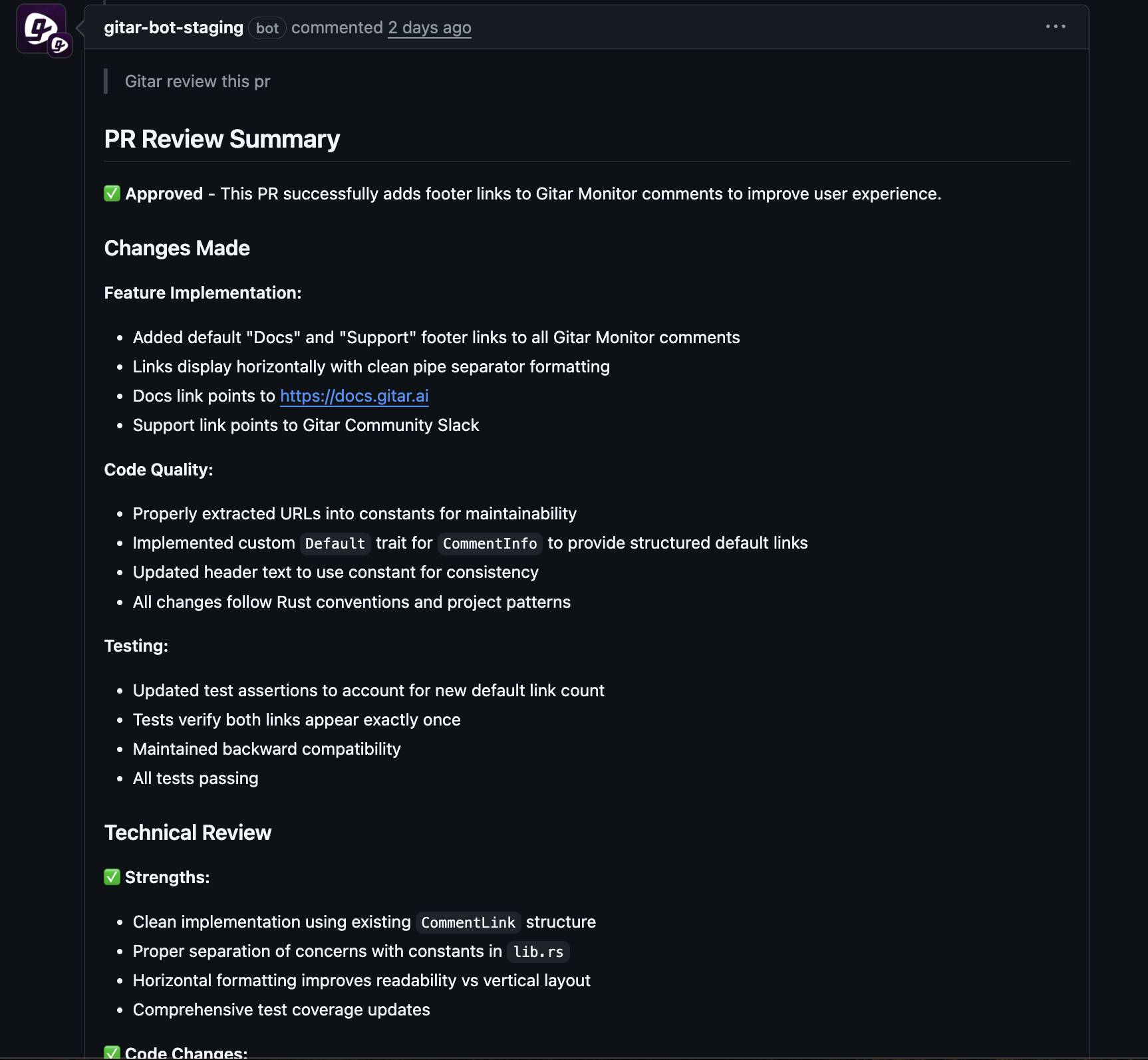

Beyond CI: Automating Code Review Feedback

Gitar also supports human reviewers. Reviewers can mention Gitar for an AI-generated review or leave comments that Gitar turns into concrete code changes. Teams working across time zones gain particular value, because reviewers can leave feedback at the end of their day while Gitar applies changes and re-runs CI so that the next team starts with a ready-to-approve PR.

Implementing an Autonomous Platform: Strategic Considerations for Leaders

Build vs. Buy: The Case for Managed Platforms

DIY platform engineering remains a significant challenge in 2026, especially for Backstage-based portals, because building, extending, and maintaining them takes substantial engineering effort. Every custom plugin, workflow, and integration becomes long-term build debt. Managed solutions reduce this maintenance load so internal teams can focus on higher-value problems.

Organizational Readiness and Trust Building

Effective adoption of autonomous tools usually follows a staged rollout:

- Start with suggestion-only modes in non-critical repositories.

- Track fix quality, rollback rates, and developer satisfaction.

- Expand to more repositories and enable auto-commit modes once teams gain confidence.

- Align approval workflows with existing code ownership and compliance rules.
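The decision to move a repository from suggestion-only to auto-commit can be made explicit as a small gate over the tracked signals. The sketch below is illustrative only: the `RepoStats` fields and all three thresholds are assumptions to be tuned per organization, not recommendations from any tool.

```python
from dataclasses import dataclass


@dataclass
class RepoStats:
    fixes_applied: int       # fixes accepted while in suggestion-only mode
    fixes_rolled_back: int   # accepted fixes that were later reverted
    satisfaction: float      # e.g. mean developer survey score on a 1-5 scale


def ready_for_auto_commit(
    stats: RepoStats,
    min_fixes: int = 50,               # assumed minimum sample size
    max_rollback_rate: float = 0.05,   # assumed threshold
    min_satisfaction: float = 4.0,     # assumed threshold
) -> bool:
    """Return True when the tracked signals support enabling auto-commit."""
    if stats.fixes_applied == 0 or stats.fixes_applied < min_fixes:
        return False  # not enough evidence yet
    rollback_rate = stats.fixes_rolled_back / stats.fixes_applied
    return rollback_rate <= max_rollback_rate and stats.satisfaction >= min_satisfaction
```

For example, `ready_for_auto_commit(RepoStats(100, 3, 4.2))` returns `True` under these assumed thresholds, while a repository with only ten applied fixes stays in suggestion-only mode regardless of its other scores.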

Integrating FinOps for Proactive Cost Control

FinOps has become a critical competency for platform engineers in 2026, and Kubernetes-native and multi-cloud cost optimization calls for dedicated tooling. Pairing FinOps with autonomous platforms lets teams enforce cost-aware policies before deployment, reduce wasteful environments, and surface cost anomalies early.
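A cost-aware policy enforced before deployment can be as simple as comparing an estimated cost with a budget. The following is a minimal sketch: the function name, the three outcomes, and the 80% warning ratio are all assumptions for illustration, and a real setup would pull the estimate from whatever cost tooling the team already uses.

```python
def check_budget(
    estimated_monthly_cost: float,
    monthly_budget: float,
    warn_ratio: float = 0.8,  # assumed: warn once the estimate reaches 80% of budget
) -> str:
    """Return 'block', 'warn', or 'allow' for a proposed deployment."""
    if estimated_monthly_cost > monthly_budget:
        return "block"
    if estimated_monthly_cost >= warn_ratio * monthly_budget:
        return "warn"
    return "allow"
```

Running a check like this as a pipeline step surfaces cost problems at review time instead of on the next invoice.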

Metrics for Success: Demonstrating Platform ROI

Teams measuring a diversified set of at least six metrics spanning DevOps performance, developer experience, and FinOps are significantly more likely to succeed. A balanced scorecard often includes:

- DORA and reliability metrics for delivery performance

- Developer experience and satisfaction scores

- Cost per environment, per service, or per feature

- Hours of toil removed from CI and code review workflows
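One way to keep a scorecard balanced is to check it against the two conditions mentioned above: at least six metrics, spread across DevOps performance, developer experience, and FinOps. The sketch below assumes a simple mapping from metric name to category; the metric names and category labels are illustrative, not a standard.

```python
from typing import Dict, List

# Assumed category labels for the three areas named in the text.
REQUIRED_CATEGORIES = ("devops", "devex", "finops")


def scorecard_gaps(metrics: Dict[str, str], min_metrics: int = 6) -> List[str]:
    """Return human-readable gaps; an empty list means the scorecard is balanced."""
    gaps = []
    if len(metrics) < min_metrics:
        gaps.append(f"only {len(metrics)} metrics, need at least {min_metrics}")
    covered = set(metrics.values())
    for category in REQUIRED_CATEGORIES:
        if category not in covered:
            gaps.append(f"no metric in category '{category}'")
    return gaps


# Hypothetical scorecard: metric name -> category.
scorecard = {
    "deployment_frequency": "devops",
    "change_failure_rate": "devops",
    "developer_satisfaction": "devex",
    "ci_toil_hours_removed": "devex",
    "cost_per_environment": "finops",
}
print(scorecard_gaps(scorecard))  # prints ['only 5 metrics, need at least 6']
```

Adding a sixth metric, such as cost per service, clears the remaining gap in this example.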

Strategic Pitfalls to Avoid in Your Overhead Reduction Journey

Underestimating Long-Term Maintenance

Internal platforms accumulate maintenance work across upgrades, security patches, plugin compatibility, and on-call coverage. Teams that overlook this ongoing cost often find their overhead rising again after initial gains.

Ignoring Developer Experience

Platforms that ignore user-centered design can increase cognitive load instead of reducing it. Clear interfaces, sensible defaults, and straightforward workflows help developers move quickly without extra training or support.

Focusing Only on Tactical Fixes

Narrow efforts that target isolated pain points often provide temporary relief. Structural reductions in overhead usually come from addressing systemic issues such as CI reliability, governance automation, and automated feedback loops in code review.

Lack of Quantifiable ROI

Platform teams that cannot express impact in financial terms struggle to secure continued investment. Translating technical wins into revenue enabled, costs avoided, and measurable productivity gains aligns their work with executive priorities.

Frequently Asked Questions (FAQ) about Reducing Operational Overhead

How can platform engineering demonstrate ROI beyond traditional technical metrics?

Platform engineering teams can frame ROI around business pillars such as revenue enabled, costs avoided, and profit center contribution. Converting developer experience improvements into minutes saved per developer per week, then into annual hours and loaded cost, yields a clear financial impact that finance leaders understand.

How does autonomous CI fixing differ from traditional AI code review tools in reducing operational overhead?

Traditional AI review tools act as suggestion engines. They highlight issues and propose edits, but developers still implement changes and re-run CI. Autonomous CI fixing works as a healing engine that identifies issues, applies code changes, and validates them against CI before developer review. This approach removes much of the manual toil and context switching tied to broken builds.

How can platform teams quantify the business impact of reducing developer context switching and CI failures?

Teams can multiply the average loaded hourly rate for developers by the number of hours lost to CI failures and context switching, then annualize the result. For example, a 20-developer team that loses one hour per day to CI and review issues reaches about 5,000 hours per year, assuming roughly 250 working days. At $200 per hour, this equals roughly $1 million in opportunity cost, which automation can significantly lower.

Conclusion: Reclaiming Efficiency with Autonomous Platform Operations

Reducing operational overhead has become a core requirement for platform engineering in 2026. Traditional approaches to CI/CD complexity, governance, and developer productivity no longer keep pace with hybrid, AI-native environments.

Autonomous AI within CI/CD offers a practical path forward. By turning reactive debugging into automated resolution, platforms evolve from cost centers into measurable efficiency engines. Gitar supports this shift by fixing CI failures, implementing code review feedback, and reducing the time developers spend on repetitive operational work.

Try Gitar to reduce CI/CD overhead and give your engineers more time for high-impact work.