AI-assisted coding promises faster workflows and better delivery for software development teams. However, many engineering teams find the reality falls short. AI tools often provide solutions that are close but not quite correct, needing constant human intervention. This disrupts productivity and damages trust in automation.

The challenge now is clear. Teams need to evolve AI tools from basic suggestion systems into dependable, autonomous partners. User feedback plays a vital role here, especially for managing CI/CD pipelines effectively. This guide shows how to use feedback to refine AI tools, turning frustration into reliability. You’ll learn why CI fixes are hard for AI, how to gather useful input, and how to apply these insights for ongoing improvement.

Why AI Struggles in CI/CD: The “Almost Correct” Issue

AI tools in software development often deliver solutions that seem helpful but miss the mark. In CI/CD environments, where accuracy is critical, this creates significant delays.

Picture this: a developer submits a pull request, expecting smooth integration, but the CI pipeline fails. The AI suggests a fix that solves most of the problem yet introduces a small error or overlooks a key detail. Now, the developer spends extra time fixing both the original issue and the AI’s mistake. Such near-misses can waste up to 30% of a developer’s time on CI and code review tasks.

Trust in AI suffers too. Developers often doubt AI-generated code due to frequent errors and inconsistent results. This leads to constant double-checking, which can slow the process down more than doing the work manually.

Even worse, these “almost correct” solutions break a developer’s focus. Switching back to fix an AI error after moving on to another task adds mental strain and wastes valuable time.

As AI helps create code faster, the volume of pull requests and potential failures grows. The result is a bottleneck in CI and review, exactly where teams hoped to gain speed. Ready to overcome these AI shortcomings? Install Gitar to get accurate, autonomous CI fixes from the start.

How User Feedback Improves AI for CI Fixes

Moving AI from “almost correct” to fully reliable requires a shift in strategy. Successful teams use feedback systems to help AI learn and adapt over time, turning basic tools into smart solutions for complex challenges.

AI must grow with each interaction in your specific setup. General models can’t grasp your unique codebase or CI/CD needs without input from your team. Feedback helps AI understand your preferences and environment better.

When a developer declines an AI fix, that choice teaches the system about coding standards or specific constraints. Accepted fixes reinforce what works well in your context, building a stronger foundation for future suggestions.

Feedback goes beyond yes or no responses. Building trust in AI involves understanding team values and practices through detailed input. This ensures AI aligns with your goals, not just technical rules.

As codebases and tools change, AI must keep up. Feedback bridges the gap between outdated training data and current needs, ensuring relevance. The best systems create a partnership where human insight shapes AI, allowing developers to focus on creative tasks while automation handles routine fixes.

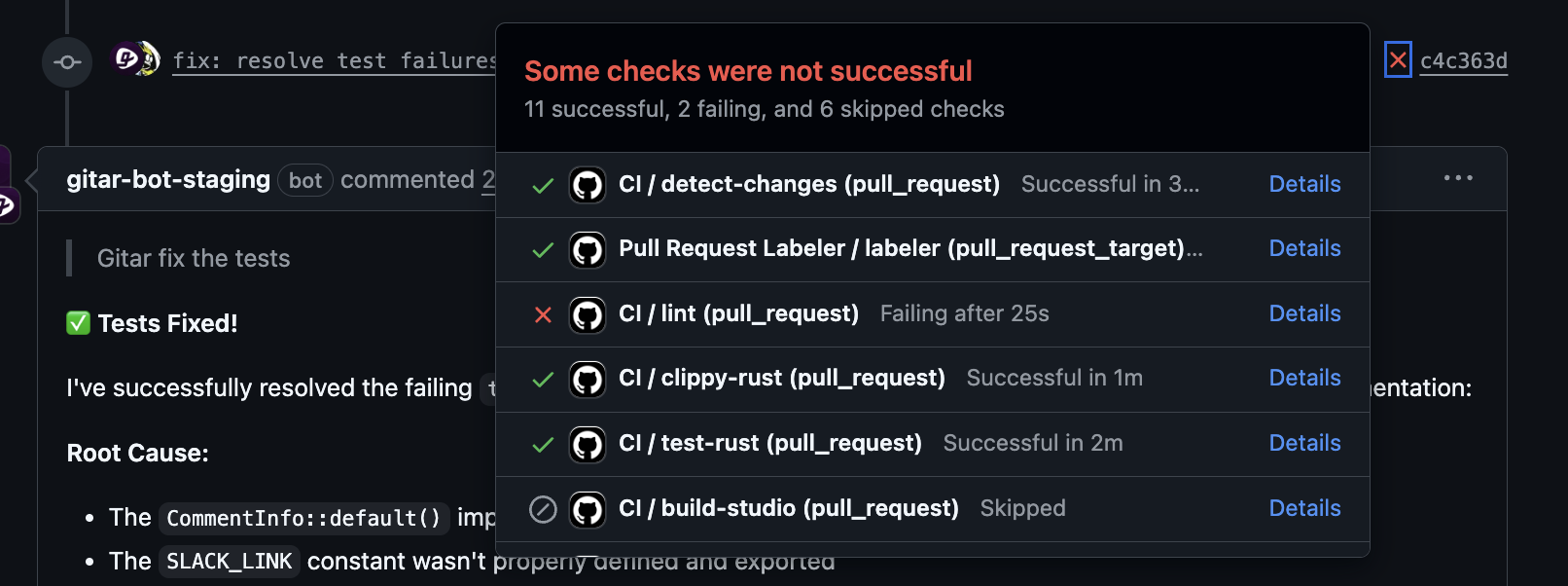

Gitar: AI-Driven CI Fixes That Learn from Feedback

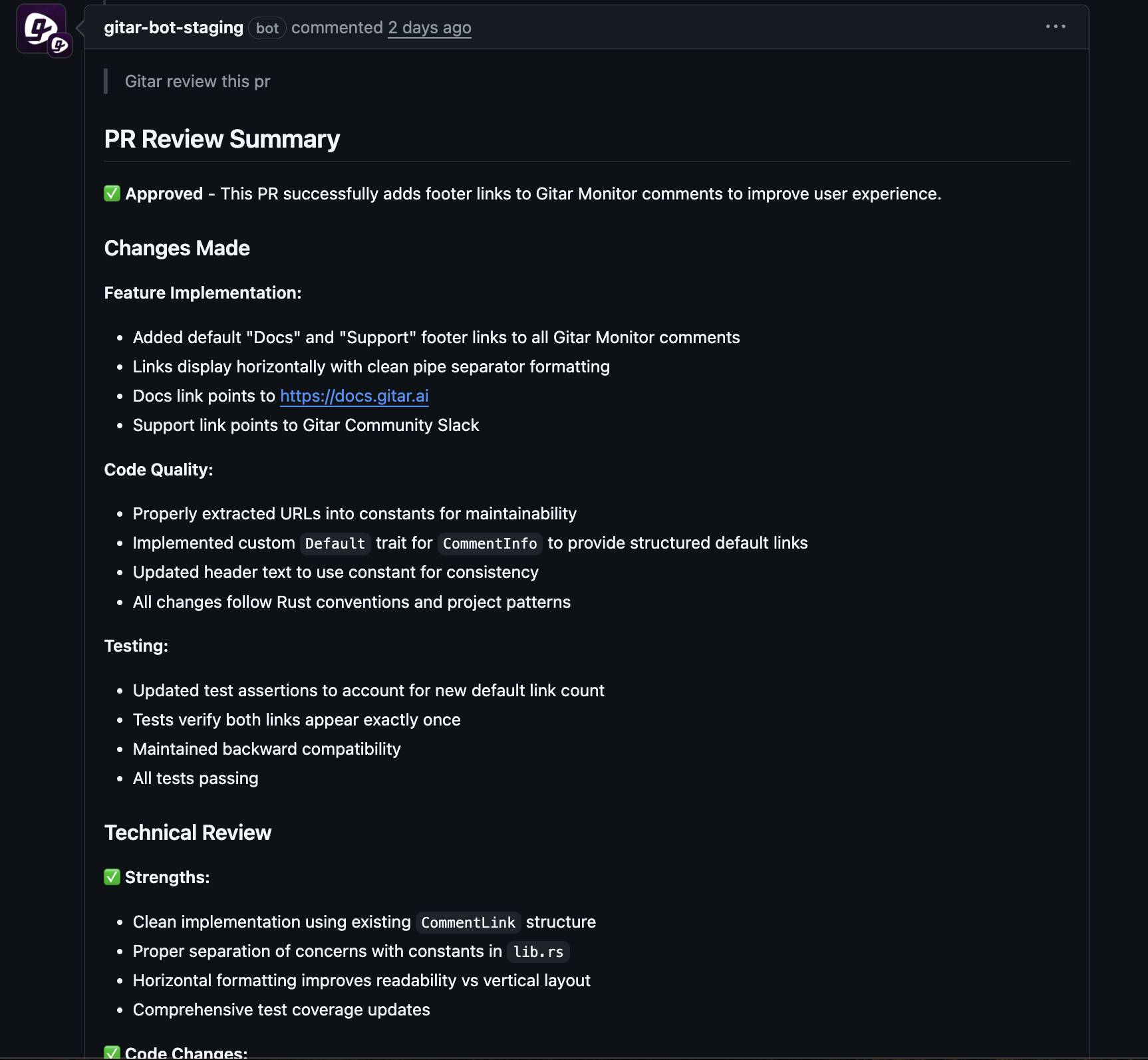

Gitar takes AI-assisted development to the next level by delivering autonomous CI fixes that evolve with user input. Unlike tools that only suggest changes for manual application, Gitar uses advanced learning to improve continuously.

How Gitar Builds Trust with a Flexible Model

Gitar tackles trust issues head-on with a customizable approach. Teams start in a suggestion-only mode, reviewing and approving fixes manually. This builds confidence through firsthand experience with Gitar’s accuracy.

Once comfortable, teams can switch to a mode where Gitar applies fixes directly. This gradual shift ensures trust grows from proven results, not assumptions. Each interaction refines Gitar’s understanding of your coding style and standards.

Context-Aware Fixes for Complex Setups

Gitar stands out by handling intricate enterprise CI environments. It adapts to specific software versions, multi-language builds, and tools like SonarQube, offering fixes tailored to your full system setup.

For instance, if a CI failure involves unique build scripts or dependencies, Gitar analyzes the entire context to craft a fitting solution. Feedback from actual results helps it manage setups that other AI tools can’t handle.

Real-Time Learning from Developer Input

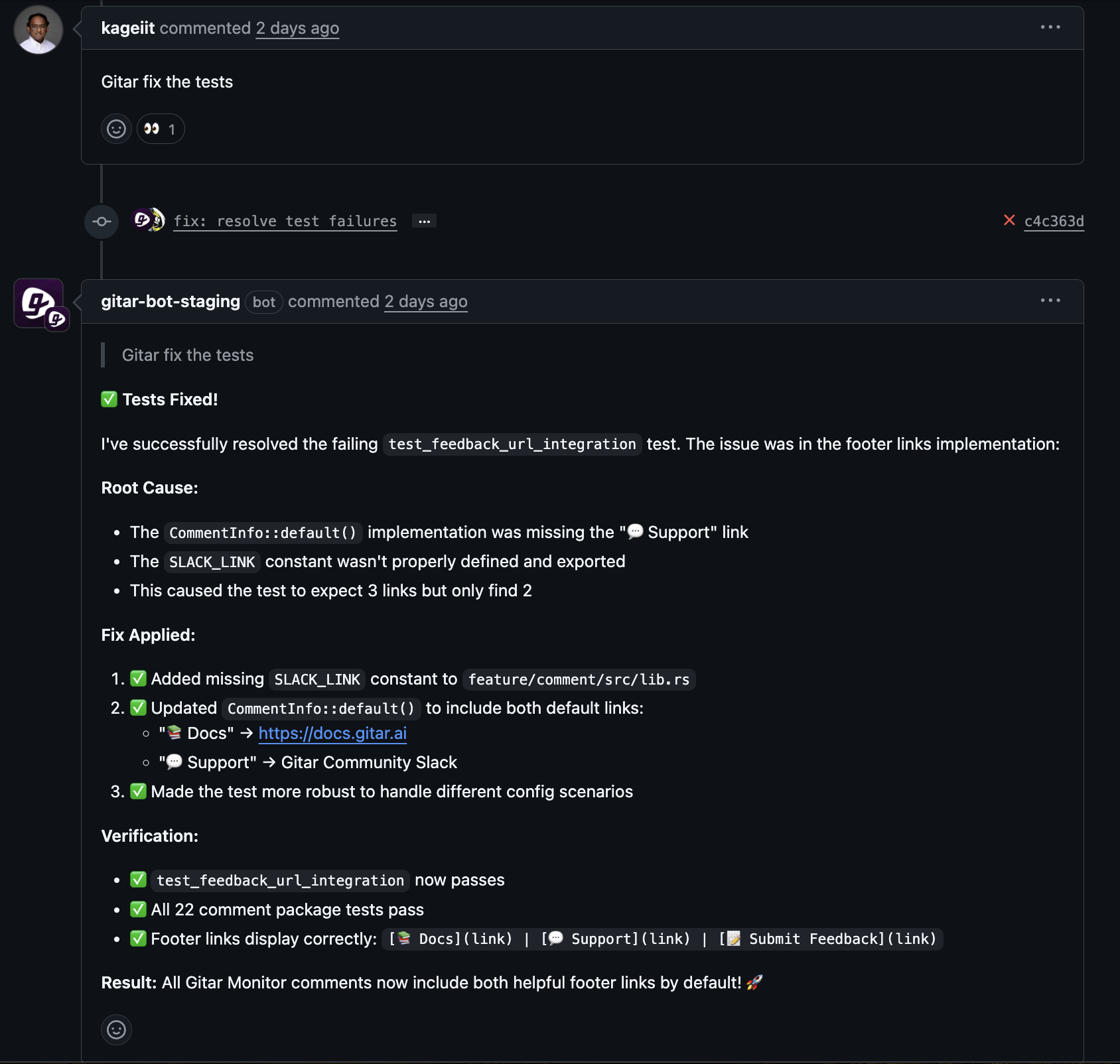

Gitar learns instantly from developer actions within workflows. Accepting or rejecting a suggestion feeds data back to improve future fixes. This process fits naturally into daily tasks, avoiding extra steps.

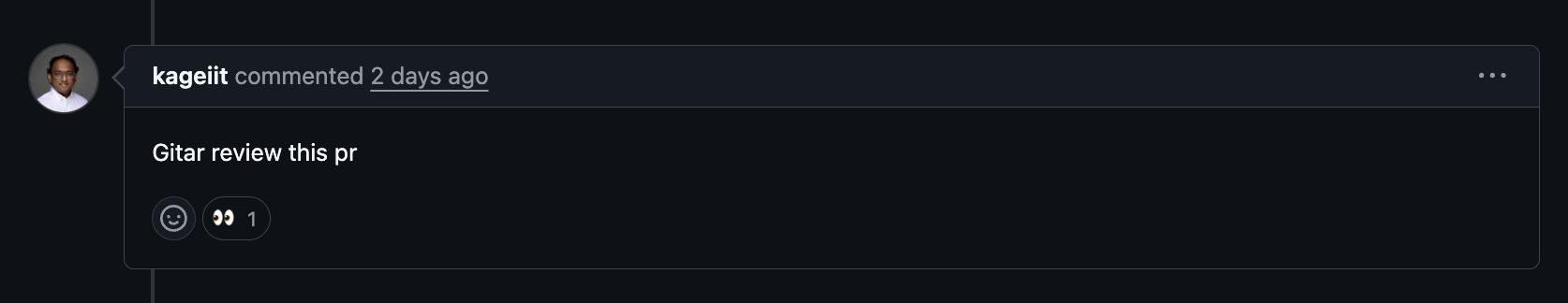

Developers can also guide Gitar via comments. A note like “@gitar use a map instead of a loop for efficiency” prompts a fix and teaches Gitar your preferences for future work. This direct input shapes its approach beyond basic checks.
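To make the idea of comment-based guidance concrete, here is a minimal sketch of how a tool could pull instructions out of review comments. It is not Gitar's actual implementation; the `extract_instructions` function and the `MENTION` pattern are hypothetical names introduced only for illustration.

```python
import re

# Hypothetical pattern: "@gitar" followed by free-form instruction text on the same line.
MENTION = re.compile(r"@gitar\b[ \t]*(.*)", re.IGNORECASE)


def extract_instructions(comment):
    """Return the instruction text of every "@gitar ..." line in a review comment."""
    instructions = []
    for match in MENTION.finditer(comment):
        text = match.group(1).strip()
        if text:  # ignore a bare mention with no instruction
            instructions.append(text)
    return instructions


if __name__ == "__main__":
    comment = "Looks fine overall.\n@gitar use a map instead of a loop for efficiency"
    print(extract_instructions(comment))
```

Each extracted instruction could then be stored alongside the pull request so that later suggestions take the stated preference into account.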

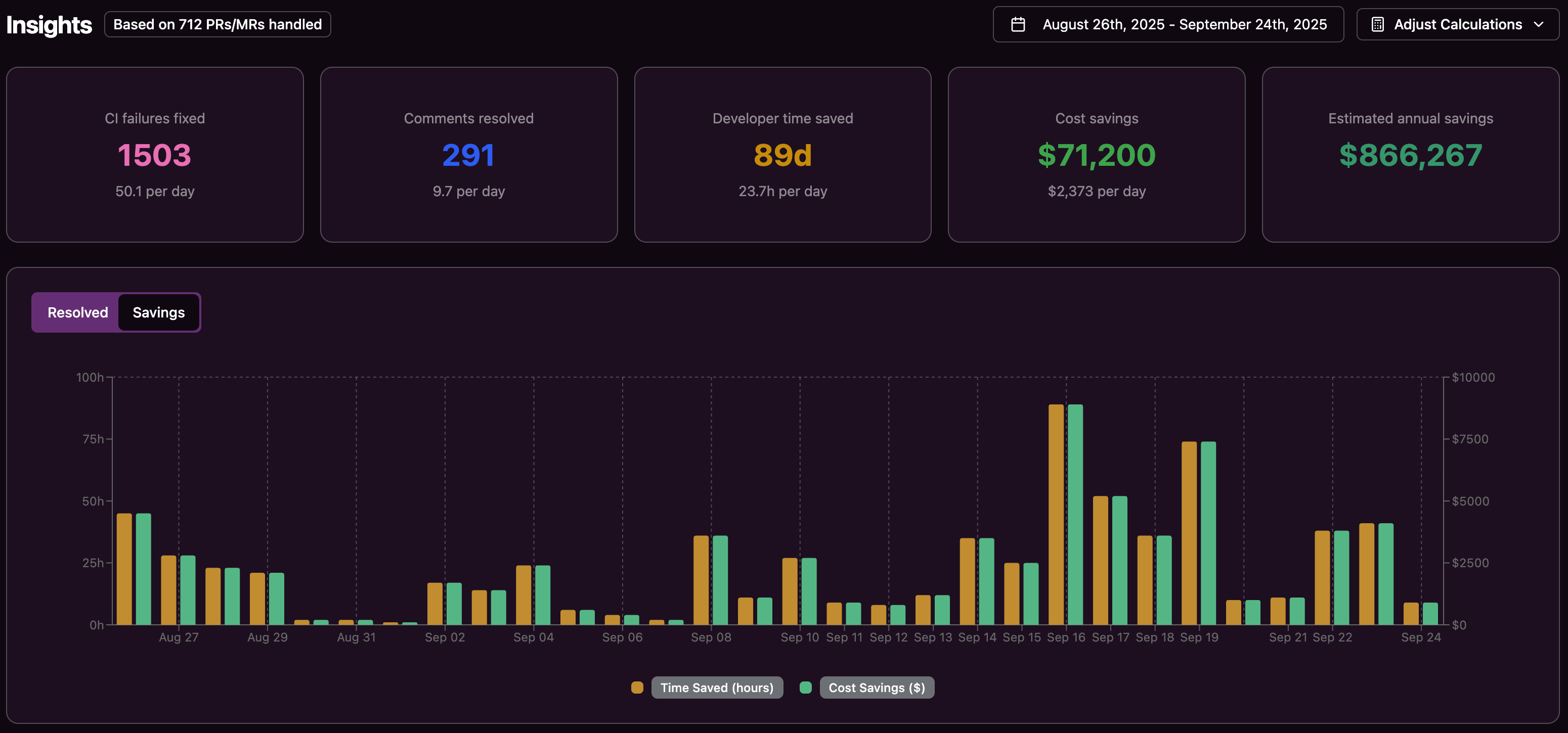

Track Progress with a Performance Dashboard

Gitar offers a dashboard to monitor AI effectiveness. It shows fix success rates, common issues, and interaction data, helping teams decide when to expand automation.

These insights also highlight code and pipeline trends, enabling proactive adjustments. Teams often spot recurring problems Gitar resolves, refining processes to prevent them. Install Gitar now to build an AI that grows with your team’s expertise and needs.

Effective Ways to Gather Actionable Feedback for AI Growth

Improving AI depends on capturing meaningful feedback during regular workflows. The goal is to design methods that provide deep insights without burdening developers.

Direct Feedback for Clear Insights

Direct input reveals user satisfaction and intent, guiding AI improvement. The focus is on seamless integration into daily tools.

- In-tool options within GitHub or GitLab let developers react to AI suggestions instantly with simple yes/no prompts or detailed notes.

- Periodic surveys or interviews dive deeper into AI’s impact on speed, accuracy, and alignment with team standards, seeking specific feedback.

- Dedicated channels, like Slack or forms, encourage ongoing discussion. Active monitoring and visible improvements boost participation.

Indirect Feedback from Usage Patterns

Indirect feedback tracks real behavior, offering large data sets for training. Interpreting these signals accurately is key.

- Analyzing acceptance or rejection rates shows where AI excels or struggles, pointing to specific improvement areas.

- Monitoring manual edits after AI fixes highlights unmet needs or preferences not captured in rules.

- Correlating CI success and merge times measures AI’s real impact, validating fixes or revealing new issues.
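The three signals above can be computed from a simple event log. The sketch below assumes a hypothetical `FixEvent` record with one entry per AI fix; the field names and category labels are illustrative, not taken from any specific tool.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class FixEvent:
    category: str       # hypothetical label, e.g. "lint", "test", "build"
    accepted: bool      # developer accepted the AI fix
    edited_after: bool  # developer manually edited the fix afterwards
    ci_passed: bool     # CI passed once the fix was applied


def summarize(events):
    """Return acceptance, post-edit, and CI pass rates per category."""
    buckets = defaultdict(list)
    for event in events:
        buckets[event.category].append(event)

    summary = {}
    for category, group in buckets.items():
        accepted = [e for e in group if e.accepted]
        n_accepted = len(accepted)
        summary[category] = {
            "acceptance_rate": n_accepted / len(group),
            # Edit and CI rates only make sense for fixes that were accepted.
            "edit_rate": sum(e.edited_after for e in accepted) / n_accepted if n_accepted else 0.0,
            "ci_pass_rate": sum(e.ci_passed for e in accepted) / n_accepted if n_accepted else 0.0,
        }
    return summary
```

A low acceptance rate in one category points to where the AI struggles, while a high edit rate on accepted fixes suggests preferences that the AI has not yet captured.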

Comparing Direct and Indirect Feedback for AI Refinement

| Feedback Type | Advantages | Challenges | Impact on Improvement |
| --- | --- | --- | --- |
| Direct (Ratings, Comments, Surveys) | Clear view of developer needs; detailed context | Lower response rates; possible bias | Sharpens specific AI actions and preferences |
| Indirect (Usage, Acceptance Rates) | Large data from natural use; shows true behavior | Needs careful analysis; lacks detail | Spots widespread issues and measures overall effect |

Combining both types works best. Indirect data finds trends, while direct input explains why they happen. This builds a complete picture for AI enhancement.

Turning Feedback into AI Progress

Feedback becomes useful only when analyzed for actionable steps. This process identifies patterns, uncovers root causes, and sets priorities for AI updates.

Finding Patterns in Feedback Data

Analysis starts with spotting common themes across feedback. It blends numerical data, like acceptance rates, with comments to form solid insights.

Tracking changes over time shows if AI adapts to updates in code or tools. Feedback tied to specific teams or contexts highlights where training or expectations need adjustment.
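One simple way to track changes over time is to group accept/reject decisions by calendar week. The sketch below assumes feedback is available as `(date, accepted)` pairs; the `weekly_acceptance` name and the input shape are assumptions made for illustration.

```python
from collections import defaultdict
from datetime import date


def weekly_acceptance(records):
    """Map (ISO year, ISO week) to the acceptance rate for that week.

    records: iterable of (date, accepted) pairs.
    """
    totals = defaultdict(lambda: [0, 0])  # week -> [accepted count, total count]
    for day, accepted in records:
        iso = day.isocalendar()
        week = (iso[0], iso[1])
        totals[week][0] += int(accepted)
        totals[week][1] += 1
    # Sort by week so the result reads as a timeline.
    return {week: acc / total for week, (acc, total) in sorted(totals.items())}


if __name__ == "__main__":
    records = [
        (date(2024, 1, 1), True),
        (date(2024, 1, 3), False),
        (date(2024, 1, 8), True),
    ]
    print(weekly_acceptance(records))
```

A rate that drops right after a dependency upgrade or a pipeline change is a hint that the AI has not yet adapted to the new setup.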

Understanding Why AI Fails

When feedback points to problems, digging deeper separates core flaws from setup issues. This looks at how AI interprets tasks, chooses fixes, and applies them.

Checking environmental factors reveals if failures come from missing context, like CI tool quirks. Examining training gaps shows if AI lacks examples for certain cases, guiding where to focus learning efforts.

Deciding Which Improvements Matter Most

Not all issues need equal focus. Prioritization balances how often a problem occurs, how much impact it has, and how much effort the fix needs. Fixes affecting daily work often rank higher than rare cases.
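As a rough illustration of weighing frequency, impact, and effort, the sketch below ranks issues by a single score. The formula (frequency times impact, divided by effort) and the 1 to 5 rating scales are assumptions chosen for simplicity; teams would tune both to their own context.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    name: str
    weekly_occurrences: int  # how often the problem shows up in feedback
    impact: int              # team-assigned rating, 1 (minor) to 5 (blocks merges)
    effort: int              # team-assigned estimate, 1 (trivial) to 5 (major work)


def priority(issue):
    """Higher score means the issue should be addressed sooner."""
    return issue.weekly_occurrences * issue.impact / issue.effort


def rank(issues):
    return sorted(issues, key=priority, reverse=True)


if __name__ == "__main__":
    backlog = [
        Issue("rare flaky test fix", 1, 5, 4),
        Issue("daily lint mismatch", 20, 2, 1),
        Issue("import ordering", 10, 1, 1),
    ]
    for issue in rank(backlog):
        print(issue.name, priority(issue))
```

In this example the daily lint mismatch ranks first because it is frequent and cheap to fix, even though the flaky test has a higher impact rating.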

Strategic value also guides choices. Updates that build trust or enable new features can outweigh minor technical fixes. Aligning with team goals ensures maximum benefit. Turn feedback into results with Gitar. Install now for CI fixes that adapt to your evolving needs.

Applying Feedback for Ongoing AI Learning

The real value of feedback lies in using it to enhance AI performance. This creates a cycle of improvement, turning insights into better results.

Updating AI Models with New Insights

AI needs regular retraining to stay relevant. This process integrates fresh feedback while preserving existing strengths, avoiding unintended setbacks.

Developer corrections offer high-quality data, showing preferred methods in real cases. Curating diverse, relevant examples ensures updates apply broadly, not just to specific feedback.

Testing AI Updates for Real Impact

Before full rollout, test candidate AI configurations to confirm that they actually improve outcomes. Comparing versions with real users measures effectiveness through metrics like fix success rates and user satisfaction.
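When two versions are compared on fix success rate, a basic statistical check helps separate real improvement from noise. The sketch below uses a standard two-proportion z-test built on the Python standard library; the function name and the choice of this particular test are assumptions, and other methods may suit small samples better.

```python
import math


def two_proportion_z(success_a, total_a, success_b, total_b):
    """Compare success rates of version A and version B.

    Returns (z, p_value), where p_value is two-sided under a normal approximation.
    """
    p_a = success_a / total_a
    p_b = success_b / total_b
    pooled = (success_a + success_b) / (total_a + total_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if se == 0:
        return 0.0, 1.0  # both versions always succeed or always fail
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return z, p_value


if __name__ == "__main__":
    # Hypothetical counts: version A fixed 60 of 100 failures, version B fixed 80 of 100.
    z, p = two_proportion_z(60, 100, 80, 100)
    print(f"z={z:.2f}, p={p:.4f}")
```

A positive z with a small p-value suggests version B's higher success rate is unlikely to be chance, though satisfaction metrics should still be checked alongside it.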

Rolling Out AI Changes Carefully

Deploying updates needs a cautious plan to manage risks. Gradual rollouts with feature flags limit impact, allowing monitoring and quick reversal if issues arise. Clear communication about changes keeps developers informed and engaged.
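A gradual rollout behind a feature flag can be as simple as a deterministic percentage check. The sketch below hashes a repository identifier into one of 100 buckets; the `in_rollout` function, the flag name, and the repository identifiers are hypothetical and stand in for whatever flagging system a team already uses.

```python
import hashlib


def in_rollout(repo_id, flag, percent):
    """Return True if repo_id falls inside the first `percent` buckets for this flag.

    The same repo_id and flag always map to the same bucket, so raising
    `percent` only adds repositories and never removes ones already enabled.
    """
    digest = hashlib.sha256(f"{flag}:{repo_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 100  # stable bucket in the range 0..99
    return bucket < percent


if __name__ == "__main__":
    repos = [f"org/service-{i}" for i in range(10)]
    enabled = [r for r in repos if in_rollout(r, "new-fix-model", 20)]
    print(enabled)
```

Setting `percent` back to 0 disables the update everywhere, which gives the quick reversal path described above.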

Real-Time Growth with Developer Guidance

Advanced systems like Gitar adapt instantly from developer input during reviews. A comment like “@gitar optimize this code” refines current and future fixes, creating a true partnership as AI learns team habits.

Common Questions on AI Improvement for CI Fixes

How Does Gitar Earn Trust from Skeptical Teams?

Gitar builds trust with a flexible model. It starts by suggesting fixes for manual approval, letting developers see results firsthand. Success tracking and full rollback options ensure control. Over time, as confidence grows, teams can allow direct fixes. Transparent reasoning for each action further strengthens trust.

Can Gitar Manage Complex CI Setups and Learn from Errors?

Gitar excels in enterprise environments with custom setups and dependencies. It mirrors your full workflow, ensuring fixes fit actual conditions. Errors become learning opportunities, refining Gitar’s grasp of unique rules or constraints for better future performance.

How Does Gitar Match Our Team’s Coding Style?

Gitar adapts to your coding standards via direct comments and observed edits. Requests like “@gitar simplify this function” teach it your style. It also learns from review feedback, ensuring fixes reflect team quality expectations beyond automated checks.

What Happens If Gitar Makes an Error?

If Gitar introduces an issue, rollback options limit impact. Feedback on what went wrong feeds into learning, reducing similar mistakes. This cycle of error and improvement builds confidence, showing AI that adapts rather than repeats errors.

How Fast Does Gitar Adjust to Workflow Changes?

Gitar responds to changes through detection and feedback. Simple updates, like new rules, are handled quickly. Bigger shifts, like style changes, adapt over a few interactions. Direct guidance can speed up this process, ensuring steady reliability.

Conclusion: Turn AI into a CI/CD Partner with Feedback

Moving beyond “almost correct” AI requires a focus on feedback for continuous growth. Teams that succeed treat input as the core of AI development, not an extra step.

Feedback-driven AI can boost both trust and productivity. When AI learns context from ongoing input, developers rely on it more. This trust enables automation that clears CI hurdles effectively.

Great AI isn’t just coded; it’s shaped through teamwork between humans and machines. Every piece of feedback builds an intelligence that understands your code, team, and goals. Gitar leads with this approach, using strong feedback systems to deliver adaptive, trusted CI fixes.

Teams adopting this model early gain an edge. While others wrestle with static tools, your AI will grow smarter, tackling new challenges without constant tweaks. This isn’t just a tech upgrade; it’s essential for sustaining development speed as software grows more complex.