Key Takeaways

- Enterprises now face significant productivity loss from CI failures and slow code review cycles, with some teams reporting that developers may spend up to 30% of their time on these issues.

- AI developer tools in 2026 span autocomplete, IDE agents, CI fixers, AI code reviewers, and test-generation tools, giving engineering leaders many options to address different bottlenecks.

- Autonomous CI-fixing agents such as Gitar focus on resolving failures and code review comments directly in pull requests so developers can stay focused on feature work.

- Successful enterprise adoption depends on phased rollout, strong governance, developer-in-the-loop workflows, and clear ROI metrics tied to time-to-merge and CI stability.

- Engineering leaders can use Gitar to create self-healing CI pipelines that reduce context switching and accelerate delivery across teams.

Why Enterprises Need Autonomous AI Developer Tools

As enterprises scale, CI pipelines and code review queues often turn into chronic bottlenecks. First-generation AI coding assistants improved typing speed but did not resolve these workflow-level delays.

Some organizations estimate that developers may spend up to 30% of their time dealing with CI and review friction. That time includes waiting on reviews, chasing flaky tests, and revisiting context long after the original change. For a 20-developer team, these interruptions can translate into roughly $1M per year in lost productivity.
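The $1M figure can be reproduced with simple arithmetic. The sketch below assumes a fully loaded cost of about $167,000 per developer per year; that cost is an illustrative assumption chosen to match the estimate, not a number reported by any team, and `annual_friction_cost` is a hypothetical helper name.

```python
def annual_friction_cost(developers: int, friction_share: float, loaded_cost_per_dev: float) -> float:
    """Estimate the yearly cost of time lost to CI and review friction."""
    return developers * friction_share * loaded_cost_per_dev


# Assumption: $167,000 fully loaded annual cost per developer (illustrative only).
estimate = annual_friction_cost(developers=20, friction_share=0.30, loaded_cost_per_dev=167_000)
print(f"${estimate:,.0f} per year")
```

Because 30% is an upper-bound estimate, plugging in your own friction share and cost figures gives a more realistic number for your team.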

These delays slow releases, increase operational costs, and create pressure on teams that already manage complex systems. The impact extends beyond engineering, as slower time-to-market and inconsistent release quality directly affect competitive position and customer trust.

Teams increasingly look for tools that move from suggestions to autonomous action so CI pipelines can recover on their own and pull requests move forward with less manual effort.

How AI Developer Tools Evolved From Helpers To Agents

AI developer tooling in 2026 covers the full lifecycle, from writing code to validating and deploying it. Early tools focused on inline suggestions, while current tools act as agents that understand repositories, run commands, and change code across multiple files.

Modern enterprise AI developer tools span several categories:

- Code autocomplete and coding assistants such as Codeium and Tabnine.

- AI-native IDEs and agents such as Cursor, Windsurf, and Amazon Q Developer.

- Agent-based development frameworks such as Factory, Cline, and Aider.

- AI-assisted unit testing tools such as Qodo and Diffblue.

- AI code review and understanding tools such as CodeRabbit, Qodo (formerly CodiumAI), and Sourcegraph Cody.

AI-native IDEs now combine deep repository context, agentic workflows, and conversational interfaces. Many can edit multiple files, run tests, and refine outputs based on feedback from developers.

Autonomous agents extend this model by performing workflow-level tasks in CI and code review. These agents monitor events, propose and apply fixes, and validate the result, which addresses core problems that suggestion-only tools leave to humans.

Most enterprises now assemble a stack of complementary AI tools, which increases the importance of interoperability, security controls, and consistent governance across that stack.

Gitar: Reducing CI Friction With Autonomous Fixes

Gitar focuses on the specific problem of CI failures and code review feedback that stall pull requests. The product acts as a self-healing layer on top of existing CI pipelines and Git providers.

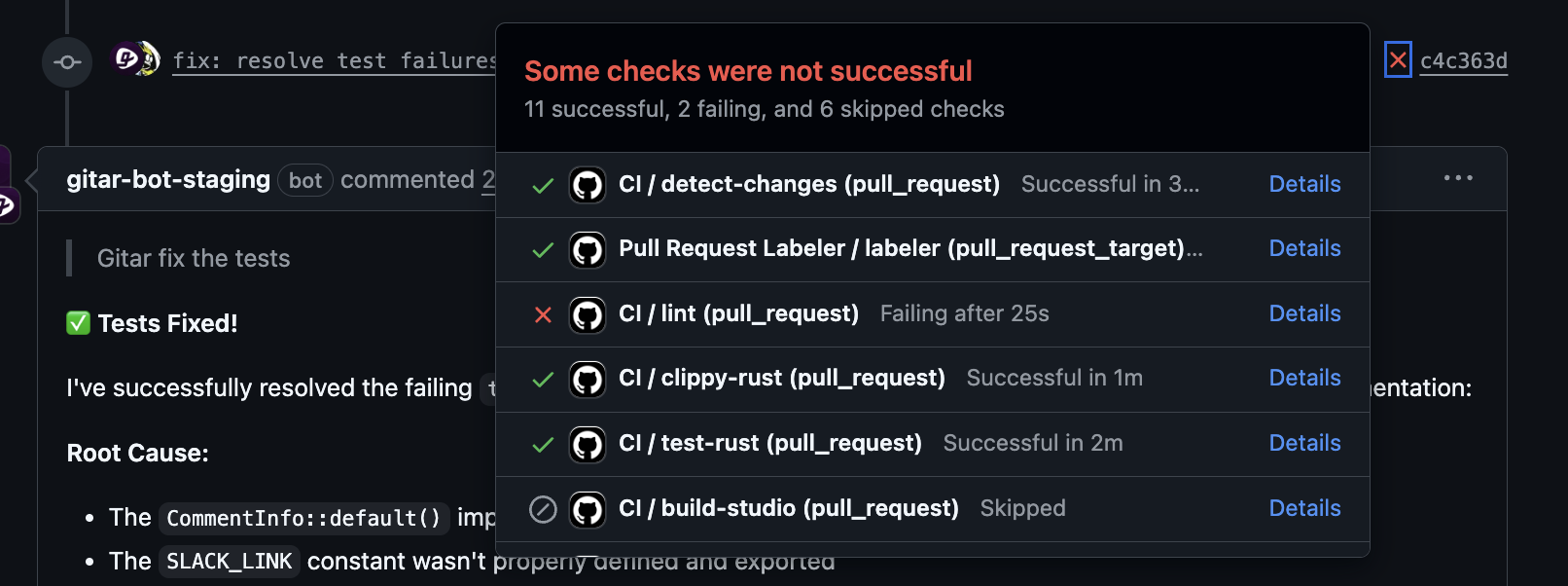

When a pull request fails CI or receives actionable review comments, Gitar receives the event, analyzes the failure, proposes a code change, and applies it in the repository. Developers see updated commits and status checks without leaving their current work.

Gitar behaves as a CI healing engine rather than a suggestion engine. Instead of posting advice and waiting for developers to act, it aims to resolve issues and validate fixes in the same CI environment that originally failed. This model particularly helps distributed teams where reviewers and authors work in different time zones.
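The receive, analyze, apply, and validate loop described above can be sketched in a few lines. This is a minimal illustration of the general pattern, not Gitar's implementation or API: `CIEvent`, `Fix`, `heal`, and the callback parameters are all hypothetical names, and the callbacks stand in for real calls to a Git provider and CI system.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class CIEvent:
    """A failed check on a pull request (hypothetical shape)."""
    pull_request: int
    check_name: str
    log: str


@dataclass
class Fix:
    """A proposed change (hypothetical shape)."""
    description: str
    patch: str


def heal(
    event: CIEvent,
    analyze: Callable[[CIEvent], Optional[Fix]],
    apply_fix: Callable[[int, Fix], None],
    rerun_check: Callable[[int, str], bool],
    max_attempts: int = 2,
) -> bool:
    """Analyze, apply, and re-validate until the check passes or attempts run out."""
    for _ in range(max_attempts):
        fix = analyze(event)
        if fix is None:
            return False  # nothing actionable: leave the failure to a human
        apply_fix(event.pull_request, fix)
        if rerun_check(event.pull_request, event.check_name):
            return True  # the fix was validated by the same check that failed
    return False


# Usage with stand-in callbacks (assumptions for illustration only).
applied = []
event = CIEvent(pull_request=101, check_name="lint", log="E501 line too long")
healed = heal(
    event,
    analyze=lambda e: Fix("wrap long line", "<patch>"),
    apply_fix=lambda pr, fix: applied.append((pr, fix.description)),
    rerun_check=lambda pr, check: True,
)
print(healed, applied)
```

Capping the number of attempts and returning control to a human when no fix is found keeps the loop from churning on failures it cannot resolve.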

Key Gitar Capabilities For Enterprises

- End-to-end autonomous fixing: Gitar identifies CI failures, updates code, and reruns checks, with coverage for lint violations, test failures, and build errors.

- Environment-aware execution: The platform emulates enterprise workflows, including specific JDK versions, multiple SDKs, and tools such as SonarQube and Snyk, to generate fixes that align with production behavior.

- Code review assistance: Reviewers can mention Gitar or leave structured comments so the agent applies requested changes, adds commits, and reports back in the pull request.

Teams that want to reduce CI-related interruptions can start with Gitar in conservative modes and increase automation as confidence grows. Explore Gitar to see how autonomous CI fixing fits into your workflow.

How Different Enterprise AI Developer Tools Address Bottlenecks

Automated CI Fixers To Stabilize Pipelines

Automated CI fixers such as Gitar target failures in CI pipelines and code review loops. These tools watch for broken builds, apply fixes, and re-run workflows so developers do not need to leave feature work to repair pipelines.

Their main value comes from shorter CI/CD cycles, fewer context switches, and reduced time spent on repetitive debugging tasks.

AI Code Reviewers To Improve Quality

AI code reviewers such as CodeRabbit and Qodo (formerly CodiumAI) analyze pull requests, summarize changes, and flag potential defects or missing tests. Some can generate follow-up suggestions or test cases.

These systems improve review coverage and consistency but usually rely on humans to apply or validate the recommended changes.

AI IDEs And Agents To Support Daily Coding

AI-native IDEs such as Cursor and Windsurf integrate agents directly into the editor. Developers can ask the agent to refactor code, implement features, or debug issues across files without leaving the IDE.

These tools primarily enhance individual productivity and codebase understanding but may not automatically react to CI events or review queues.

AI Test Generation Tools To Strengthen Coverage

Unit test generation tools such as Qodo and Diffblue focus on creating and maintaining automated tests. Strong coverage supports safer refactoring and more reliable releases.

These products reduce the manual burden of writing tests and help teams reach coverage goals that would be difficult to achieve by hand.

Comparison Table: Autonomous Healing vs Suggestion-Based Tools (2026)

| Feature Category | Gitar (Autonomous Healing) | CodeRabbit (AI Code Review) | Claude Code (On-Demand AI Fixer) |
| --- | --- | --- | --- |
| Core functionality | Autonomous CI and code review fixes | AI-generated review comments and summaries | On-demand AI coding and explanations |
| Fixing mechanism | Applies and validates fixes in CI | Provides suggestions; application varies by team | Generates and sometimes applies code with manual control |
| Integration focus | GitHub, GitLab, and multiple CI systems | Git provider integration for pull requests | Editor and project-level workflows |
| Environment context | Full enterprise CI workflow replication | Repository and multi-file context | Project-wide and multi-file understanding |

How To Roll Out Autonomous AI Tools In Your Organization

Enterprises that adopt autonomous AI tools benefit from a deliberate strategy that balances automation with control.

- Build vs buy: Building long-running agents that handle CI events, state, and concurrency requires dedicated architecture and ongoing maintenance. Many teams choose specialized products instead of internal platforms.

- Integration complexity: Tools that support GitHub Actions, GitLab CI, CircleCI, and Buildkite reduce lock-in and align with heterogeneous environments.

- Trust and governance: Capabilities such as role-based access control, audit logs, and flexible deployment options give security and platform teams the oversight they require.

- Developer-in-the-loop workflows: Review steps, clear activity logs, and rollback options help teams keep humans in control while still gaining automation benefits.

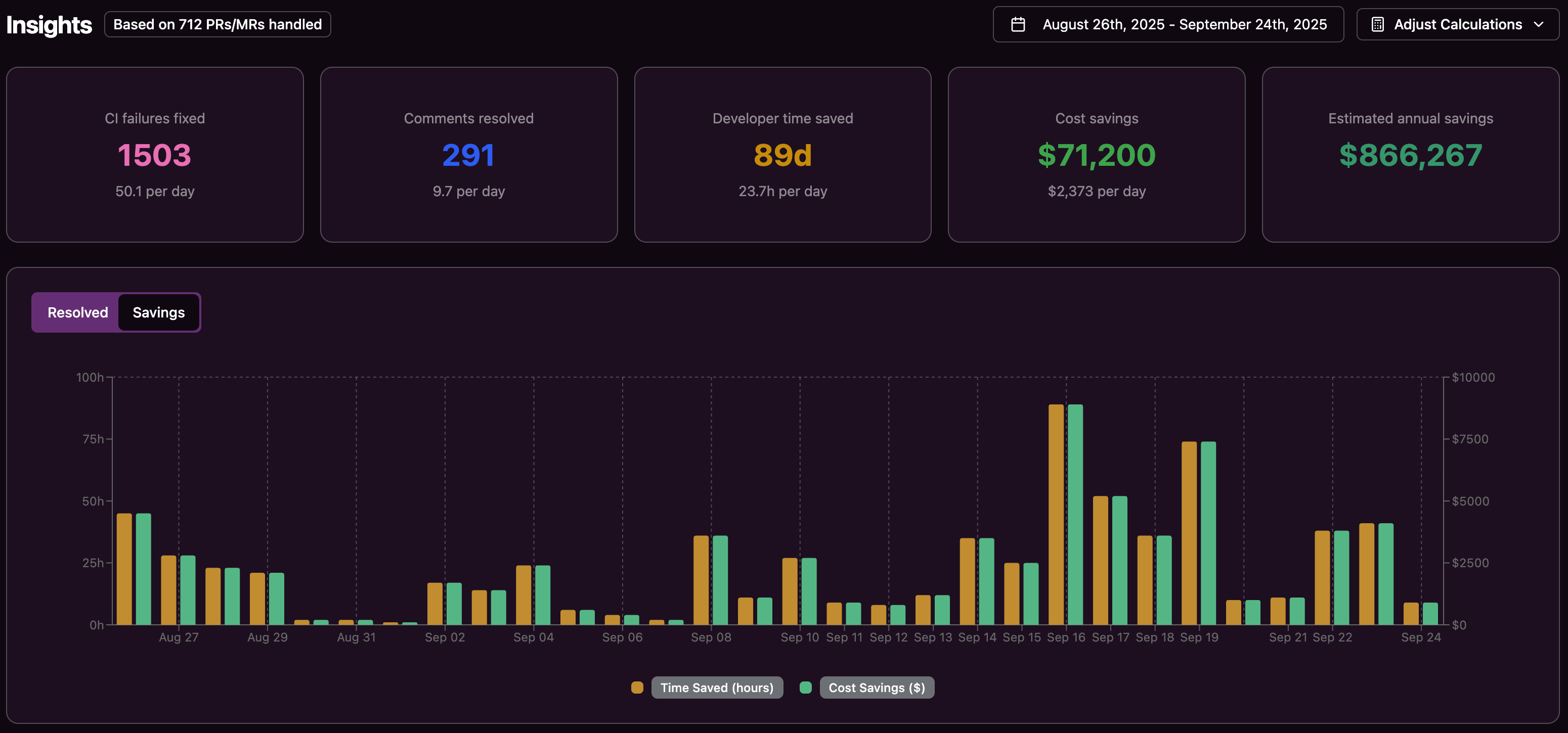

- ROI measurement: Metrics such as time-to-merge, CI failure rate, context-switch frequency, and developer satisfaction scores show whether AI tools deliver value. A 20-developer team that cuts CI and review friction in half can reclaim hundreds of hours per quarter.
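Two of the ROI metrics above, time-to-merge and CI failure rate, are straightforward to compute once pull request and pipeline records are exported. The sketch below assumes a simple record shape (opened and merged timestamps per pull request, a pass/fail flag per CI run); the function names are hypothetical and the sample values are made up for illustration, not measured results.

```python
from datetime import datetime
from statistics import median


def median_time_to_merge_hours(prs: list[tuple[datetime, datetime]]) -> float:
    """Median hours between a pull request being opened and merged."""
    durations = [(merged - opened).total_seconds() / 3600 for opened, merged in prs]
    return median(durations)


def ci_failure_rate(runs: list[bool]) -> float:
    """Share of CI runs that failed; each entry is True when the run passed."""
    return sum(1 for passed in runs if not passed) / len(runs)


# Illustrative sample data only.
sample_prs = [
    (datetime(2026, 1, 5, 9), datetime(2026, 1, 5, 15)),   # merged after 6 hours
    (datetime(2026, 1, 6, 10), datetime(2026, 1, 7, 10)),  # merged after 24 hours
    (datetime(2026, 1, 8, 9), datetime(2026, 1, 8, 11)),   # merged after 2 hours
]
sample_runs = [True, False, True, True]

print(median_time_to_merge_hours(sample_prs))  # 6.0
print(ci_failure_rate(sample_runs))            # 0.25
```

Tracking the same numbers before and after a rollout gives a baseline for judging whether a tool changes outcomes.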

Many organizations follow a three-phase approach: start with suggestion-only modes, expand to partial automation on low-risk repositories, and then enable full autonomous fixing for trusted workflows. Gitar supports this progression with configurable automation levels.
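The three-phase progression can be expressed as a small policy gate. This is a sketch of the idea under stated assumptions, not Gitar's configuration format: `AutomationLevel`, `action_for`, and the two boolean inputs are hypothetical names chosen for illustration.

```python
from enum import Enum


class AutomationLevel(Enum):
    SUGGEST_ONLY = 1  # phase 1: the agent only proposes changes
    PARTIAL = 2       # phase 2: direct commits on low-risk repositories
    FULL = 3          # phase 3: direct commits for trusted workflows as well


def action_for(level: AutomationLevel, low_risk_repo: bool, trusted_workflow: bool) -> str:
    """Return "commit" when the agent may push a fix directly, otherwise "suggest"."""
    if level is AutomationLevel.FULL and trusted_workflow:
        return "commit"
    if level in (AutomationLevel.PARTIAL, AutomationLevel.FULL) and low_risk_repo:
        return "commit"
    return "suggest"


print(action_for(AutomationLevel.SUGGEST_ONLY, low_risk_repo=True, trusted_workflow=True))
print(action_for(AutomationLevel.PARTIAL, low_risk_repo=True, trusted_workflow=False))
print(action_for(AutomationLevel.FULL, low_risk_repo=False, trusted_workflow=True))
```

Defaulting to "suggest" whenever no rule explicitly allows a commit keeps the policy conservative as teams move between phases.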

Common Pitfalls To Avoid With Enterprise AI Developer Tools

- Underestimating context complexity: General-purpose tools often struggle with custom SDKs, internal services, and proprietary integrations. Teams need solutions that replicate full enterprise environments rather than relying only on static analysis.

- Skipping trust mechanisms: Deploying fully autonomous agents without guardrails can create internal resistance. Configurable modes and simple rollback options make adoption smoother.

- Focusing only on individual productivity: Tools that improve local developer experience but ignore CI/CD throughput and time-to-market may not deliver business-level impact.

- Overlooking governance and security: Modern AI workflows need built-in security scanning, policy enforcement, and auditability. These requirements often determine whether a tool passes a security review.

Frequently Asked Questions (FAQ)

How does Gitar differ from AI code reviewers such as CodeRabbit?

Gitar focuses on action rather than suggestions. AI code reviewers highlight potential issues and offer advice, while Gitar aims to generate fixes for CI failures, apply code review feedback as commits, and validate changes in CI so builds move back to a passing state.

Can an AI tool handle complex CI setups with multiple SDKs and external scanners?

Tools designed for enterprise use replicate the full CI environment rather than relying only on static views of the repository. Gitar, for example, targets setups that use specific JDK versions, multiple SDKs, and integrations such as SonarQube or Snyk, so fixes match real pipeline behavior.

How can teams manage trust when an AI agent is allowed to commit code?

Many organizations start with conservative modes where the agent opens pull requests or suggested commits that humans review. Gitar supports configurable aggression levels so teams can move from suggestion-only to direct commits, with clear logs and rollback options to maintain control.

Moving Toward Autonomous Enterprise Development

The 2026 enterprise AI developer tools landscape shows a clear shift from passive assistance to autonomous action. Teams that combine CI-healing agents, AI code reviewers, IDE agents, and test-generation tools can attack systemic bottlenecks rather than only improving typing speed.

Gitar plays a focused role in this stack by turning CI and code review friction into an automated workflow. Engineering leaders who prioritize environment-aware fixes, strong governance, and measurable ROI can use Gitar to move toward self-healing pipelines and faster, more reliable delivery.