Key Takeaways

- GitHub Copilot and similar tools have increased code output, shifting the main bottleneck in 2026 to validation, integration, and review.

- Suggestion-based AI tools help write code but still depend on manual debugging, CI triage, and review, which slows teams down as code volume grows.

- Autonomous “healing engines” can identify, fix, and validate CI failures and review feedback, turning many blocking tasks into background work.

- Teams see the most benefit when they use configurable trust models, start with low-risk automation, and measure impact through time and cost saved.

- Teams that want to reduce CI toil and review overhead can use Gitar to automatically fix broken builds and apply review changes.

How Developer Workflow Optimization Is Changing

GitHub Copilot has shown measurable impact on development productivity, with one case study reporting a 10.6% increase in pull requests and a 3.5-hour reduction in cycle time. Those gains come from faster code generation, not from faster validation.

The main friction now sits in validation and review: as AI generates more code, more of it has to be reviewed and tested before it merges. Teams spend more time triaging CI failures, reading diffs, and resolving feedback.

Suggestion-based AI tools accelerate the first half of the workflow but often add load to the second half, because they still rely on humans to validate, integrate, and fix issues. Autonomous AI agents aim to close this gap by taking responsibility not just for suggesting code, but for ensuring that code passes CI and meets review requirements.

From Suggestion Engines to Self-Healing Workflows

Why Suggestion Engines Leave Gaps

Most AI coding tools operate as suggestion engines. They generate snippets but depend on developers to:

- Provide context and prompts

- Iterate on partial or incorrect outputs

- Monitor for security and compliance risks

- Validate outcomes in tests and CI

- Maintain overall quality control

Developers still perform these five operational roles, even with AI assistance. In practice, teams spend significant time checking and correcting AI output, which limits end-to-end productivity gains.

What a “Healing Engine” Adds

A healing engine shifts from suggestion to resolution. Instead of only proposing changes, it can:

- Detect failures in CI and review

- Diagnose root causes

- Generate and apply code changes

- Re-run checks to validate fixes
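The four steps above form a loop: detect, diagnose, fix, then validate, and either retry or escalate when validation fails. The following Python sketch illustrates that control flow under stated assumptions. Every name in it (`Failure`, `Fix`, `heal`, and the callback parameters) is hypothetical and not part of Gitar or any CI product's API; diagnosis and fix generation are passed in as plain functions so the loop itself stays small and testable.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


# Hypothetical types for illustration only; not Gitar's data model.
@dataclass
class Failure:
    check: str  # name of the failing CI check, e.g. "lint"
    log: str    # raw log output captured from the failing run


@dataclass
class Fix:
    description: str
    patch: str  # a diff or config change; kept opaque in this sketch


def heal(
    failure: Failure,
    diagnose: Callable[[Failure], str],
    propose_fix: Callable[[str, List[Fix]], Optional[Fix]],
    apply_fix: Callable[[Fix], None],
    revert_fix: Callable[[Fix], None],
    rerun_check: Callable[[str], bool],
    max_attempts: int = 3,
) -> Optional[Fix]:
    """Detect -> diagnose -> fix -> validate, with a bounded retry budget.

    Returns the fix that made the check pass, or None when the agent should
    stop and hand the failure to a human.
    """
    root_cause = diagnose(failure)
    tried: List[Fix] = []
    for _ in range(max_attempts):
        # Earlier rejected fixes are passed in so the proposer can try something new.
        fix = propose_fix(root_cause, tried)
        if fix is None:
            break
        apply_fix(fix)
        if rerun_check(failure.check):
            return fix  # validated: the originally failing check now passes
        revert_fix(fix)  # restore the previous state before the next attempt
        tried.append(fix)
    return None


if __name__ == "__main__":
    # Toy run: the check only passes once the second candidate fix is applied.
    applied: List[str] = []
    result = heal(
        Failure(check="lint", log="E501 line too long"),
        diagnose=lambda f: "line-length violation",
        propose_fix=lambda cause, tried: Fix(f"fix #{len(tried) + 1}: {cause}", patch=""),
        apply_fix=lambda fix: applied.append(fix.description),
        revert_fix=lambda fix: applied.remove(fix.description),
        rerun_check=lambda check: applied == ["fix #2: line-length violation"],
    )
    print(result.description if result else "escalate to a human")
    # prints "fix #2: line-length violation"
```

Reverting a rejected fix before the next attempt keeps the branch in a known state, and the bounded `max_attempts` budget is one simple way to ensure the agent hands off to a person instead of looping indefinitely.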

These engines work best on deterministic, well-defined tasks in existing workflows. GitHub’s own platform work has shown autonomous AI handling maintenance tasks such as feature flag cleanup, stale code removal, and targeted error fixes.

How Gitar Works as a CI Healing Engine

Gitar operates as an autonomous AI agent focused on CI and review. It aims to turn failed pipelines and unaddressed review comments into resolved work items without manual intervention.

Teams can choose the level of automation:

- Suggestion mode, where Gitar proposes fixes for one-click approval

- Assisted mode, where Gitar commits changes behind feature flags or branches

- Autonomous mode, where Gitar commits fixes directly with rollback options

This progression lets teams build trust gradually while keeping control over production workflows.
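One way to picture this progression is as a policy that maps the configured mode to an action on a candidate fix. The sketch below is hypothetical and is not Gitar's configuration format; the enum values and action strings are invented for illustration.

```python
from enum import Enum


class AutomationMode(Enum):
    # Mirrors the three levels described above; names are illustrative only.
    SUGGEST = "suggest"        # propose a fix and wait for one-click approval
    ASSISTED = "assisted"      # commit to a separate branch for review
    AUTONOMOUS = "autonomous"  # commit directly, with rollback available


def action_for(mode: AutomationMode, fix_validated: bool, human_approved: bool = False) -> str:
    """Decide what to do with a candidate fix under a given trust level."""
    if not fix_validated:
        # No mode may push a change that failed its own re-run.
        return "report-only"
    if mode is AutomationMode.SUGGEST:
        return "commit-to-pr" if human_approved else "await-approval"
    if mode is AutomationMode.ASSISTED:
        return "commit-to-side-branch"
    return "commit-to-pr-with-rollback"


if __name__ == "__main__":
    for mode in AutomationMode:
        print(mode.value, "->", action_for(mode, fix_validated=True))
```

Checking validation first means that raising the automation level only changes where a verified fix lands, never whether an unverified one can be pushed.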

How Gitar Solves Real CI and Review Problems

Reducing Time Spent in the CI/CD Gauntlet

Gitar does more than surface errors. When CI checks fail because of linting issues, test failures, or build problems, Gitar can:

- Parse logs and configuration to understand the failure

- Reproduce the environment, including SDK versions and dependencies

- Generate and apply code or config changes

- Re-run the affected checks to validate the fix

Gitar models real CI behavior, including concurrent jobs, asynchronous steps, and complex dependency graphs. It can integrate with tools like SonarQube and Snyk so that fixes account for security and quality gates, not just compilation.
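Re-running only "the affected checks" in a pipeline with dependencies means re-running the fixed job and everything downstream of it, in a valid order. A small sketch using Python's standard-library `graphlib` (Python 3.9+) shows the idea; the pipeline layout and the `jobs_to_rerun` helper are hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Hypothetical pipeline: each job maps to the jobs it depends on.
PIPELINE: Dict[str, Set[str]] = {
    "install": set(),
    "lint": {"install"},
    "build": {"install"},
    "unit-tests": {"build"},
    "security-scan": {"build"},
    "deploy-preview": {"unit-tests", "security-scan"},
}


def jobs_to_rerun(pipeline: Dict[str, Set[str]], fixed_job: str) -> List[str]:
    """Return the fixed job plus everything downstream of it, in a valid run order."""
    # Invert the graph so we can walk from a job to the jobs that depend on it.
    dependents: Dict[str, Set[str]] = {job: set() for job in pipeline}
    for job, deps in pipeline.items():
        for dep in deps:
            dependents[dep].add(job)

    # Collect the fixed job and all of its transitive dependents.
    affected: Set[str] = set()
    stack = [fixed_job]
    while stack:
        job = stack.pop()
        if job not in affected:
            affected.add(job)
            stack.extend(dependents[job])

    # Keep only dependencies inside the affected set, then order topologically.
    sub = {job: pipeline[job] & affected for job in affected}
    return list(TopologicalSorter(sub).static_order())


if __name__ == "__main__":
    print(jobs_to_rerun(PIPELINE, "lint"))   # ['lint']
    print(jobs_to_rerun(PIPELINE, "build"))  # build first, deploy-preview last
```

Jobs with no ordering constraint between them (here `unit-tests` and `security-scan`) may appear in either order; `TopologicalSorter` also offers `get_ready()` and `done()` for dispatching such independent jobs concurrently.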

Streamlining Code Review Cycles

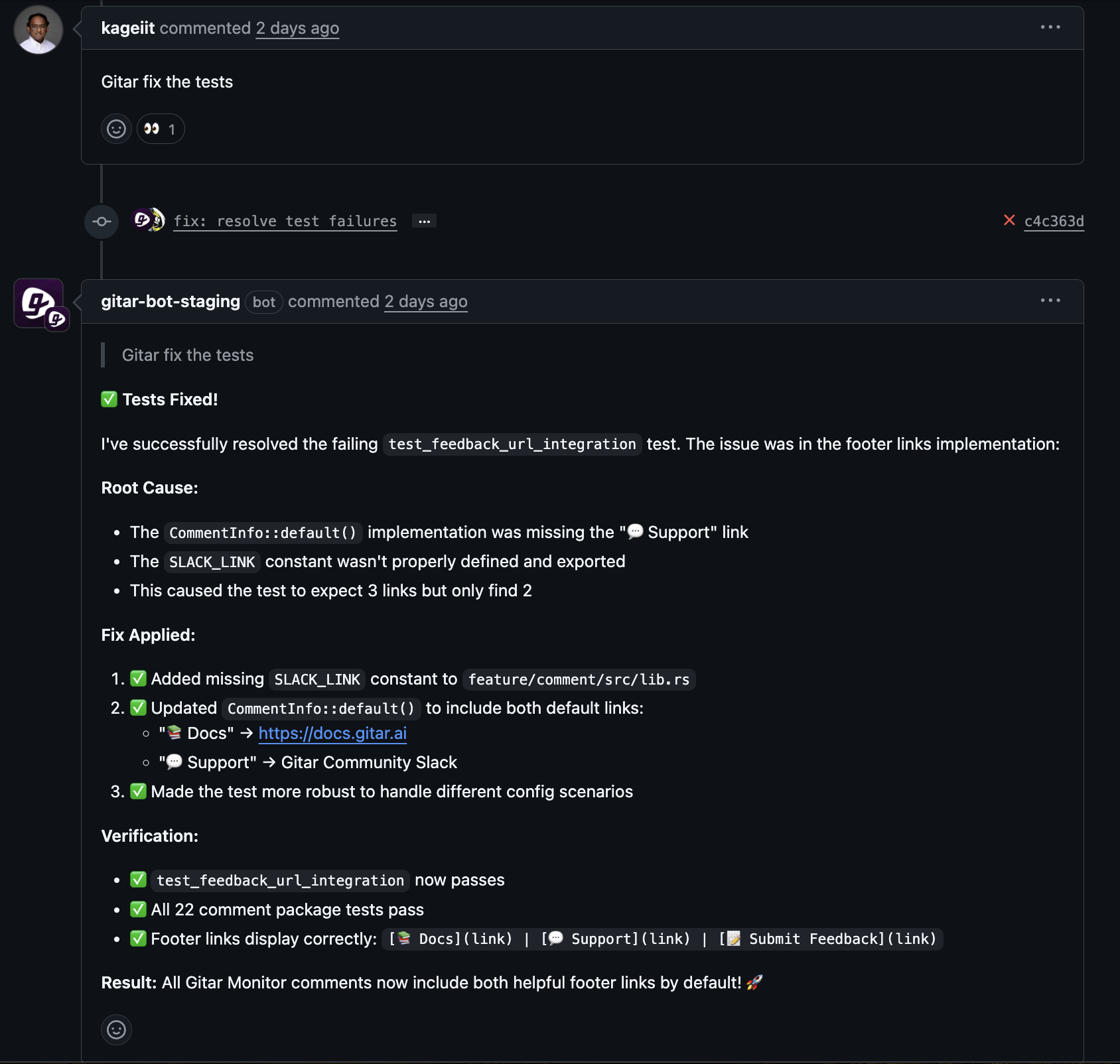

Gitar also participates directly in code review. Reviewers can leave a comment that calls Gitar to:

- Perform an initial review of a pull request

- Implement requested changes

- Refactor code to meet style or architecture guidelines

Gitar then applies changes and commits them with an explanation of what changed and why. This reduces back-and-forth for straightforward feedback such as renaming, small refactors, or test updates.
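The comment-driven workflow relies on two pieces: pulling the request out of a review comment, and writing a commit message that links the change back to that request. The sketch below illustrates both under loud assumptions: the `@review-bot` handle is a placeholder and is NOT Gitar's actual invocation syntax, and the helper names are hypothetical.

```python
import re
from typing import List

# Hypothetical handle; not Gitar's real invocation syntax.
AGENT_HANDLE = "@review-bot"


def extract_requests(comment: str, handle: str = AGENT_HANDLE) -> List[str]:
    """Return the instruction that follows each mention of the agent (rest of that line)."""
    pattern = re.compile(rf"{re.escape(handle)}\b[:,]?[ \t]*(.+)", re.IGNORECASE)
    return [m.group(1).strip() for m in pattern.finditer(comment)]


def commit_message(summary: str, request: str, comment_url: str) -> str:
    """Explain what changed and why, linking back to the triggering comment."""
    return f"{summary}\n\nRequested in review: {request}\nSource: {comment_url}"


if __name__ == "__main__":
    comment = """Looks good overall.
@review-bot rename `fetch` to `fetch_user`
@review-bot: add a test for the empty-list case"""
    for req in extract_requests(comment):
        print(req)
    # rename `fetch` to `fetch_user`
    # add a test for the empty-list case
```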

Distributed teams benefit from this asynchronous collaboration. A reviewer can leave detailed feedback for Gitar at the end of the day, and the updated pull request can be ready when the original author returns.

Balancing Automation With Human Oversight

Trust remains central to any autonomous system. Gitar’s configurable aggression levels allow teams to begin in low-risk modes and expand automation only after observing consistent, safe behavior.

The platform also preserves transparency by providing:

- Readable commit messages

- Clear links from CI failures or comments to specific fixes

- Rollback capabilities and audit trails for all automated changes

These controls help engineering leaders adopt automation without losing visibility into how code changes over time.
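The transparency features listed above amount to keeping a record that ties each automated commit to whatever triggered it. A minimal sketch of such an audit trail follows; the field and method names are hypothetical and do not describe Gitar's data model.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class AuditEntry:
    trigger: str     # what prompted the change: a CI run ID or review-comment URL
    commit_sha: str  # the automated commit
    parent_sha: str  # the commit just before the change
    message: str     # human-readable explanation of what changed and why


class AuditLog:
    def __init__(self) -> None:
        self._entries: List[AuditEntry] = []

    def record(self, entry: AuditEntry) -> None:
        self._entries.append(entry)

    def for_trigger(self, trigger: str) -> List[AuditEntry]:
        """All automated commits made in response to one failure or comment."""
        return [e for e in self._entries if e.trigger == trigger]

    def parent_of(self, commit_sha: str) -> Optional[str]:
        """The state just before an automated change, or None if it is not ours."""
        for e in self._entries:
            if e.commit_sha == commit_sha:
                return e.parent_sha
        return None
```

With the two SHAs recorded, `git diff <parent_sha> <commit_sha>` shows exactly what the agent changed, and `git revert <commit_sha>` undoes that single change without discarding later work.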

Planning Autonomous AI Adoption in Your Workflow

Checking Organizational Readiness

Teams with mature CI/CD pipelines and many services feel the pain of manual triage most acutely. Common signals that you are ready for autonomous help include:

- Developers spending significant time on flaky or repetitive CI issues

- Merge times stretching because reviews occur across time zones

- High cost from rerunning pipelines after small fixes

Distributed organizations often see fast wins because autonomous agents remove idle time between comments, fixes, and re-runs.

Comparing Build vs. Buy for CI Automation

Building an internal CI agent requires sustained engineering effort. The system must manage state across long-running workflows, handle concurrent and asynchronous steps, and integrate with multiple CI platforms and internal tools.

Gitar offers this as a managed platform that connects to GitHub, GitLab, CircleCI, Buildkite, and other common systems. This reduces the need for custom infrastructure while providing immediate coverage for common failure modes.

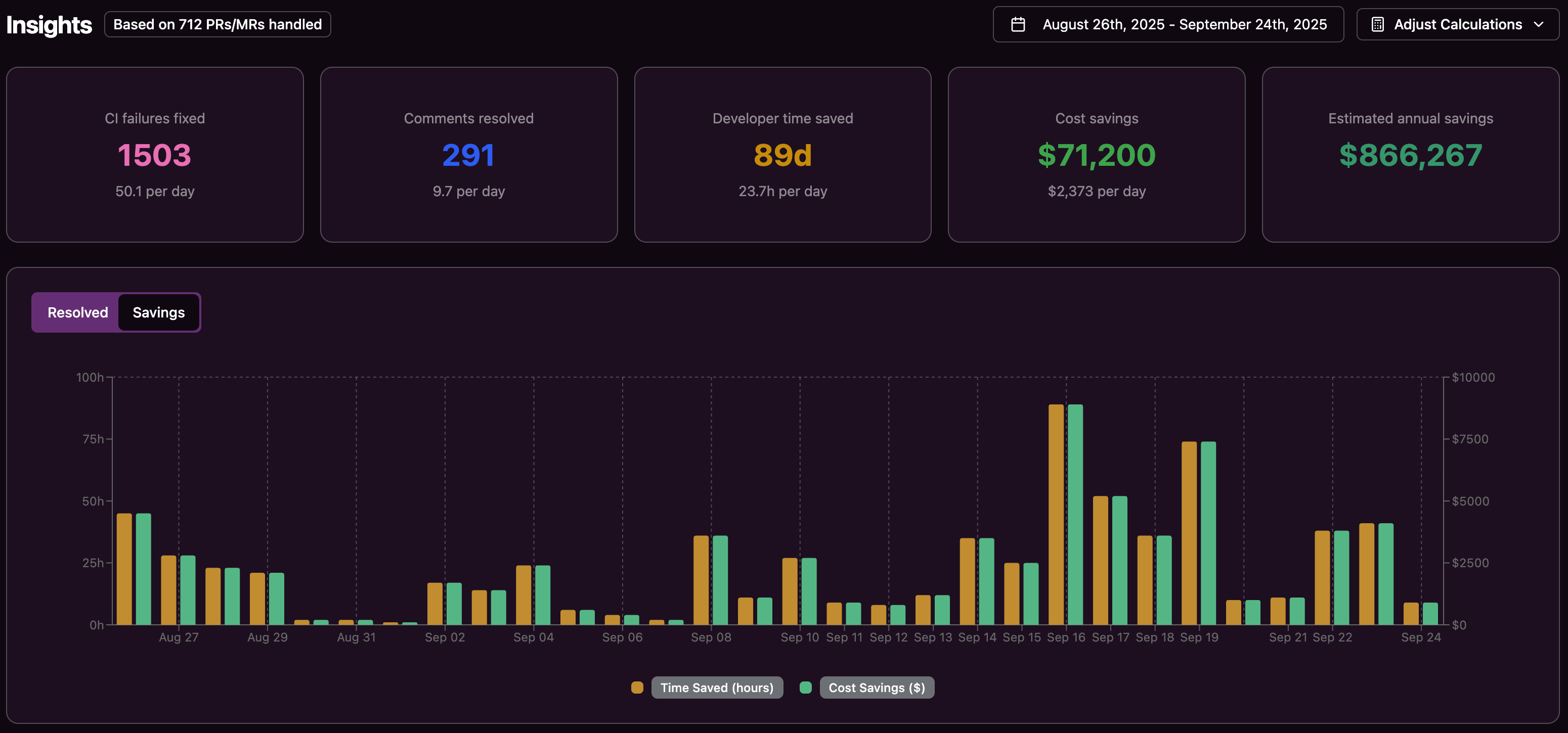

Measuring ROI and Business Impact

For a 20-developer team that spends an hour per day on CI failures and review-related fixes, the annual productivity cost can approach $1 million when using loaded salary rates common in software engineering.

If automation cuts that time in half, the potential savings can reach $500,000 per year, alongside secondary benefits such as improved developer satisfaction and faster delivery of product work.
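The figures above do not state the working days or hourly rate behind the $1 million estimate. Assuming about 250 working days, 20 developers losing an hour a day adds up to roughly 5,000 hours a year, which implies a loaded rate of about $200 per hour. A short Python sketch makes the arithmetic explicit so teams can substitute their own numbers; the 250-day year and the function names are assumptions.

```python
WORKING_DAYS_PER_YEAR = 250  # assumption: not stated in the article


def annual_cost(developers: int, hours_per_day: float, loaded_rate: float,
                working_days: int = WORKING_DAYS_PER_YEAR) -> float:
    """Yearly cost, in dollars, of time spent on CI failures and review fixes."""
    return developers * hours_per_day * working_days * loaded_rate


def implied_hourly_rate(annual_cost_usd: float, developers: int, hours_per_day: float,
                        working_days: int = WORKING_DAYS_PER_YEAR) -> float:
    """Loaded hourly rate needed to reach a given annual cost figure."""
    return annual_cost_usd / (developers * hours_per_day * working_days)


if __name__ == "__main__":
    rate = implied_hourly_rate(1_000_000, developers=20, hours_per_day=1.0)
    print(rate)                        # 200.0
    print(annual_cost(20, 1.0, rate))  # 1000000.0
    print(annual_cost(20, 0.5, rate))  # 500000.0 (time cut in half)
```

Plugging in your own team size, measured hours, and loaded rate turns the estimate into a number you can check against actual CI and review data.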

Comparing Workflow Optimization Options

| Solution Type | Integration Effort | Problem Solved | Automation Level |
|---|---|---|---|
| Manual processes | None | Ad hoc debugging and review | Fully manual |
| AI code reviewers | Low | Code quality suggestions | Suggestions only |
| IDE assistants (Copilot) | Low | Code generation | Suggestions with human validation |
| Gitar (healing engine) | Low | CI fixes and review implementation | Autonomous with controls |

Addressing Common Concerns About Autonomous Workflow Tools

“We already use AI reviewers.”

AI reviewers help identify issues but stop at the suggestion stage. Productivity gains from tools like Copilot have also increased the amount of code needing validation, which can strain review and CI capacity.

Gitar aims to close this gap by implementing and validating fixes within full CI workflows. The difference lies between highlighting work for developers and completing that work on their behalf.

“We do not fully trust automated fixes.”

Concerns about safety and correctness are reasonable. Gitar addresses them with phased adoption, starting with suggestion-only or approval-required modes. Teams can verify outcomes before moving toward more autonomous behavior.

Persistent audit trails, rollback options, and human-readable change logs give teams a clear view of what the agent does and how it affects builds.

“Our CI setup is too complex.”

Complex pipelines are where automation often delivers the most value. Gitar replicates enterprise workflows, including specific SDK versions, multi-language builds, and integrations with security scanners.

Support for GitHub Actions, GitLab CI, CircleCI, Buildkite, and other systems helps teams avoid vendor lock-in while still gaining autonomous fixing capabilities.

Frequently Asked Questions

How does GitHub Copilot create new bottlenecks in the developer workflow?

GitHub Copilot accelerates code creation, which increases the number of pull requests and changes that need review. Teams then manage more CI runs, more test failures, and more feedback cycles. The workload shifts from writing code to moving that code safely through checks and into production.

Why are traditional AI code review tools not enough in the post-Copilot era?

Traditional AI review tools focus on comments and suggestions. Developers still perform the actual edits, debug failures, and re-run pipelines. In environments with high code volume, this keeps context-switching and manual toil high. Autonomous agents like Gitar aim to reduce this work by both changing the code and validating the results in CI.

What is a “healing engine” in developer workflows?

A healing engine is an autonomous AI system that detects issues, generates fixes, applies changes, and validates results across CI and review workflows. It focuses on self-healing behavior so that common failures resolve automatically, rather than waiting for human intervention.

Does an autonomous AI agent like Gitar interfere with a developer’s flow state?

Gitar runs alongside existing workflows and focuses on background tasks like fixing failing builds or applying straightforward review feedback. Developers can stay in their primary work context while Gitar handles repetitive triage and correction tasks.

How does Gitar handle complex enterprise CI environments?

Gitar reproduces full environments, including language runtimes, SDK versions, dependencies, and security tools such as SonarQube and Snyk. It then uses those environments to test candidate fixes so changes align with the exact CI configuration you use across GitHub Actions, GitLab CI, CircleCI, Buildkite, and similar platforms.

Conclusion: Moving From Assistance to Autonomous Resolution

Developer productivity in 2026 depends less on how quickly teams can write code and more on how efficiently they can validate, integrate, and ship it. Suggestion-based AI tools help with the first part of that journey but often leave the heaviest work in CI and review untouched.

Autonomous AI agents, and healing engines in particular, focus on this gap. Gitar’s approach to self-healing CI and automated review implementation turns many failures and feedback items into background tasks, so developers can spend more time on product work and less on pipeline triage.

Teams facing rising CI costs, slower merge times, and growing review queues can evaluate autonomous tools as a way to streamline validation and integration without building complex internal systems.

To see this approach in your own workflows, install Gitar and start automatically fixing broken builds.