Key Takeaways

- Modern development teams generate more code than their CI systems can efficiently validate, so autonomous agents that fix broken builds now directly influence release speed and engineering ROI.

- Suggestion-only tools reduce analysis effort but keep developers in the loop for implementation, while healing engines apply and validate fixes so engineers see ready-to-merge green builds.

- Successful autonomous CI remediation depends on mature pipelines, a clear trust model, and careful change management that starts with low-risk fixes and grows over time.

- Engineering leaders can avoid common pitfalls by resisting over-engineered internal tools, planning for cross-platform support, and measuring impact with clear, shared metrics.

- Teams can introduce an autonomous CI agent like Gitar to fix broken builds and resolve review feedback by adding it to their repositories through a simple installation flow on the Gitar site.

Why Build-Fixing AI Agents Matter In Modern CI

Software teams now ship more code than ever, yet CI pipelines often block delivery instead of enabling it. Tools such as GitHub Copilot increase pull request volume, test runs, and opportunities for failures. For a 20-developer team, CI failures and code review delays can cost around $1 million per year in lost productivity, which makes CI health a leadership concern rather than a minor tooling annoyance.

Manual debugging, static pipeline scripts, and suggestion-only AI helpers treat symptoms instead of the core problem. Teams need agents that detect failures, understand context, apply changes, and confirm that pipelines pass, all without constant human intervention.

Two Approaches: Suggestion Engines vs. Healing Engines

Most CI-focused tools now fall into two categories that shape how much work stays on developers’ plates.

Suggestion engines that still rely on developers

Suggestion engines, including AI code review tools such as CodeRabbit, inspect code and leave comments or snippets for engineers to apply. These tools can help with:

- Static analysis findings and style issues

- Simple bug patterns and anti-patterns

- Basic refactoring suggestions

Developers still need to interpret each suggestion, edit the code, push commits, and wait for CI feedback. Context switching between implementation work and CI repair remains a major drag on focus and throughput.

Healing engines that deliver green builds

Healing engines change the workflow. An agent such as Gitar reads logs, understands the CI environment, generates fixes, and pushes commits directly to the branch. The agent then re-runs the pipeline and only notifies developers when checks succeed.

This model removes manual steps and hands completed work back to the team:

- Failures are detected and understood from logs, configs, and tests.

- Fixes are applied directly to the code with minimal developer input.

- Pipelines re-run until success or a clear escalation condition.

Suggestion engines shift labor from finding issues to implementing fixes, while healing engines remove that work from the critical path.
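The detect, fix, and re-run cycle described above can be sketched as a small loop. This is a minimal illustration under stated assumptions, not the implementation of Gitar or any other product: `heal`, `HealResult`, `run_pipeline`, `apply_fix`, and the `max_attempts` limit are hypothetical names, and the escalation condition is assumed to be "too many attempts or no fix available".

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class HealResult:
    status: str    # "green" or "escalated"
    attempts: int  # number of fixes that were applied


def heal(
    run_pipeline: Callable[[], Optional[str]],
    apply_fix: Callable[[str], bool],
    max_attempts: int = 3,
) -> HealResult:
    """Re-run the pipeline until it passes or an escalation condition is met.

    run_pipeline (hypothetical) returns None on success or a failure log.
    apply_fix (hypothetical) returns False when no fix could be generated.
    """
    attempts = 0
    while True:
        failure_log = run_pipeline()
        if failure_log is None:
            return HealResult("green", attempts)
        # Escalate to a human when the budget is spent or no fix exists.
        if attempts >= max_attempts or not apply_fix(failure_log):
            return HealResult("escalated", attempts)
        attempts += 1


# Simulated pipeline: fails twice, then passes.
logs = iter(["lint error", "test failure", None])
print(heal(lambda: next(logs), lambda log: True))
```

Bounding the loop is the important design choice here: without an attempt limit, an agent that keeps producing ineffective fixes would consume CI capacity indefinitely instead of handing the problem back to a developer.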

What Real-World CI Agents Must Handle

Effective agents for CI remediation must operate in noisy, concurrent environments rather than in idealized demos.

Practical agents need to handle:

- Concurrent builds and users across many repositories

- Events arriving out of order from multiple CI systems

- Multi-stage, parallel workflows that share artifacts and state

- Retries, transient failures, and duplicate events

- Long-running branches and PRs that evolve over days or weeks

These requirements demand robust state management, environment replication, and clear rules about when the agent should act, pause, or hand work back to humans.
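As one illustration of the state management this requires, the sketch below drops duplicate deliveries and ignores events that arrive after a newer event for the same run. It assumes each event carries a unique delivery id and a per-run sequence number; `CIEvent`, `RunTracker`, and their fields are hypothetical, and real CI systems expose different payloads.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass(frozen=True)
class CIEvent:
    event_id: str  # unique delivery id (assumed to exist)
    run_id: str    # pipeline run the event belongs to
    sequence: int  # increases with each state change of a run (assumed)
    status: str    # e.g. "running", "failed", "passed"


@dataclass
class RunTracker:
    seen: Set[str] = field(default_factory=set)
    latest: Dict[str, CIEvent] = field(default_factory=dict)

    def accept(self, event: CIEvent) -> bool:
        """Return True only if the event advances the state of its run."""
        if event.event_id in self.seen:
            return False  # duplicate delivery
        self.seen.add(event.event_id)
        current = self.latest.get(event.run_id)
        if current is not None and event.sequence <= current.sequence:
            return False  # stale: a newer event was already processed
        self.latest[event.run_id] = event
        return True


tracker = RunTracker()
failed = CIEvent("evt-2", "run-1", 2, "failed")
running = CIEvent("evt-1", "run-1", 1, "running")
print(tracker.accept(failed), tracker.accept(running), tracker.accept(failed))
```

In this example the "failed" event arrives first, so the late "running" event and the repeated "failed" delivery are both rejected, and the agent acts on the failure only once.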

Build vs. Buy: When Internal CI Agents Make Sense

Engineering leaders often consider building internal agents as an extension of existing automation. The idea can look simple: wire a large language model like Claude into CI, feed logs into prompts, and apply patches.

In practice, maintaining a homegrown agent usually requires:

- Custom orchestration and state storage for events and runs

- Environment replication for language versions, SDKs, and tools

- Prompt design, safety rails, and continuous evaluation

- Security reviews, access controls, and audit trails

Teams succeed with internal builds when they have dedicated platform and ML engineering capacity and a clear mandate. Many other organizations find that total cost of ownership and opportunity cost exceed the price of a focused commercial solution.

Designing Trust And Risk Controls For Autonomous Fixes

Technical capability alone does not determine adoption. Trust in autonomous changes to production code plays a larger role.

Practical trust models usually progress through clear stages:

- Observation mode, where the agent only proposes fixes in comments.

- Assisted mode, where the agent opens PRs that need approval.

- Autonomous mode for low-risk categories such as formatting and linting.

- Expanded autonomy for selected services or repositories, backed by rollback plans.

Transparency, clear logs, and simple ways to revert or disable the agent help developers stay comfortable while automation increases.
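The staged model above can be expressed as a small policy function that decides how far the agent may go for a given failure. This is a sketch under assumptions: the `Mode` names, the `LOW_RISK` set, the `repo_opted_in` flag, and the returned action strings are all hypothetical, and a real deployment would likely load them from configuration.

```python
from enum import Enum


class Mode(Enum):
    OBSERVE = 1     # propose fixes in comments only
    ASSIST = 2      # open PRs that need approval
    AUTONOMOUS = 3  # commit low-risk fixes directly
    EXPANDED = 4    # commit any fix in opted-in repositories


# Categories treated as low risk in this sketch.
LOW_RISK = {"formatting", "linting"}


def decide_action(mode: Mode, category: str, repo_opted_in: bool = False) -> str:
    """Map a trust stage and failure category to the most the agent may do."""
    if mode is Mode.OBSERVE:
        return "comment"
    if mode is Mode.ASSIST:
        return "open_pr"
    if category in LOW_RISK:
        return "commit"
    if mode is Mode.EXPANDED and repo_opted_in:
        return "commit"
    # Anything riskier falls back to human approval.
    return "open_pr"


print(decide_action(Mode.AUTONOMOUS, "formatting"))
print(decide_action(Mode.AUTONOMOUS, "test_failure"))
```

Keeping the policy in one function makes the current level of autonomy easy to audit and easy to dial back, which supports the transparency and revert options described above.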

Meeting Enterprise Integration And Compliance Needs

Enterprise environments impose more constraints than small projects do. Pipelines often span multiple languages, SDKs, and platforms, and incorporate tools like SonarQube, Snyk, and organization-specific security scanners.

Effective CI agents for these settings should:

- Respect existing permissions and code ownership rules

- Work across CI platforms rather than one hosted provider

- Reproduce complex environments with the right JDKs, SDKs, and dependencies

- Produce auditable records of every change and decision

Solutions locked to a single platform or provider can create future migration risks and vendor dependence.

How Gitar Helps Teams Fix Broken Builds Automatically

Gitar focuses on practical CI remediation that returns green builds and resolved review comments to developers.

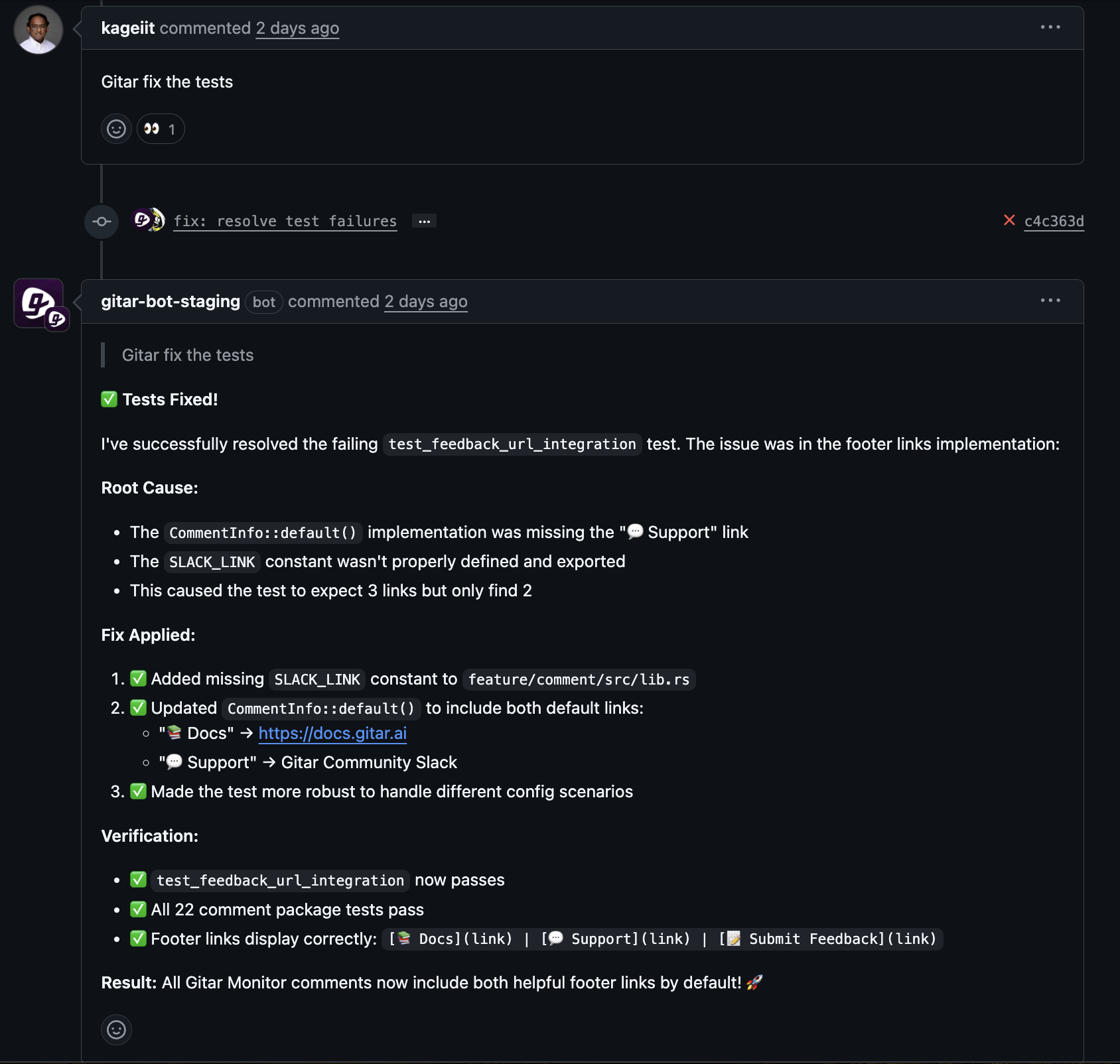

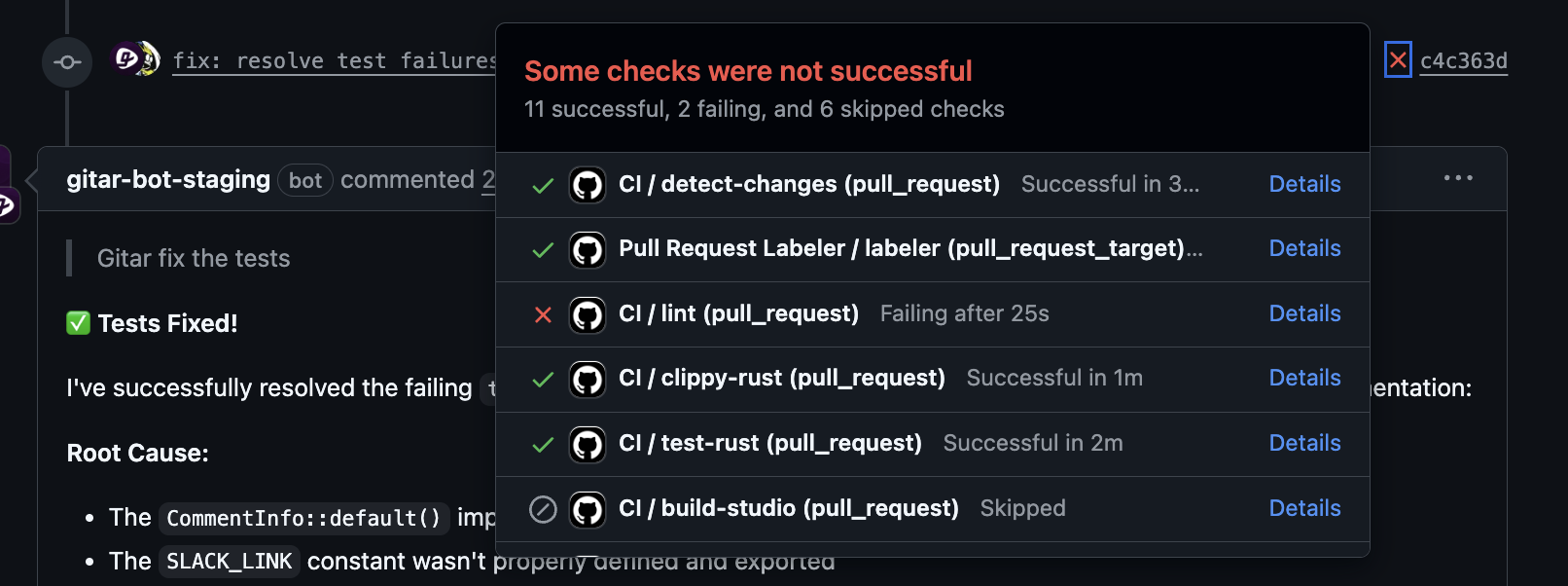

What Gitar’s agent does in your pipelines

The Gitar agent watches for CI failures and review feedback on pull requests, then:

- Analyzes logs, configs, and code to identify the root cause

- Generates a targeted code or config change

- Commits the fix directly to the branch

- Re-runs the pipeline to confirm that checks pass

Typical issues include:

- Linting and formatting violations

- Test failures that stem from code or data issues

- Build configuration and dependency errors

Environment replication allows Gitar to mirror enterprise workflows so fixes succeed under the same conditions that production code runs in.

Support for distributed and asynchronous teams

Global teams frequently lose days to simple back-and-forth changes. A reviewer leaves a comment at the end of their day, and the original author resolves it many hours later.

Gitar shortens this loop by:

- Acting on review comments that request specific changes

- Applying and validating fixes while teammates are offline

- Leaving explanations so reviewers can quickly verify updates

The result is shorter PR lifecycles, fewer context switches, and more time spent on design and complex work instead of small fixes.

Assessing Readiness For Autonomous CI Remediation

Organizations gain the most from CI agents when they have baseline practices in place and clear outcomes in mind.

Infrastructure and pipeline maturity

Teams are ready for autonomous remediation when they have:

- Consistent CI/CD pipelines and branching strategies

- Reasonable test coverage for critical paths

- Stable environments with clear configuration management

Teams whose builds fail frequently because of flaky infrastructure or missing tests often need to address these foundations before adding an autonomous agent.

Change management and developer experience

Cultural readiness matters as much as tooling. Developers who value control over every change can feel uneasy about automated commits. Successful rollouts typically:

- Start with low-risk categories such as formatting and linting

- Use suggestion modes first, then expand to autonomous fixes

- Share metrics on reduced CI toil and faster merges

Developer satisfaction, focus time, and perceived reliability are as important as pure throughput numbers.

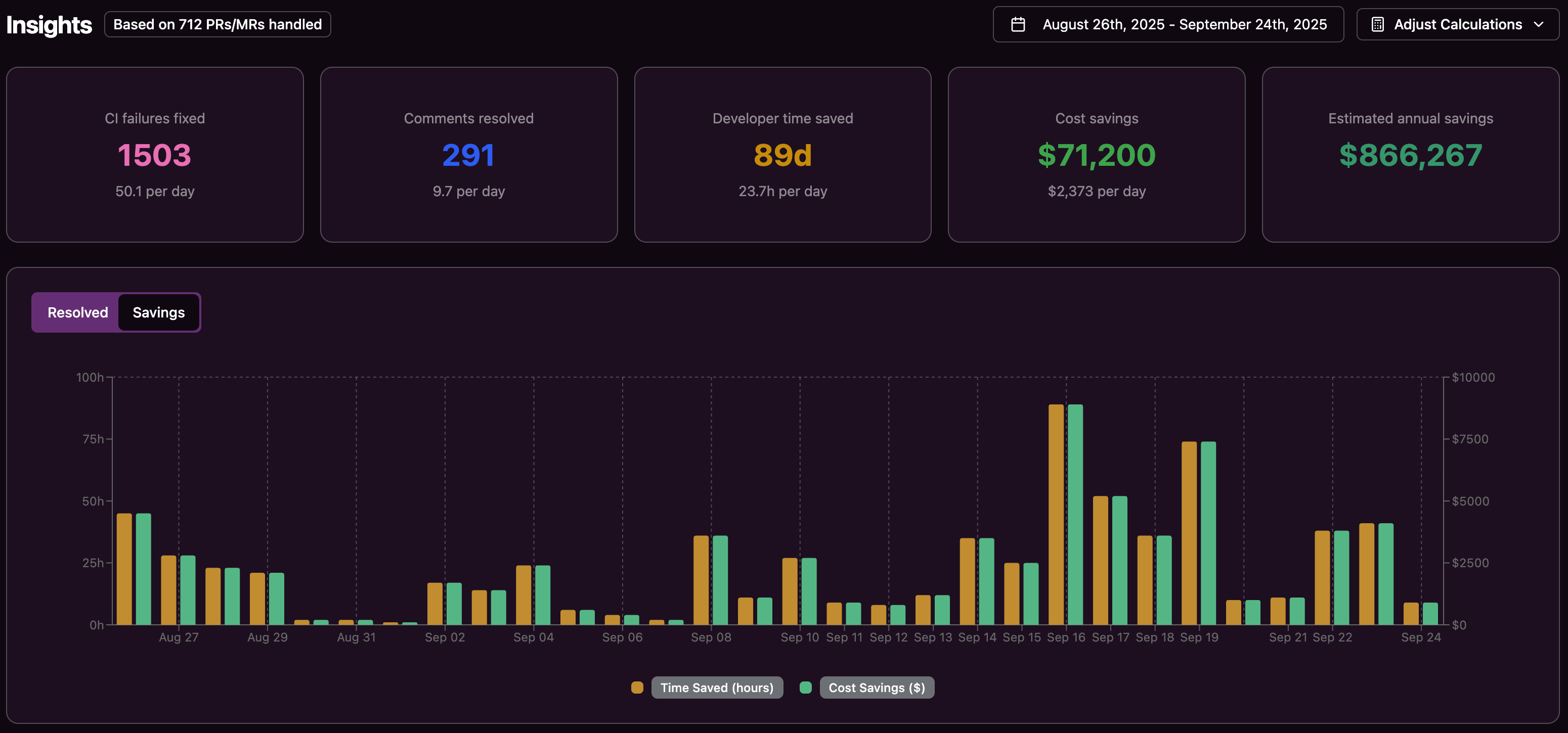

Metrics that show impact

Clear metrics help leadership and teams align on value. Common choices include:

- Average time to fix failing builds

- Number of CI failures resolved without human intervention

- Time from pull request open to merge

- Estimated developer hours recovered from CI toil

Enterprises may also track security and compliance metrics tied to automated fixes.
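Two of these metrics, average time to fix and the share of failures resolved without human intervention, can be computed from a simple record of failures. The sketch below assumes such records are available; the `Failure` type, its fields, and the sample timestamps are hypothetical and exist only to make the example runnable.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean
from typing import List


@dataclass
class Failure:
    failed_at: datetime   # when the build went red
    fixed_at: datetime    # when the build went green again
    fixed_by_agent: bool  # True if no human intervention was needed


def mean_time_to_fix(failures: List[Failure]) -> timedelta:
    """Average time from a failing build to the next passing build."""
    seconds = mean([(f.fixed_at - f.failed_at).total_seconds() for f in failures])
    return timedelta(seconds=seconds)


def autonomous_rate(failures: List[Failure]) -> float:
    """Fraction of failures resolved without human intervention."""
    return sum(f.fixed_by_agent for f in failures) / len(failures)


# Made-up sample data for illustration only.
start = datetime(2026, 1, 5, 9, 0)
sample = [
    Failure(start, start + timedelta(minutes=10), True),
    Failure(start, start + timedelta(minutes=30), False),
]
print(mean_time_to_fix(sample), autonomous_rate(sample))
```

Both functions expect a non-empty list. Tracking the two numbers together matters because a high autonomous rate is only useful if time to fix also falls.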

Common Pitfalls When Introducing CI Agents

Experienced teams often encounter similar issues when they first introduce autonomous CI remediation.

Underestimating long-term maintenance of custom agents

Platform teams that have successfully built scripts and bots sometimes assume an agent is just another script. Over time, they face growing complexity around state handling, concurrency, environment setup, and security. The maintenance burden can compete with roadmap work and delay other initiatives.

Skipping trust-building stages

Rolling out fully autonomous changes on day one can generate strong resistance, even if accuracy is high. A more sustainable pattern introduces automation in narrow, visible ways and expands only after teams see reliable results.

Accepting early platform lock-in

Solutions that tie deeply to a single code host or CI provider can limit future flexibility. Teams benefit from considering how their stack may evolve and whether their CI agent can follow.

Looking Ahead: Autonomous CI As A Core Practice

Autonomous CI remediation now sits alongside version control, automated testing, and continuous delivery as a core practice for high-velocity engineering teams. As AI-assisted coding continues to increase code volume in 2026, the organizations that scale review and validation with agents will move work through their pipelines more predictably.

Frequently Asked Topics About CI-Focused AI Agents

Quality assurance for automatic fixes from AI agents

Agents such as Gitar evaluate failures using logs, configs, and environment details, then generate focused fixes. The agent runs the full CI pipeline to confirm that existing tests still pass and that no new failures appear. This closed loop helps ensure that changes behave correctly in the same environment that production code relies on.

CI failure types that autonomous agents handle most effectively

Modern agents handle many issues that interrupt developers but rarely require deep product knowledge. Common categories include formatting and lint errors, dependency and version problems, simple test failures with clear assertions, and build script issues. These are frequent sources of noise and delay, making them strong candidates for automation.
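A first step toward automating these categories is deciding which one a failure belongs to. The sketch below does this with a few regular expressions over the failure log. It is a deliberately simple illustration: the category names and patterns are assumptions chosen for the example, and an actual agent would likely use far richer signals than keyword matching.

```python
import re
from typing import List, Tuple

# Ordered rules: the first matching pattern decides the category.
# Patterns are illustrative and far from exhaustive.
RULES: List[Tuple[str, "re.Pattern[str]"]] = [
    ("lint_or_format", re.compile(r"\b(eslint|flake8|prettier|lint)\b", re.I)),
    ("dependency", re.compile(r"ModuleNotFoundError|version conflict", re.I)),
    ("test_failure", re.compile(r"AssertionError|\d+ tests? failed", re.I)),
    ("build_script", re.compile(r"make: \*\*\*")),
]


def classify(log: str) -> str:
    """Return the first matching category, or hand off to a human."""
    for category, pattern in RULES:
        if pattern.search(log):
            return category
    return "needs_human"


print(classify("flake8: E501 line too long"))
print(classify("Segmentation fault (core dumped)"))
```

Returning an explicit "needs_human" category for anything unrecognized keeps the agent from attempting fixes outside the narrow classes where automation is most reliable.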

Security, compliance, and audit considerations

Enterprise-ready agents operate within existing identity and access controls, inherit repository permissions, and record each action they take. Many deployments layer approval workflows on top of the agent so that higher-risk changes still receive human review. Integration with existing scanning and compliance tools helps ensure that automated fixes do not bypass security requirements or policy checks.

Key differences between AI agents and traditional CI automation tools

Traditional CI automation relies on static scripts and rules that handle known patterns but do not adapt well to new situations. AI agents reason about context, propose or apply changes, and learn from feedback over time. This capability allows them to cover a wider range of failure modes without bespoke scripting for every case.

Practical steps for moving from manual fixes to autonomous agents

Teams often begin with suggestion-only modes to understand how an agent behaves in their environment. After confirming that suggestions are accurate, they enable automatic fixes for narrowly scoped categories, then broaden coverage as confidence grows. Throughout this process, developers remain responsible for design and complex decisions, while the agent handles repetitive CI repair work.