Key Takeaways

- Manual continuous integration (CI) work, including fixing broken builds and responding to review comments, slows release cycles and disrupts developer focus.

- Autonomous AI agents can replicate your CI environment, apply fixes, and validate results, which reduces context switching and shortens feedback loops.

- Distributed teams benefit from AI that implements review feedback overnight, so pull requests move forward without waiting on time zone overlaps.

- A phased rollout, clear metrics, and configurable trust levels help teams adopt AI in CI while maintaining control and code quality.

- Gitar applies and validates CI fixes for you, so your team ships faster with fewer interruptions. See how Gitar can fix your CI issues automatically.

The Bottleneck: Why Manual CI Slows Release Cycles

Manual CI work creates a visible bottleneck in modern software delivery. A pull request that looks ready to merge often hits a red build caused by a flaky test, dependency error, or style violation. The developer then stops feature work, opens logs, reproduces the issue locally, and reruns the pipeline.

Developers can spend up to 30% of their time dealing with CI and code review issues. Even at one hour per developer per day, that work can cost a 20-person team close to $1 million per year in lost productivity.

AI-assisted coding tools generate more code, which increases pull request volume and test runs. When CI remains manual, this extra code pushes more work to the right side of the pipeline, where validation, fixes, and review often stall.

Teams that rely on reactive CI work experience cascading delays, slower releases, and lower morale. Gitar addresses this by fixing broken builds without waiting on a developer.

Self-Healing CI: How Autonomous AI Accelerates Releases

Autonomous AI in CI shifts work from manual debugging to self-healing pipelines. Gitar runs as an always-on agent that investigates failures, crafts fixes, and validates them in your CI environment.

Core capabilities that improve release speed include:

- End-to-end fixing that applies, tests, and finalizes CI failure resolutions instead of only suggesting code changes.

- Environment replication that mirrors your CI stack, including SDK versions, dependencies, and third-party tools.

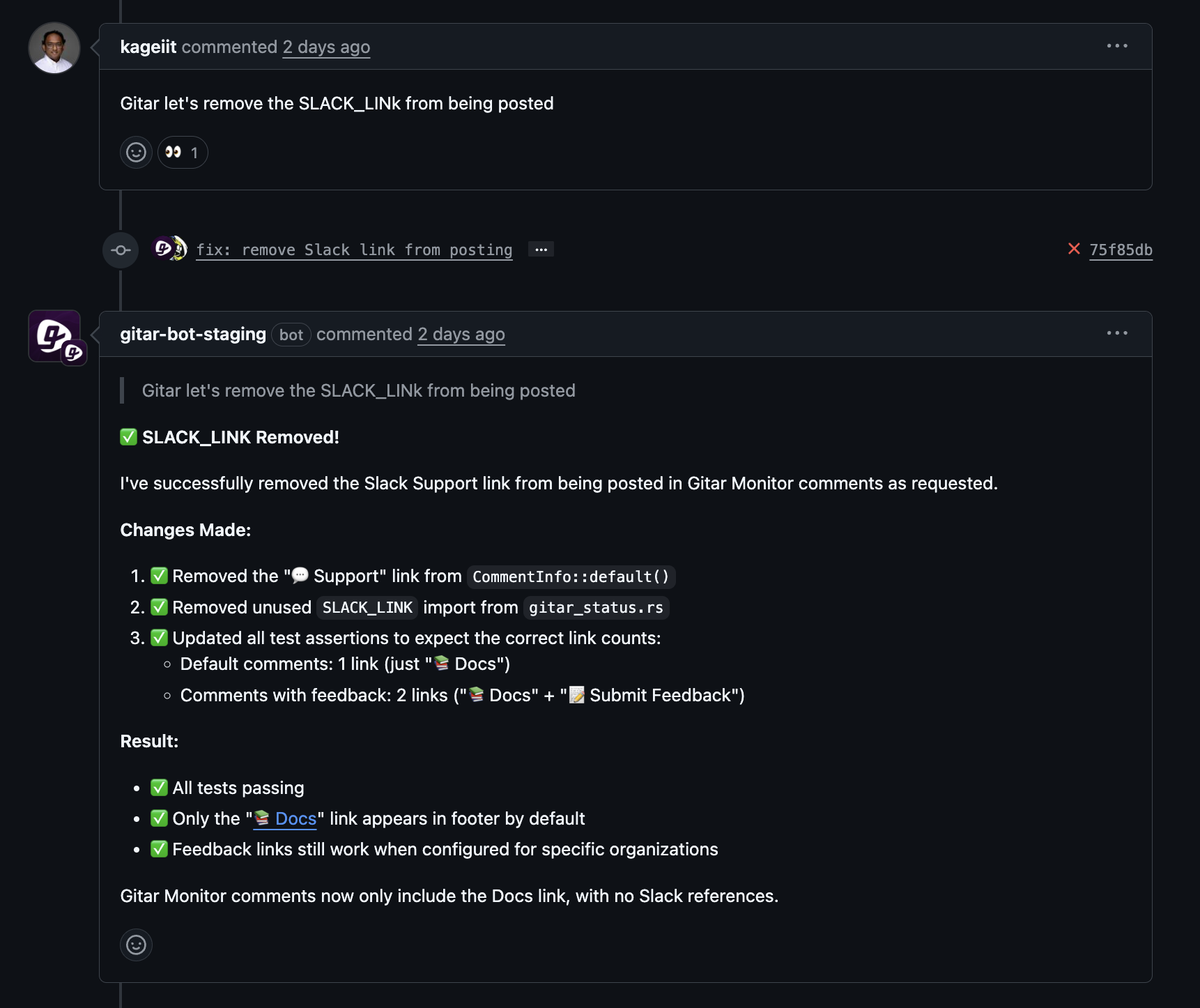

- Code review assistance that implements reviewer comments, reducing back-and-forth cycles.

- Support for common CI platforms so teams do not need to redesign existing pipelines.

- Configurable trust modes that range from suggestion-only to auto-commit, aligned with your risk tolerance.

This model keeps pipelines moving even when developers are focused elsewhere, so releases do not wait on small but frequent CI tasks.

How AI Changes CI Day to Day

Reducing Context Switching During CI Failures

Context switching erodes deep work time. One analysis shows that refocusing after an interruption can take more than 20 minutes, so a quick CI fix often consumes an entire hour of productive work.

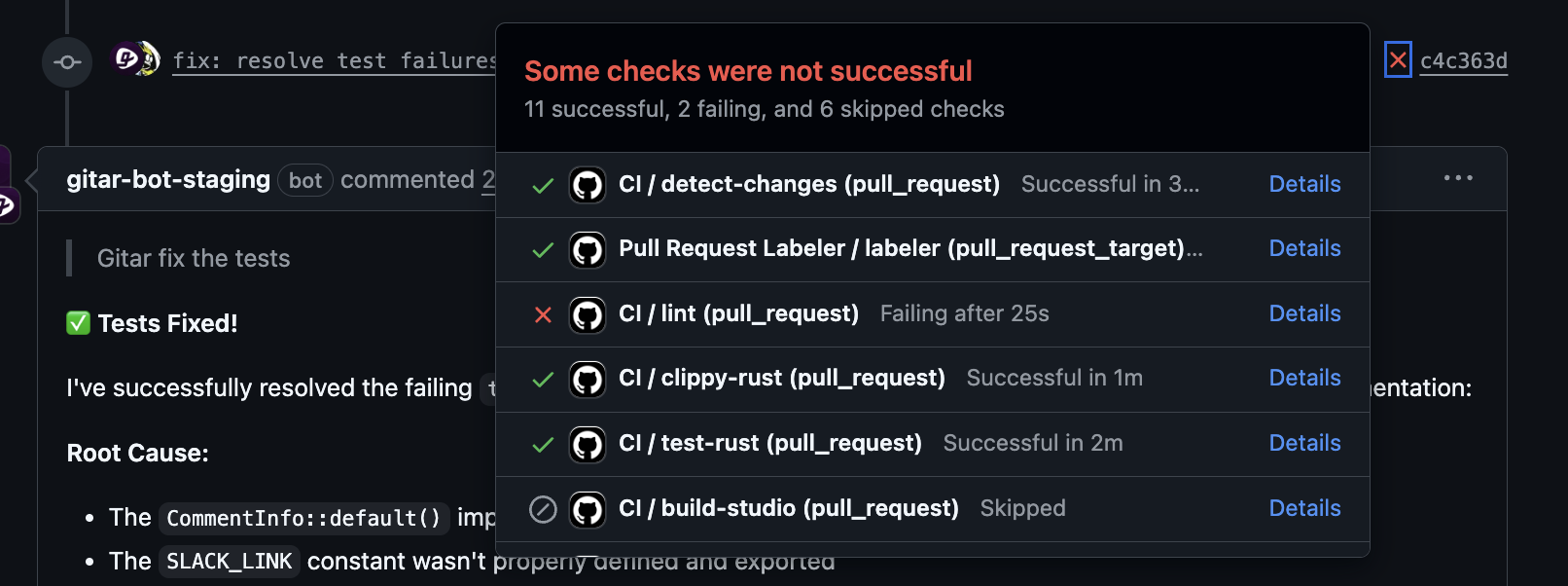

When a build fails, Gitar inspects logs, identifies the root cause, drafts a fix, and runs tests in a replicated CI environment. It then commits the change or, in suggestion-only mode, posts it for a developer to approve. Developers stay in their current task instead of jumping into emergency debugging.
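To make the first step of that flow concrete, here is a minimal sketch of log triage: sorting a failed build's log into a coarse category before any fix is attempted. It does not describe how Gitar works internally. The category names and regular expressions are hypothetical, and real CI logs vary enough by tool that the patterns would need tuning.

```python
import re

# Hypothetical patterns for illustration only; order matters because the
# first matching category wins.
PATTERNS = [
    ("lint", re.compile(r"\b(eslint|flake8|lint(ing)? error)\b", re.IGNORECASE)),
    ("dependency", re.compile(r"could not resolve dependenc|ModuleNotFoundError", re.IGNORECASE)),
    ("test", re.compile(r"\bFAILED\b|AssertionError|\d+ failed")),
]


def classify_failure(log_text: str) -> str:
    """Return the first category whose pattern matches the log, else 'unknown'."""
    for category, pattern in PATTERNS:
        if pattern.search(log_text):
            return category
    return "unknown"


if __name__ == "__main__":
    print(classify_failure("npm ERR! Could not resolve dependency: peer react@18"))
    print(classify_failure("Build timed out"))
```

A category like this is only a routing hint. Anything that lands in `unknown` is a natural candidate for human attention rather than an automated fix.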

Supporting Distributed Teams Across Time Zones

Distributed teams often lose full days to time zone delays. A pull request opened in San Francisco and reviewed in Bangalore can wait overnight for simple follow-up changes.

With Gitar, reviewers leave clear comments, and the agent implements the requested edits. The next time the original author checks the pull request, updates are already in place and CI is green or in progress.

Moving Beyond Suggestions to Validated Fixes

Most AI tools suggest code that developers still need to edit, test, and validate. That approach keeps humans in the critical path and can introduce new failures.

Gitar instead acts as a healing engine. It runs in a replica of the environment that produced the failure and validates each change against it. That process reduces trial-and-error debugging and gives teams higher confidence in automated fixes.

Scaling CI for Higher Code Volume

Modern teams ship more code and more pull requests as they adopt AI-assisted coding. Without help in CI, each new change taxes testing infrastructure and reviewer capacity.

Autonomous CI agents scale with code volume. As more builds run, more failures get handled in parallel by the agent, so human effort does not increase at the same rate.

Manual CI vs Suggestion Engines vs Gitar

| Capability | Manual Process | AI Suggestion Tools | Gitar Healing Engine |
| --- | --- | --- | --- |
| Fix implementation | Developer debugs and writes code | Suggests code, developer applies and tests | Applies and validates fixes autonomously |
| Environment context | Local or partial CI view | Limited CI awareness | Full CI environment replication |
| Developer interruption | High, frequent context switches | Moderate, review still required | Low, work happens in the background |
| Confidence in success | Depends on manual testing | Unvalidated suggestions | Fixes tested in CI conditions |

Implementing AI in Your CI Pipeline

Assessing Where AI Can Help Most

Engineering leaders can start by quantifying where CI consumes time today. Useful signals include:

- Frequency of CI failures per pull request.

- Average time from failure to green build.

- Developer hours spent on log analysis, minor fixes, and review rework.

- Delays caused by cross-time-zone reviews.

Teams that see repeated failures, long feedback cycles, or heavy review overhead often realize strong gains from autonomous CI support.
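Two of these signals, failures per pull request and time from failure to green build, can be computed from exported build history. The sketch below assumes a hypothetical `Build` record with a pull request number, a finish time, and a pass/fail flag. Your CI platform's export will have its own field names.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Build:
    # Hypothetical record shape; adapt to your CI platform's export.
    pr: int
    finished_at: datetime
    passed: bool


def failures_per_pr(builds):
    """Failed builds divided by the number of distinct pull requests."""
    prs = {b.pr for b in builds}
    failed = sum(1 for b in builds if not b.passed)
    return failed / len(prs) if prs else 0.0


def mean_time_to_green(builds):
    """Average time from the first red build in a streak to the next green one."""
    by_pr = {}
    for b in sorted(builds, key=lambda b: b.finished_at):
        by_pr.setdefault(b.pr, []).append(b)

    durations = []
    for pr_builds in by_pr.values():
        red_since = None
        for b in pr_builds:
            if not b.passed and red_since is None:
                red_since = b.finished_at  # start of a red streak
            elif b.passed and red_since is not None:
                durations.append(b.finished_at - red_since)
                red_since = None
    if not durations:
        return None
    return sum(durations, timedelta()) / len(durations)
```

Capturing these numbers before a rollout gives a baseline to compare against later. Red streaks that never turn green are ignored here, which is a simplification worth revisiting for long-lived pull requests.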

Rolling Out Gitar in Phases

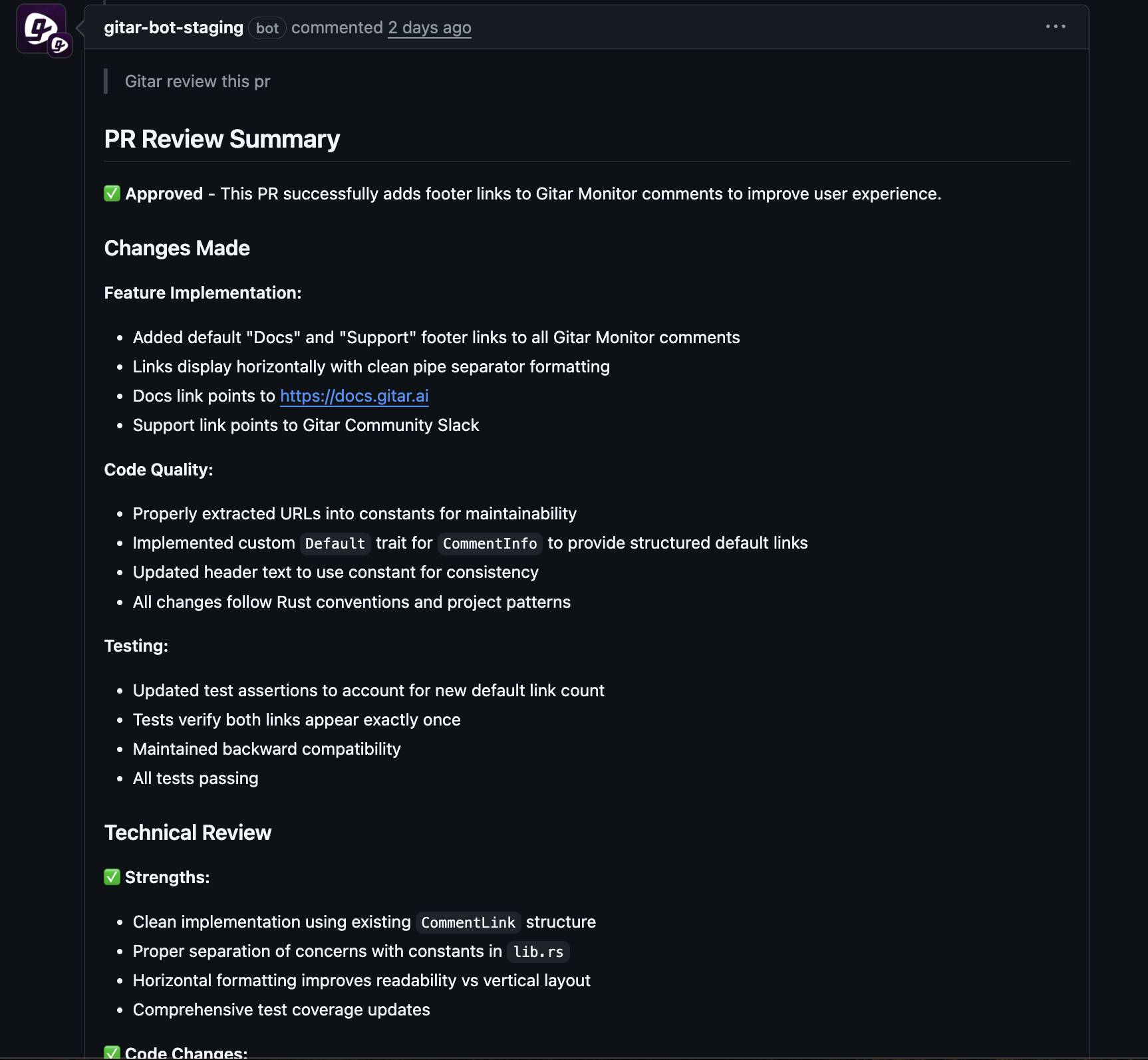

Phase 1 focuses on installation and safe defaults. Teams connect selected repositories through a GitHub or GitLab app and configure Gitar in suggestion-only mode, where it proposes changes that developers approve.

Phase 2 builds trust. Developers start to notice that fixes appear on pull requests before they even revisit the page. After enough successful outcomes, teams enable auto-commit for routine issues such as lint errors, flaky tests, and small configuration problems.

Phase 3 extends automation into review workflows. Senior engineers leave structured comments that Gitar interprets and implements, which reduces the time between review, revision, and merge.

Measuring ROI From Faster Release Cycles

Simple time cost analysis often reveals the impact. A 20-developer team that spends one hour per day on CI and review fixes invests around 5,000 hours per year in this work. At a loaded rate of $200 per hour, that equals about $1 million in annual cost.

If autonomous CI recovers even half of that time, the team gains roughly $500,000 in productive capacity, along with faster releases and fewer interruptions for developers. Gitar gives teams a direct way to capture this upside.
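The arithmetic behind these figures is easy to rerun with your own numbers. This sketch assumes 250 working days per year, which the text does not state but which reproduces the roughly 5,000-hour total. The function and parameter names are illustrative.

```python
def annual_ci_cost(developers, hours_per_day, workdays_per_year, loaded_rate):
    """Return (hours, cost) spent on CI and review fixes per year."""
    hours = developers * hours_per_day * workdays_per_year
    return hours, hours * loaded_rate


# Inputs from the example above; 250 workdays per year is an assumption.
hours, cost = annual_ci_cost(
    developers=20, hours_per_day=1, workdays_per_year=250, loaded_rate=200
)
recovered = cost * 0.5  # value of recovering half of that time

print(f"{hours:,} hours per year, ${cost:,} annual cost")
print(f"${recovered:,.0f} recovered at 50%")
```

Substituting your team's size, measured hours, and loaded rate turns the example into a rough estimate for your own organization.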

Common Challenges When Adding AI to CI

Teams often raise three main concerns: trust in automated fixes, complex CI environments, and overlap with existing AI tools.

Trust grows through gradual adoption. Suggestion-only mode keeps humans in full control, then auto-commit can apply to narrow categories of low-risk fixes. Teams observe behavior and adjust rules before expanding scope.
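One way to picture this kind of gradual policy is as a small decision function. The sketch below is not Gitar's configuration format or API. The `TrustMode` enum, the category names, and the file-count limit are all hypothetical, chosen to mirror the routine fix types mentioned earlier (lint errors, flaky tests, small configuration problems).

```python
from enum import Enum


class TrustMode(Enum):
    SUGGEST = "suggest"
    AUTO_COMMIT = "auto_commit"


# Hypothetical low-risk categories a team might allow for auto-commit.
AUTO_COMMIT_CATEGORIES = {"lint", "flaky_test", "config"}


def decide_action(category, repo_mode, files_changed, max_files=3):
    """Pick how a proposed fix is delivered under a team's trust settings."""
    # A repository in suggestion-only mode never auto-commits.
    if repo_mode is TrustMode.SUGGEST:
        return TrustMode.SUGGEST
    # Auto-commit only narrow, small fixes; everything else needs approval.
    if category in AUTO_COMMIT_CATEGORIES and files_changed <= max_files:
        return TrustMode.AUTO_COMMIT
    return TrustMode.SUGGEST
```

Expanding scope then means adding a category or raising the limit, and each of those changes is small enough to review and revert.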

Complex enterprise CI setups that use multiple SDKs, language versions, or internal tools fit well with Gitar because it replicates the CI environment instead of inferring behavior from limited context.

Existing AI tools that help write or review code remain valuable. Gitar focuses on implementation and validation, so it complements those tools rather than replacing them.

Frequently Asked Questions About AI in CI

How does Gitar handle complex, enterprise-specific CI environments?

Gitar recreates the CI workflow, including specific JDK versions, multi-SDK dependencies, and integration tools. The agent tests fixes in that environment, so changes are validated under the same conditions as your real pipelines.

How is Gitar different from AI code review tools?

Code review tools highlight issues and suggest edits, but developers still apply and test those changes. Gitar takes responsibility for fixing CI failures by editing code, updating configuration, running tests, and committing validated results.

What if developers do not initially trust automated fixes?

Teams can keep Gitar in suggestion-only mode while they review output and track accuracy. Over time, they often move routine fixes to auto-commit while leaving complex or high-risk changes under manual control.

How can we quantify the impact on release cycles and cost?

Teams can track hours spent on CI failures and review-driven rework before and after deployment. Comparing this to engineering cost, along with metrics such as time to green build and time to merge, gives a clear picture of Gitar’s impact.

Conclusion: Use AI to Keep CI Moving

AI in CI offers a shift from reactive debugging to proactive, autonomous repair. Instead of pausing work to fight builds, developers stay focused while an agent handles many failures in the background.

Gitar combines environment-aware fixes, review implementation, and configurable trust levels to help teams shorten feedback loops and release software more quickly.

Teams that adopt autonomous CI early enter 2026 with faster delivery, clearer pipelines, and more satisfied developers. Request a Gitar demo to see autonomous CI fixes in your own environment.