Key Takeaways

- AI code generation tools such as GitHub Copilot increase throughput, but they also shift bottlenecks to CI/CD and code review.

- Post-generation toil now consumes a significant share of developer time, especially in debugging failing builds and waiting on reviews.

- Autonomous AI that fixes CI failures and applies review feedback helps teams convert code volume into faster, more reliable merges.

- Engineering leaders get a clearer view of ROI by tracking end-to-end flow metrics such as time-to-merge, CI failure rates, and context switching.

- Gitar reduces developer toil by autonomously fixing broken builds and implementing review feedback; install Gitar to see it in action.

The AI Productivity Paradox: How Copilot-Style Tools Create New Bottlenecks

AI-Accelerated Coding Changes Throughput, Not Just Speed

AI code generation now plays a major role in daily development. More than 15 million developers and 50,000 organizations use GitHub Copilot, with AI responsible for up to 61% of Java code in some projects. Enterprise studies report 55% faster task completion and a drop in the time to open a pull request from 9.6 days to 2.4 days, and most users report less frustration and higher satisfaction.

Right-Shifted Bottlenecks in CI/CD and Code Review

Faster coding moves the constraint to later stages. One large enterprise saw a 70% increase in pull request volume but only a 15% improvement in merge rates. The pipeline accepts more code, yet validation, testing, and review do not scale at the same pace. A focus on metrics like adoption, engagement, and AI-impacted code volume hides the growing friction in end-to-end flow.

Developer Toil Grows After Code Is Written

Teams now experience less toil during initial coding but more toil afterward. Increased PR volume leads to more CI runs, more failures, and more review comments. Developers spend more time revisiting old branches, reproducing flaky tests, and applying repetitive feedback. The result is a paradox: the coding experience feels better, yet overall delivery still slows when post-generation work remains manual.

The Strategic Cost of Post-Generation Toil for Engineering Leaders

CI/CD Failures and Context Switching Drain Capacity

Developer time lost to post-generation toil has a direct financial impact. Many teams see up to 30% of engineering time pulled into CI failures, build triage, and drawn-out review cycles. For a 20-developer team, that waste can approach $1M per year in lost productivity once loaded compensation is included. Complexity in CI pipelines and rising code volume amplify this cost as organizations scale.
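The arithmetic behind that estimate is simple: 20 developers each losing 30% of their time adds up to six full-time equivalents. The sketch below makes the calculation explicit. The $165,000 annual loaded cost per developer is an assumption chosen for illustration, not a figure from this article.

```python
# Back-of-the-envelope estimate of the annual cost of post-generation toil.
# LOADED_COST_PER_DEV is an assumed figure for illustration only.
TEAM_SIZE = 20
TOIL_SHARE = 0.30              # "up to 30%" of engineering time, per the text above
LOADED_COST_PER_DEV = 165_000  # assumption: salary plus benefits and overhead (USD/year)


def annual_toil_cost(team_size: int, toil_share: float, loaded_cost: float) -> float:
    """Return yearly spend absorbed by toil: people x share of time x cost per person."""
    if not 0.0 <= toil_share <= 1.0:
        raise ValueError("toil_share must be between 0 and 1")
    return team_size * toil_share * loaded_cost


cost = annual_toil_cost(TEAM_SIZE, TOIL_SHARE, LOADED_COST_PER_DEV)
print(f"Estimated toil cost: ${cost:,.0f} per year")  # Estimated toil cost: $990,000 per year
```

Substituting your own headcount, measured toil share, and loaded cost gives a first-order estimate of the same kind.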

Code Review Backlogs Slow Time-to-Merge

Review queues now form one of the largest delivery bottlenecks. AI review tools often stop at suggestions, so developers still need to interpret, apply, and validate the changes. Distributed teams feel this pain most: time zone gaps stretch simple comments into multi-day cycles, which blocks features, delays fixes, and frustrates authors and reviewers alike.

Velocity, Morale, and Business Outcomes Are Linked

Slow downstream stages do more than delay releases. Frequent context switching breaks up deep work, and repetitive debugging contributes to burnout and attrition. These forces pull attention away from product innovation and weaken competitive positioning. Leaders who ignore post-generation toil risk giving back many of the gains they achieved with AI-assisted coding.

Gitar’s Autonomous AI: Turning CI and Review Into Self-Healing Systems

From Suggestions to Hands-Off Resolution

Most AI tools offer advice that still requires manual effort. Gitar takes a different approach and treats CI and code review as problems to resolve autonomously. The system not only identifies issues but also proposes, applies, and validates fixes in the active branch. This focus on execution targets the root cause of developer toil: repetitive, interrupt-driven work after code is written.

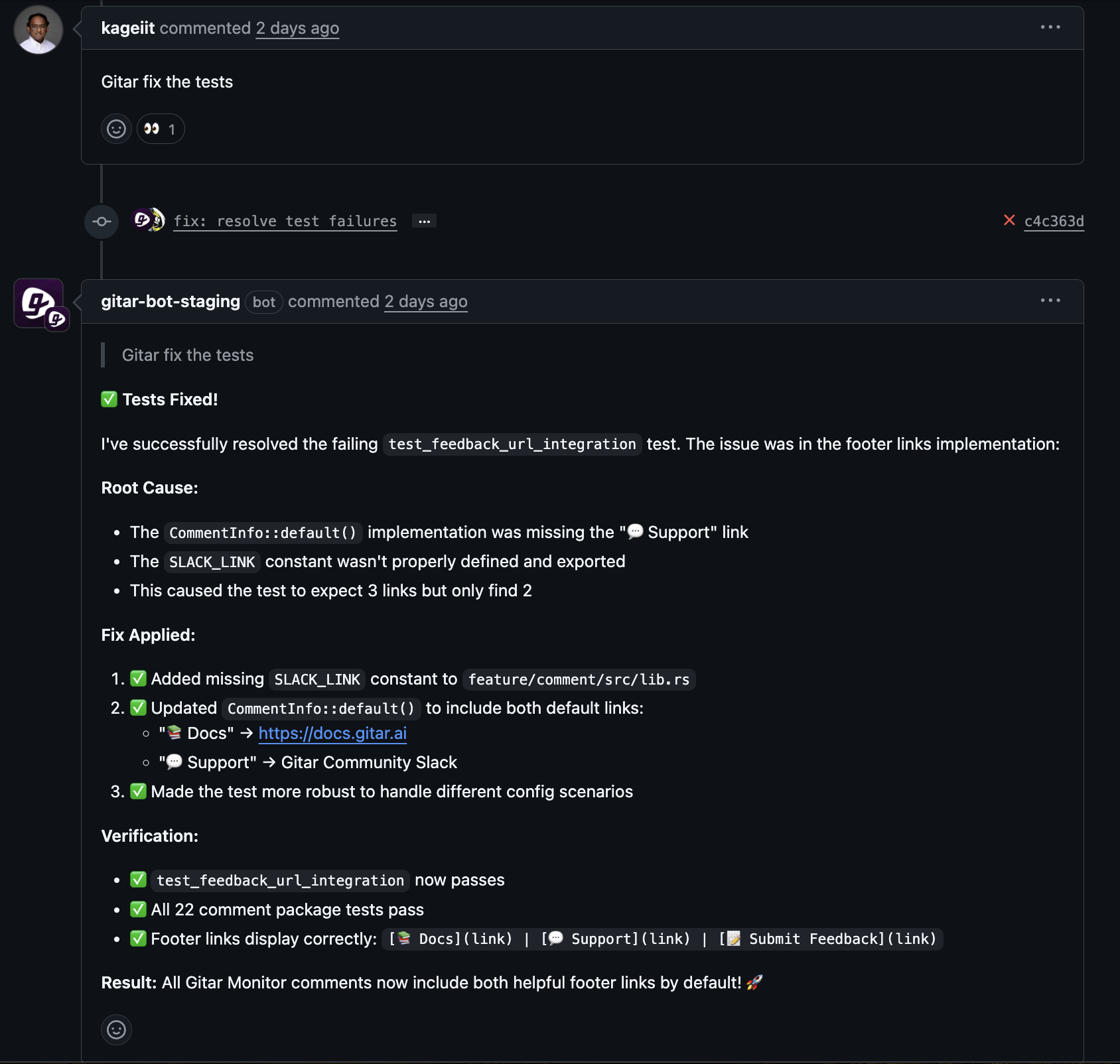

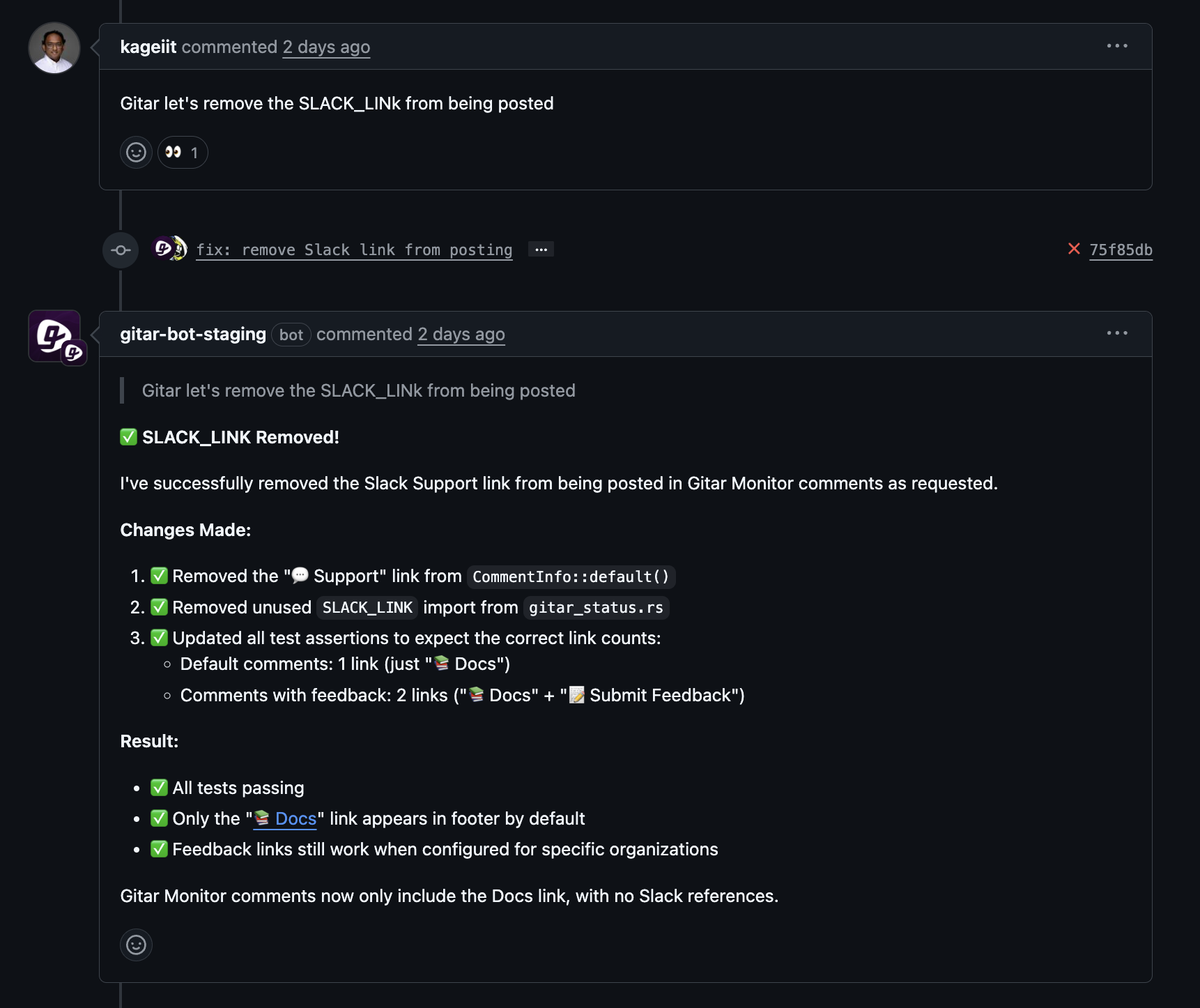

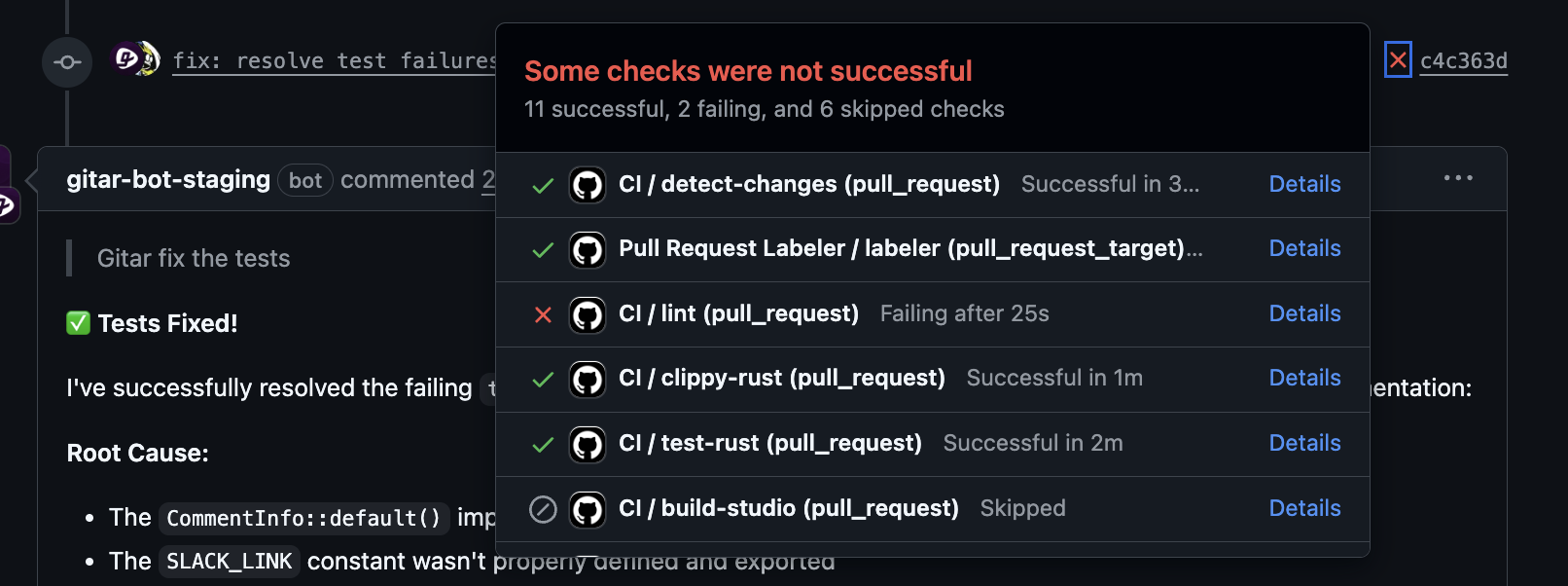

Reducing Toil Across CI Failures and Code Review

Gitar analyzes failing CI runs and uses logs, tests, and configuration to identify the underlying issue. It then edits the code, pushes a commit, and re-runs checks, so developers see a passing pipeline without stepping away from their current work. This workflow applies to lint errors, flaky tests, build failures, and many other common CI problems.
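The diagnose, patch, and re-run cycle described above can be pictured as a bounded loop. This is a generic illustration, not Gitar's implementation: `run_checks`, `propose_fix`, and `apply_and_commit` are hypothetical callables standing in for the CI system, an analysis step, and a git push, and the three-attempt budget is an assumption.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class CIResult:
    passed: bool
    log: str = ""


@dataclass
class RepairOutcome:
    fixed: bool
    attempts: int
    commits: list[str] = field(default_factory=list)


def repair_loop(
    run_checks: Callable[[], CIResult],
    propose_fix: Callable[[str], Optional[str]],
    apply_and_commit: Callable[[str], str],
    max_attempts: int = 3,
) -> RepairOutcome:
    """Re-run checks after each committed fix until the pipeline passes or the budget runs out."""
    commits: list[str] = []
    result = run_checks()
    attempts = 0
    while not result.passed and attempts < max_attempts:
        attempts += 1
        patch = propose_fix(result.log)  # diagnose from the failure log
        if patch is None:                # no confident fix: leave it for a developer
            break
        commits.append(apply_and_commit(patch))  # edit the branch and push a commit
        result = run_checks()                    # validate by re-running the checks
    return RepairOutcome(fixed=result.passed, attempts=attempts, commits=commits)


# Toy pipeline (hypothetical): a lint failure that a single patch resolves.
state = {"lint_ok": False}


def toy_checks() -> CIResult:
    return CIResult(passed=state["lint_ok"], log="" if state["lint_ok"] else "F401 unused import 'os'")


def toy_fix(log: str) -> Optional[str]:
    return "remove unused import 'os'" if "unused import" in log else None


def toy_commit(patch: str) -> str:
    state["lint_ok"] = True
    return "fix: " + patch


outcome = repair_loop(toy_checks, toy_fix, toy_commit)
print(outcome)
```

Capping attempts, and letting `propose_fix` return `None` when it has no confident patch, keeps the loop from piling commits onto a failure that needs a human.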

Reviewers can also delegate changes directly to Gitar. A reviewer adds a comment that starts with “Gitar” and describes the desired update. Gitar implements the requested change, commits it, and explains what changed in a follow-up comment, which keeps the audit trail clear for the whole team.
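One way to picture this delegation step is a filter that recognizes comments addressed to Gitar and extracts the request. The pattern below is a hypothetical sketch, not Gitar's parser: case-insensitive matching, the optional comma or colon after the name, and the `extract_instruction` name are all assumptions.

```python
import re
from typing import Optional

# Assumption: a delegated request is any comment whose first word is "Gitar"
# (matched case-insensitively here), optionally followed by "," or ":".
_DIRECTIVE = re.compile(r"^\s*gitar\b[\s,:]*(?P<instruction>.+)", re.IGNORECASE | re.DOTALL)


def extract_instruction(comment: str) -> Optional[str]:
    """Return the requested change if the comment is addressed to Gitar, else None."""
    match = _DIRECTIVE.match(comment)
    if match is None:
        return None
    instruction = match.group("instruction").strip()
    return instruction or None  # a bare "Gitar" with no request is ignored


print(extract_instruction("Gitar, rename `tmp` to `buffer` in parse_config"))
# rename `tmp` to `buffer` in parse_config
print(extract_instruction("LGTM, thanks!"))  # None
```

Requiring the name at the start of the comment keeps ordinary discussion that merely mentions Gitar from triggering a change.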

Trust, Control, and Gradual Automation

Gitar supports staged adoption so teams maintain control. Leaders can start in suggestion mode, where Gitar proposes fixes that require one-click approval. This mode builds confidence as teams review early results. After Gitar proves reliable, organizations can enable auto-commit for specific repositories, failure types, or environments, while keeping rollback options available for additional safety.
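A staged rollout like this amounts to a per-repository policy that defaults to suggestions and auto-commits only the failure types a team has explicitly trusted. The sketch below is hypothetical: it is not Gitar's configuration format, the repository names, failure-type labels, and class names are illustrative, and rollback is not modeled.

```python
from dataclasses import dataclass, field
from enum import Enum


class Mode(Enum):
    SUGGEST = "suggest"          # propose a fix; a human approves it with one click
    AUTO_COMMIT = "auto_commit"  # commit the fix directly to the branch


@dataclass
class RepoPolicy:
    mode: Mode = Mode.SUGGEST
    # Failure types trusted for auto-commit; everything else stays in suggestion mode.
    auto_commit_types: set[str] = field(default_factory=set)


@dataclass
class AutomationPolicy:
    repos: dict[str, RepoPolicy] = field(default_factory=dict)

    def decide(self, repo: str, failure_type: str) -> Mode:
        """Return the mode to use for one failure in one repository."""
        policy = self.repos.get(repo, RepoPolicy())  # unlisted repos default to suggestions
        if policy.mode is Mode.AUTO_COMMIT and failure_type in policy.auto_commit_types:
            return Mode.AUTO_COMMIT
        return Mode.SUGGEST


# Example: one repository trusted to auto-commit lint fixes only.
policy = AutomationPolicy(
    repos={"payments-api": RepoPolicy(Mode.AUTO_COMMIT, {"lint"})}
)
print(policy.decide("payments-api", "lint"))        # Mode.AUTO_COMMIT
print(policy.decide("payments-api", "flaky_test"))  # Mode.SUGGEST
print(policy.decide("web-app", "lint"))             # Mode.SUGGEST
```

Defaulting unknown repositories to `SUGGEST` means every widening of automation is an explicit, reviewable change.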

Strategic Implementation and Measuring Real Productivity Gains

Phased Rollout for Different Team Maturity Levels

Most teams succeed with a three-phase rollout. The first phase focuses on installation, light configuration, and suggestion-only fixes on a limited set of repositories. The second phase expands coverage to more projects and increases autonomy for well-understood failure types. The final phase treats Gitar as part of the standard toolkit, where senior engineers delegate routine tasks and distributed teams keep progress moving around the clock.

Measuring Gitar’s ROI With Flow-Oriented Metrics

Gitar emphasizes end-to-end impact instead of raw code volume. While GitHub Copilot highlights adoption and generated code, Gitar helps teams track metrics such as:

- Median and P95 time-to-merge per PR.

- Frequency and duration of CI failures per repository.

- Number of context switches caused by broken builds or review changes.
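The first two metrics can be computed from data that most Git hosts and CI systems already expose. The sketch below assumes merged PRs (open and merge timestamps) and CI runs have been exported into simple records; the record shapes and the `minutes_to_green` field are hypothetical, and P95 uses linear interpolation via Python's `statistics.quantiles(..., method="inclusive")`.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median, quantiles


@dataclass
class PullRequest:
    opened_at: datetime
    merged_at: datetime


@dataclass
class CIRun:
    repo: str
    failed: bool
    minutes_to_green: float = 0.0  # hypothetical field: time until the next passing run


def time_to_merge_hours(prs: list[PullRequest]) -> dict[str, float]:
    """Median and P95 time-to-merge, in hours."""
    hours = [(pr.merged_at - pr.opened_at).total_seconds() / 3600 for pr in prs]
    if len(hours) < 2:
        raise ValueError("need at least two merged PRs to estimate a percentile")
    # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
    p95 = quantiles(hours, n=100, method="inclusive")[94]
    return {"median": median(hours), "p95": p95}


def ci_failures_by_repo(runs: list[CIRun]) -> dict[str, dict[str, float]]:
    """Failure rate and mean minutes-to-green for each repository."""
    totals: dict[str, int] = defaultdict(int)
    broken: dict[str, list[float]] = defaultdict(list)
    for run in runs:
        totals[run.repo] += 1
        if run.failed:
            broken[run.repo].append(run.minutes_to_green)
    report = {}
    for repo, total in totals.items():
        durations = broken[repo]
        report[repo] = {
            "failure_rate": len(durations) / total,
            "mean_minutes_to_green": sum(durations) / len(durations) if durations else 0.0,
        }
    return report


# Illustrative data only.
start = datetime(2025, 1, 6, 9, 0)
sample_prs = [PullRequest(start, start + timedelta(hours=h)) for h in (10, 20, 30, 40, 50)]
sample_runs = [
    CIRun("api", failed=True, minutes_to_green=30),
    CIRun("api", failed=False),
    CIRun("api", failed=True, minutes_to_green=10),
    CIRun("api", failed=False),
    CIRun("web", failed=False),
]
print(time_to_merge_hours(sample_prs))  # {'median': 30.0, 'p95': 48.0}
print(ci_failures_by_repo(sample_runs))
```

Reporting P95 alongside the median matters because a handful of PRs stuck in multi-day review cycles barely move the median but dominate the tail.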

For a 20-developer team that experiences heavy post-generation toil, these improvements can translate into hundreds of thousands of dollars in annual productivity gains, along with higher satisfaction and lower churn.

How Gitar Compares to Other Approaches

Gitar focuses on resolving toil rather than adding more suggestions into the workflow.

| Feature/Capability | Gitar (Healing Engine) | AI Code Reviewers | Manual Work |
|---|---|---|---|
| CI Failure Resolution | Generates, applies, and validates fixes automatically | Surfaces issues; developers fix and validate | Developers debug, patch, and re-run CI by hand |
| Code Review Feedback | Implements requested changes directly from comments | Suggests edits; developers apply changes | Authors handle all requested edits manually |
| Developer Toil Impact | Reduces repetitive post-generation work | Provides partial relief | Keeps toil high |
| Time-to-Merge Impact | Shortens merge timelines | Improves timelines modestly | Results in slowest merges |

Frequently Asked Questions

Q: Our team already uses GitHub Copilot for code generation. How does Gitar fit into that stack?

A: Copilot increases code throughput, which often shifts bottlenecks into CI and review. Gitar complements this by acting as a healing layer for those stages, autonomously fixing CI failures and implementing review feedback so Copilot’s speed gains carry through to merge and release.

Q: How does Gitar build trust in autonomous fixes for complex environments?

A: Gitar offers configurable automation levels. Teams can begin with suggestion-only fixes that require explicit approval, then progress to auto-commit modes once results look reliable. Support for full environment replication, including language runtimes and third-party tools, helps ensure that fixes are context-aware before they land in the main branch.

Q: How can engineering leaders measure Gitar’s impact on productivity and ROI?

A: Leaders can track Gitar’s impact through metrics such as reduced time-to-merge, fewer blocked builds, and less time spent on review-driven rework. These improvements translate into reclaimed engineering hours, faster delivery of roadmap items, and a clearer financial case for continued investment in autonomous AI.

Q: Can Gitar handle highly customized, enterprise-grade CI setups?

A: Gitar is built for complex CI environments and supports specific runtime versions, multi-language builds, and integrations with tools such as SonarQube or Snyk. This integration allows Gitar to apply and validate fixes within the same workflows your team already trusts.

Conclusion: Use Autonomous AI To Turn Developer Toil Into Flow in 2026

AI code generation changed how quickly teams can produce code, so leaders now need matching automation for validation, review, and integration. Gitar addresses this gap by turning CI and code review into self-healing workflows, so developers spend more time on product and less time on pipelines.

Organizations that treat autonomous AI as part of their end-to-end delivery stack, from coding through deployment, will hold an advantage in 2026 and beyond. Install Gitar to automatically fix broken builds, streamline review loops, and reduce the operational burden on your engineering team.