Key Takeaways

- AI coding assistants that only suggest code often shift work instead of removing it, especially around CI failures and review cycles.

- Most ROI gains now come after code is written, in faster validation, fewer context switches, and quicker time to merge.

- Autonomous healing tools like Gitar focus on fixing CI failures and implementing review feedback so developers stay in flow.

- A simple ROI model that multiplies reclaimed developer hours by fully loaded cost can show six-figure savings for mid-size teams.

- Teams that want to reduce CI toil and review back-and-forth can start with Gitar in a low-risk mode and scale up over time: Install Gitar and fix broken builds automatically.

Reduce AI Coding Assistant Waste By Targeting CI And Review Bottlenecks

Engineering leaders now need clear ROI for every AI budget line. Many teams already use AI coding assistants, but most operate as suggestion engines. They draft code or comments, and developers still need to apply, test, and debug those changes.

This model creates a gap between perceived and real value. Developers gain some speed while writing code, yet they still spend large blocks of time on CI failures, flaky tests, and back-and-forth code reviews. A 20-person team can easily lose around $1M per year to these bottlenecks once fully loaded costs and delays across projects are included.

Faster code generation also increases load on downstream systems. More pull requests mean more tests to run, more failures to investigate, and more review comments to resolve. The core problem in 2026 is less about generating code and more about validating and merging it without constant context switching.

Use Autonomous CI Healing To Turn AI Into Measurable ROI

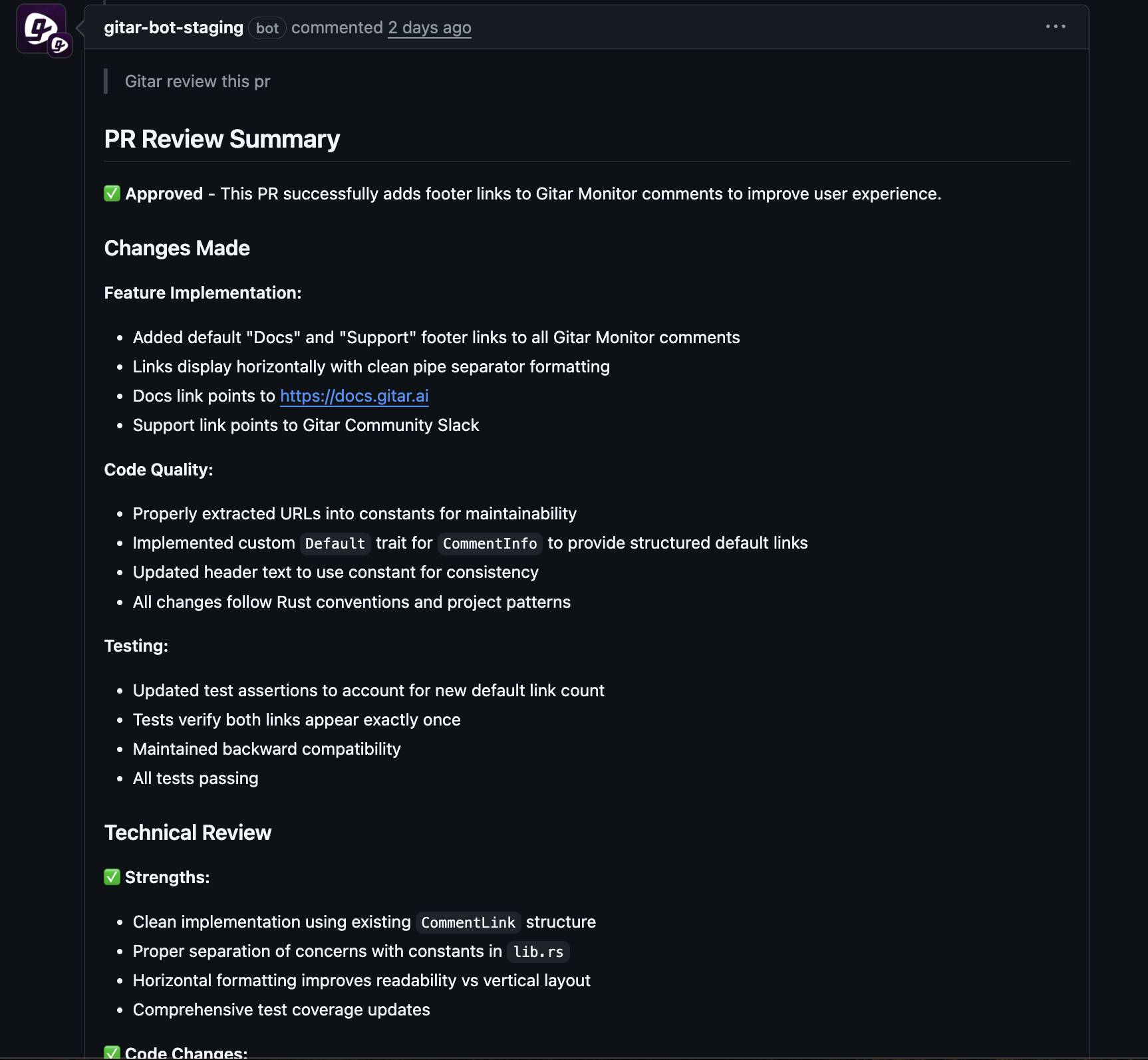

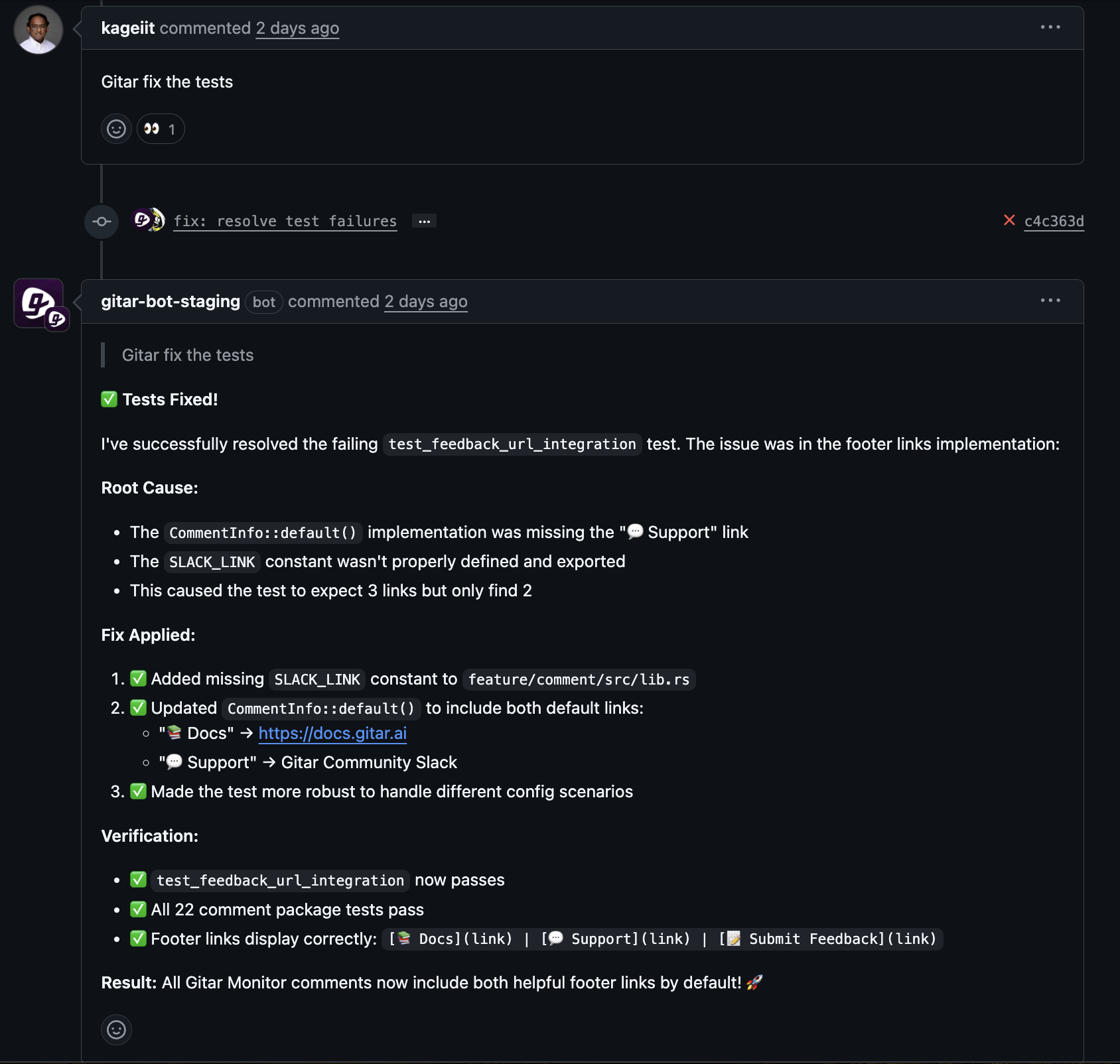

Gitar focuses on the post-commit phase. It acts as an autonomous agent that fixes failing CI pipelines and implements code review feedback so developers can stay on their current task instead of dropping everything to debug builds.

Key elements of Gitar’s approach to improving productivity:

- End-to-end autonomous fixing: Applies changes, runs the full CI workflow, and only surfaces pull requests once all CI jobs pass.

- Enterprise environment replication: Mirrors complex workflows, including specific JDK versions, multiple SDKs, and third-party scans such as SonarQube and Snyk, to keep fixes accurate.

- Configurable trust model: Supports a range of modes, from suggestions that need a click to commit, up through fully autonomous commits with rollback available.

- Code review actioning: Reads human review comments, updates the code to match, and pushes commits so teams avoid time zone delays and tedious rework.

- Cross-platform compatibility: Works with GitHub Actions, GitLab CI, CircleCI, Buildkite, and other CI platforms rather than locking into one ecosystem.

Install Gitar to turn failing builds into green pull requests with minimal developer involvement.

Compare Suggestion Engines And Healing Engines On Real ROI

Define ROI In Terms That Match Developer Reality

ROI for AI coding assistants should reflect the full lifecycle of a change, not just how quickly the first draft appears. Useful metrics include:

- Developer hours saved per week from less CI debugging and review rework.

- Shorter lead time from pull request opened to merged.

- Fewer context switches and interruptions during focused work.

- Lower delivery cost for each feature or bug fix.

Completed, validated actions matter more than raw suggestions. A tool that fixes a failing job and gets a pull request to green creates direct value. A tool that adds more code but leaves testing and debugging to humans can create extra work.
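The lead-time metric in the list above can be computed directly from pull request timestamps. The following Python sketch is illustrative only: the record shape (`opened_at`, `merged_at`), the function names, and the sample data are assumptions made for this example, not output from Gitar or any specific platform.

```python
from datetime import datetime
from statistics import median


def lead_time_hours(opened_at: str, merged_at: str) -> float:
    """Hours between a pull request being opened and merged (ISO 8601 strings)."""
    opened = datetime.fromisoformat(opened_at)
    merged = datetime.fromisoformat(merged_at)
    return (merged - opened).total_seconds() / 3600


def median_lead_time_hours(pull_requests: list[dict]) -> float:
    """Median open-to-merge time, skipping pull requests that are not merged yet."""
    durations = [
        lead_time_hours(pr["opened_at"], pr["merged_at"])
        for pr in pull_requests
        if pr.get("merged_at")
    ]
    if not durations:
        raise ValueError("no merged pull requests to measure")
    return median(durations)


# Hypothetical sample data, made up purely for illustration.
sample_prs = [
    {"opened_at": "2026-01-05T09:00:00", "merged_at": "2026-01-05T15:00:00"},  # 6 h
    {"opened_at": "2026-01-06T10:00:00", "merged_at": "2026-01-07T10:00:00"},  # 24 h
    {"opened_at": "2026-01-07T08:00:00", "merged_at": None},                   # still open
    {"opened_at": "2026-01-08T12:00:00", "merged_at": "2026-01-08T14:30:00"},  # 2.5 h
]

print(f"Median lead time: {median_lead_time_hours(sample_prs):.1f} hours")
```

Tracking the median rather than the mean keeps a single long-running pull request from dominating the trend. Comparing this number before and after adopting a tool gives a direct view of whether time to merge is actually shrinking.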

Side-By-Side View Of Capabilities And ROI Impact

| Feature or metric | Gitar (healing engine) | AI code reviewers (for example, CodeRabbit) | IDE assistants (for example, Copilot) | Manual fixes |
| --- | --- | --- | --- | --- |
| Primary function | Autonomous CI failure resolution and review comment actioning | AI-generated review comments and suggestions | AI-assisted code generation while coding | Manual debugging and fixing |
| Main outcome | Consistently green builds through validated fixes | Suggestions that require developer changes | Code that still needs tests and integration | Developer investigates and patches issues |
| Impact on toil | Removes much of the CI-related context switching | Reduces some review effort, but work remains manual | Helps write code, does not solve CI or review bottlenecks | Highest level of toil and interruptions |
| Typical time to resolution | Minutes, driven by automation | Hours, due to waiting for developer action | Not focused on resolution | Hours to days, depending on complexity |
| Fix validation | Runs full CI pipeline before surfacing changes | No automatic validation of suggested fixes | No post-commit validation | Manual test and pipeline runs |
| Cross-platform support | GitHub, GitLab, CircleCI, Buildkite | Mainly GitHub or GitLab | Dependent on IDE choice | Works anywhere but without automation |
| Distributed team impact | Reduces time zone delays by actioning feedback automatically | Still depends on humans to apply changes | No impact after commit | Strongly affected by time zones and handoffs |
| Primary ROI driver | Less time on CI debugging and review rework | Faster review feedback | Faster initial coding | No structured ROI improvement |

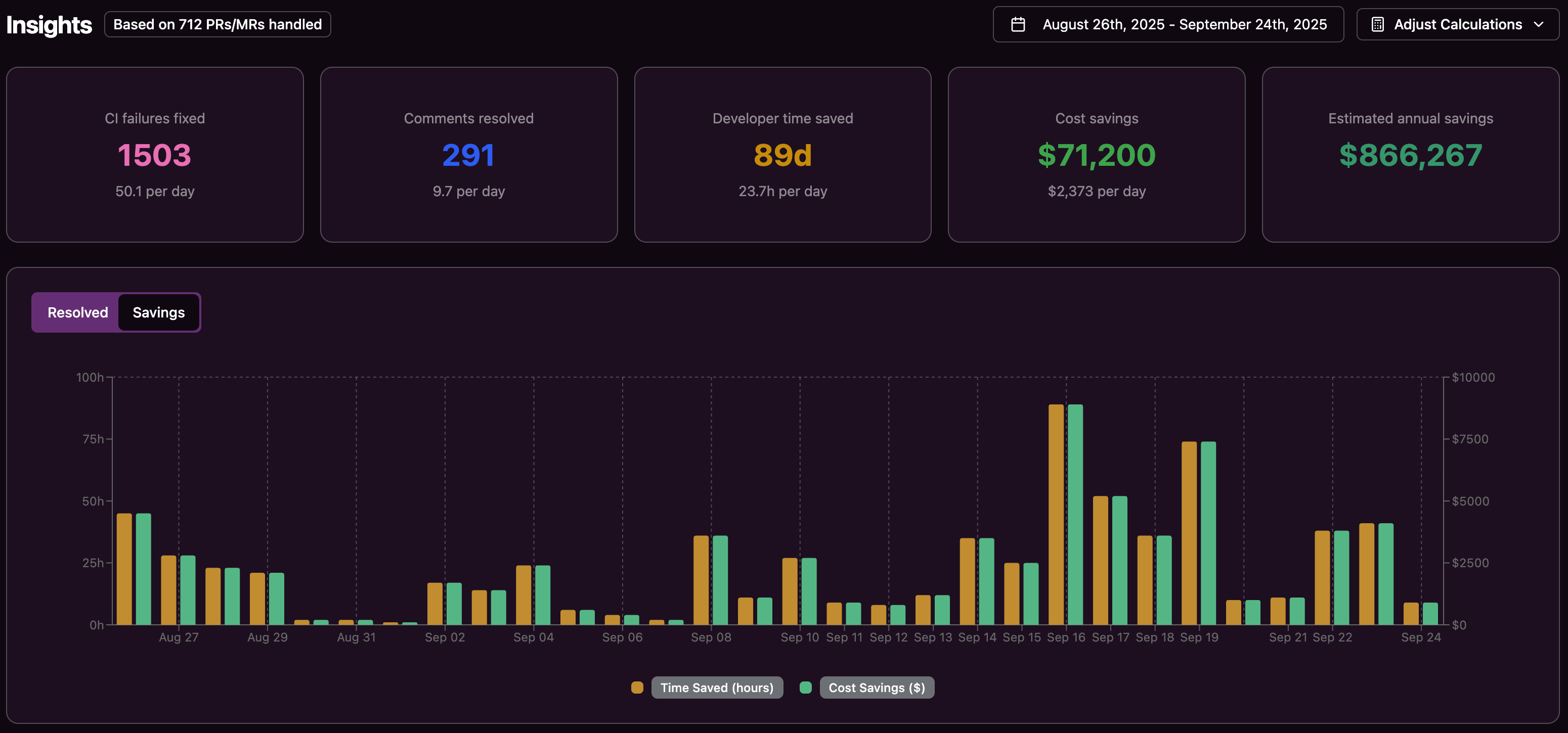

Measure AI Coding Assistant Value With A Simple ROI Model

Developer time converts cleanly into dollars once you use fully loaded cost. For a developer with a salary near $140K, this article uses an all-in cost of around $200 per hour after benefits and overhead; substitute your own figure if it differs.

A basic ROI model can start with three inputs:

- Average hours per week each developer spends on CI debugging and review-related rework.

- Number of developers on the team.

- Estimated percentage reduction in that time from an autonomous tool.

Multiplying the total hours reclaimed by the hourly cost gives an annual savings estimate. For example, a 20-person team that spends a combined 100 hours per week on CI and review churn, and cuts that time in half, would recover around 2,600 hours per year. At $200 per hour, that translates to about $520K in annual value.
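The arithmetic above can be sketched as a small calculator. This is a minimal illustration, not a Gitar feature: the `RoiInputs` class, its field names, and the function names are hypothetical, and the 5 hours per developer per week is simply the 100 combined hours from the example divided across 20 people.

```python
from dataclasses import dataclass

WEEKS_PER_YEAR = 52


@dataclass(frozen=True)
class RoiInputs:
    developers: int                # number of developers on the team
    hours_per_dev_per_week: float  # CI debugging and review rework, per developer
    reduction: float               # expected fraction of that time removed (0.0 to 1.0)
    hourly_cost: float = 200.0     # fully loaded cost per hour (assumption from the text)

    def __post_init__(self) -> None:
        if not 0.0 <= self.reduction <= 1.0:
            raise ValueError("reduction must be between 0 and 1")


def annual_hours_reclaimed(inputs: RoiInputs) -> float:
    """Hours per year the team gets back from reduced CI and review churn."""
    weekly_hours = inputs.developers * inputs.hours_per_dev_per_week
    return weekly_hours * inputs.reduction * WEEKS_PER_YEAR


def annual_savings(inputs: RoiInputs) -> float:
    """Dollar value of the reclaimed hours at the fully loaded hourly cost."""
    return annual_hours_reclaimed(inputs) * inputs.hourly_cost


# The worked example from the text: 20 developers, 100 combined hours per week, cut in half.
example = RoiInputs(developers=20, hours_per_dev_per_week=5.0, reduction=0.5)
print(f"Hours reclaimed per year: {annual_hours_reclaimed(example):,.0f}")  # 2,600
print(f"Annual value: ${annual_savings(example):,.0f}")                     # $520,000
```

Swapping in your own team size, measured hours, and hourly cost turns the estimate into something you can defend in a budget review. The reduction percentage is the least certain input, so it is worth running the model at a few values rather than relying on a single number.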

Implementation cost matters as well. Many AI tools need custom integration, workflow changes, or ongoing prompt tuning. Gitar connects through a GitHub or GitLab app with a short setup, which keeps deployment overhead low compared with heavier platforms.

Choose The Right AI Coding Assistant For Your Team’s ROI Goals

Teams can use a simple decision lens to decide where autonomous healing adds the most value.

- Autonomy versus suggestions: Teams with frequent CI failures or heavy review load tend to gain more from a tool that fixes problems than from one that only recommends changes.

- CI complexity: Organizations with multi-language builds, custom test suites, and tools such as SonarQube or Snyk benefit from assistants that can mirror those environments accurately.

- Workflow integration: Low-friction integrations that fit existing pull request and review patterns drive faster adoption and earlier ROI.

- Trust and control: Configurable modes let teams start with human-reviewed suggestions, then move toward autonomous commits after they see consistent quality.

- Distributed collaboration: Global teams see strong gains when CI fixes and review feedback can be applied while some teammates are offline.

Install Gitar to reduce CI toil and shorten pull request cycles without disrupting current workflows.

AI Coding Assistant ROI FAQ For Engineering Leaders

Does Gitar replace my existing AI code reviewer such as CodeRabbit?

Gitar and AI code reviewers solve different parts of the lifecycle. Reviewers highlight issues and suggest improvements, while Gitar applies and validates many of those changes inside real CI pipelines. Many teams pair a reviewer for analysis with Gitar for actioning so feedback turns into green builds with less manual work.

How quickly can a team see ROI with Gitar?

Gitar starts to create value as soon as it fixes the first real CI failure. Developers avoid dropping work to chase linting issues, flaky tests, or small review changes, and those reclaimed minutes add up across every pull request.

What if my team is cautious about automated CI fixes?

Teams can start with a conservative mode where Gitar only proposes fixes as comments or suggested changes. Developers review those suggestions, accept or edit them, and build confidence in the quality over time. After that, teams can enable more autonomous modes while keeping rollback options in place.

How does Gitar handle complex enterprise CI environments?

Gitar models the full CI workflow, including language versions, dependency graphs, multiple build stages, and integrated scanners or testing frameworks. That context lets it propose and apply fixes that match the reality of each pipeline rather than relying on generic patterns.

Can Gitar work with our current tools and processes?

Gitar connects through GitHub or GitLab apps and works alongside existing CI providers such as GitHub Actions, GitLab CI, CircleCI, and Buildkite. It respects current pull request and review practices while adding automation that reduces the manual steps between red and green builds.

Unlock More Engineering ROI With Autonomous AI In 2026

Suggestion-based AI tools help developers write code faster, but they leave the hardest and most expensive work untouched. CI failures, review back-and-forth, and constant context switching still consume a large share of engineering time.

Autonomous healing engines like Gitar address this gap by fixing failing pipelines and implementing review feedback directly in the CI environment. That focus on completed, validated actions turns AI from a code-writing helper into a measurable ROI driver across the delivery pipeline.