Key Takeaways

- Reactive debugging in CI/CD consumes significant engineering time, introduces context switching, and delays delivery.

- Machine learning can automatically fix many CI failures and address code review feedback, shifting work from manual debugging to supervised automation.

- Adopting ML for code issues reduces technical debt, improves developer velocity and morale, and keeps CI/CD infrastructure costs under control.

- Leaders gain better outcomes by rolling out ML in phases, aligning with existing workflows, and choosing tools built for complex CI environments.

- Gitar provides an autonomous fixing engine for CI and code review; teams can install Gitar to cut time spent on broken builds and ship more reliable code.

The High Cost of Reactive Coding: Why Current Approaches Fall Short

The CI/CD Gauntlet and Context Switching Tax

Most engineering teams lose substantial time to debugging CI failures and addressing review comments. Developers can spend up to 30% of their time on CI and code review issues, creating a clear productivity bottleneck. For a 20-person engineering team, this can reach roughly $1M in annual productivity loss once fully loaded costs and schedule impact enter the picture.

Context switching amplifies this cost. Developers open a pull request, start on the next task, then receive a CI failure or review comment hours later. Regaining the original context can take far longer than the actual fix, turning simple issues into extended interruptions that erode deep work time.

The Right-Shift Bottleneck

AI-assisted coding tools such as GitHub Copilot and Cursor accelerate code creation. That speed shifts the constraint from writing code to validating and merging it. More code results in more pull requests, longer queues for review, and more CI runs and potential failures. Teams that increase code output without modernizing validation workflows end up waiting on builds and reviews instead of shipping value.

Distributed Teams and Time Zone Delays

Global teams face additional friction. A developer on the US West Coast who depends on a reviewer in Bangalore may wait a full day for each review cycle. Tools that only suggest changes, and still require manual edits and reruns, add another round of back-and-forth. These delays compound across many pull requests and slow the entire organization.

The Power of ML: Transforming Code Issue Resolution

Machine learning shifts code quality work from reactive debugging to proactive, automated resolution. ML systems can ingest build logs, test output, and repository context, then propose or apply targeted fixes to unblock the pipeline.

Efficient Problem Solving in CI

Modern ML systems specialize in issues that frequently break builds. They can diagnose and fix common CI problems such as lint violations, flaky or failing tests, and build configuration errors. Automated fixes keep pipelines flowing and free developers from repetitive, low-leverage debugging.
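
As a rough illustration of the first step, sorting a failed build into one of the categories above, here is a minimal Python sketch. It uses hand-written regular expressions as a stand-in for a learned model, and the `FailureKind` enum, the `PATTERNS` table, and the `classify_failure` function are hypothetical names invented for this example. They do not describe how Gitar or any other product works internally.

```python
import re
from enum import Enum


class FailureKind(Enum):
    LINT = "lint"
    TEST = "test"
    BUILD_CONFIG = "build_config"
    UNKNOWN = "unknown"


# Illustrative patterns only (an assumption for this sketch). A production
# system would rely on far richer signals than a few regular expressions.
PATTERNS = [
    (FailureKind.LINT, re.compile(r"\b(eslint|flake8|pylint|lint(ing)? error)\b", re.I)),
    (FailureKind.TEST, re.compile(r"\b(FAILED|AssertionError|\d+ failed)\b")),
    (
        FailureKind.BUILD_CONFIG,
        re.compile(r"(could not resolve dependenc|unsupported class file)", re.I),
    ),
]


def classify_failure(log_text: str) -> FailureKind:
    """Return the first failure category whose pattern appears in the log."""
    for kind, pattern in PATTERNS:
        if pattern.search(log_text):
            return kind
    return FailureKind.UNKNOWN
```

The first matching pattern wins, so a log that contains both lint and test errors is reported as a lint failure in this sketch. A real triage step would more likely return every category it detects.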

Strategic Advantages: Why Engineering Leaders Benefit from ML for Code Issues

Reduction in Technical Debt

ML-based fixing reduces the chances that small problems accumulate into larger structural issues. Automated corrections enforce standards early and consistently, which supports a healthier codebase and reduces the need for large refactors later.

Higher Developer Velocity and Morale

Teams move faster when developers can focus on design, architecture, and feature work instead of chasing CI failures. Automation reduces interruptions, lowers frustration, and helps prevent burnout, which supports retention and long-term productivity.

Improved Software Quality and Reliability

Consistent, automated checks and fixes improve reliability. Pipelines fail less often for known issues, and releases reach production with more predictable behavior. Teams can release more frequently with greater confidence.

Optimized CI/CD Infrastructure Costs

Smarter pipelines reduce waste. Fewer failed runs, fewer retries, and more targeted fixes lower compute usage and queue times, which keeps CI/CD infrastructure costs under control.

Gitar: An Autonomous Solution for Fixing Code Issues

Gitar applies machine learning directly to CI failures and review workflows. Instead of only flagging problems, it can implement fixes, run the relevant checks, and keep pull requests moving toward a green build. This approach turns many CI and review tasks into supervised automation rather than manual work.

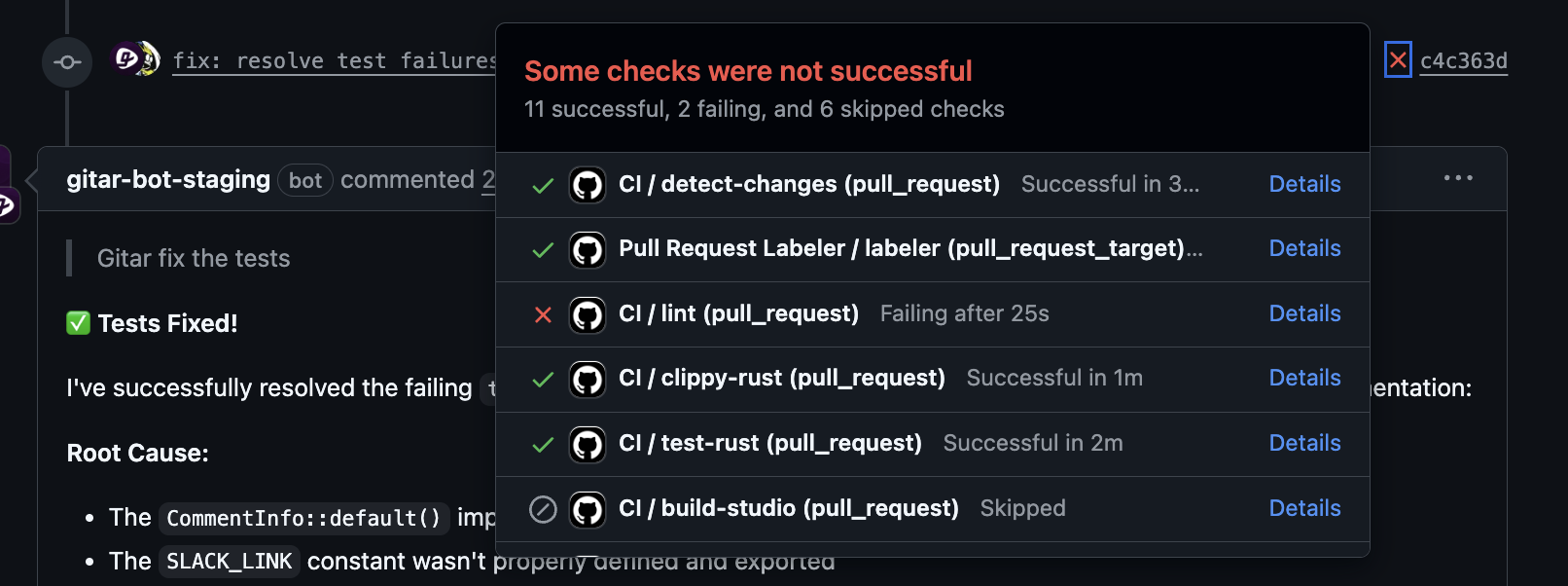

End-to-End Autonomous Fixing

When CI checks fail, whether from linting errors, test failures, or build issues, Gitar analyzes the failure context, generates a targeted code change, applies it, and commits the fix back to the pull request branch. Many issues resolve without developer intervention, which shortens feedback loops and reduces idle time.
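
The analyze, fix, validate, and commit cycle described above can be sketched as a small loop. This is a minimal sketch under stated assumptions, not Gitar's implementation: `heal`, `propose_fix`, `run_checks`, `commit`, and `max_attempts` are all hypothetical names, and the three callables stand in for whatever model, CI runner, and version control integration a real system would use.

```python
from typing import Callable, Optional, Tuple


def heal(
    failure_log: str,
    propose_fix: Callable[[str], Optional[str]],
    run_checks: Callable[[str], Tuple[bool, str]],
    commit: Callable[[str], None],
    max_attempts: int = 3,
) -> bool:
    """Try to fix a failing build; commit only a patch that passes checks.

    propose_fix: maps a failure log to a patch, or None if it has no idea.
    run_checks:  applies a patch in a sandbox and returns (passed, new_log).
    commit:      pushes a validated patch to the pull request branch.
    """
    log = failure_log
    for _ in range(max_attempts):
        patch = propose_fix(log)
        if patch is None:
            return False  # nothing to try; leave the failure for a human
        passed, log = run_checks(patch)
        if passed:
            commit(patch)
            return True
        # Otherwise loop again, feeding the new failure log back in.
    return False
```

The point of the design is that nothing reaches the branch unless the checks pass, and the attempt limit keeps a stubborn failure from looping indefinitely.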

The trust model is configurable. Teams can start in a conservative mode, where Gitar posts suggested changes that developers approve with a click. As confidence grows, teams can shift to more automated modes that commit fixes directly while preserving rollback options and clear audit trails.
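
One way to picture a configurable trust model is as a policy that maps each kind of failure to either a suggestion or a direct commit. The sketch below is purely illustrative: `TrustMode`, `TrustPolicy`, and `auto_commit_kinds` are hypothetical names chosen for this example and are not Gitar's actual configuration options.

```python
from dataclasses import dataclass
from enum import Enum


class TrustMode(Enum):
    SUGGEST = "suggest"          # post a suggested change; a developer approves it
    AUTO_COMMIT = "auto_commit"  # commit the fix directly to the PR branch


@dataclass(frozen=True)
class TrustPolicy:
    """Hypothetical policy: suggest by default, auto-commit only listed kinds."""

    default_mode: TrustMode = TrustMode.SUGGEST
    auto_commit_kinds: frozenset = frozenset()

    def mode_for(self, failure_kind: str) -> TrustMode:
        if failure_kind in self.auto_commit_kinds:
            return TrustMode.AUTO_COMMIT
        return self.default_mode


# A team might start fully conservative...
conservative = TrustPolicy()
# ...and later allow direct commits for low-risk categories such as lint fixes.
relaxed = TrustPolicy(auto_commit_kinds=frozenset({"lint"}))
```

With these policies, `conservative.mode_for("lint")` yields `TrustMode.SUGGEST`, while `relaxed.mode_for("lint")` yields `TrustMode.AUTO_COMMIT` and still falls back to suggestions for test failures.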

Full Environment Replication and Cross-Platform Support

Gitar can emulate real CI workflows, including specific JDK versions, polyglot builds, and complex test configurations. This environmental awareness increases the chance that fixes pass in the same conditions used in production pipelines.

Support spans popular CI platforms such as GitHub Actions, GitLab CI, CircleCI, and Buildkite. Teams can keep existing tools and add autonomous fixing capabilities on top.

Configurable Trust and Intelligent Code Review

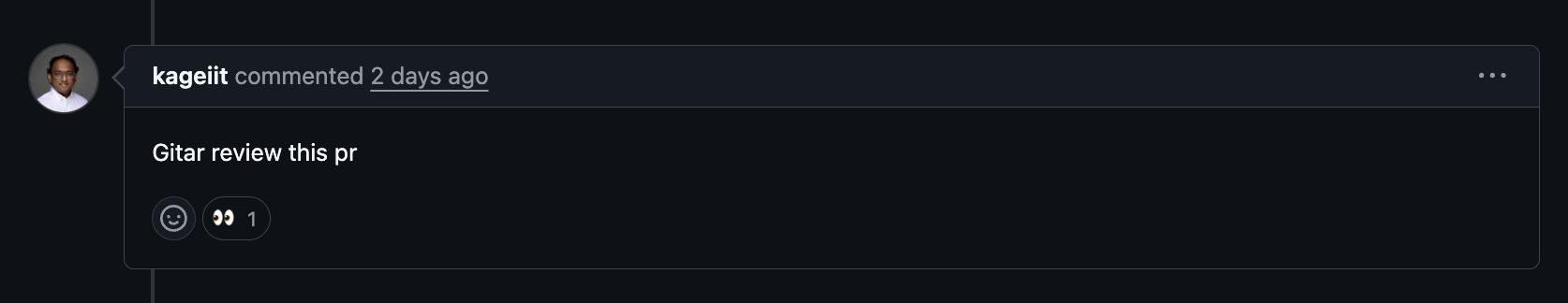

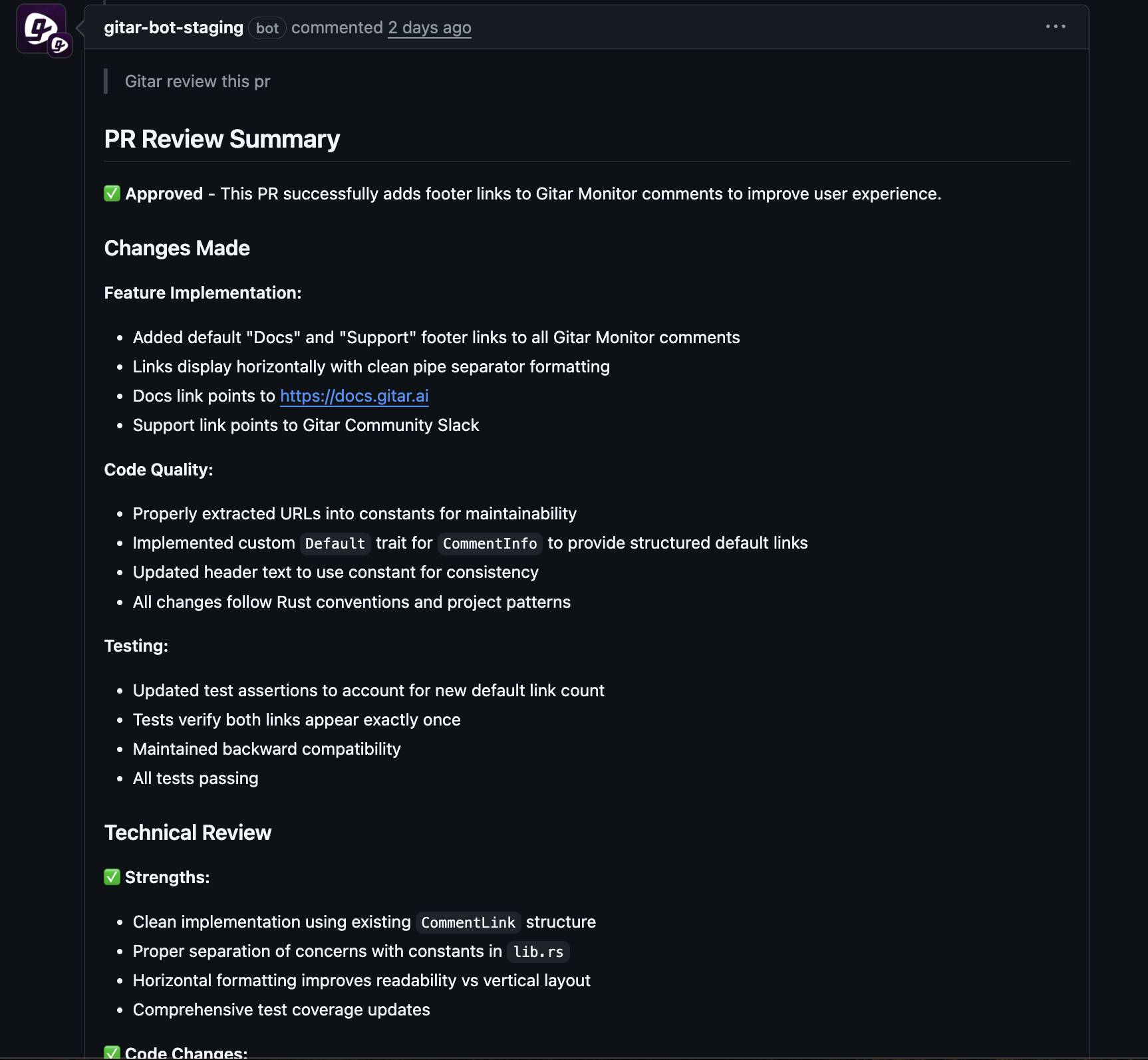

Gitar also supports the human review process. Reviewers can request AI-generated summaries of pull requests and high-level feedback on design choices. They can add comments such as “remove this deprecated path” or “extract this into a helper,” and Gitar interprets the instruction, applies the change, and pushes a commit with an explanation.

Distributed teams benefit in particular. A reviewer can leave instructions at the end of their day, and Gitar can implement those changes so that the original author sees ready-to-review updates the next morning.

The Aha Moment for Developers

Many teams notice a clear shift the first time a CI failure resolves itself with a new, well-documented commit from Gitar. CI moves from a source of disruption to a system that handles routine issues in the background while developers stay focused on their work.

Strategic Implementation: Integrating ML into Your Workflow

Organizational Readiness and Data Strategy

Effective ML adoption depends on access to build logs, test results, and repository history. Gitar starts delivering value quickly by learning from existing CI output, then refines its behavior as it observes more of a team’s patterns and conventions.

Build vs. Buy for ML Capabilities

Engineering leaders must balance control with cost. Building internal ML systems for CI/CD requires specialized talent, infrastructure, and ongoing maintenance. Purpose-built tools like Gitar provide tested workflows and ongoing model improvements without that internal overhead.

Phased Adoption and Change Management

Gradual rollout supports trust. Teams often begin with suggestion-only modes, track accuracy, and document outcomes. As confidence grows, they expand the scope of automated fixes and adjust policies to match risk tolerance and compliance needs.
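
Tracking accuracy during a suggestion-only phase can be as simple as recording whether each suggestion was accepted and computing a rate per category. The following is a minimal sketch under assumed thresholds: the 95% acceptance rate and the 50-sample minimum are arbitrary values chosen for illustration, and `acceptance_rates` and `ready_for_automation` are hypothetical helper names.

```python
from collections import Counter
from typing import Dict, Iterable, Set, Tuple


def acceptance_rates(outcomes: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Compute the share of accepted suggestions per failure category.

    outcomes: (category, was_accepted) pairs, one per suggestion made.
    """
    totals: Counter = Counter()
    accepted: Counter = Counter()
    for category, was_accepted in outcomes:
        totals[category] += 1
        if was_accepted:
            accepted[category] += 1
    return {category: accepted[category] / totals[category] for category in totals}


def ready_for_automation(
    outcomes: Iterable[Tuple[str, bool]],
    min_rate: float = 0.95,   # assumed threshold, tune to your risk tolerance
    min_samples: int = 50,    # assumed minimum evidence before promoting
) -> Set[str]:
    """Return categories with enough history and a high enough acceptance rate."""
    outcomes = list(outcomes)
    counts = Counter(category for category, _ in outcomes)
    rates = acceptance_rates(outcomes)
    return {
        category
        for category, rate in rates.items()
        if rate >= min_rate and counts[category] >= min_samples
    }
```

Requiring a minimum sample size keeps a category with a handful of lucky results from being promoted to automated fixes too early.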

Healing Engines vs. Suggestion Engines: Choosing the Right ML Approach

ML tools generally fall into two categories. Healing engines implement and validate fixes in CI. Suggestion engines provide advice that developers must still apply and verify. This difference determines how much manual effort remains.

| Feature | Gitar (Healing Engine) | AI Reviewers (Suggestion Engine) | Manual Debugging |
| --- | --- | --- | --- |
| Automated Fix Application | Yes | No | No |
| Fix Validation in CI | Yes | No | Manual |
| Environmental Context | Full replication | Limited | Manual |

Suggestion engines still depend on engineers to implement and test every recommendation. Healing engines such as Gitar handle both the code change and the validation step, which provides greater leverage at scale.

Navigating Common Pitfalls in Adopting ML for Code Issues

Limits of General-Purpose AI

General-purpose language models often struggle with complex CI environments, proprietary tooling, and unusual build setups. Specialized systems like Gitar embed CI/CD context into their design, which improves reliability when working with enterprise pipelines.

Trust, Control, and Governance

Automated code changes must meet organizational standards for safety and oversight. Teams should favor tools that offer adjustable trust levels, simple rollback, and detailed logs of each automated change. Gitar provides these controls so teams can align automation with their policies.

Future-Proof Your Engineering: Move Toward Autonomous Code Issue Resolution

As AI-assisted coding increases output, the real constraint shifts to validation, integration, and release. Teams that continue to rely on manual debugging and review cycles will see growing pressure on delivery timelines and developer capacity.

Gitar helps organizations move toward self-healing pipelines by automating fixes for many CI failures and review-driven changes. This approach improves developer experience, reduces operational costs, and supports faster, more reliable releases.

Frequently Asked Questions About Leveraging ML for Code Issues

How do ML solutions handle complex, unique enterprise CI environments?

Advanced ML solutions for CI/CD can mirror complex enterprise workflows, including specific SDK versions, multi-language builds, and varied testing tools. Gitar is designed to emulate these configurations so that automated fixes behave reliably under real pipeline conditions.

What ROI can engineering leaders expect from ML-driven code issue resolution?

Return on investment often appears as reclaimed developer time and reduced delays. For a team of 20 developers who each spend one hour per day on CI issues, the annual productivity impact can reach about $1M. Even if ML automation eliminates only half of that work, leaders can see hundreds of thousands of dollars in savings along with improved satisfaction and lower burnout.
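
The arithmetic behind an estimate like this is easy to reproduce with your own numbers. The sketch below rests on two assumptions that the figures above do not state: 250 working days per year and a fully loaded cost of $200 per developer hour. Both are placeholder values chosen so the result lands near the $1M figure, and `annual_ci_cost` is a hypothetical helper name.

```python
def annual_ci_cost(
    developers: int,
    hours_per_day: float,
    working_days: int = 250,     # assumption: working days per year
    hourly_cost: float = 200.0,  # assumption: fully loaded cost per developer hour
) -> float:
    """Estimate the yearly cost of developer time spent on CI issues."""
    return developers * hours_per_day * working_days * hourly_cost


baseline = annual_ci_cost(developers=20, hours_per_day=1.0)  # 1,000,000.0
savings = baseline * 0.5  # 500,000.0 if automation removes half of the work
```

Swapping in your own headcount, time spent, and cost per hour gives a quick first-order estimate, though it leaves out secondary effects such as context switching and schedule delays.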