Slow code review responses cost engineering teams valuable time. In asynchronous setups, developers wait for feedback, switch between tasks, and apply changes by hand, which slows progress, drains productivity, and delays releases. Setting clear response time expectations and using autonomous AI tools can streamline the process and make code review a faster, more efficient workflow.

Why Delays in Code Review Response Times Hurt Teams

Slow reviews stall developer progress and disrupt workflows. Waiting on feedback breaks momentum and pushes back project timelines, and the effects ripple well beyond the waiting time itself.

For example, a developer submits a pull request (PR) at 3 PM, hoping to merge by the end of the day. Feedback arrives 24 hours later, requiring changes that take another half hour. Another day passes before the next review round. What could have been a quick merge turns into a multi-day process, with the developer losing track of their original work.

The cost adds up quickly. For a team of 20 developers losing just one hour daily to review delays, the yearly productivity loss can reach around $1 million. This figure grows when considering delayed features, missed deadlines, and lower team morale.
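The $1 million figure depends on assumptions the paragraph doesn't state. A quick back-of-the-envelope check, assuming about 250 working days a year and a fully loaded cost of roughly $200 per developer-hour (both illustrative assumptions, not figures from this article):

```python
def annual_review_delay_cost(developers: int,
                             hours_lost_per_day: float,
                             working_days: int = 250,
                             loaded_hourly_cost: float = 200.0) -> float:
    """Estimate the yearly cost of developer time lost to review delays.

    working_days and loaded_hourly_cost are assumed defaults;
    substitute your own team's numbers.
    """
    hours_lost_per_year = developers * hours_lost_per_day * working_days
    return hours_lost_per_year * loaded_hourly_cost


if __name__ == "__main__":
    cost = annual_review_delay_cost(developers=20, hours_lost_per_day=1)
    print(f"${cost:,.0f} per year")  # $1,000,000 per year
```

Twenty developers losing one hour a day is 5,000 hours a year, so the estimate is sensitive to the hourly rate: at $100 per hour the same lost time comes to about $500,000.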

Geographic distribution makes this worse, because time zone differences can stretch response times significantly. A developer in San Francisco waiting on a reviewer in London is separated by an eight-hour time difference, which leaves almost no overlap in standard working hours, so each small update can take a full day of back-and-forth.
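To see how little overlap there is, the sketch below counts the hours per day when two offices are both at work. It assumes a 9:00 to 17:00 local schedule and fixed whole-hour UTC offsets (daylight saving time is ignored); the schedule and offsets are illustrative assumptions.

```python
def working_hours_overlap(offset_a: int, offset_b: int,
                          start: int = 9, end: int = 17) -> int:
    """Count UTC hours in a day when both offices are in their work window.

    Offsets are fixed whole hours from UTC (an assumption: DST is ignored).
    """
    def at_work(utc_hour: int, offset: int) -> bool:
        # Convert the UTC hour to local time, wrapping around midnight.
        local_hour = (utc_hour + offset) % 24
        return start <= local_hour < end

    return sum(1 for h in range(24)
               if at_work(h, offset_a) and at_work(h, offset_b))


if __name__ == "__main__":
    print(working_hours_overlap(-8, 0))  # San Francisco vs. London: 0
    print(working_hours_overlap(-5, 0))  # New York vs. London: 3
```

With zero shared hours, every question or requested change waits for the other office's next workday, which is why a single review round between San Francisco and London tends to cost a full day.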

The mental strain is real, too. Review notifications interrupt focused work and leave developers with “attention residue,” a lingering distraction that persists after they return to their task. This fragmented focus slows individual output and can discourage ambitious changes that would invite lengthy reviews.

How to Set and Optimize Code Review Response Times

Moving from reactive to proactive review management starts with clear, measurable response time goals. Expectations provide the framework; pairing them with the right tools helps teams meet them consistently.

Benchmarks suggest a first response within 4 to 8 hours and a total review cycle of 2 to 3 days. These targets balance thorough reviews with sustained momentum. Teams meeting these goals often see better speed and satisfaction.

Key metrics to track include:

- First Response Time: Time from PR submission to initial feedback.

- Total Review Cycle Time: Duration from PR opening to merge.

- Review Round Trips: Number of feedback cycles needed for approval.

- Implementation Time: Duration spent applying reviewer changes.
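All four metrics can be derived from a handful of timestamps per PR. A minimal sketch, assuming a hypothetical PR record (the field names are illustrative, not any platform's API) and at least one feedback round; the sample timestamps loosely follow the 3 PM example earlier, with the second round and merge time invented for illustration:

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class PullRequestTimeline:
    """Hypothetical PR record; field names are illustrative only."""
    opened_at: datetime
    merged_at: datetime
    # One (feedback_posted_at, changes_pushed_at) pair per review round.
    rounds: list[tuple[datetime, datetime]] = field(default_factory=list)


def _hours(delta: timedelta) -> float:
    return delta.total_seconds() / 3600


def review_metrics(pr: PullRequestTimeline) -> dict[str, float]:
    """Compute the four review metrics; assumes at least one feedback round."""
    if not pr.rounds:
        raise ValueError("need at least one feedback round")
    return {
        "first_response_h": _hours(pr.rounds[0][0] - pr.opened_at),
        "total_cycle_h": _hours(pr.merged_at - pr.opened_at),
        "round_trips": len(pr.rounds),
        # Time between each piece of feedback and the push that addressed it.
        "implementation_h": sum(_hours(done - posted)
                                for posted, done in pr.rounds),
    }


if __name__ == "__main__":
    pr = PullRequestTimeline(
        opened_at=datetime(2024, 1, 8, 15, 0),
        merged_at=datetime(2024, 1, 10, 17, 0),
        rounds=[
            (datetime(2024, 1, 9, 15, 0), datetime(2024, 1, 9, 15, 30)),
            (datetime(2024, 1, 10, 15, 30), datetime(2024, 1, 10, 16, 0)),
        ],
    )
    print(review_metrics(pr))
```

For this sample PR, the output shows a 24-hour first response, a 50-hour total cycle, two round trips, and one hour of implementation time: only an hour of actual work spread across more than two days.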

Traditional methods, such as training reviewers or tuning notifications, offer modest gains but miss the core issue: the manual effort of applying feedback and verifying fixes. Autonomous AI tools target this directly by automating the tedious steps, turning work that can take hours into an automated pass.

Meet Gitar: Autonomous AI for Faster Code Reviews

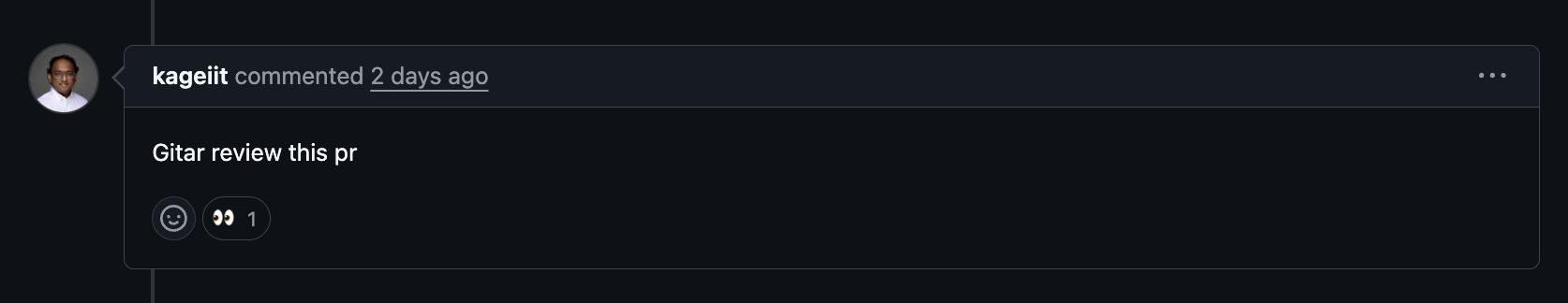

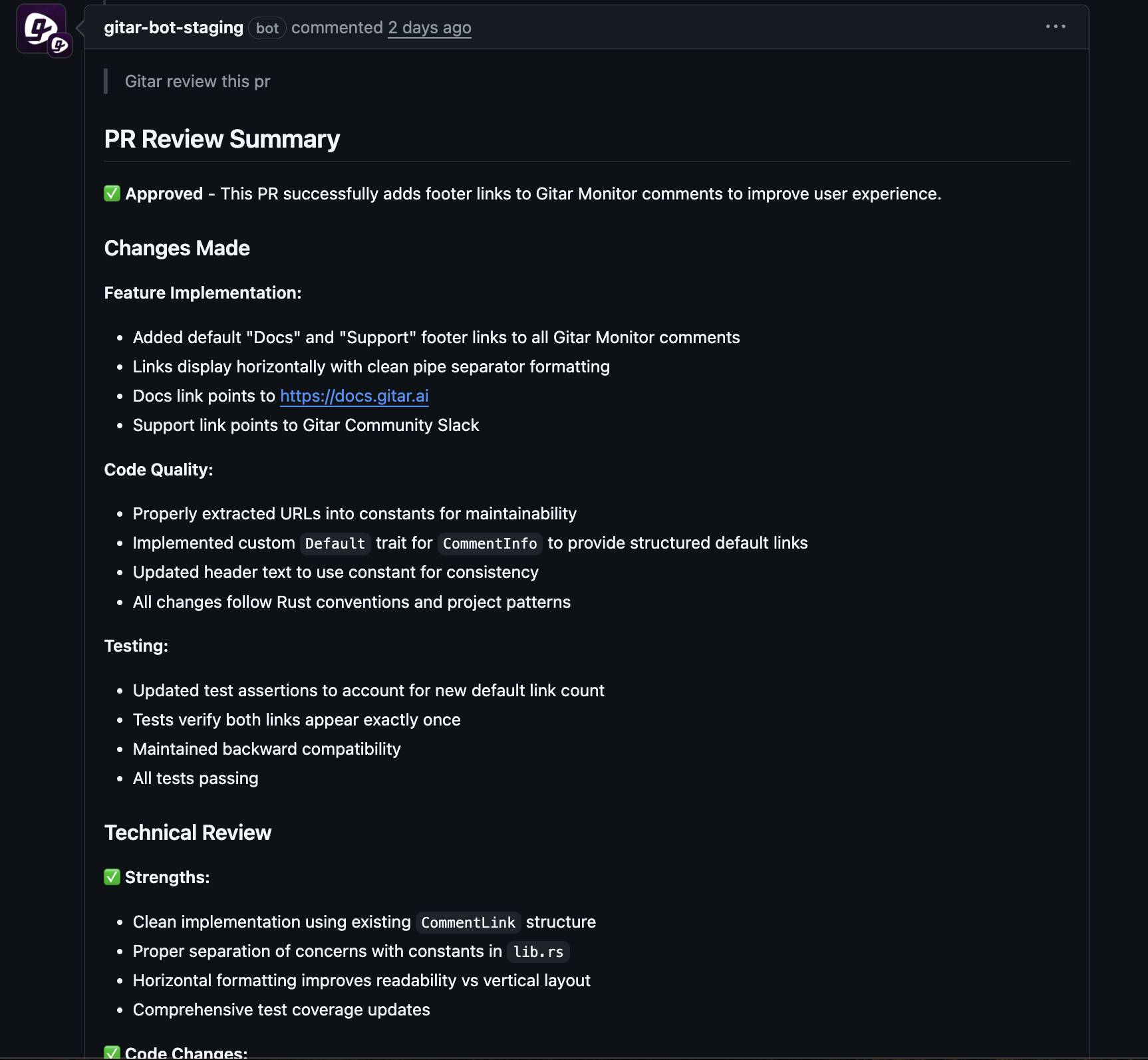

Gitar takes a different approach to code review by acting as an autonomous AI agent. Unlike typical tools that only suggest fixes, Gitar applies the changes itself and validates them, leaving PRs ready to merge.

Gitar solves key challenges in asynchronous code reviews with these features:

- Automated Feedback Application: When reviewers leave comments, Gitar generates and applies the requested changes and verifies that they work, so developers don’t have to make the updates by hand.

- Instant Initial Feedback: Gitar analyzes code right after submission and flags issues quickly, so human reviewers can focus on higher-level concerns.

- Time Zone Flexibility: For global teams, Gitar works around the clock. Feedback left in one region is implemented right away and ready when the developer next logs in.

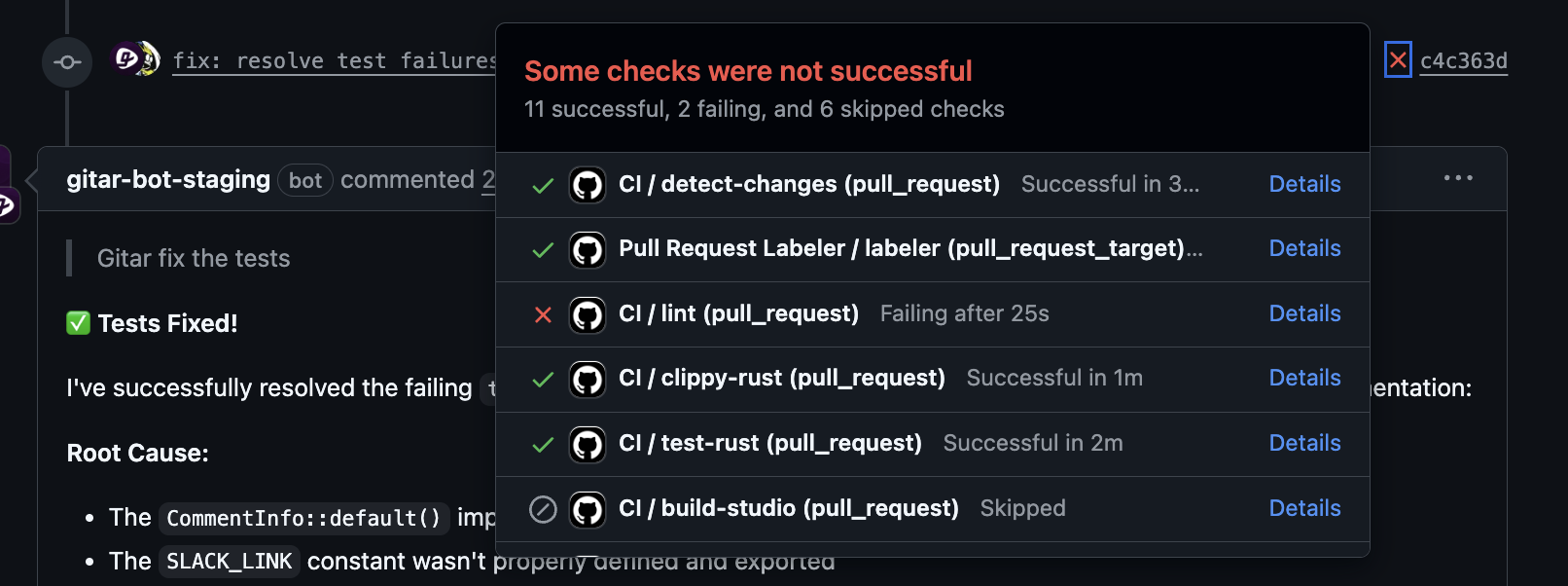

- CI Issue Resolution: Gitar fixes lint errors, test failures, and build issues automatically, ensuring every PR meets quality standards without extra effort.

Ways to Improve Response Times Using Gitar

Set Clear Response Time Goals with AI Backup

Defining service level agreements (SLAs) for response times gives reviews a reliable structure. Companies like LinkedIn track response times to keep feedback fast.

Typical SLAs include:

- First Response Time: 4 to 8 hours for initial feedback.

- Total Review Cycle Time: 24 to 48 hours from submission to merge, a tighter target than the 2 to 3 day benchmark above.

- Implementation Time: 2 to 4 hours to address comments.
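Checking measured PRs against these targets is easy to automate. A minimal sketch that uses the upper end of each range as the limit; the metric names and sample measurements are hypothetical:

```python
from collections.abc import Mapping

# Upper bounds of the SLA ranges listed above, in hours.
SLA_LIMITS_H = {
    "first_response_h": 8,
    "total_cycle_h": 48,
    "implementation_h": 4,
}


def sla_breaches(metrics: Mapping[str, float],
                 limits: Mapping[str, float] = SLA_LIMITS_H) -> list[str]:
    """Return the names of measured metrics that exceed their SLA limit."""
    return [name for name, limit in limits.items()
            if name in metrics and metrics[name] > limit]


if __name__ == "__main__":
    measured = {"first_response_h": 24.0, "total_cycle_h": 50.0,
                "implementation_h": 1.0}
    print(sla_breaches(measured))  # ['first_response_h', 'total_cycle_h']
```

Metrics missing from a PR's measurements are skipped rather than treated as breaches, so the check still works for PRs that are not yet merged.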

With Gitar, implementation time shrinks to almost nothing. Reviewers still provide the initial feedback, but Gitar applies the requested changes as soon as comments are posted, often before the developer has even seen them, which makes tight SLAs achievable.

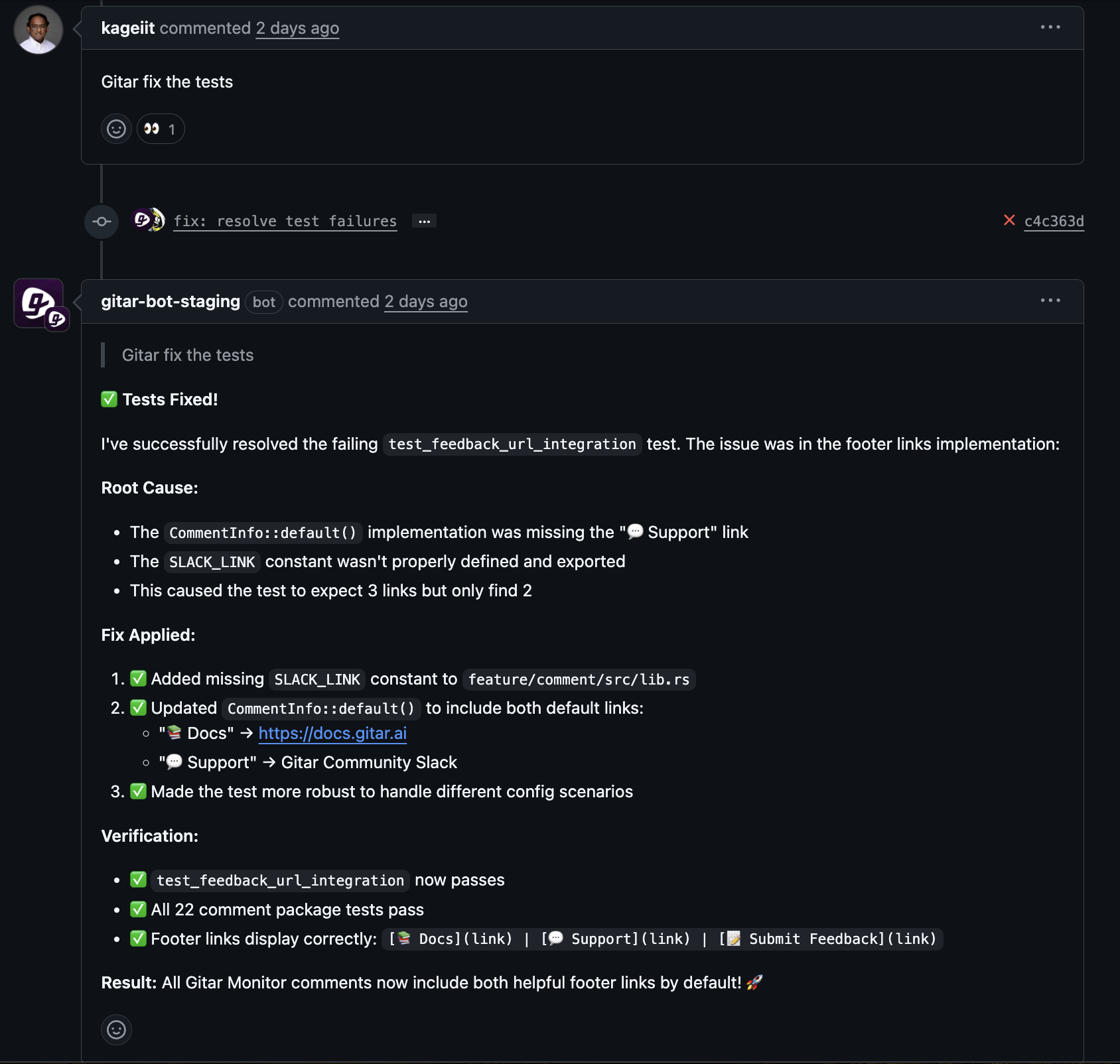

Speed Up Feedback Application and Cut Context Switching

The real cost of reviews isn’t the feedback itself but the interruption it causes. Switching tasks to address comments disrupts focus and momentum.

Standard review steps create multiple interruptions:

- Getting a review notification.

- Stopping work to read feedback.

- Making requested changes.

- Testing updates locally.

- Committing and pushing revisions.

- Waiting for CI results.

- Repeating if issues persist.

Gitar simplifies this into one automated step. It implements feedback, verifies with CI, and updates the PR. Developers return to a merge-ready PR without losing focus.

Overcome Time Zone Delays with Constant AI Action

Distributed teams struggle with delays caused by time zone differences. Feedback from a reviewer in one region might not reach a developer in another for hours, stretching reviews over days.

Gitar acts as a 24/7 team member. A reviewer in London can comment, and Gitar applies changes instantly, so a developer in Tokyo sees a completed PR upon checking in. This cuts out waiting caused by geographic gaps.

Ensure Reliable Builds with Self-Fixing CI

Implementing feedback only to fail CI checks is frustrating. It leads to extra review rounds and longer merge times as developers fix issues repeatedly.

Gitar’s self-fixing CI feature validates every change against tests, linting, and builds. Because each applied change is fully validated, it doesn’t introduce new failures, and the PR stays merge-ready throughout the process.
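This article doesn’t describe Gitar’s internals, but the general pattern behind a self-fixing CI step can be illustrated: apply a candidate fix, rerun the checks, and hand the PR back only once everything passes or an attempt limit is reached. The sketch below is a generic, hypothetical loop; `run_checks` and `propose_fix` stand in for whatever runs the pipeline and generates changes, and are not Gitar APIs.

```python
from typing import Callable

# Maps a check name (e.g. "lint", "tests", "build") to pass/fail.
CheckResults = dict[str, bool]


def fix_until_green(run_checks: Callable[[], CheckResults],
                    propose_fix: Callable[[list[str]], None],
                    max_attempts: int = 3) -> bool:
    """Alternate checks and fixes; return True once every check passes.

    run_checks and propose_fix are hypothetical hooks: the first runs the
    CI pipeline, the second edits the code to address the named failures.
    """
    for _ in range(max_attempts):
        failing = [name for name, ok in run_checks().items() if not ok]
        if not failing:
            return True
        propose_fix(failing)
    # Verify the result of the last fix attempt before giving up.
    return all(run_checks().values())


if __name__ == "__main__":
    state = {"lint": False, "tests": True, "build": True}

    def fake_checks() -> CheckResults:
        return dict(state)

    def fake_fix(failing: list[str]) -> None:
        for name in failing:
            state[name] = True  # pretend each fix resolves its failure

    print(fix_until_green(fake_checks, fake_fix))  # True
```

Capping the number of attempts keeps a fix that never converges from looping forever; when the loop returns `False`, the PR goes back to a human instead.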

Traditional vs. Gitar-Enhanced Code Reviews

| Feature/Aspect | Traditional Asynchronous Review | Gitar-Enhanced Review |
|---|---|---|
| First Response | Manual, varies from hours to days | AI-assisted, initial analysis in minutes |
| Feedback Application | Manual by developer, takes hours | Automated by Gitar, done in seconds |
| Time Zone Delays | Significant gaps in handover | Reduced by round-the-clock AI actions |
| CI Failure Fixes | Manual fixes and repeated checks | Automated fixes with validated builds |
| Time to Merge | Often days due to bottlenecks | Shortened to hours, more predictable |
| Developer Focus | Disrupted by frequent switches | Preserved, issues handled by AI |

Top-performing teams merge PRs in under 26 hours. Gitar puts that level of performance within reach of any team, regardless of size or location.

Common Questions About Gitar and Code Reviews

How do defined response time targets improve asynchronous reviews?

Clear targets, like a first response within 8 hours, create accountability and predictability. Teams can spot delays early and refine their process. Metrics-driven approaches, used by companies like LinkedIn, show that defined goals lead to faster feedback and less waiting.

Does Gitar really cut down human effort in reviews?

Yes, Gitar handles repetitive tasks like applying feedback and fixing CI issues, freeing reviewers to focus on design and logic. It speeds up the routine parts of reviews, turning hours of work into quick, automated actions while preserving human oversight for critical decisions.

Which metrics track review efficiency with Gitar?

Monitor First Response Time, Total Review Cycle Time, Review Round Trips, and Implementation Time. With Gitar, also track the rate of successful automated fixes and CI pass rates after changes. These help measure gains and highlight areas for improvement.
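The two Gitar-specific metrics need a working definition before they can be tracked. A sketch of one possible definition, using a hypothetical log record: the success rate is the share of attempted automated fixes that were actually applied, and the CI pass rate is the share of applied changes whose next CI run passed. Both the record fields and the definitions are assumptions; Gitar’s own reporting may define them differently.

```python
from dataclasses import dataclass


@dataclass
class AutomatedFix:
    """Hypothetical log entry for one attempted automated change."""
    applied: bool    # the change was generated and committed to the PR
    ci_passed: bool  # CI passed on the first run after the change


def fix_rates(fixes: list[AutomatedFix]) -> tuple[float, float]:
    """Return (fix success rate, CI pass rate among applied fixes)."""
    applied = [f for f in fixes if f.applied]
    success_rate = len(applied) / len(fixes) if fixes else 0.0
    ci_pass_rate = (sum(f.ci_passed for f in applied) / len(applied)
                    if applied else 0.0)
    return success_rate, ci_pass_rate


if __name__ == "__main__":
    log = [AutomatedFix(True, True)] * 3 + [
        AutomatedFix(True, False),
        AutomatedFix(False, False),
    ]
    print(fix_rates(log))  # (0.8, 0.75)
```

Tracking both rates over time shows whether automation is taking on more of the work (success rate) without pushing broken changes into PRs (CI pass rate).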

How does Gitar manage complex enterprise CI setups?

Gitar adapts to enterprise needs, supporting specific JDK versions, multi-SDK setups, and tools like SonarQube. It mirrors your CI pipeline so that its fixes pass all checks, including custom rules and security scans, holding them to the same quality standard as manual changes.

How does Gitar balance speed and code quality?

Gitar maintains or even boosts quality by following reviewer instructions precisely and validating changes. Teams can adjust its automation level from suggestions to full fixes with rollback options, ensuring control while gaining speed.

End Delays with Gitar’s Autonomous AI

Code review delays drain productivity; for a team of 20, the cost can approach $1 million a year as developers spend significant time on review cycles and CI failures. Simply pushing for faster human responses isn’t enough. A better approach rethinks how feedback gets applied.

Pairing defined response goals with Gitar’s AI automation transforms reviews from a hurdle to an asset. Gitar cuts manual workload, enabling ambitious timelines and creating a self-fixing environment where code moves to merge smoothly.

The benefits go beyond time savings. Developers stay focused on core tasks instead of switching contexts. Global teams collaborate without geographic delays. CI issues resolve without extra effort, making development steady and reliable.

For high-performing teams, merging PRs in under 26 hours is standard. Gitar makes this efficiency possible for every team, regardless of complexity or geographic spread.

Ready to eliminate delays? Request a Gitar demo and see self-fixing CI in action