Key Takeaways

- Flaky tests waste a significant share of developer time, increase infrastructure spend, and slow down delivery, especially as teams and CI pipelines scale in 2026.

- Quantifying flaky-test cost requires looking beyond raw debugging hours to include productivity loss, infrastructure overhead, morale impact, and production risk.

- Common tactics such as manual debugging, quarantining, and dashboards help visibility but rarely eliminate the recurring cost of CI failures.

- Autonomous CI tools that replicate your environment and apply validated fixes can reclaim hundreds of hours per quarter and improve developer experience.

- Gitar provides autonomous CI failure repair and code-review assistance so teams can automatically fix broken builds and flaky tests; install Gitar to reduce CI failure costs and ship with greater confidence.

Why Flaky Tests Create Significant Engineering Costs

Flaky tests return inconsistent results without any relevant code change. Builds fail at random, developers rerun pipelines, and teams spend time proving that nothing is actually broken.

This behavior turns a test suite into an unreliable signal. Engineering leaders then face longer feedback loops, slower decisions, and growing skepticism about automation. As organizations scale, the cumulative financial burden can reach hundreds of thousands of dollars per year, once developer time, infrastructure, and risk are included.

How To Quantify The Cost Of Flaky Tests

A structured cost model helps connect flaky tests to budget and roadmap impact. Key components include productivity loss, infrastructure use, morale costs, and risk.

Developer Productivity Loss And Opportunity Cost

Flaky tests consume a recurring share of developer time. One analysis estimated that flaky tests can consume up to 16% of a developer’s time. A five-year case study found that at least 2.5% of total productive developer time went to investigation and repair.

For a 20-person team at a loaded rate of $200 per hour, even one hour per day per developer spent on CI triage can equate to an annual productivity loss of about $1 million, assuming roughly 250 working days per year. Flaky tests also reduce development velocity, which delays features and revenue.

Infrastructure And Operational Overhead

Repeated reruns drive up compute and CI minutes. Large repositories may spend hours each day executing the same tests again to clear random failures. Creation and diagnosis costs also rise as teams manage complex test environments, sometimes requiring a dedicated platform or test-engineering staff.

Developer Morale And Cognitive Load

Unreliable CI adds constant background noise for engineers. Context-switching between feature work and unexplained failures interrupts deep work and erodes trust in the pipeline. This noise degrades cognitive focus and increases frustration, which can eventually affect retention and hiring costs.

Technical Debt And Production Risk

Teams often learn to ignore noisy tests. Genuine failures then risk being dismissed as flakes. Over time, the test suite bloats, and maintenance costs rise. An estimated 15–30% of all automated test failures are due to flakiness, which illustrates how much signal gets buried.

A Simple Financial Model For Flaky Tests

Leaders can estimate weekly cost with a basic formula:

Weekly cost = failures per week × average time wasted per failure × number of developers affected × blended hourly cost

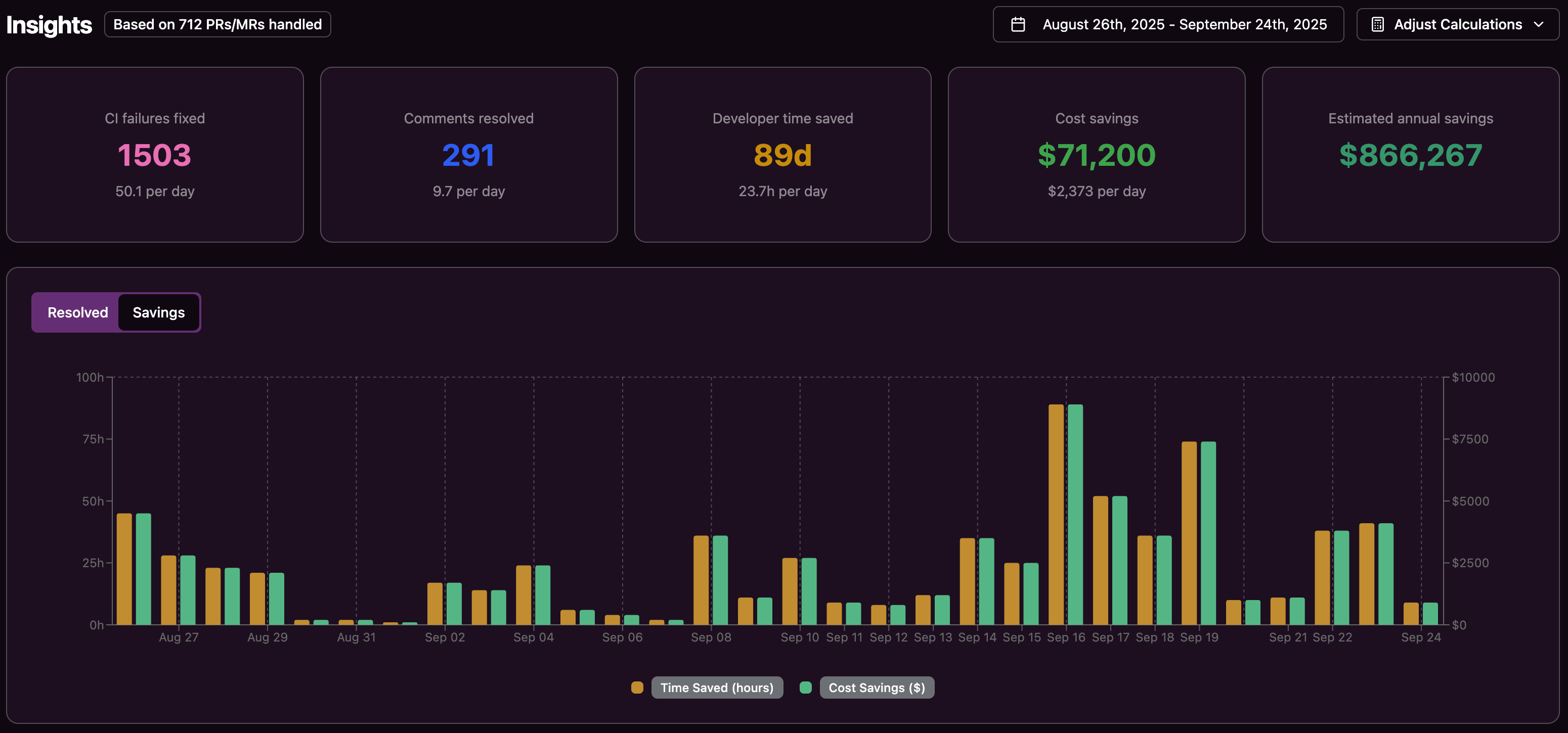

For larger engineering organizations, quarterly losses can range from thousands of dollars to more than $70,000, even before considering production incidents or delayed roadmap items.
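The formula above can be sketched in a few lines of Python. This is a minimal illustration, not a benchmark: the names `FlakyCostInputs`, `weekly_cost`, and `quarterly_cost` are hypothetical, the 13-week quarter is an assumption, and the example inputs are placeholders rather than measured data.

```python
from dataclasses import dataclass

WEEKS_PER_QUARTER = 13  # assumption: 52 weeks / 4 quarters


@dataclass(frozen=True)
class FlakyCostInputs:
    failures_per_week: float         # flaky CI failures observed per week
    hours_wasted_per_failure: float  # average hours each affected developer loses per failure
    developers_affected: int         # developers interrupted by each failure
    blended_hourly_cost: float       # loaded cost per developer hour, in dollars


def weekly_cost(inputs: FlakyCostInputs) -> float:
    """Multiply the four factors from the formula to get dollars per week."""
    return (
        inputs.failures_per_week
        * inputs.hours_wasted_per_failure
        * inputs.developers_affected
        * inputs.blended_hourly_cost
    )


def quarterly_cost(inputs: FlakyCostInputs) -> float:
    """Scale the weekly figure to a quarter."""
    return weekly_cost(inputs) * WEEKS_PER_QUARTER


# Illustrative placeholder inputs: 10 failures per week, 30 minutes lost each,
# 2 developers affected per failure, $100 blended hourly cost.
example = FlakyCostInputs(
    failures_per_week=10,
    hours_wasted_per_failure=0.5,
    developers_affected=2,
    blended_hourly_cost=100.0,
)

if __name__ == "__main__":
    print(f"Weekly cost:    ${weekly_cost(example):,.0f}")
    print(f"Quarterly cost: ${quarterly_cost(example):,.0f}")
```

Replacing the placeholder inputs with numbers from your own CI history gives a first-order estimate. It does not capture production incidents or delayed roadmap items.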

Install Gitar to reduce time spent on failed builds and reclaim developer hours from flaky tests.

Why Traditional Flaky-Test Tactics Often Fall Short

Most teams already invest in manual debugging, quarantining, and dashboards. These steps help visibility but usually leave the core cost drivers in place.

Manual Debugging And Quarantining

Manual triage and debugging remain necessary but scale poorly. Non-deterministic failures demand repeated runs, log review, and test refactoring. Industry discussions describe significant effort spent simply keeping pipelines stable. Quarantining unreliable tests moves them out of the critical path without fixing root causes, which creates a backlog of technical debt.

Observability, Dashboards, And Dedicated Teams

Analytics tools highlight which tests are flaky and how often they fail. These insights still require a person to design a fix, write a patch, and wait for another CI cycle. Some organizations eventually create dedicated flaky-test teams, which reduces noise but adds ongoing headcount and manual effort.
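As a rough illustration of the analysis such tools perform, the sketch below flags a test as flaky when it both passes and fails on the same commit, which matches the definition of inconsistent results without a code change. The record shape `(test_name, commit_sha, passed)` and the function names are assumptions made for this example, not the data model of any particular product.

```python
from collections import defaultdict


def find_flaky_tests(runs):
    """Return sorted names of tests that both passed and failed on one commit.

    `runs` is a list of (test_name, commit_sha, passed) tuples.
    """
    outcomes = defaultdict(set)
    for test_name, commit_sha, passed in runs:
        outcomes[(test_name, commit_sha)].add(passed)
    # Two distinct outcomes (True and False) for the same code means flaky.
    flaky = {test for (test, _sha), seen in outcomes.items() if len(seen) == 2}
    return sorted(flaky)


def flaky_failure_share(runs):
    """Fraction of all failed runs that belong to tests flagged as flaky.

    Simplification: every failure of a flagged test counts as flaky.
    """
    flaky = set(find_flaky_tests(runs))
    failures = [test for test, _sha, passed in runs if not passed]
    if not failures:
        return 0.0
    return sum(1 for test in failures if test in flaky) / len(failures)


# Illustrative records, not real CI data.
sample_runs = [
    ("test_login", "abc123", True),
    ("test_login", "abc123", False),     # same commit, different result: flaky
    ("test_checkout", "abc123", False),
    ("test_checkout", "abc123", False),  # consistently failing: likely a real bug
    ("test_search", "abc123", True),
]

if __name__ == "__main__":
    print(find_flaky_tests(sample_runs))
    print(round(flaky_failure_share(sample_runs), 2))
```

Even with this kind of report in hand, someone still has to design and ship the fix, which is the gap described above.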

AI Suggestion Engines

Modern AI code-review tools propose improvements and fixes. They rarely apply those changes end-to-end. Developers still need to interpret suggestions, edit code, push commits, and wait for a green build. Context-switching remains, and CI failures continue to block flow.

How Gitar Uses Autonomous AI To Reduce CI Failure Costs

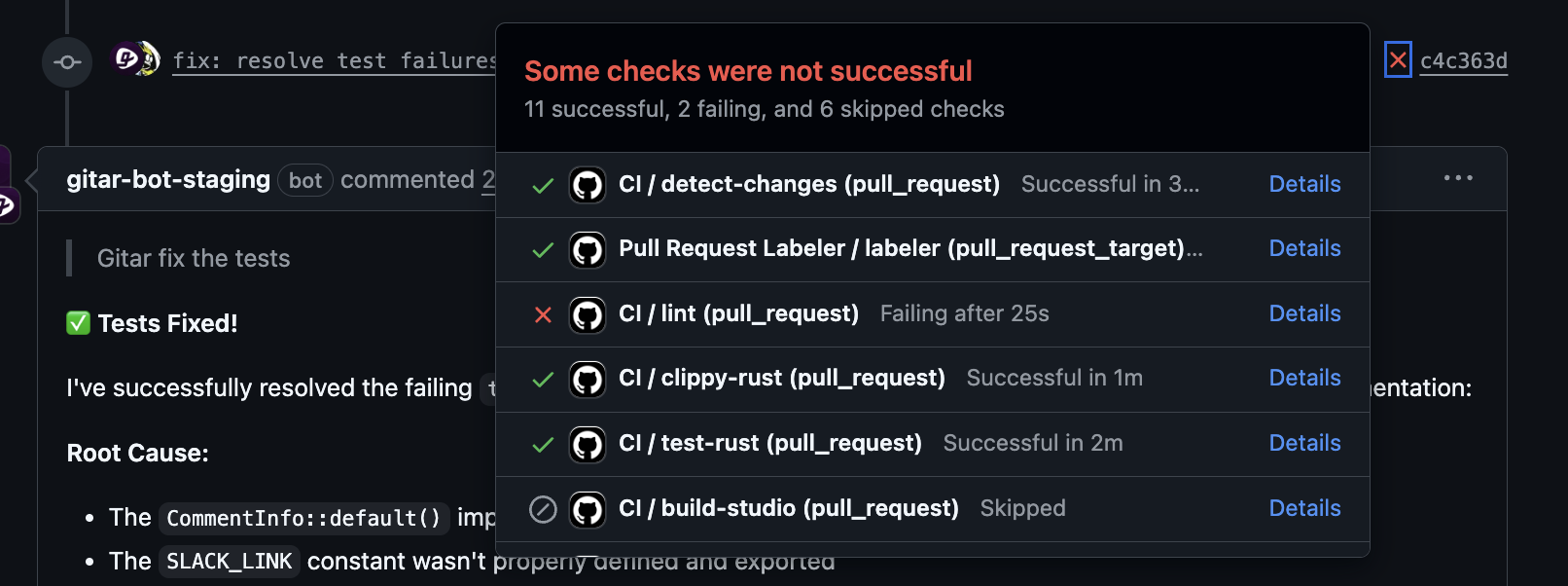

Gitar focuses on autonomous CI healing rather than suggestions alone. The system observes failures, reproduces them in a replica of your environment, and applies validated fixes back to the pull request branch.

End-To-End Autonomous Fixing

Gitar analyzes CI logs, identifies root causes, generates the necessary code change, applies it, and pushes a commit. The platform covers common failures such as:

- Lint and formatting errors

- Unit and integration test failures, including snapshot updates and assertion fixes

- Build issues such as dependency mismatches or misconfigured scripts

Accurate Environment Replication

Gitar emulates complex CI workflows, including specific JDK versions, multiple SDKs, and third-party tools such as SonarQube, Snyk, and snapshot testing frameworks. This replication helps ensure that proposed fixes actually pass in your pipelines rather than only on a generic model of the code.

Configurable Trust Model

Teams can start in a conservative mode, where Gitar posts suggested fixes for a one-click merge. As confidence grows, organizations can shift to more autonomous modes that commit directly, with clear rollback options if needed.

Cross-Platform Support And Code Review Assistance

Gitar integrates with GitHub Actions, GitLab CI, CircleCI, Buildkite, and other common CI systems. Its agent architecture handles concurrent jobs and asynchronous events so that fixes arrive quickly.

The platform also supports human reviewers. A reviewer can tag Gitar in a pull request to request a code review or ask it to implement a specific change. This reduces latency for distributed teams where time zones often delay review cycles.

Implementing Autonomous CI Safely In Your Organization

Successful adoption of autonomous CI requires attention to readiness, rollout, and measurement.

Assessing Readiness And Aligning Stakeholders

Teams benefit from a baseline assessment of CI maturity, test stability, and appetite for automation. Engineering managers, staff engineers, and platform or DevOps teams should share a clear view of current CI pain and expected outcomes.

Positioning Gitar as a tool that augments developers and removes repetitive work helps address concerns about control and code quality.

Phased Rollout For Confidence

A simple three-phase rollout often works well:

- Phase 1: Install Gitar as a GitHub App, connect it to existing CI, and run in suggestion-only mode on a small set of repositories.

- Phase 2: Expand coverage after the first successful fixes and move selected projects to auto-commit for low-risk changes.

- Phase 3: Enable advanced workflows such as automated handling of review comments and multi-repository support, based on team feedback and metrics.

Measuring ROI From Reduced CI Failure Costs

Leaders can track impact using metrics such as:

- Reduction in CI build failures and reruns

- Decrease in average PR merge time

- Hours of developer time reclaimed from CI triage and code review overhead

- Developer satisfaction scores related to tooling and workflow

For a 20-developer team at a loaded rate of $200 per hour, one wasted hour per day per developer represents about $1 million per year in lost productivity. If Gitar eliminates even half of that time through autonomous fixes and review assistance, the organization gains roughly $500,000 in annual value.
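The arithmetic behind these figures can be checked with a short sketch. The 250 working days per year is an assumption chosen because it reproduces the roughly $1 million figure, and the function names are illustrative.

```python
WORKING_DAYS_PER_YEAR = 250  # assumption: about 50 working weeks of 5 days


def annual_triage_cost(developers, hours_per_day, hourly_rate,
                       working_days=WORKING_DAYS_PER_YEAR):
    """Annual cost of time spent on CI triage across the team, in dollars."""
    return developers * hours_per_day * hourly_rate * working_days


def annual_value_reclaimed(annual_cost, fraction_eliminated):
    """Value recovered if a given fraction of that time is eliminated."""
    return annual_cost * fraction_eliminated


# Inputs from the example above: 20 developers, 1 hour per day, $200 per hour.
cost = annual_triage_cost(developers=20, hours_per_day=1, hourly_rate=200)
value = annual_value_reclaimed(cost, fraction_eliminated=0.5)

if __name__ == "__main__":
    print(f"Annual triage cost: ${cost:,.0f}")
    print(f"Value reclaimed:    ${value:,.0f}")
```

Changing `fraction_eliminated` shows how sensitive the result is to how much triage time is actually removed, so it helps to measure that fraction during the rollout instead of assuming it.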

Gitar Compared With Common CI Failure Management Approaches

| Aspect | Gitar (Autonomous CI Healing) | Traditional CI (Manual) | AI Code Reviewers (Suggestions) |
| --- | --- | --- | --- |
| CI failure resolution | Analyzes failures, generates fixes, applies changes, and validates builds automatically. | Requires developers to investigate, patch, commit, and rerun pipelines. | Proposes fixes but depends on manual implementation and validation. |
| Developer productivity | Reduces interruptions and preserves flow by handling many failures autonomously. | Consumes significant time in debugging, reruns, and context-switching. | Still interrupts the flow for implementation and follow-up. |
| Environment context | Replicates CI environments, including SDKs, dependencies, and third-party tools. | Varies by team; often difficult to reproduce issues locally. | Focuses mainly on code, with limited insight into the full CI context. |
| Primary value | Reclaims developer time and accelerates time to merge by turning CI into a self-healing system. | Provides a functional pipeline when tests pass, but relies on manual work when they do not. | Improves review quality and summaries but does not remove CI toil. |

Frequently Asked Questions About The Cost Of Flaky Tests

How do flaky tests impact a company’s bottom line beyond developer time?

Flaky tests delay feature releases, which pushes back revenue and weakens competitive position. They increase infrastructure costs through repeated CI runs and expand the chance that real defects slip into production, which can cause incidents and reputational damage. Over time, they also reduce team morale and make it harder to execute ambitious roadmaps.

We already use AI code review tools. How is Gitar different in addressing CI failure costs?

Typical AI code reviewers highlight issues and suggest patches. Gitar focuses on autonomously fixing CI failures by generating, applying, and validating changes in your pipelines. This approach reduces context-switching and manual effort, so engineers spend more time on feature work and less on CI maintenance.

What organizational patterns keep flaky-test costs high?

Common patterns include accepting flakes as normal, failing to assign ownership for test stability, and not tracking the time spent on CI triage. These behaviors allow both the technical and psychological costs to grow without explicit budget visibility.

How does Gitar ensure its automated fixes are reliable?

Gitar replicates the CI environment, including language runtimes, dependencies, and integrated tools such as SonarQube and Snyk. Fixes are validated through the full pipeline before they merge, and teams can choose conservative modes that require explicit approval before a change lands.

Conclusion: Turning Flaky-Test Cost Into A Managed Line Item

Flaky tests represent a measurable drain on engineering productivity, infrastructure budget, and team morale. Treating them as a normal inconvenience hides the real financial impact and masks the risk.

Autonomous CI healing with tools like Gitar allows teams to treat flaky tests and routine CI failures as a controllable operational cost. By reclaiming developer hours and stabilizing pipelines, organizations in 2026 can focus more energy on product innovation and less on repetitive debugging. Install Gitar to start reducing flaky-test overhead and improve the reliability of your CI pipeline.