Key Takeaways

- Pricing for integration testing platforms in 2026 often looks simple on the surface but hides costs tied to usage, contracts, and required services.

- Total cost of ownership depends as much on developer productivity and maintenance effort as on subscription fees.

- Common pricing models, including tiered, consumption-based, and managed services, can create cost spikes if teams cannot accurately predict usage.

- Reducing developer toil from CI failures and manual fixes usually delivers greater ROI than marginal savings on platform licenses.

- Gitar provides autonomous CI fixes that cut developer time spent on broken builds and code review loops, which improves ROI from existing CI investments; see how Gitar works in your environment.

Why Integration Testing Platform Pricing Comparison Matters in 2026

The Evolving Landscape of Integration Testing

Integration testing platforms now rely heavily on automation, parallelization, and advanced analytics. Many automated backend testing tools use tiered subscriptions that vary by parallel runs, test volume limits, and analytics access. Distributed teams and AI-assisted coding increase code volume, which raises the number of pull requests, tests, and CI runs that platforms must handle.

Legacy pricing models often assume predictable workloads. When teams expand automation, they may find that costs scale faster than expected and that critical features, such as advanced debugging or compliance controls, sit behind higher-priced tiers.

Beyond the Sticker Price: Total Cost of Ownership

Total cost of ownership includes more than subscription fees. Implementation effort, training, maintenance, and support all add to spend. The highest cost usually comes from developer productivity loss when CI failures, slow runs, and manual fixes interrupt focus.

For teams of 20 or more developers, time lost to CI failures and code review delays can reach seven figures annually. Decisions that focus only on license price miss the opportunity to reclaim that time by reducing toil and context switching.
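
To make this kind of estimate concrete, the arithmetic can be sketched as below. This is a minimal sketch, not a benchmark: the function name and every input (hours lost per week, loaded hourly rate, working weeks) are hypothetical placeholders to be replaced with a team's own measurements.

```python
def annual_toil_cost(developers, hours_lost_per_week, loaded_hourly_rate, working_weeks=46):
    """Estimate the yearly cost of developer time lost to CI failures and review delays."""
    return developers * hours_lost_per_week * working_weeks * loaded_hourly_rate


# Hypothetical inputs: 20 developers, 5 hours lost per week each, $100 loaded hourly rate.
estimate = annual_toil_cost(developers=20, hours_lost_per_week=5, loaded_hourly_rate=100)
print(f"${estimate:,.0f} per year")
```

The result scales linearly with each input, so the estimate is only as good as the measured hours and the rate assumed for them.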

Start reducing CI-related developer toil with Gitar

Common Integration Testing Platform Pricing Models

Subscription Tiers and Feature-Based Pricing

Most platforms use tiered plans, such as basic, pro, and enterprise. These plans often combine limits on users, test volume, parallel runs, and premium features. Lower tiers may restrict concurrency or observability, while upper tiers unlock advanced analytics, security features, and priority support.

Teams that start on lower tiers commonly face upgrades once they need more environments, better debugging tools, or compliance features. Mid-contract upgrades can significantly raise annual spend if they were not planned during the initial evaluation.
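
One way to plan for upgrades during evaluation is to check which tier fits next year's needs, not only today's. The sketch below assumes a made-up three-tier catalog; the tier names, prices, limits, and feature labels are illustrative and do not describe any real vendor.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    annual_price: int
    max_parallel_runs: int
    features: frozenset


def cheapest_fitting_tier(tiers, needed_parallel_runs, needed_features):
    """Return the lowest-priced tier that meets the concurrency and feature needs, or None."""
    fitting = [
        t for t in tiers
        if t.max_parallel_runs >= needed_parallel_runs and needed_features <= t.features
    ]
    return min(fitting, key=lambda t: t.annual_price, default=None)


# Hypothetical catalog, for illustration only.
TIERS = [
    Tier("basic", 6_000, 2, frozenset()),
    Tier("pro", 24_000, 10, frozenset({"advanced_debugging"})),
    Tier("enterprise", 90_000, 50, frozenset({"advanced_debugging", "compliance"})),
]

today = cheapest_fitting_tier(TIERS, 2, frozenset())
next_year = cheapest_fitting_tier(TIERS, 8, frozenset({"compliance"}))
print(today.name, "->", next_year.name)
```

In this made-up catalog, a single compliance requirement moves the team from the lowest tier to the highest, which is the kind of jump worth pricing before signing.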

Consumption-Based Pricing: Predictability vs. Flexibility

Consumption-based models charge by metrics such as tests executed, data processed, or CI minutes. One example is a fixed price per gigabyte processed. These models can suit stable workloads, but they often produce unpredictable invoices for teams with spiky or fast-growing CI usage.

A single release with widespread test failures can trigger multiple reruns and retries, multiplying usage charges. Finance and engineering leaders then struggle to forecast monthly costs with confidence.
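
The multiplying effect of reruns can be sketched with a simple model. It assumes that each failed run is rerun a fixed number of times and that reruns are billed at full length; the function name, per-minute price, and failure rate are hypothetical.

```python
def monthly_ci_cost(runs, minutes_per_run, price_per_minute,
                    failure_rate=0.0, reruns_per_failure=0):
    """Estimate monthly CI spend when a share of runs fail and are rerun."""
    total_runs = runs * (1 + failure_rate * reruns_per_failure)
    return total_runs * minutes_per_run * price_per_minute


# Hypothetical month: 4,000 runs of 12 minutes each at $0.008 per minute.
baseline = monthly_ci_cost(4000, 12, 0.008)
bad_month = monthly_ci_cost(4000, 12, 0.008, failure_rate=0.3, reruns_per_failure=2)
print(f"baseline ${baseline:,.2f}, bad month ${bad_month:,.2f}")
```

With these placeholder inputs, a 30 percent failure rate and two reruns per failure add 60 percent to the bill without any increase in planned work.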

Transaction-Based and API Call Pricing

Some platforms bill per API call, connector, or integration transaction. API and integration services frequently mix flat-rate plans, usage-based charges, and custom enterprise pricing. This model can work for small, predictable integrations but becomes complex when testing spans many services, environments, and data flows.

Tracking and predicting API consumption across multiple test suites and pipelines requires careful monitoring. Without this discipline, teams can lose visibility into unit economics and encounter unplanned overages.
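
A lightweight form of that monitoring is to tally calls per test suite and compare the total against the plan's allowance. The sketch below assumes overages are billed in whole blocks of 1,000 calls; the suite names, allowance, and overage price are invented for illustration.

```python
from collections import Counter


def tally_calls(events):
    """Sum API calls per test suite from (suite_name, call_count) records."""
    usage = Counter()
    for suite, calls in events:
        usage[suite] += calls
    return usage


def overage_charge(usage, included_calls, price_per_1000_overage):
    """Charge for calls beyond the allowance, billed per started block of 1,000 (assumed)."""
    total = sum(usage.values())
    extra = max(0, total - included_calls)
    blocks = -(-extra // 1000)  # ceiling division
    return blocks * price_per_1000_overage


# Hypothetical usage records for one billing period.
events = [("checkout", 400_000), ("search", 350_000), ("checkout", 300_000)]
usage = tally_calls(events)
charge = overage_charge(usage, included_calls=1_000_000, price_per_1000_overage=2)
print(usage.most_common(1), charge)
```

Keeping the tally per suite shows which pipelines drive the overage, which a single account-level total would hide.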

Managed Services and Custom Enterprise Pricing

Many enterprise offerings combine software access with managed services. Vendors often bundle consulting, custom connectors, and support into hybrid pricing that mixes platform and service fees. These packages can reduce initial setup effort but also create ongoing dependencies.

Custom contracts may include minimum spend, auto-renewal terms, or limits on usage profiles. Without clear visibility into each component, organizations can underestimate the true long-term cost.

Hidden Costs and Strategic Pitfalls

Vendor Lock-In, Complex Contracts, and Add-On Fees

Common hidden costs include mandatory services, extra environment fees, and separate charges for governance or API management modules. As teams adopt proprietary features, migration becomes harder, which increases switching costs over time.

Contracts sometimes include automatic renewals, step-up pricing, or penalties for reducing usage. Add-on fees for capabilities such as compliance reporting, enhanced security, and higher support tiers can double the effective platform cost if not scoped early.
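
The combined effect of step-up pricing and add-ons over a multi-year term can be sketched as follows. The model assumes the step-up compounds yearly on the base fee only and that add-on fees stay flat; all figures are hypothetical.

```python
def contract_total(base_annual, years, step_up_rate, addons_annual=()):
    """Total contract cost with a compounding yearly step-up on the base fee plus flat add-ons."""
    total = 0.0
    for year in range(years):
        platform_fee = base_annual * (1 + step_up_rate) ** year
        total += platform_fee + sum(addons_annual)
    return total


# Hypothetical 3-year deal: $100k base, 10% yearly step-up, two add-on modules.
list_price_view = 100_000 * 3
with_terms = contract_total(100_000, 3, 0.10, addons_annual=(20_000, 15_000))
print(f"list-price view ${list_price_view:,}, with terms ${with_terms:,.0f}")
```

Comparing the two numbers before signing shows how far the effective cost drifts from the quoted base price.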

Integration, Implementation, and Operational Overhead

Implementation effort ranges from simple configuration to extensive custom integration. DIY AI tools may require prompt engineering and in-house orchestration, while more complete solutions aim for rapid setup with minimal scripting.

Ongoing operations include platform administration, troubleshooting CI issues, and updating integrations as the stack changes. These tasks translate directly into developer and DevOps hours that should be factored into TCO.
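
A rough way to fold those hours into TCO is to amortize one-time implementation cost over the contract term and price recurring operations at a loaded hourly rate. The function and its inputs below are illustrative assumptions, not a standard formula.

```python
def annual_tco(subscription, implementation_one_time, contract_years,
               ops_hours_per_month, loaded_hourly_rate, training=0):
    """Yearly total cost: subscription, amortized implementation, operations time, training."""
    amortized_implementation = implementation_one_time / contract_years
    operations = ops_hours_per_month * 12 * loaded_hourly_rate
    return subscription + amortized_implementation + operations + training


# Hypothetical inputs: $24k subscription, $30k setup over 3 years, 20 ops hours per month.
tco = annual_tco(24_000, 30_000, 3, ops_hours_per_month=20, loaded_hourly_rate=100)
print(f"${tco:,.0f} per year")
```

In this example the operations hours cost as much per year as the subscription itself, which is why they belong in the comparison.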

The Cost of Developer Toil and Inefficiency

The largest hidden expense usually arises from developer toil. Complex licensing and advanced functionality increase the importance of modeling different usage scenarios, yet many teams still overlook the time engineers spend diagnosing failures and re-running tests.

Each context switch from feature work to CI troubleshooting interrupts a developer's flow. Suggestion-only AI tools still depend on engineers to apply and validate fixes, so the main productivity drag remains.

Explore how Gitar reduces CI failures and manual debugging

Gitar: Autonomous CI Fixes and a Different ROI Model

From Suggestions to Applied Fixes

Gitar focuses on fixing CI failures rather than only suggesting changes. The platform identifies the cause of a failure, applies a candidate fix, runs the full CI workflow, and surfaces a passing pull request for review. Developers then review and merge instead of spending cycles on diagnosis and patching.

This model removes much of the manual loop of “investigate, experiment, rerun CI, repeat” that slows teams and delays releases.

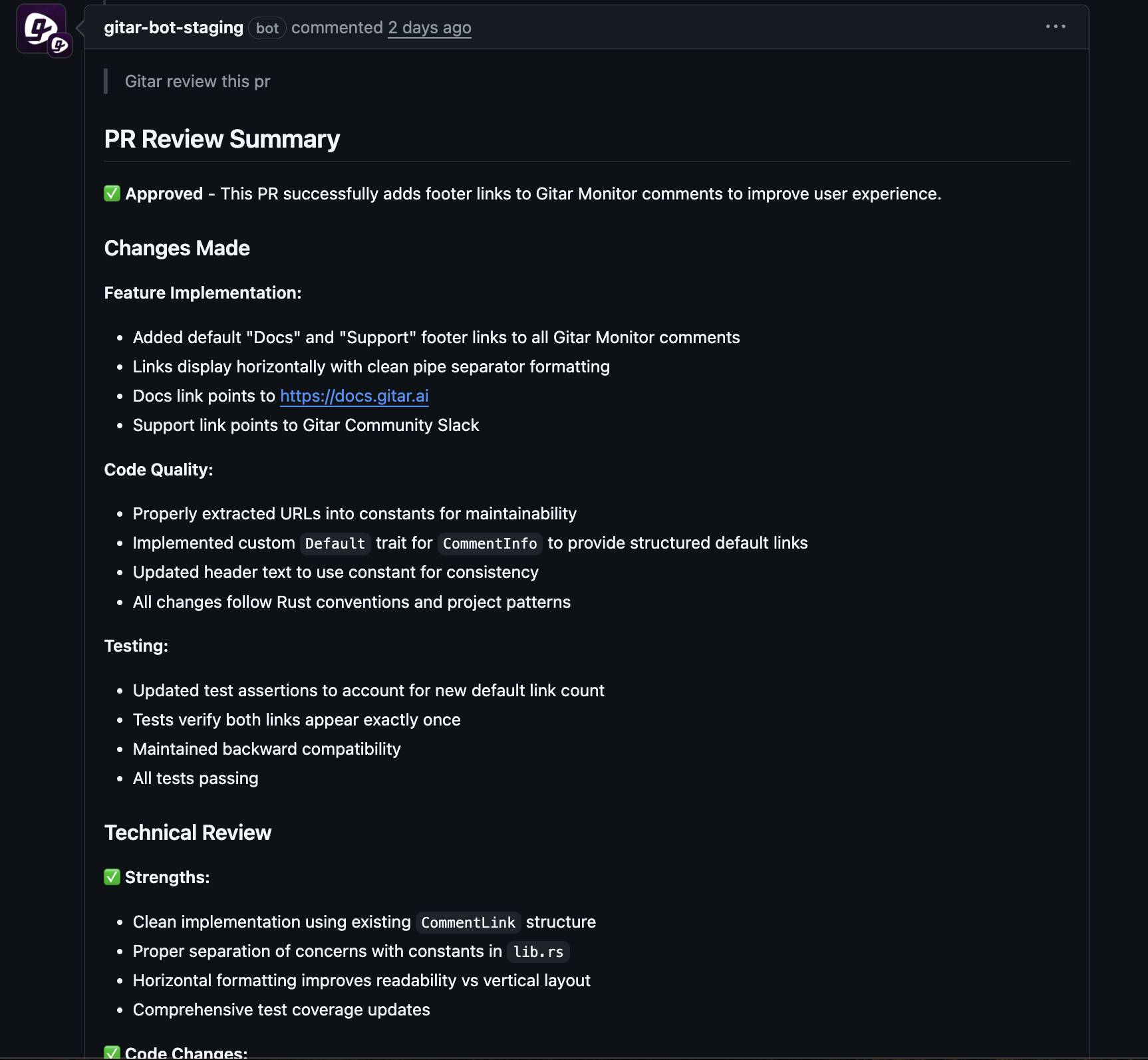

How Gitar Delivers Measurable Value

Gitar centers pricing and value on reclaimed developer time and improved throughput. For a 20-person engineering team, savings can approach seven figures per year by reducing time spent on CI failures and extended review cycles. Automating this work can offset the upfront investment through lower manual effort and faster delivery.

The platform shortens time-to-merge by automatically addressing CI issues and code review comments. Distributed teams benefit in particular when fixes land between time zones without waiting for the original author.

Gitar also reduces wasted CI minutes by cutting reruns and exploratory debugging jobs, which can lower infrastructure costs in high-volume environments.

Key Differentiators of Gitar’s Autonomous CI Platform

Gitar replicates full build and test environments, including language versions, dependency graphs, security scans, and snapshot tests. This approach helps ensure that applied fixes work in real-world enterprise pipelines rather than in simplified sandboxes.

Teams can configure trust levels, from suggestion-only modes to auto-commit settings with human review. Support for multiple CI systems, such as GitHub Actions, GitLab CI, CircleCI, and Buildkite, suits organizations with mixed tooling while keeping developers in the loop for oversight.

Integration Testing Platform Pricing: Gitar vs. Traditional Tools

The table below summarizes how Gitar compares with traditional or suggestion-based integration testing platforms across core dimensions that influence pricing and ROI.

| Feature / Metric | Traditional / Suggestion-Based Platforms | Gitar (Autonomous CI Fixes) |
| --- | --- | --- |
| Primary function | Analyzes code and suggests fixes | Finds failures, applies fixes, validates, and proposes merge |
| Manual effort | High, developers implement and validate suggestions | Low, developers review and merge prepared fixes |
| CI time consumed | Often high due to repeated failed runs and retries | Reduced through targeted fixes and fewer reruns |
| Impact on developer flow | Frequent context switches for debugging | Fewer interruptions because fixes run in the background |
| Time-to-merge | Extended by manual review and fix cycles | Shortened through automated resolution of CI and review feedback |
| Primary cost driver | Users, usage, feature tiers, and services | Value tied to developer productivity and velocity |
| Hidden costs | Developer toil, delays, and contract constraints | Focused on measurable productivity gains and CI efficiency |

Evaluate Gitar’s autonomous CI fixes for your team

Conclusion: Improving Integration Testing ROI with Gitar

Integration testing platform choices in 2026 require more than a comparison of list prices. Tiered, usage-based, and service-heavy models can mask long-term costs while leaving the largest expense, developer time, largely unaddressed.

Gitar changes the equation by reducing CI failures, shortening time-to-merge, and limiting manual debugging work. These outcomes directly support engineering leaders who prioritize predictable costs, faster delivery, and lower operational friction.

Organizations that move from suggestion-only tools to autonomous CI fixes position their teams for higher throughput and more reliable delivery. See how Gitar fits into your integration testing and CI strategy.

Frequently Asked Questions to Inform Your Pricing Comparison

How do traditional integration testing platforms typically manage their pricing, and what are their common limitations?

Traditional platforms usually combine tiered subscriptions with usage caps and, in some cases, consumption-based billing. Limitations include cost spikes when workloads grow, separate pricing for advanced features, and limited consideration of the time engineers spend implementing suggestions or debugging failures.

What are the primary hidden costs that engineering leaders should consider when evaluating integration testing platforms in 2026?

Key hidden costs include required professional services, extra environment fees, transaction or API-based overages, and separate charges for modules such as API management or governance. Time lost to context switching and manual debugging, along with vendor lock-in from complex contracts, can significantly increase the total cost of ownership.

How does a platform like Gitar, focused on autonomous CI fixes, change the pricing and ROI conversation compared to suggestion-based tools?

Gitar changes the focus from license metrics to outcomes by delivering applied fixes that pass CI, rather than only suggestions. This approach reduces developer toil, cuts CI reruns, and accelerates time-to-merge, so the return on investment reflects productivity gains rather than just lower tooling costs.

Our CI setup is unique and complex; how can a platform guarantee effective fixes in such an environment?

Gitar runs fixes in replicated environments that mirror real pipelines, including language versions, dependencies, scans, and snapshot tests. This design improves the reliability of fixes in complex setups and reduces the risk of configuration-specific issues that generic tools may miss.

What should we expect in terms of implementation timeline and ongoing maintenance costs when comparing integration testing platforms?

Implementation timelines range from hours for lightweight, integration-focused tools to weeks or months for heavily customized platforms. Ongoing costs include administration, integration updates, training, and troubleshooting. Platforms such as Gitar that emphasize autonomous operation and environment replication aim to keep ongoing maintenance lower by reducing manual intervention in day-to-day CI work.