Key Takeaways

- GitLab CI reliability in 2026 is a core engineering concern that directly affects delivery speed, developer experience, and customer trust.

- Pipeline design, flaky tests, and dependency issues remain the most frequent causes of GitLab CI failures, but teams can reduce them with focused configuration and analytics.

- Clear pipeline structure, fast feedback, secure configuration, and data-driven tuning create a more resilient GitLab CI foundation.

- Autonomous remediation on top of GitLab CI, rather than manual debugging alone, helps teams cut context-switching and shorten time-to-merge.

- Gitar adds an autonomous fixing layer on top of GitLab CI; teams can install Gitar to automatically diagnose and fix broken builds.

Protect Delivery Timelines With Reliable GitLab CI

GitLab CI reliability becomes a strategic issue once a team ships software at scale. Pipeline failures do more than slow individual developers; they delay releases, increase risk, and erode trust with stakeholders.

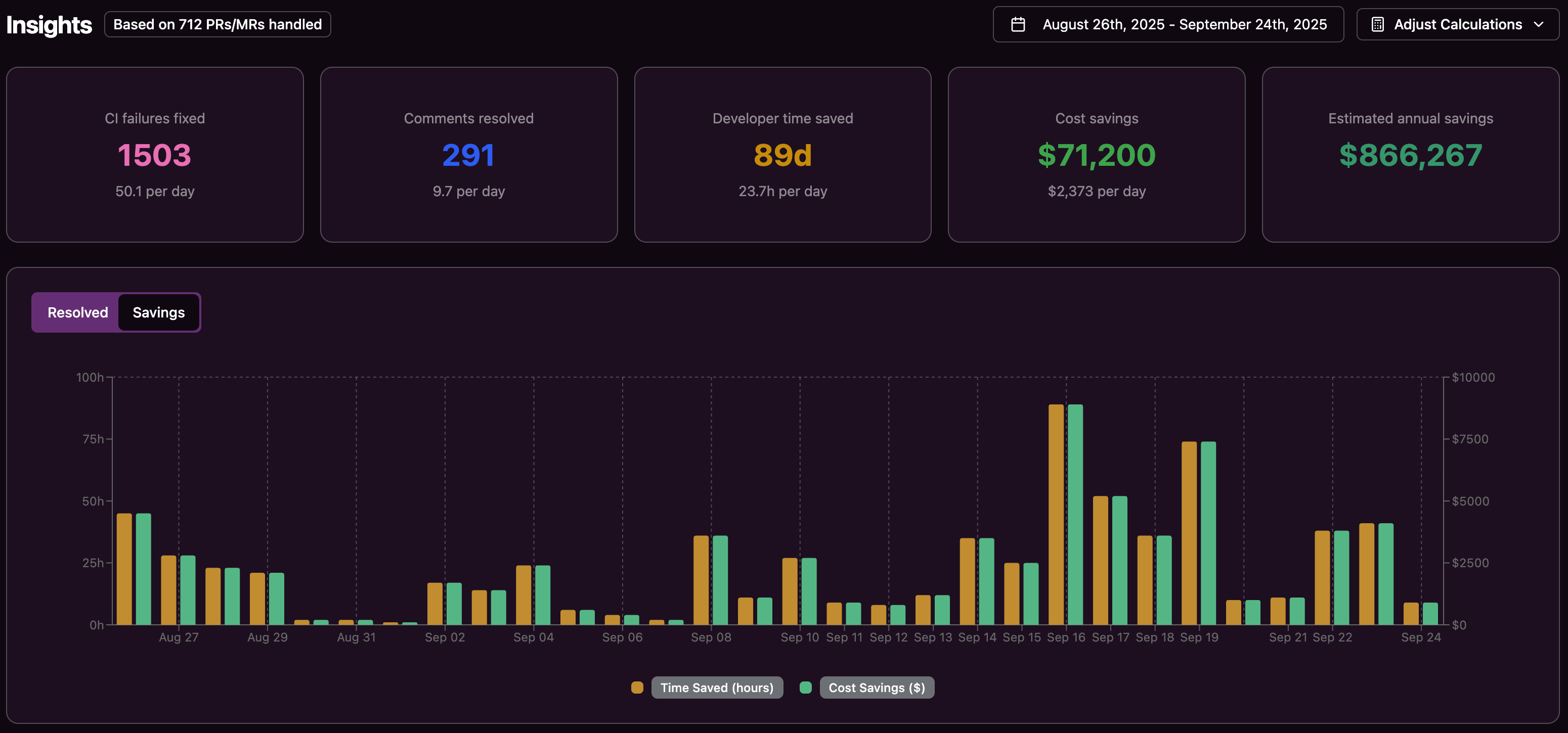

Each broken pipeline produces a ripple effect: delayed project milestones, slower time-to-market, developer burnout, and, in some cases, missed revenue. On many engineering teams, developers spend up to 30% of their time on CI and code review problems; for a 20-person team, that lost productivity can approach $1 million per year.
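As a back-of-the-envelope check, the 30% share and the $1 million estimate together imply a fully loaded cost per developer. The sketch below only rearranges those figures (20 people, 30%, $1 million); it is not a measurement, and teams should substitute their own headcount and cost data.

```python
# Back-of-the-envelope check of the productivity figure.
# TEAM_SIZE, CI_TIME_SHARE, and ANNUAL_LOSS are the article's figures;
# the per-developer cost is derived from them, not measured.

TEAM_SIZE = 20
CI_TIME_SHARE = 0.30        # "up to 30%" of developer time
ANNUAL_LOSS = 1_000_000     # "roughly $1 million per year"


def lost_fte(team_size: int, share: float) -> float:
    """Full-time-equivalent engineers absorbed by CI and review problems."""
    return team_size * share


def implied_cost_per_developer(annual_loss: float, team_size: int, share: float) -> float:
    """Fully loaded annual cost per developer that the loss estimate implies."""
    return annual_loss / lost_fte(team_size, share)


if __name__ == "__main__":
    fte = lost_fte(TEAM_SIZE, CI_TIME_SHARE)
    cost = implied_cost_per_developer(ANNUAL_LOSS, TEAM_SIZE, CI_TIME_SHARE)
    print(f"Lost capacity: {fte:.1f} FTE")
    print(f"Implied fully loaded cost per developer: ${cost:,.0f}")
```

In other words, the estimate amounts to six engineers' worth of capacity at roughly $167k each per year.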

Reactive debugging alone cannot keep pace with this load. Each context switch from feature work to CI troubleshooting breaks focus, so a short fix turns into a long interruption. AI-assisted coding has also increased code volume, which means more merge requests, more tests to run, and more opportunities for CI instability. Teams now benefit from systems that not only detect failures but also help resolve them quickly and consistently.

Fix Common GitLab CI Failure Triggers Early

Failure prevention in GitLab CI starts with understanding the most common structural and behavioral problems in pipelines.

Pipeline Design and Runner Constraints

GitLab's pipeline efficiency guidance calls out inefficiencies such as long-running jobs, many stages, large images, heavy dependencies, and under-provisioned runners. These choices make pipelines fragile, so a small code change can trigger failures across many jobs.

Runner issues around provisioning, availability, and dependency installation times also introduce instability. Pipelines that rely on large container images or ad hoc environment setup tend to fail more often and are harder to debug.

Flaky Tests and Intermittent Failures

Flaky tests and intermittent failures are major contributors to CI noise and instability. When developers cannot trust test results, they start rerunning jobs, retrying at random, or ignoring failures, which undermines quality gates and slows delivery.
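One common heuristic for spotting flaky tests is to look for tests that both passed and failed on the same commit, since the code did not change between those runs. The sketch below assumes a hypothetical list of `RunRecord` entries (test name, commit SHA, pass/fail) exported from job test reports; it is an illustration, not a GitLab feature.

```python
from collections import defaultdict
from typing import Iterable, NamedTuple


class RunRecord(NamedTuple):
    """One execution of one test (hypothetical export format)."""
    test_name: str
    commit_sha: str
    passed: bool


def find_flaky_tests(runs: Iterable[RunRecord]) -> set[str]:
    """Return tests that both passed and failed on the same commit.

    Same code with a different outcome cannot be explained by a code
    change, which is the classic signature of a flaky test.
    """
    outcomes: dict[tuple[str, str], set[bool]] = defaultdict(set)
    for run in runs:
        outcomes[(run.test_name, run.commit_sha)].add(run.passed)
    # Two distinct outcomes ({True, False}) for one commit means flaky.
    return {name for (name, _sha), seen in outcomes.items() if len(seen) == 2}


if __name__ == "__main__":
    runs = [
        RunRecord("test_login", "a1b2c3", False),
        RunRecord("test_login", "a1b2c3", True),   # passed on retry, same commit
        RunRecord("test_export", "a1b2c3", False),
        RunRecord("test_export", "d4e5f6", True),  # fixed by a new commit
    ]
    print(find_flaky_tests(runs))
```

Only `test_login` is reported: `test_export` changed outcome across two different commits, which points to a real fix rather than flakiness.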

CI/CD Anti-Patterns and Dependency Drift

Common CI/CD anti-patterns include monolithic pipelines, hardcoded secrets, and overuse of end-to-end tests on every commit. These practices increase failure blast radius and extend feedback loops.

Typical failure clusters include build errors, test failures, and dependency conflicts. Many of these stem from mismatches between developer machines and CI environments or from configuration drift over time, especially in distributed teams working across time zones.
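Environment drift between developer machines and CI is easier to catch when both sides report pinned versions in the same format. The sketch below assumes `name==version` lines, such as `pip freeze` output, captured locally and inside a CI job; the package names and versions in the example are placeholders.

```python
from typing import Optional


def parse_pins(text: str) -> dict[str, str]:
    """Parse 'name==version' lines (e.g. pip freeze output); skip anything else."""
    pins = {}
    for line in text.splitlines():
        line = line.strip()
        if "==" in line and not line.startswith("#"):
            name, version = line.split("==", 1)
            pins[name.strip().lower()] = version.strip()
    return pins


def diff_environments(
    local: dict[str, str], ci: dict[str, str]
) -> dict[str, tuple[Optional[str], Optional[str]]]:
    """Return packages whose versions differ between local and CI.

    Each value is (local_version, ci_version); None means the package is
    missing from that environment.
    """
    drift = {}
    for name in sorted(local.keys() | ci.keys()):
        local_version, ci_version = local.get(name), ci.get(name)
        if local_version != ci_version:
            drift[name] = (local_version, ci_version)
    return drift


if __name__ == "__main__":
    local = parse_pins("requests==2.31.0\nurllib3==2.0.7\n")
    ci = parse_pins("requests==2.31.0\nurllib3==1.26.18\nidna==3.6\n")
    for name, (lv, cv) in diff_environments(local, ci).items():
        print(f"{name}: local={lv} ci={cv}")
```

Running a check like this as an early CI job turns "works on my machine" surprises into an explicit, reviewable diff.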

Design GitLab CI Pipelines for Resilience

Resilient GitLab CI pipelines rely on predictable structure, fast feedback, and auditable configuration.

Clarify Pipeline Structure and Stages

A well-structured .gitlab-ci.yml file with clear stages and dependencies helps teams avoid monolithic pipelines and isolate failures. Smaller, focused stages make it easier to see where a change failed and limit the impact on other jobs.
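Explicit job dependencies also make the blast radius of a failure easy to reason about. The sketch below models a pipeline as a mapping from each job to the jobs it depends on, loosely mirroring GitLab's `needs:` keyword, and finds every job blocked by one failure. The job names are hypothetical, and the model ignores the implicit stage-by-stage ordering that applies to jobs without `needs:`.

```python
from collections import deque


def blocked_by_failure(needs: dict[str, list[str]], failed_job: str) -> set[str]:
    """Return every job that directly or transitively depends on `failed_job`.

    `needs` maps each job to the jobs it depends on. Jobs outside the
    returned set are unaffected by this failure in this simplified model.
    """
    # Invert the graph: job -> jobs that depend on it.
    dependents: dict[str, list[str]] = {job: [] for job in needs}
    for job, deps in needs.items():
        for dep in deps:
            dependents.setdefault(dep, []).append(job)

    # Breadth-first walk over dependents of the failed job.
    blocked: set[str] = set()
    queue = deque([failed_job])
    while queue:
        current = queue.popleft()
        for child in dependents.get(current, []):
            if child not in blocked:
                blocked.add(child)
                queue.append(child)
    return blocked


if __name__ == "__main__":
    pipeline = {
        "lint": [],
        "build": [],
        "unit": ["build"],
        "integration": ["build"],
        "e2e": ["integration"],
        "docs": [],
    }
    print(sorted(blocked_by_failure(pipeline, "integration")))
    print(sorted(blocked_by_failure(pipeline, "build")))
```

A failing `integration` job blocks only `e2e`, while `lint`, `unit`, and `docs` still report results, which is the isolation a monolithic pipeline cannot offer.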

Use Segmentation, Parallelization, and Early Gates

GitLab recommends parallelizing test jobs within a stage to reduce runtime and improve feedback speed. This pattern helps localize failures while keeping pipelines efficient.
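When a job declares `parallel: N`, GitLab runs N copies of it and sets the `CI_NODE_INDEX` (1-based) and `CI_NODE_TOTAL` variables in each one. A test runner can use them to pick its share of the suite. The round-robin split below is one simple choice under that assumption; the test file names are placeholders, and timing-based splitting would balance better.

```python
import os


def shard(items: list[str], node_index: int, node_total: int) -> list[str]:
    """Return this job's slice of `items` using round-robin assignment.

    node_index is 1-based, matching GitLab's CI_NODE_INDEX.
    """
    if not 1 <= node_index <= node_total:
        raise ValueError(f"node_index {node_index} outside 1..{node_total}")
    ordered = sorted(items)  # stable order so every parallel job agrees on the split
    return ordered[node_index - 1 :: node_total]


def current_shard(items: list[str]) -> list[str]:
    """Pick the shard for this job; outside a parallel job, run everything."""
    index = int(os.environ.get("CI_NODE_INDEX", "1"))
    total = int(os.environ.get("CI_NODE_TOTAL", "1"))
    return shard(items, index, total)


if __name__ == "__main__":
    tests = [
        "tests/test_api.py",
        "tests/test_auth.py",
        "tests/test_db.py",
        "tests/test_ui.py",
        "tests/test_utils.py",
    ]
    print(current_shard(tests))
```

Sorting before slicing matters: every parallel copy must compute the same order, or files could be skipped or run twice.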

Automated quality gates for tests, code quality checks, and security scans keep standards consistent. Fast linting and unit tests should run early, with heavier integration and end-to-end checks later in the pipeline.
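The "cheap checks first" rule is a fail-fast ordering: a broken lint run should stop the pipeline before expensive suites start. In GitLab this ordering is normally expressed with `stages:`; the sketch below only models the logic, with hypothetical gate names, cost estimates, and check functions.

```python
from typing import Callable, NamedTuple


class Gate(NamedTuple):
    name: str
    est_seconds: float          # rough cost estimate, used only for ordering
    check: Callable[[], bool]   # returns True when the gate passes


def run_gates(gates: list[Gate]) -> tuple[bool, list[str]]:
    """Run gates cheapest-first and stop at the first failure.

    Returns (all_passed, names of the gates that actually ran, in order).
    """
    ran = []
    for gate in sorted(gates, key=lambda g: g.est_seconds):
        ran.append(gate.name)
        if not gate.check():
            return False, ran
    return True, ran


if __name__ == "__main__":
    gates = [
        Gate("e2e", 900, lambda: True),
        Gate("lint", 10, lambda: True),
        Gate("unit", 60, lambda: False),   # simulated unit-test failure
        Gate("integration", 300, lambda: True),
    ]
    print(run_gates(gates))
```

Here the unit-test failure is reported after about 70 estimated seconds, and the 20 minutes of integration and end-to-end work never starts.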

Secure and Version Your CI Configuration

Treat pipeline configuration as code so teams can review, version, and audit it. Hardcoded secrets in CI configuration introduce both security risk and operational fragility. GitLab's masked and protected CI/CD variables, along with its integrations with external secret managers, provide safer patterns for credentials and keys.
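Because the configuration is versioned text, a lightweight review check can flag obvious hardcoded secrets. The sketch below is a deliberately simple, regex-based heuristic: it flags `key: value` lines whose key looks secret-like and whose value is a literal rather than a `$VARIABLE` reference. The patterns are assumptions for illustration; dedicated secret scanners use far larger rule sets.

```python
import re

# Heuristic patterns only; tune them for your own naming conventions.
SUSPICIOUS_KEY = re.compile(
    r"(password|passwd|secret|token|api[_-]?key|private[_-]?key)", re.IGNORECASE
)
ASSIGNMENT = re.compile(r"^\s*([A-Za-z0-9_\-]+)\s*:\s*(.+?)\s*$")


def find_hardcoded_secrets(config_text: str) -> list[tuple[int, str]]:
    """Return (line_number, key) for secret-looking keys with literal values.

    Values that reference a variable (e.g. "$DB_PASSWORD") are treated as
    safe, because the real value then lives in CI/CD variables, not the file.
    """
    findings = []
    for lineno, line in enumerate(config_text.splitlines(), start=1):
        match = ASSIGNMENT.match(line)
        if not match:
            continue  # section headers, list items, blank lines
        key, value = match.groups()
        value = value.strip("'\"")
        if SUSPICIOUS_KEY.search(key) and value and not value.startswith("$"):
            findings.append((lineno, key))
    return findings


if __name__ == "__main__":
    config = (
        "variables:\n"
        '  DB_PASSWORD: "hunter2"\n'
        "  API_TOKEN: $API_TOKEN\n"
        "  LOG_LEVEL: debug\n"
    )
    for lineno, key in find_hardcoded_secrets(config):
        print(f".gitlab-ci.yml:{lineno}: literal value for {key}")
```

Only `DB_PASSWORD` is flagged; `API_TOKEN` already points at a variable, which is the pattern the check is meant to encourage.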

Use Analytics to Prioritize Fixes

GitLab CI/CD Analytics surfaces pipeline volume, duration, failure rate, and success rate over time. These trends highlight unstable jobs, slow stages, and recurring issues so teams can target the highest-impact fixes first.
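The same trend data can be ranked per job to decide what to fix first. The sketch below assumes a hypothetical export of `(job_name, status)` pairs, for example pulled from job history; it counts only `success` and `failed` runs so canceled or skipped jobs do not distort the rate, and it ignores jobs with too few runs to judge.

```python
from collections import Counter
from typing import Iterable


def rank_unstable_jobs(
    records: Iterable[tuple[str, str]], min_runs: int = 5
) -> list[tuple[str, float, int]]:
    """Return (job, failure_rate, runs) tuples, most unstable first."""
    runs: Counter[str] = Counter()
    failures: Counter[str] = Counter()
    for job, status in records:
        if status not in ("success", "failed"):
            continue  # canceled/skipped runs say little about stability
        runs[job] += 1
        if status == "failed":
            failures[job] += 1
    rates = [
        (job, failures[job] / runs[job], runs[job])
        for job in runs
        if runs[job] >= min_runs
    ]
    return sorted(rates, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    records = (
        [("unit", "success")] * 9 + [("unit", "failed")]
        + [("e2e", "failed")] * 3 + [("e2e", "success")] * 7
        + [("lint", "success")] * 2
        + [("deploy", "canceled")]
    )
    for job, rate, n in rank_unstable_jobs(records):
        print(f"{job}: {rate:.0%} failed over {n} runs")
```

The `min_runs` threshold keeps a job that failed once out of one run from outranking a job that fails 30% of the time over many runs.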

Add Autonomous Fixing on Top of GitLab CI With Gitar

Even with a solid pipeline design, failures still occur and require attention. Gitar adds an autonomous fixing layer on top of GitLab CI so developers spend less time on manual debugging and more time on feature work.

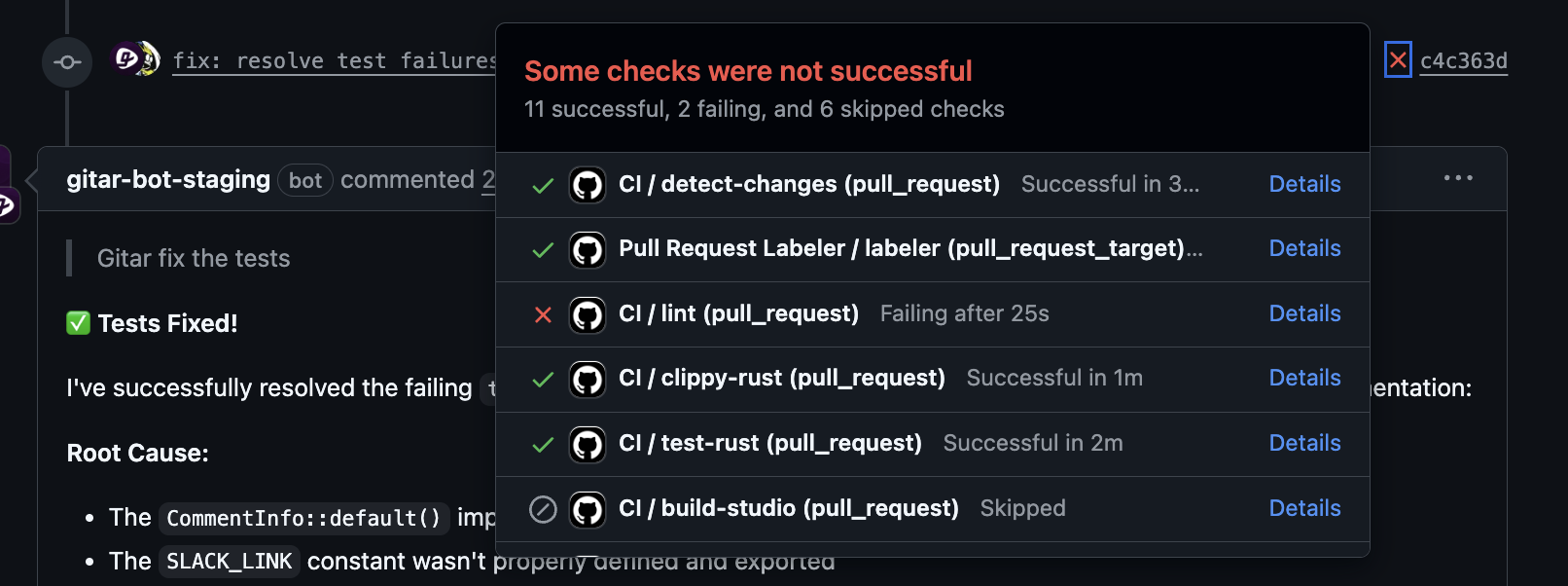

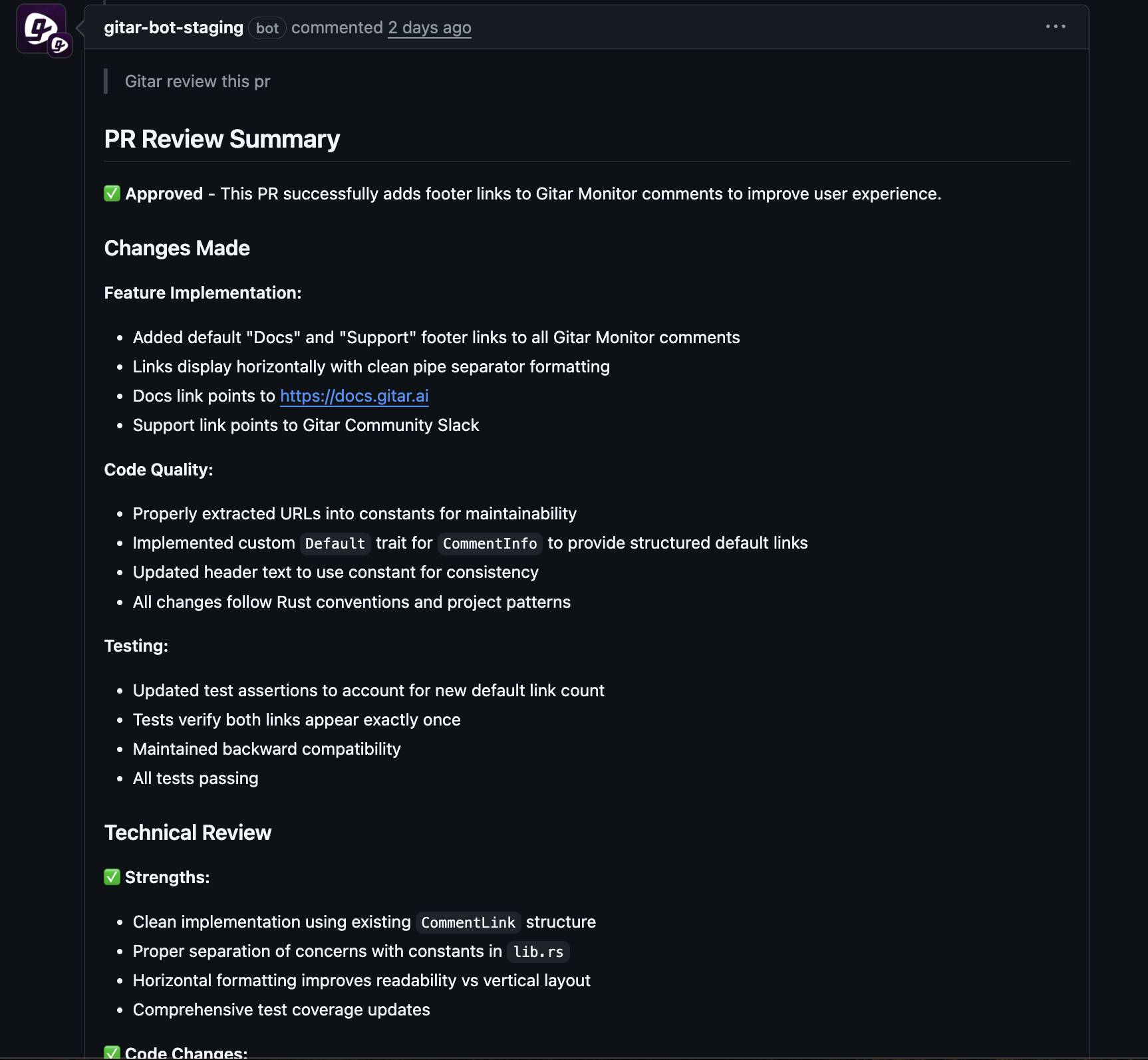

When a CI check fails because of a lint error, test failure, or build issue, Gitar reads the logs, generates a specific code change, applies the fix, and updates the merge request branch. The system validates its change against the CI workflow, so teams only see a green build once the fix passes.
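The apply-then-validate idea can be illustrated with a generic loop that keeps a patch only if CI passes afterward and reverts it otherwise. This is not Gitar's implementation: `propose_fix`, `apply_patch`, `revert_patch`, and `run_ci` are hypothetical callables that a real system would back with a model, version-control operations, and a CI trigger.

```python
from typing import Callable, Optional


def fix_until_green(
    failure_log: str,
    propose_fix: Callable[[str], Optional[str]],  # hypothetical: log -> patch or None
    apply_patch: Callable[[str], None],           # hypothetical: apply to the branch
    revert_patch: Callable[[str], None],          # hypothetical: undo the patch
    run_ci: Callable[[], tuple[bool, str]],       # hypothetical: (passed, log)
    max_attempts: int = 3,
) -> Optional[str]:
    """Try up to `max_attempts` fixes; return the first patch that passes CI.

    Returns None if no attempt produced a green run. Each new proposal sees
    the log from the most recent failed attempt.
    """
    log = failure_log
    for _ in range(max_attempts):
        patch = propose_fix(log)
        if patch is None:
            break                 # nothing more to try
        apply_patch(patch)
        passed, log = run_ci()
        if passed:
            return patch          # caller would commit this to the MR branch
        revert_patch(patch)       # never leave a failed attempt on the branch
    return None
```

The key design choice is that validation gates the commit: a failing attempt is reverted, so the merge request only ever receives a change that already passed the pipeline.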

Gitar focuses on several areas that typical suggestion-only tools do not fully cover:

- End-to-end autonomous fixing that applies, validates, and commits code changes instead of only proposing them.

- Enterprise environment replication that respects SDK versions, multi-language builds, and tools such as SonarQube and Snyk.

- A configurable trust model, from suggestion-only to auto-commit with rollback, so teams can adopt automation at a pace that fits their risk profile.

- Support for human review comments, where Gitar can update code in response to feedback and reduce time zone delays for distributed teams.

- Compatibility with GitLab CI, along with other CI systems such as GitHub Actions, CircleCI, and Buildkite.

Plan the Business Case for Autonomous CI

Adopting autonomous CI failure prevention is both a technical and a business decision. Teams need a clear view of build-versus-buy tradeoffs, adoption risk, and return on investment.

Build Versus Buy and Cost Impact

In-house development of AI-based fixing requires agent infrastructure, context management, and deep integration with GitLab events. These systems must handle concurrent runs, asynchronous job events, retries, and shared state across stages. Many organizations find that such work competes directly with core product roadmap priorities.
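One small piece of that infrastructure is idempotent event handling. If job events can arrive more than once or concurrently, as with any at-least-once delivery mechanism, the handler needs shared state to avoid acting twice. The sketch below assumes events are keyed by a hypothetical `(job_id, status)` pair and keeps that state in memory; a real deployment would need durable storage shared across workers.

```python
import threading
from typing import Callable


class JobEventProcessor:
    """Handle each (job_id, status) event at most once per process."""

    def __init__(self, handler: Callable[[int, str], None]) -> None:
        self._handler = handler
        self._seen: set[tuple[int, str]] = set()
        self._lock = threading.Lock()   # protects _seen across threads

    def submit(self, job_id: int, status: str) -> bool:
        """Process the event; return False if it was already handled."""
        key = (job_id, status)
        with self._lock:
            if key in self._seen:
                return False            # duplicate delivery, ignore
            self._seen.add(key)
        try:
            self._handler(job_id, status)
        except Exception:
            with self._lock:
                self._seen.discard(key)  # let a later redelivery retry
            raise
        return True
```

Claiming the key under the lock before running the handler is what prevents two concurrent deliveries from both triggering a fix; releasing it on error keeps transient failures retryable.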

Commercial tools like Gitar reduce the need for developers and DevOps engineers to chase CI flakes and fix broken pipelines by hand. For teams that currently lose up to 30% of development time to these tasks, automation can produce significant savings and shorter lead times from first commit to merge.

Change Management and Metrics

Teams usually begin with conservative modes where Gitar proposes changes for review before auto-commit. As they gain confidence, they can enable more automation for low-risk failures while keeping manual control over sensitive areas.
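The progression from suggestion-only to auto-commit can be expressed as an explicit policy. The sketch below is a hypothetical illustration, not Gitar's configuration format: the `Mode` names, the low-risk failure categories, and the sensitive path patterns are all assumptions a team would replace with its own.

```python
from enum import Enum
from fnmatch import fnmatch


class Mode(Enum):
    SUGGEST = "suggest"          # post the fix for human review only
    AUTO_COMMIT = "auto_commit"  # commit the fix, keeping a revert path


# Hypothetical policy values for illustration.
LOW_RISK_FAILURES = {"lint", "format"}
SENSITIVE_PATHS = ["infra/*", "*.tf", "src/payments/*"]


def choose_mode(failure_kind: str, touched_files: list[str], automation_enabled: bool) -> Mode:
    """Decide whether an automated fix may be committed or only suggested."""
    if not automation_enabled:
        return Mode.SUGGEST      # conservative starting point
    if failure_kind not in LOW_RISK_FAILURES:
        return Mode.SUGGEST      # only low-risk failure types are automated
    if any(fnmatch(path, pattern) for path in touched_files for pattern in SENSITIVE_PATHS):
        return Mode.SUGGEST      # sensitive areas always get human review
    return Mode.AUTO_COMMIT
```

For example, a lint fix touching `src/app.py` would be auto-committed once automation is enabled, while the same fix touching `src/payments/api.py` would still be posted as a suggestion.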

Useful metrics include time-to-merge, CI success rate, count of retries, and reported time spent on CI-related interruptions. Developer satisfaction and the amount of uninterrupted deep work time also provide important signals.
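Several of these metrics can be computed from simple merge request records. The sketch below assumes a hypothetical record shape with `opened_at` and `merged_at` timestamps, total and passing pipeline counts, and a count of manual retries; the dates in the example are placeholders.

```python
from datetime import datetime, timedelta
from statistics import median


def merge_metrics(mrs: list[dict]) -> dict[str, float]:
    """Summarize hypothetical merge request records.

    Each record needs "opened_at" and "merged_at" (datetime), "pipelines"
    (total runs), "green_pipelines" (runs that passed), and "retries".
    """
    if not mrs:
        raise ValueError("need at least one merge request")
    hours = [(mr["merged_at"] - mr["opened_at"]) / timedelta(hours=1) for mr in mrs]
    total = sum(mr["pipelines"] for mr in mrs)
    green = sum(mr["green_pipelines"] for mr in mrs)
    return {
        "median_time_to_merge_h": median(hours),
        "ci_success_rate": green / total if total else 0.0,
        "retries_per_mr": sum(mr["retries"] for mr in mrs) / len(mrs),
    }


if __name__ == "__main__":
    mrs = [
        {"opened_at": datetime(2026, 1, 5, 9), "merged_at": datetime(2026, 1, 5, 15),
         "pipelines": 4, "green_pipelines": 3, "retries": 1},
        {"opened_at": datetime(2026, 1, 6, 10), "merged_at": datetime(2026, 1, 7, 10),
         "pipelines": 6, "green_pipelines": 3, "retries": 2},
        {"opened_at": datetime(2026, 1, 8, 9), "merged_at": datetime(2026, 1, 8, 11),
         "pipelines": 2, "green_pipelines": 2, "retries": 0},
    ]
    print(merge_metrics(mrs))
```

The median is used for time-to-merge because a single long-lived merge request would otherwise dominate an average.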

Avoid Strategic Pitfalls in Advanced GitLab CI Automation

Even experienced teams can misstep when scaling GitLab CI automation and autonomous fixes.

- GitLab CI/CD Charts expose detailed pipeline and job-level history, yet many teams do not use this data to find recurring failures and slow jobs.

- Structured diagnosis techniques and reusable queries support systematic debugging, but ad hoc log inspection still dominates in many organizations.

- Context-switching costs remain underestimated, so short CI interruptions silently erode large blocks of productive time.

- Automation often receives a one-time setup without ongoing tuning, which leads to drift as codebases, dependencies, and test suites evolve.
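A reusable diagnosis query can start as an ordered list of log patterns mapped to the failure clusters mentioned earlier: dependency conflicts, test failures, and build errors. The rules below are illustrative assumptions, not a complete taxonomy; they are case-sensitive on purpose so that a generic "Job failed" footer does not match every category.

```python
import re
from collections import Counter
from typing import Iterable

# Hypothetical starter rules: ordered (category, pattern); first match wins.
RULES = [
    ("dependency_conflict", re.compile(r"ResolutionImpossible|ERESOLVE|Could not resolve dependencies")),
    ("test_failure", re.compile(r"\bFAILED\b|AssertionError|\b\d+ failed\b")),
    ("build_error", re.compile(r"error\[E\d+\]|error: |SyntaxError|cannot find symbol")),
]


def classify_log(log: str) -> str:
    """Return the first matching failure category, or 'unclassified'."""
    for category, pattern in RULES:
        if pattern.search(log):
            return category
    return "unclassified"


def summarize(logs: Iterable[str]) -> Counter:
    """Count failure categories across many job logs."""
    return Counter(classify_log(log) for log in logs)


if __name__ == "__main__":
    logs = [
        "ERROR: pip's resolver failed: ResolutionImpossible",
        "=== 2 failed, 10 passed in 3.1s ===",
        "src/main.c:10:5: error: expected ';' before '}' token",
        "ERROR: Job failed: exit code 1",
    ]
    print(summarize(logs))
```

A growing "unclassified" count is itself a useful signal that the rules need tuning, which addresses the one-time-setup drift described above.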

Frequently Asked Questions (FAQ) on GitLab CI Failure Prevention

How does Gitar improve continuous integration failure prevention in GitLab CI?

Gitar acts as an autonomous healing engine instead of a suggestion-only assistant. It analyzes GitLab CI failure logs, generates a concrete fix, applies the change to the merge request, and validates the result through the CI workflow. This reduces manual debugging effort and the context-switching that often consumes a large share of developer time.

How does Gitar complement GitLab CI features like analytics and error reporting?

GitLab CI provides rich analytics and visibility into which pipelines and jobs fail. Gitar operates after a failure appears by turning that failure into an actionable fix. It uses the same logs and error details that developers would read and then performs the code change and retest automatically.

Is Gitar suitable for complex enterprise GitLab CI environments?

Gitar is built to work with multi-language, multi-SDK environments and with tools such as SonarQube and Snyk. It replicates the CI environment so its fixes match real build, test, and scan behavior rather than a simplified local setup.

How does Gitar affect engineering costs and time-to-market?

By reducing time spent on CI breakage and code review rework, Gitar frees engineers to focus on product features and architectural improvements. For teams of around 20 developers, this shift can represent hundreds of hours per month that move from maintenance work to roadmap delivery.

Conclusion: Move Toward Self-Healing GitLab CI

Continuous integration failure prevention in GitLab CI is now a central part of engineering strategy, not just a tooling detail. Well-structured pipelines, strong quality gates, and secure configuration create a stable base, but they do not remove the need to fix inevitable failures.

Autonomous, self-healing capabilities such as Gitar help teams keep pipelines healthy without constant manual intervention. These systems reduce context-switching, shorten time-to-merge, and support more predictable delivery.

Teams that combine solid GitLab CI design with autonomous fixing gain a clearer path to shipping reliable software at the speed their business requires. To add autonomous fixing to your existing GitLab CI workflows, install Gitar and start automatically resolving broken builds.