Key Takeaways

- Manual CI debugging and code review consume significant developer time and break focus, with up to 30% of developer time lost to CI and review issues.

- In 2026, AI debugging assistants are shifting from suggestion tools to autonomous systems that analyze logs, propose fixes, and validate changes in your CI environment.

- Gitar focuses on self-healing CI, handling failing checks, tedious review changes, and complex environments across major CI platforms.

- A phased rollout with clear metrics helps teams build trust, improve delivery speed, and quantify ROI from reduced cycle time and rework.

- Teams can install Gitar to automatically fix broken builds, reduce review toil, and keep developers focused on higher-value work.

The Developer Experience Imperative: Why Current Debugging Approaches Fail

The CI/CD Gauntlet: The Cost of Manual Debugging

Most engineers know the pattern: push a pull request, see a wall of red, and then chase down a failing test, a missing dependency, or a linter complaint. Each fix requires reading logs, switching back to a local environment, tweaking code, pushing again, and waiting for CI to finish. A five-minute change can turn into an hour of lost flow.

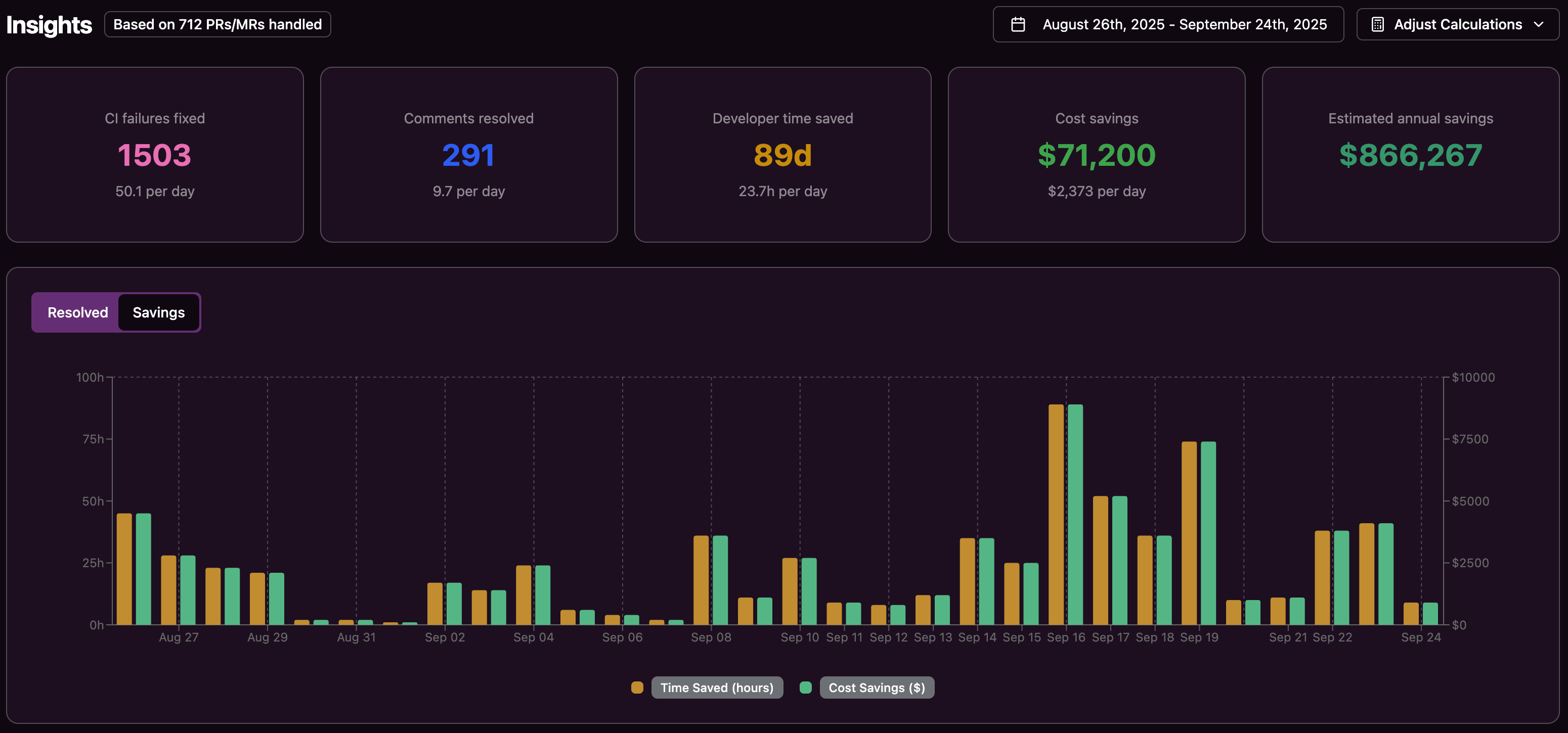

The financial impact can be large. For a 20-developer team, manual CI debugging and review rework can reach hundreds of thousands of dollars per year in lost productivity and schedule slip.

The Context Switching Tax

Developers rarely submit a pull request and move on without interruption. A CI failure or review comment often arrives after work has already shifted to a new task. Each interruption forces a context switch, which adds mental overhead and delays progress. A simple 30-minute fix can consume an hour or more of productive time once ramp-up and ramp-down are included.
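The arithmetic behind that estimate fits in a tiny function. The 15-minute ramp-up (reloading the context of the old branch) and 15-minute ramp-down (getting back into the interrupted task) are illustrative assumptions, not measured values, and `effective_minutes` is a hypothetical helper.

```python
def effective_minutes(fix: float, ramp_up: float, ramp_down: float) -> float:
    """Minutes an interruption really costs: the fix plus context-switch overhead."""
    return fix + ramp_up + ramp_down


# Illustrative assumption: 15 minutes to reload context, 15 to resume the prior task.
total = effective_minutes(fix=30, ramp_up=15, ramp_down=15)
print(f"A 30-minute fix costs about {total:.0f} minutes of focus")
```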

The Right-Shift Bottleneck

Tools like GitHub Copilot and Cursor speed up code creation. That extra code turns into more pull requests, more tests, and more potential failures. The main constraint has shifted from writing code to validating, debugging, and merging it safely.

Industry Landscape Overview: From Suggestions to Self-Healing

AI for developers has moved from autocomplete to smarter assistants. Many tools still act as suggestion engines that produce code or comments inside an IDE or pull request, while developers remain responsible for applying fixes, wiring dependencies, and validating results in CI. The next stage focuses on autonomous systems that operate inside the full CI environment and drive builds back to green.

Introducing Gitar: The Autonomous AI Debugging Assistant for Self-Healing CI

Gitar focuses on the slowest parts of modern delivery: failing CI builds and back-and-forth code review. Instead of only suggesting code, it acts as a healing engine that works directly in your repositories and CI pipelines.

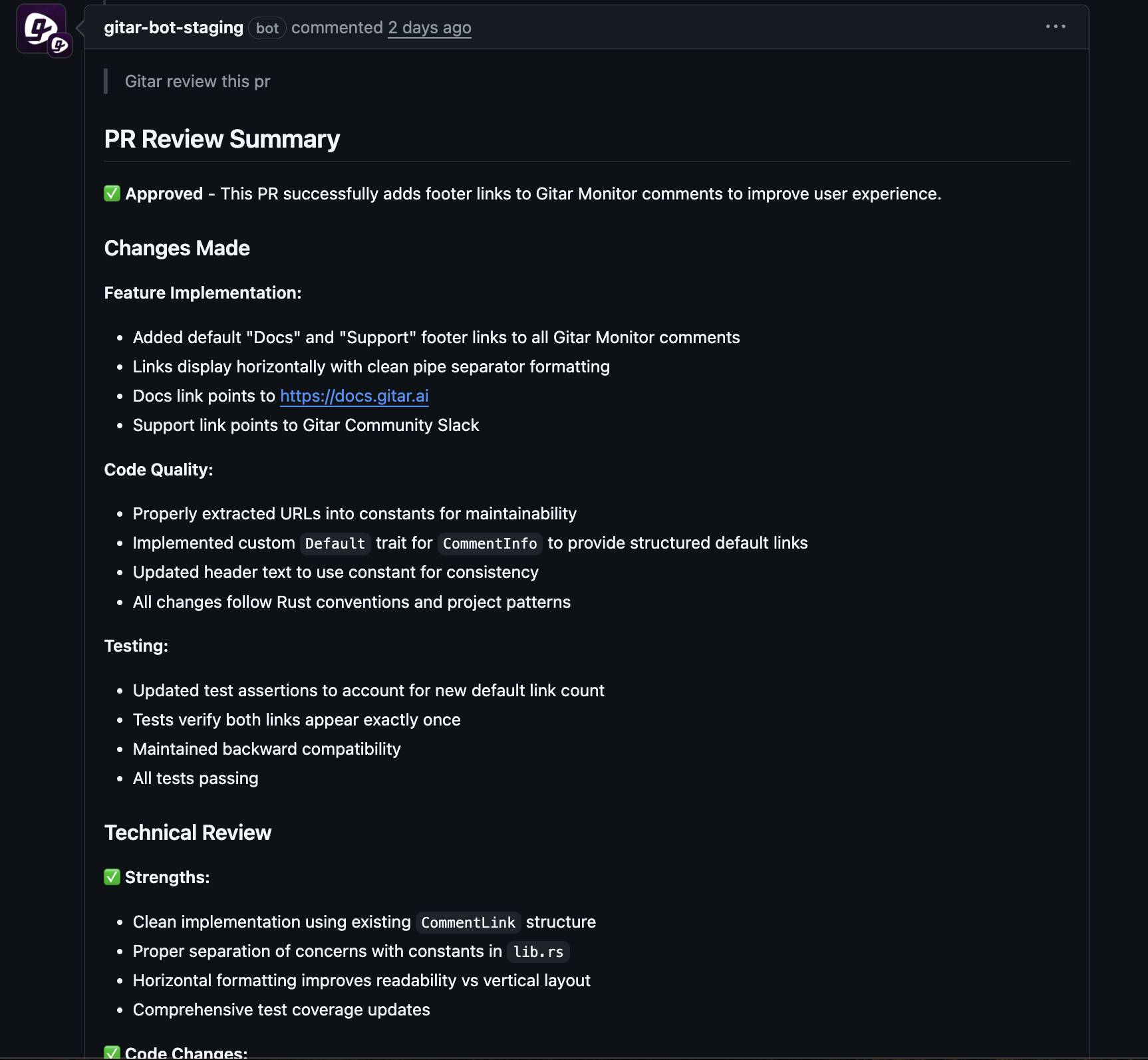

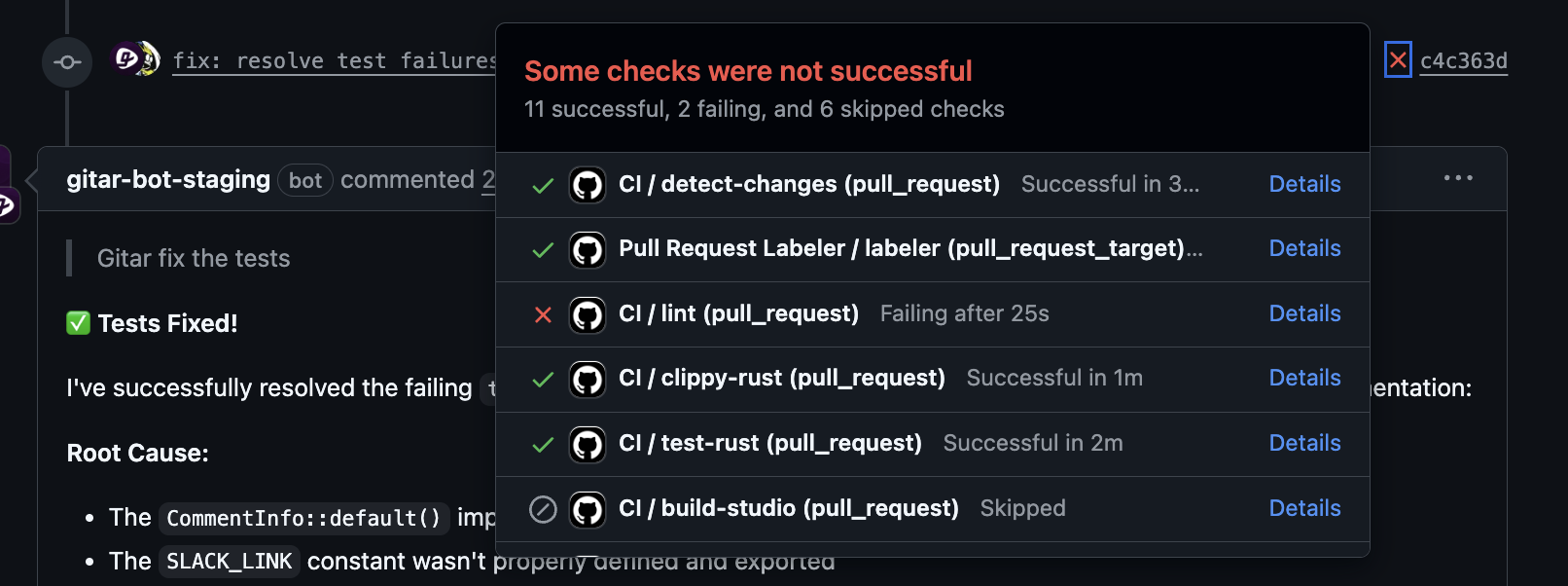

- Autonomous CI fixes: Gitar reads failing CI logs from commands such as `npm run lint` or `pytest`, identifies root causes, edits code, and commits fixes back to the pull request branch. It handles lint violations, test failures, build errors, and many dependency issues.

- Code review assistant: Reviewers leave comments with instructions for Gitar, and the system implements the requested changes. This reduces idle time for distributed or cross-time-zone teams.

- Full environment replication: Gitar runs inside a replica of your CI environment, including JDK versions, SDK stacks, and tools such as SonarQube or Snyk, so fixes match real pipelines instead of an idealized local setup.

- Developer-in-the-loop controls: Teams configure trust levels, from suggestion-only modes that require approval to auto-commit modes with rollback options.

- Cross-platform support: Gitar works with GitHub Actions, GitLab CI, CircleCI, Buildkite, and other major CI platforms.
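This article does not describe Gitar's internals, but the first step in the list above, reading a failing log and deciding what kind of failure it is, can be illustrated with a small rule-based triage function. This is a hypothetical sketch: the rule set, the regular expressions, and `classify_failure` are invented for illustration, and a production system would need far richer analysis than pattern matching.

```python
from __future__ import annotations

import re

# Hypothetical, deliberately small rule set, checked in priority order.
# Dependency errors come first because they often also surface as test or build failures.
FAILURE_RULES: list[tuple[str, re.Pattern[str]]] = [
    ("dependency", re.compile(r"ModuleNotFoundError|Cannot find module|No matching distribution found")),
    ("build", re.compile(r"error TS\d+|BUILD FAILED|compilation failed", re.IGNORECASE)),
    ("test", re.compile(r"AssertionError|^FAILED |\b\d+ failed\b")),
    # ESLint summary line, or flake8-style "file:line:col: E501 ..." output.
    ("lint", re.compile(r"\d+ problems? \(\d+ errors?|:\d+:\d+: [EFW]\d{3} ")),
]


def classify_failure(log: str) -> tuple[str, str | None]:
    """Return (category, first matching log line) for a failing CI log."""
    lines = log.splitlines()
    for category, pattern in FAILURE_RULES:
        for line in lines:
            if pattern.search(line):
                return category, line.strip()
    return "unknown", None


log = """\
app.py:12:80: E501 line too long (88 > 79 characters)
Error: Process completed with exit code 1.
"""
print(classify_failure(log))
# ('lint', 'app.py:12:80: E501 line too long (88 > 79 characters)')
```

Returning the matching line alongside the category keeps the evidence attached to the diagnosis, which is what a later fix step (or a human reviewer) would need to see.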

Strategic Considerations for Adopting AI Debugging Assistants

Increased Developer Productivity and Flow

Autonomous debugging reduces interruptions from failing builds and minor review requests. Developers spend more time on design, architecture, and feature work, and less time reopening old branches to repair small issues.

Accelerated Time-to-Market

Shorter pull request lifecycles translate directly into faster releases. When CI failures resolve automatically and mechanical review feedback is applied for you, the path from first commit to merge grows shorter and more predictable.

Reduced Operational Costs and Clear ROI

A 20-developer team that spends an hour each day on CI and review issues can lose several hundred thousand dollars a year in loaded cost, approaching seven figures at higher salary levels. Even a partial reduction of that time through automation produces measurable savings and more predictable delivery.
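The estimate is simple multiplication. This sketch assumes 250 working days per year and a $100 loaded hourly rate; both are placeholders to replace with your own figures, and `annual_cost` is a hypothetical helper.

```python
def annual_cost(developers: int, hours_lost_per_day: float,
                working_days: int, loaded_hourly_rate: float) -> float:
    """Yearly loaded cost of developer time lost to CI and review issues."""
    return developers * hours_lost_per_day * working_days * loaded_hourly_rate


# Illustrative assumptions: 250 working days per year, $100/hour loaded cost.
baseline = annual_cost(20, 1.0, 250, 100.0)
# Savings if automation recovers half of that hour each day.
savings = baseline - annual_cost(20, 0.5, 250, 100.0)
print(f"baseline ${baseline:,.0f}, savings ${savings:,.0f}")
# baseline $500,000, savings $250,000
```

Under these assumptions, one hour per developer per day costs $500,000 a year; at a $200 loaded rate the same hour reaches $1,000,000, which is where seven-figure estimates come from.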

Improved Morale and Retention

Less time spent on repetitive debugging and log reading leads to higher job satisfaction. Teams that focus on challenging work instead of recurring CI fixes often report lower burnout and better retention.

Implementation Readiness and Metrics

Successful adoption starts with a clear view of current bottlenecks and stakeholders. Teams benefit from defining baseline metrics for cycle time, rework, and CI failure rates so they can track the impact of an AI debugging assistant over time.

Common Pitfalls for Experienced Teams

Teams sometimes underestimate change management, focus only on license cost instead of total time saved, or track too many vanity metrics. A narrow set of metrics, such as time to green build, pull request duration, and rework rate, keeps evaluations grounded.
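Those three metrics can be computed from ordinary pull request history. The sketch below is one possible set of definitions, not a standard: the `PullRequest` record shape and `baseline_metrics` are hypothetical, and "rework rate" is taken here to mean the share of pull requests that needed at least one push after their first review.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class PullRequest:
    """Hypothetical record; populate it from your Git host and CI history."""
    opened_at: datetime
    first_green_at: datetime | None  # first fully passing CI run, if any
    merged_at: datetime | None
    pushes_after_first_review: int   # pushes made after the first review round


def _hours(delta: timedelta) -> float:
    return delta / timedelta(hours=1)


def baseline_metrics(prs: list[PullRequest]) -> dict[str, float]:
    """Median time to green, median PR duration (hours), and rework rate."""
    to_green = [_hours(p.first_green_at - p.opened_at)
                for p in prs if p.first_green_at is not None]
    duration = [_hours(p.merged_at - p.opened_at)
                for p in prs if p.merged_at is not None]
    reworked = sum(1 for p in prs if p.pushes_after_first_review > 0)
    nan = float("nan")
    return {
        "median_hours_to_green": median(to_green) if to_green else nan,
        "median_pr_duration_hours": median(duration) if duration else nan,
        "rework_rate": reworked / len(prs) if prs else nan,
    }


t0 = datetime(2026, 1, 5, 9, 0)
sample = [
    PullRequest(t0, t0 + timedelta(hours=2), t0 + timedelta(hours=20), 1),
    PullRequest(t0, t0 + timedelta(hours=4), t0 + timedelta(hours=30), 0),
]
print(baseline_metrics(sample))
# {'median_hours_to_green': 3.0, 'median_pr_duration_hours': 25.0, 'rework_rate': 0.5}
```

Medians are used instead of means so that a single long-stalled pull request does not dominate the baseline.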

Gitar vs. The Market: From Suggestion Engines to Autonomous Healing

Comparison Table: Gitar vs. Common Approaches

| Feature/Capability | Gitar | AI Code Reviewers | On-Demand AI Fixers | IDE-Based Assistants |
|---|---|---|---|---|
| Autonomous Fix Application | Full automation with validation | Suggestions only | Manual triggering required | Pre-commit only |
| Full Environment Replication | Complete CI environment | Limited context | Basic environment | Local environment only |
| Cross-Platform Support | GitHub, GitLab, CircleCI, Buildkite | Mainly GitHub or GitLab | Often GitHub-focused | IDE-specific |
| Configurable Trust Model | Conservative to aggressive modes | Suggestion-only | Limited automation | Manual acceptance |
| Context Maintenance | Long-running agent with memory | Pull-request-level context | Single-threaded | Session-based |
| Core Functionality | Autonomous healing engine | Suggestion engine | On-demand fixer | Coding assistant |

The main distinction is scope. Many tools recommend changes that still require manual edits and validation. Gitar applies, tests, and validates fixes inside the real CI environment so developers see completed work instead of raw suggestions.

Implementing Gitar: A Phased Approach to Building Trust and Value

Phase 1: Installation and Trust Building

Teams start by installing Gitar as a GitHub App on selected repositories, connecting it to existing CI, and choosing conservative settings. Early use often focuses on low-risk fixes such as lint cleanup and simple test issues, which helps teams verify behavior and review commit quality.

Phase 2: First Wins and Gradual Automation

The first time a developer returns to a pull request and finds a failure already resolved with a clean commit and an explanation, confidence grows quickly. Teams then expand Gitar to more repositories and increase automation levels where results remain consistent.

Phase 3: Advanced Workflows and Deeper Integration

Mature use includes more complex refactors, assistance for distributed review, and tighter integration with other tooling. Senior engineers can leave comments such as “@gitar refactor this function to use a map for better performance” and let Gitar handle the implementation and validation.
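This article does not describe how Gitar parses review comments. As a hypothetical sketch, a bot that acts on comments like the one above first has to pick out the instruction addressed to it; the mention format and the `extract_instruction` name below are assumptions for illustration only.

```python
from __future__ import annotations

import re

# Hypothetical mention format: "@gitar <instruction>" at the start of the comment
# or after whitespace, so addresses like "dev@gitar.example" are ignored.
MENTION = re.compile(r"(?:^|\s)@gitar\b[\s,:]*(?P<instruction>.+)", re.IGNORECASE)


def extract_instruction(comment_body: str) -> str | None:
    """Return the instruction addressed to the bot, or None if there is none."""
    match = MENTION.search(comment_body)
    if match is None:
        return None
    instruction = match.group("instruction").strip()
    return instruction or None


print(extract_instruction("@gitar refactor this function to use a map for better performance"))
# refactor this function to use a map for better performance
```

Because `.+` stops at a line break, only the rest of the mentioning line is treated as the instruction; surrounding discussion in the same comment is left out.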

Frequently Asked Questions (FAQ) about AI Debugging Assistants

How does Gitar handle complex, unique CI setups?

Gitar runs inside a replica of your CI environment, including language versions, dependency graphs, and tools such as SonarQube or Snyk. This design lets it propose and validate fixes that match the exact conditions of your enterprise pipelines.

We already use AI code reviewers. Why do we need Gitar?

AI code reviewers highlight issues and suggest changes, but they usually do not apply and validate those changes across the full CI workflow. Gitar acts as a healing engine that edits code, runs checks, and confirms that builds are green before reporting back.
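The apply, check, and confirm cycle can be sketched as a loop that keeps a fix only once checks pass. This is an illustration, not Gitar's implementation: `FixAttempt`, `heal`, and the `run_checks` callback (standing in for a full CI run) are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class FixAttempt:
    description: str
    apply: Callable[[], None]   # edits the working tree
    revert: Callable[[], None]  # undoes exactly those edits


def heal(attempts: list[FixAttempt], run_checks: Callable[[], bool]) -> str | None:
    """Try candidate fixes in order and keep the first one that turns checks green.

    Failed attempts are reverted, so the branch is never left half-fixed.
    Returns the accepted fix's description, or None if nothing passed.
    """
    for attempt in attempts:
        attempt.apply()
        if run_checks():
            return attempt.description
        attempt.revert()
    return None


# Simulated repository state: a lint check that requires lines of at most 79 characters.
state = {"line_length": 88}


def shorten() -> None:
    state["line_length"] = 79


def restore() -> None:
    state["line_length"] = 88


def noop() -> None:
    pass


result = heal(
    [FixAttempt("no-op", noop, noop), FixAttempt("wrap long line", shorten, restore)],
    run_checks=lambda: state["line_length"] <= 79,
)
print(result)  # wrap long line
```

Reverting each failed attempt before trying the next keeps the branch in a known state, so whatever is finally reported back has actually passed the checks.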

How can we trust an AI system to modify our codebase directly?

Gitar supports several trust levels. Teams often begin with suggestion-only or approval-required modes, then move to auto-commit for certain fix types once they see consistent quality. Rollback options remain available for additional safety.
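This article does not describe Gitar's configuration format. The sketch below only illustrates the general idea of per-fix-type trust levels that start conservative and widen over time; the `TrustLevel` names, the policy table, and `action_for` are all hypothetical.

```python
from enum import Enum


class TrustLevel(Enum):
    SUGGEST_ONLY = 1      # post the proposed diff; a human applies it
    REQUIRE_APPROVAL = 2  # commit only after a reviewer approves
    AUTO_COMMIT = 3       # commit validated fixes directly; rollback stays available


# Hypothetical policy: low-risk fix types get more autonomy first.
POLICY = {
    "lint": TrustLevel.AUTO_COMMIT,
    "test": TrustLevel.REQUIRE_APPROVAL,
    "dependency": TrustLevel.SUGGEST_ONLY,
}


def action_for(fix_type: str, approved: bool = False) -> str:
    """Decide what to do with a validated fix of the given type."""
    level = POLICY.get(fix_type, TrustLevel.SUGGEST_ONLY)  # unknown types get the safest mode
    if level is TrustLevel.AUTO_COMMIT:
        return "commit"
    if level is TrustLevel.REQUIRE_APPROVAL:
        return "commit" if approved else "await_approval"
    return "suggest"


print(action_for("lint"), action_for("test"), action_for("dependency"))
# commit await_approval suggest
```

Defaulting unknown fix types to the most conservative level means that widening automation is always an explicit policy change rather than an accident.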

What is the measurable ROI of implementing Gitar?

Many teams see less time spent on CI failures, faster pull request turnaround, and fewer manual review cycles. For a typical 20-developer team, even cutting CI and review overhead by a fraction of an hour per person per day can avoid substantial annual costs while improving predictability.

How does Gitar integrate with existing tools and workflows?

Gitar integrates with GitHub and GitLab repositories and works across major CI platforms such as GitHub Actions, GitLab CI, CircleCI, and Buildkite. It operates inside existing pull request and review flows, so teams keep their current branching and release strategies.

Conclusion: Building a Self-Healing Delivery Pipeline with Gitar

Software teams in 2026 face increased pressure to ship quickly without sacrificing quality. AI coding tools have accelerated code creation, but they have also exposed validation and debugging as the new constraint. Autonomous debugging assistants help close that gap by moving CI and review workflows toward self-healing behavior.

Gitar contributes to that shift by fixing many CI failures automatically, implementing review feedback on demand, and working inside real CI environments. Teams that adopt this approach gain more focused developers, faster pull request cycles, and clearer visibility into delivery performance.