Key Takeaways

- Engineering teams lose a large share of capacity to debugging, CI failures, and review rework, which directly slows time-to-market and increases costs.

- Autonomous AI debugging assistants extend CI and code review by detecting, fixing, and validating issues so developers can stay focused on feature work.

- Self-healing CI, clear trust controls, and deep environment awareness are critical requirements for any production-ready autonomous debugging solution.

- Successful adoption depends on gradual rollout, strong guardrails, and measurable impact on DORA metrics such as MTTR and deployment frequency.

- Teams can start improving CI reliability and code review speed by adding Gitar to their existing workflows, then iterating on trust levels as results prove out. Try Gitar on your CI pipeline.

The Developer Productivity Crisis: Why Autonomous AI Debugging Matters

Modern engineering teams spend a large portion of their time on maintenance instead of new product work. Developers give roughly 35-40% of their time to debugging, refactoring, and dealing with CI/CD and deployment issues. In complex systems, debugging and rework alone can consume 40-50% of overall effort.

These problems show up most sharply in CI and code review. A 20-developer team can lose around $1 million per year in productivity to failing builds, slow pipelines, and repeated review cycles. Each failed run disrupts focus, and what should be a quick fix often stretches into an hour or more of context switching. CI/CD and build system friction is consistently identified as a major drag on developer productivity and flow.
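A back-of-the-envelope calculation shows how a figure of that size can arise. The inputs below (one hour lost per developer per day, a $200 loaded hourly cost, and 250 working days per year) are illustrative assumptions, not the data behind the estimate above, and the function name is hypothetical.

```python
def annual_friction_cost(developers, hours_lost_per_day,
                         loaded_hourly_cost, working_days_per_year):
    """Yearly cost of developer time lost to CI and review friction."""
    return (developers * hours_lost_per_day
            * loaded_hourly_cost * working_days_per_year)


# Illustrative inputs only; substitute your own team's numbers.
cost = annual_friction_cost(
    developers=20,
    hours_lost_per_day=1.0,     # assumed
    loaded_hourly_cost=200.0,   # assumed
    working_days_per_year=250,  # assumed
)
print(f"${cost:,.0f} per year")  # $1,000,000 per year
```

Halving the assumed hours lost per day halves the result, so the estimate is only as good as the time-loss measurement behind it.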

Organizations that reduce this friction gain a real speed advantage. Top-quartile software organizations ship features several times faster than median peers by improving testing, integration, and deployment workflows. Autonomous AI debugging assistants give teams a concrete way to close that gap.

Install Gitar to reduce CI toil and shorten the path from pull request to merge.

How Autonomous AI Debugging Assistants Fit Into Your Delivery Pipeline

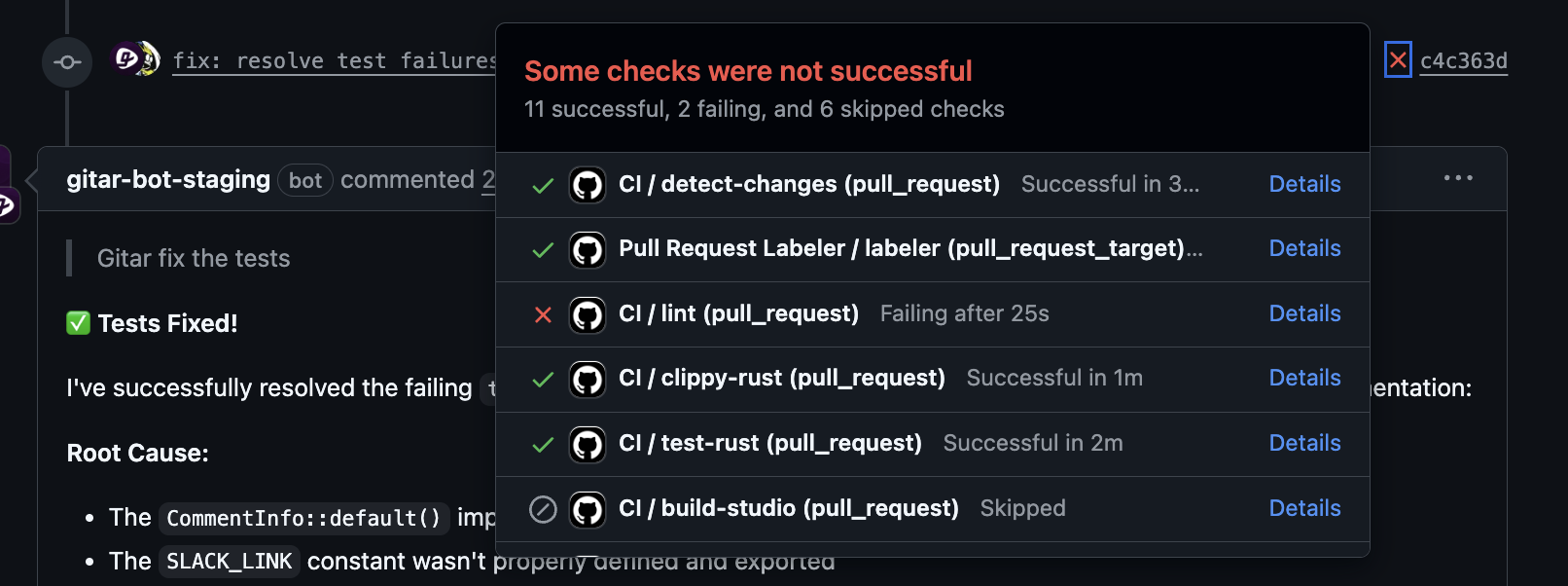

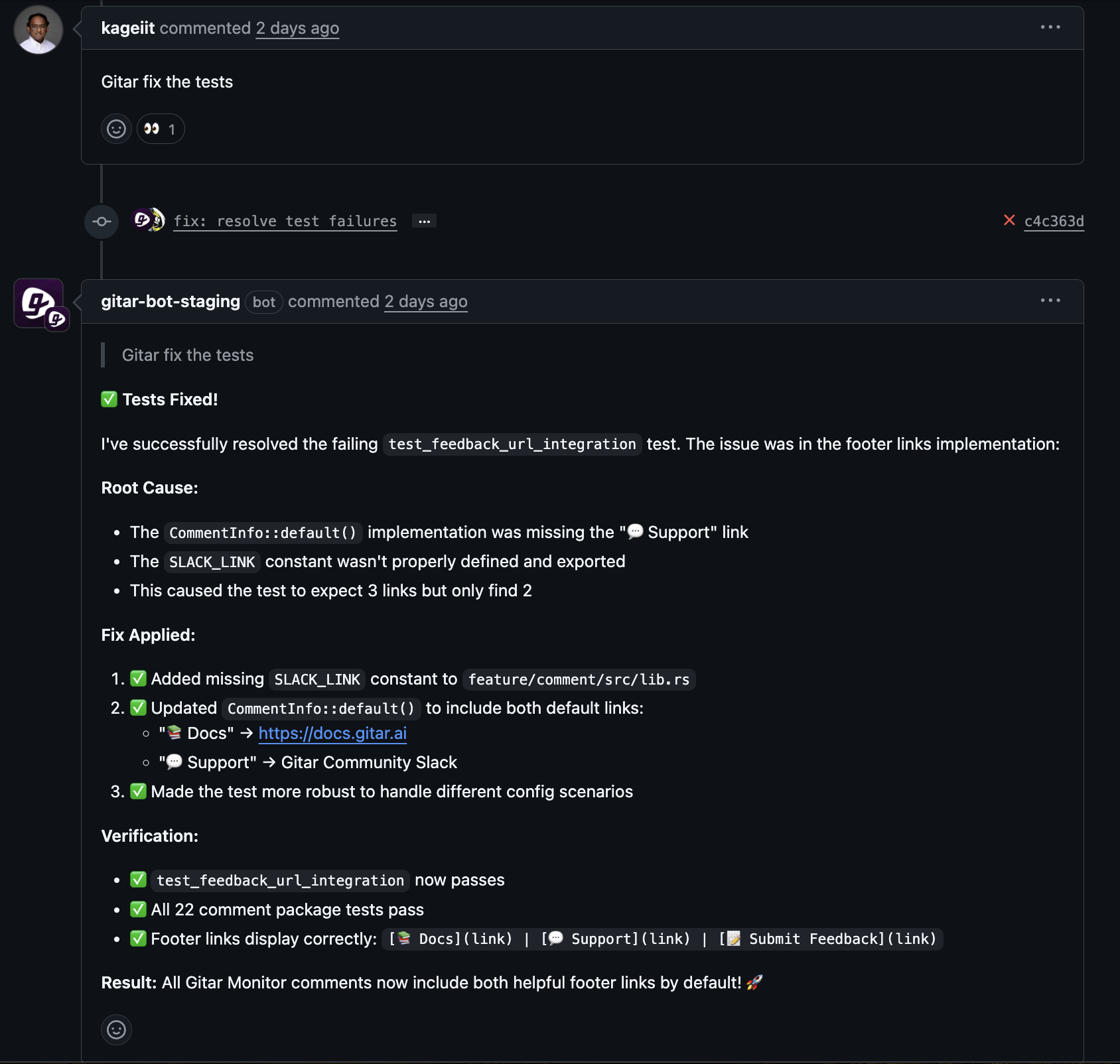

Autonomous AI debugging assistants mark a shift from suggestion-only tools to systems that can act. Instead of only pointing out issues, they detect CI failures and review feedback, generate code changes, and apply fixes that pass checks.

These agents integrate with CI/CD, listen for events such as failed jobs, analyze logs, and modify code in the same repositories developers use. Self-healing CI describes this pattern, where common and repeatable failures resolve automatically, often before developers return to the pull request.

The most effective systems operate with:

- Event-driven triggers based on CI status and review comments

- Access to repo and environment context, including configuration and dependencies

- Automatic validation through existing tests and checks

- Policy controls that define where and how the agent can act

Safe autonomy depends on safeguards such as testing, static analysis, and policy constraints around changes. This moves the system beyond a generic code assistant toward an operational tool that fits into production workflows.
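The four requirements above can be sketched as a small event handler. This is a minimal illustration under stated assumptions, not Gitar's implementation: `CIEvent`, `Policy`, `Patch`, `handle_event`, and the `propose_fix` and `run_checks` callbacks are all hypothetical names.

```python
from dataclasses import dataclass
from fnmatch import fnmatch
from typing import Callable, List, Optional


@dataclass
class CIEvent:
    repo: str
    job: str
    status: str  # "failed" or "passed"
    log: str


@dataclass
class Patch:
    files: List[str]
    description: str


@dataclass
class Policy:
    allowed_repos: List[str]    # glob patterns the agent may act on
    protected_paths: List[str]  # glob patterns the agent must not touch


def is_permitted(policy: Policy, event: CIEvent, patch: Patch) -> bool:
    """Policy control: check the repository and every touched file."""
    repo_ok = any(fnmatch(event.repo, p) for p in policy.allowed_repos)
    touches_protected = any(
        fnmatch(f, p) for f in patch.files for p in policy.protected_paths
    )
    return repo_ok and not touches_protected


def handle_event(
    event: CIEvent,
    policy: Policy,
    propose_fix: Callable[[CIEvent], Optional[Patch]],
    run_checks: Callable[[Patch], bool],
) -> str:
    """Event-driven trigger: react only to failed jobs."""
    if event.status != "failed":
        return "ignored"
    patch = propose_fix(event)  # uses the log and repo context
    if patch is None:
        return "no-fix"
    if not is_permitted(policy, event, patch):
        return "blocked-by-policy"
    # Automatic validation: keep the fix only if existing checks pass.
    return "applied" if run_checks(patch) else "rejected-by-checks"
```

The policy check runs before validation so that a disallowed patch never reaches the pipeline at all.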

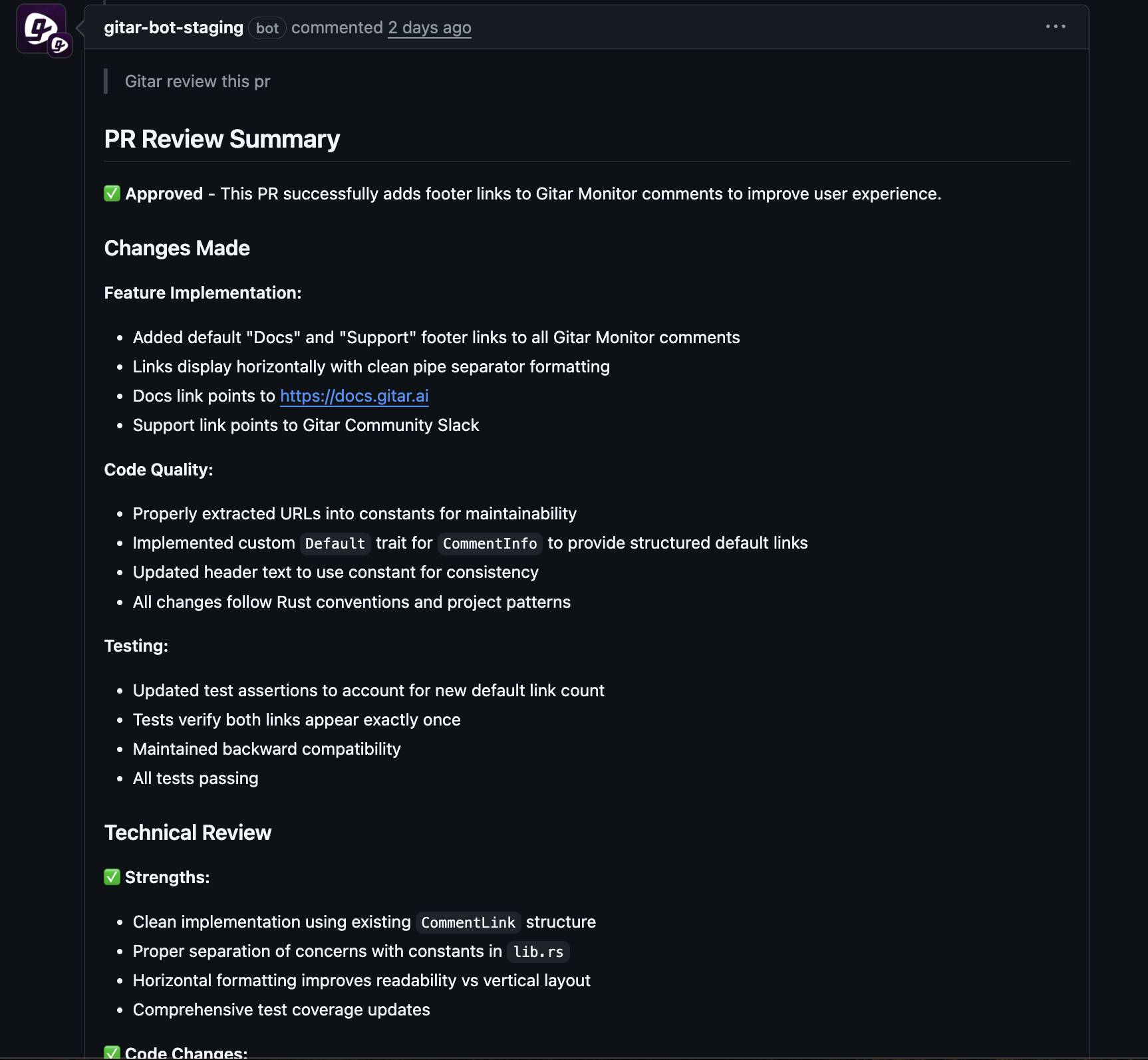

Reducing CI and Review Friction With Gitar

Gitar focuses on CI failures and code review feedback, acting as a self-healing layer on top of your existing tools. The system watches CI runs and pull request conversations, identifies the cause of failures or requested changes, and then proposes or commits fixes that it validates through your pipelines.

Key capabilities include:

- End-to-end fixing, where Gitar generates code changes, pushes updates, and waits for CI to confirm a green build

- Environment replication that respects your languages, SDK versions, and tools such as SonarQube or Snyk

- Automatic implementation of reviewer feedback so simple review comments do not block merges across time zones

- A configurable trust model that ranges from suggested patches to direct commits with audit trails and rollback

- Support for major CI platforms, including GitHub Actions, GitLab CI, CircleCI, and Buildkite

This approach means CI and review issues resolve closer to real time, while developers keep working on higher-value tasks.

See how Gitar fits into your existing Git-based workflows.

Healing Engines vs Suggestion Engines: What Changes for Your Team

How Healing Engines Differ From Suggestion-Based Tools

| Feature Category | Gitar (Autonomous Healing Engine) | Suggestion Engines (e.g., CodeRabbit, LLM Integrations) |
| --- | --- | --- |
| Core functionality | Generates, applies, and validates fixes in CI/CD and code review | Generates comments or patches that require manual application |
| Impact on workflow | Self-healing CI, reduced context switching, faster unblocking | Ongoing need for manual review and edits, continued interruptions |
| Integration depth | Uses CI configuration and environment details for accurate fixes | Often limited to repository context and static analysis |
| Trust model | Configurable from suggestions to direct commits with rollback | Relies on developers to apply or discard suggestions |

Gitar emphasizes autonomy within clear boundaries. The system uses full environment context to increase the chance that each fix passes CI the first time. Teams can start with low-autonomy modes where Gitar proposes changes for human approval, then move toward direct commits as successful fixes build trust.
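One way to picture this progression is a trust level that moves up or down with the agent's track record. The sketch below is an assumption-laden illustration, not Gitar's actual trust model: the intermediate level, the thresholds, and the names `TrustLevel` and `next_trust_level` are all hypothetical.

```python
from enum import IntEnum


class TrustLevel(IntEnum):
    SUGGEST_ONLY = 0           # agent proposes, humans apply
    COMMIT_AFTER_APPROVAL = 1  # assumed intermediate step
    DIRECT_COMMIT = 2          # agent commits, with audit and rollback


def next_trust_level(current, fixes_attempted, fixes_passed_ci,
                     min_samples=20, promote_at=0.9, demote_below=0.6):
    """Promote or demote one step based on the share of fixes that passed CI.

    All thresholds are illustrative defaults, not recommended values.
    """
    if fixes_attempted < min_samples:
        return current  # not enough evidence to change anything
    success_rate = fixes_passed_ci / fixes_attempted
    if success_rate >= promote_at:
        return TrustLevel(min(current + 1, TrustLevel.DIRECT_COMMIT))
    if success_rate < demote_below:
        return TrustLevel(max(current - 1, TrustLevel.SUGGEST_ONLY))
    return current
```

Moving one step at a time, and requiring a minimum sample before any change, keeps a short lucky or unlucky streak from swinging the autonomy level.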

Strategic Adoption: Build vs Buy and Measuring Impact

Leaders evaluating autonomous debugging must decide whether to build internal capabilities or adopt a specialized platform. In-house efforts require investment in model selection, prompt and policy design, integration with CI, and continuous maintenance. At the same time, AI-enhanced tooling has been associated with 20-45% productivity improvements on development tasks, so the opportunity cost of delay is material.

Effective evaluation focuses on:

- Time and expertise required to build and operate autonomous agents

- Security, compliance, and audit needs for code-changing systems

- Fit with existing CI/CD stack and Git workflows

- Time-to-value for reducing CI failures and review delays

Impact can be measured with standard delivery metrics. The four DORA metrics (deployment frequency, lead time for changes, mean time to recovery (MTTR), and change failure rate) provide a clear view of how automation affects delivery performance. Teams can track these before and after rollout to quantify ROI.
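As a rough sketch of the before-and-after comparison, the metrics can be computed from a list of deployment records. The `Deployment` record shape and the `dora_summary` function are hypothetical; real data would come from your deployment and incident tooling, and teams differ in exactly how they define each metric.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import List, Optional


@dataclass
class Deployment:
    committed_at: datetime
    deployed_at: datetime
    failed: bool = False                    # caused a production failure
    restored_at: Optional[datetime] = None  # when service was restored


def dora_summary(deployments: List[Deployment], period_days: int) -> dict:
    """Summarize the four metrics for one observation period."""
    lead_times = [d.deployed_at - d.committed_at for d in deployments]
    failures = [d for d in deployments if d.failed]
    restores = [d.restored_at - d.deployed_at
                for d in failures if d.restored_at is not None]
    return {
        "deployments_per_day": len(deployments) / period_days,
        "median_lead_time": median(lead_times) if lead_times else None,
        "change_failure_rate": (len(failures) / len(deployments)
                                if deployments else None),
        "mttr": (sum(restores, timedelta()) / len(restores)
                 if restores else None),
    }
```

Running the same function over a window before rollout and a window after it gives two comparable summaries, provided both windows use the same definitions and a similar mix of work.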

Implementation Readiness and Best Practices

Teams see the best results when they introduce autonomous debugging into a reasonably mature CI/CD environment. Strong test coverage, reliable pipelines, and consistent use of pull requests create the conditions where an agent can act safely.

A short readiness check should review:

- Current CI stability and flakiness levels

- Depth and reliability of tests on critical paths

- Code review norms and expectations for automation

- Security and compliance requirements for automated commits
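The first item, CI flakiness, can be estimated from pipeline history. The sketch below assumes one common working definition: a job is flaky on a commit if it both passed and failed there across reruns. The `flaky_rate` function and the tuple format are hypothetical.

```python
from collections import defaultdict


def flaky_rate(runs):
    """Share of (commit, job) pairs with both a pass and a fail on record.

    `runs` is an iterable of (commit_sha, job_name, passed) tuples.
    """
    outcomes = defaultdict(set)
    for sha, job, passed in runs:
        outcomes[(sha, job)].add(bool(passed))
    if not outcomes:
        return 0.0
    flaky = sum(1 for seen in outcomes.values() if len(seen) == 2)
    return flaky / len(outcomes)


history = [
    ("a1", "unit", False),
    ("a1", "unit", True),   # same commit passed on rerun: flaky
    ("a1", "lint", True),
    ("b2", "unit", True),
    ("b2", "lint", False),  # failed and never passed: a genuine failure
]
print(flaky_rate(history))  # 0.25
```

A high rate suggests stabilizing tests first, because an agent that validates fixes through CI inherits whatever noise the pipeline already has.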

A phased rollout usually works best:

- Start with read-only analysis and suggested patches

- Allow automatic fixes for low-risk issues such as linting and formatting

- Expand to test failures and build configuration problems

- Increase autonomy levels for trusted repositories and teams
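The phases above can be written down as a small policy table so that the current stage is explicit and reviewable. This is a sketch under assumptions: the category names, the action labels, and the `action_for` function are hypothetical, and a real tool may expose this configuration differently.

```python
# Failure categories the agent may fix automatically in each phase.
AUTO_FIX_BY_PHASE = {
    1: frozenset(),                    # analysis and suggestions only
    2: frozenset({"lint", "format"}),  # low-risk issues
    3: frozenset({"lint", "format", "test_failure", "build_config"}),
    4: frozenset({"lint", "format", "test_failure", "build_config"}),
}


def action_for(category, phase, repo_trusted=False):
    """Decide what the agent may do with a failure of the given category."""
    if category not in AUTO_FIX_BY_PHASE[phase]:
        return "suggest"
    if phase == 4 and repo_trusted:
        return "direct_commit"       # higher autonomy for trusted repos
    return "auto_fix_with_review"    # fix is pushed, humans still approve


print(action_for("lint", phase=1))          # suggest
print(action_for("lint", phase=2))          # auto_fix_with_review
print(action_for("test_failure", phase=2))  # suggest
print(action_for("test_failure", phase=4, repo_trusted=True))  # direct_commit
```

Keeping the table in version control means a change in autonomy goes through the same review as any other change.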

Clear ownership, monitoring, and feedback loops help teams refine policies as they learn where autonomy delivers the most value.

Avoiding Common Pitfalls With AI Debugging Agents

Several recurring mistakes can limit the value of autonomous debugging:

- Moving to full autonomy too quickly, before guardrails, logging, and rollback are in place

- Deploying agents that sit outside normal Git and CI workflows, which creates friction and low adoption

- Providing little or no explanation of changes, which reduces trust among developers

- Underestimating configuration and change management work, leading to stalled pilots

Trust in automated behavior grows when teams can inspect rationales, reproduce results, and revert changes easily. Successful deployments keep humans in control while letting the agent handle repetitive, well-bounded tasks.
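A simple way to support inspection and easy reverts is to record each automated change with its rationale and the command that undoes it. The `AuditEntry` shape below is a hypothetical sketch, not a format any particular tool emits; the only external command it relies on is the standard `git revert --no-edit <sha>`.

```python
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass(frozen=True)
class AuditEntry:
    commit_sha: str      # the commit the agent created
    trigger: str         # e.g. the failed job or review comment
    rationale: str       # human-readable explanation of the change
    checks_passed: bool  # result of the validating CI run

    def to_json(self) -> str:
        """Serialize for an append-only audit log."""
        return json.dumps(asdict(self), sort_keys=True)

    def revert_command(self) -> List[str]:
        """Argument list for undoing this change with git."""
        return ["git", "revert", "--no-edit", self.commit_sha]


entry = AuditEntry(
    commit_sha="abc1234",  # placeholder value
    trigger="job:lint",
    rationale="Wrapped a line that exceeded the configured length limit.",
    checks_passed=True,
)
print(entry.to_json())
print(" ".join(entry.revert_command()))  # git revert --no-edit abc1234
```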

Install Gitar to start with low-risk automation and grow autonomy as your team gains confidence.

Frequently Asked Questions about AI Debugging Assistants

How do autonomous AI debugging agents differ from traditional AI code review tools?

Autonomous agents such as Gitar monitor CI and review activity, generate code fixes, and apply them directly to pull or merge requests, then rely on tests to validate the result. Traditional AI review tools focus on comments, summaries, or patch suggestions that developers must apply and re-run. The key difference is that autonomous agents own the full loop from detection to validated fix.

Is it safe to allow an AI to make direct changes to a codebase?

Safety depends on how autonomy is configured. Gitar uses a trust model where teams can begin in suggestion-only mode, then allow the agent to commit changes once it has demonstrated reliable behavior. Every change passes through existing CI checks, and audit trails and rollback options remain available so engineers can review and undo any fix that does not meet expectations.

What is the typical ROI for implementing an autonomous AI debugging assistant like Gitar?

Most of the ROI comes from time recovered from CI failures and review churn. As estimated earlier, a 20-developer team can lose around $1 million per year to these issues. Even if an autonomous assistant resolves only a fraction of failures and review comments without human effort, the result is more available engineer time, shorter lead times, and an improved developer experience.

How do autonomous debugging agents affect distributed teams across time zones?

Distributed teams benefit when CI and review fixes proceed while parts of the team are offline. Reviewers can leave comments that Gitar implements, so developers in other regions return to ready-to-merge pull requests instead of waiting another full day for small changes.

The Future of Engineering With Autonomous AI Debugging

Autonomous AI debugging assistants turn CI and code review from frequent blockers into more reliable, faster processes. The technology helps reduce rework, speeds up recovery from failures, and supports a better developer experience. Organizations that modernize their toolchains and automation report faster delivery, higher quality, and better developer satisfaction.

Gitar offers an autonomous debugging layer that works with existing CI platforms and Git workflows, using environment-aware fixes and flexible trust controls. Teams can introduce it gradually, measure results through established metrics, and expand autonomy where it clearly adds value.