Key Takeaways

- Engineering teams lose significant time and focus to CI failures and pull request review cycles, especially in distributed environments.

- Autonomous AI agents built around a healing engine reduce this toil by diagnosing CI failures, applying fixes, and validating builds without manual intervention.

- Suggestion-only tools still require developers to implement and validate changes, so they do not fully address the productivity cost of failed pipelines.

- Strategic rollout, clear trust controls, and ROI tracking help leaders adopt AI agents safely and measure real impact on throughput and developer experience.

- Gitar provides production-ready CI healing agents that automatically fix failing builds and code review feedback, which you can try by installing Gitar for your repositories.

The Unseen Costs of Engineering Toil: Why Manual Pull Request Management Fails Modern Teams

Engineering toil in pull request management is a major but often overlooked productivity drain. Developers can waste up to 30% of their time dealing with CI failures and code review cycles, which creates large operational costs that reach beyond engineering.

Typical pull request workflows create a cycle of inefficiency. A developer pushes code, and CI fails because of a missing dependency, a flaky test, or a linter complaint. The developer then has to dig through logs, switch back to a local environment, apply a fix, commit, push, and wait again for the pipelines. A five-minute fix often turns into an hour of disrupted focus.

Context switching magnifies this cost. When developers move to a new task, then get pulled back by CI failures or review comments, they lose flow and spend extra time rebuilding mental context. A simple 30-minute fix can consume an hour or more of productive capacity.

Distributed teams feel this even more. A developer on the US West Coast who needs a review from a teammate in Bangalore can see a simple back-and-forth stretch from hours into days because of time zone gaps. This friction contributes to burnout and delays projects at roughly 60% of companies.

Faster code generation from tools like GitHub Copilot and Cursor increases the volume of code that needs review and testing, which raises the stakes for efficient pull request management. Gitar helps absorb this load by automatically fixing broken builds and acting on review feedback, so developers can stay focused on higher-value work.

Introducing Gitar: Autonomous AI Agents to Eliminate Pull Request Toil

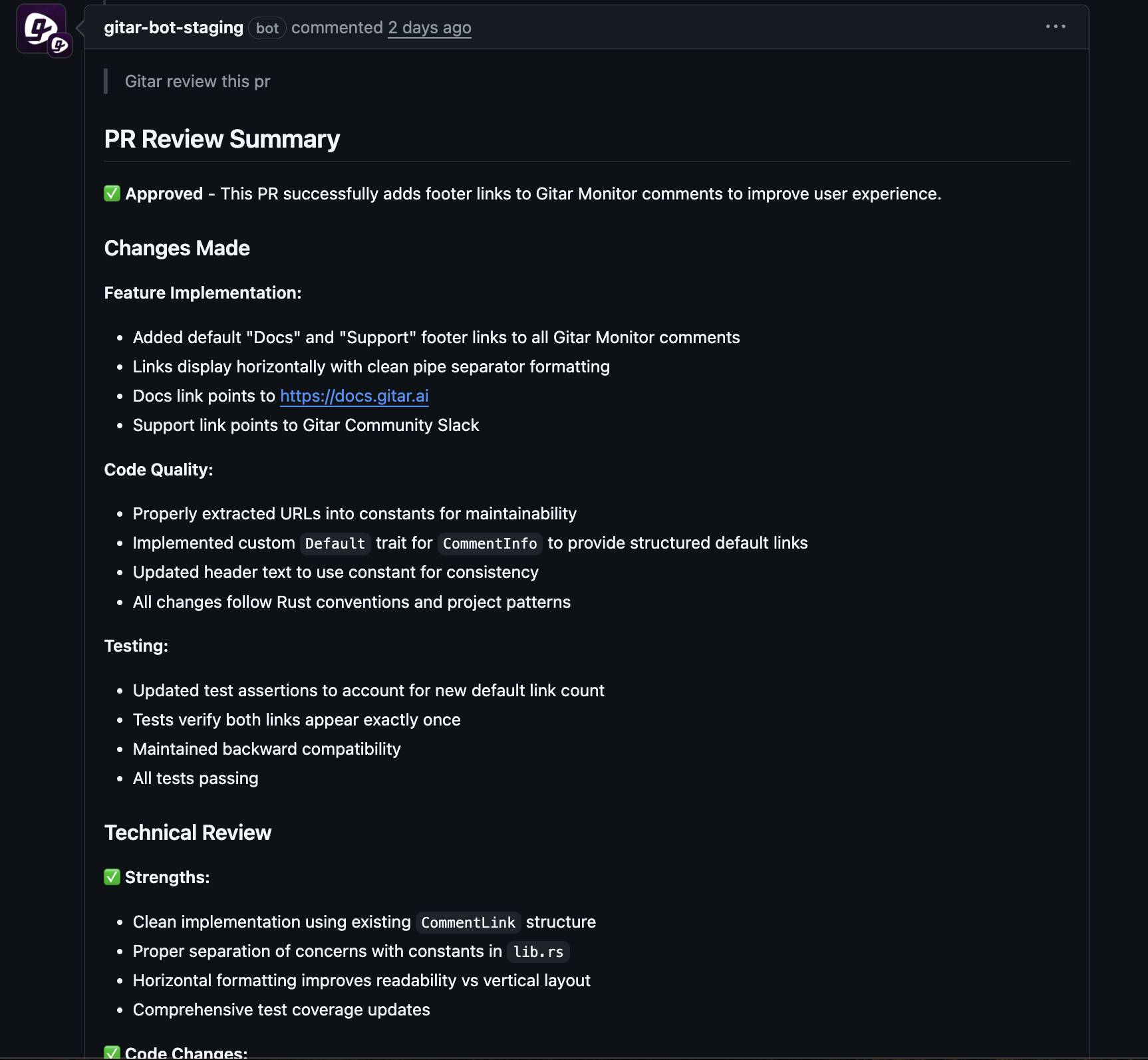

Gitar shifts pull request management from reactive firefighting to proactive automation. Its autonomous AI agents act as a healing engine that identifies CI failures, proposes or applies fixes, and validates updated builds so pull requests can move forward with less manual work.

Key capabilities that set Gitar apart include:

- Autonomous CI fixes that resolve linting errors, test failures, and build issues by analyzing logs, generating changes, and committing to the pull request branch

- Intelligent code review action that interprets reviewer comments, applies requested edits, and posts clear explanations

- Full environment replication that mirrors enterprise workflows with specific SDKs, dependencies, and third-party tools

- A configurable trust model that ranges from suggestion only to auto-commit, based on team preference

- Cross-platform support that works with GitHub Actions, GitLab CI, CircleCI, Buildkite, and other major CI systems

Request a Gitar demo to see autonomous CI healing in your own repositories.

AI Agent Architectures: The Difference Between Healing and Suggestion Engines

Architecture matters when evaluating AI agents for pull request management. Different approaches offer very different levels of automation, validation, and integration effort.

How a Healing Engine Works

Gitar uses a healing engine model for CI automation. This model not only suggests fixes but also applies them, runs the full CI workflow, and confirms that builds pass before handing the pull request back to the team. The engine analyzes failures in context, generates code changes, updates branches, and triggers the same pipelines that developers rely on for production readiness.

This approach closes the gap between identifying an issue and proving that a fix is safe. Healing engines can mirror complex enterprise environments, such as specific SDK combinations, multilingual projects, and security or quality scanners like SonarQube and Snyk.
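The loop described above (analyze the failure, generate a change, update the branch, re-run the pipeline, confirm the result) can be sketched in a few lines. This is only an illustrative outline, not Gitar's implementation: `run_ci`, `propose_fix`, `apply_fix`, and the `max_attempts` limit are hypothetical names introduced here as stand-ins for whatever a real agent would call.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class CIResult:
    passed: bool
    log: str = ""


def heal(
    run_ci: Callable[[], CIResult],
    propose_fix: Callable[[str], Optional[str]],
    apply_fix: Callable[[str], None],
    max_attempts: int = 3,
) -> bool:
    """Return True once CI passes, False if the loop gives up.

    All three callables are hypothetical stand-ins, not Gitar APIs.
    """
    result = run_ci()
    for _ in range(max_attempts):
        if result.passed:
            return True
        patch = propose_fix(result.log)  # analyze the failure log
        if patch is None:                # no candidate fix: hand back to a human
            return False
        apply_fix(patch)                 # update the pull request branch
        result = run_ci()                # re-run the same pipeline to validate
    return result.passed
```

The point of the sketch is the final step: a fix counts as done only after the pipeline has been re-run and has passed, which is what separates this model from a tool that stops at the suggestion.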

Where Suggestion Engines Fit

Suggestion engines, including many AI code reviewers, analyze code and highlight issues or improvements. These tools add value for review quality and education, yet developers still need to implement and validate most changes. Manual effort remains significant, especially when CI pipelines fail.

How On-Demand AI Fixers Compare

On-demand AI fixers, such as generic coding assistants or single-purpose GitHub Actions, typically run only when a developer calls them. They can help with targeted problems but may lack deep environment awareness or broad platform coverage, which limits their impact on overall engineering toil.

| Feature | Gitar (CI Healing Engine) | AI Code Reviewers (Suggestion Engines) | On-Demand AI Fixers |
| --- | --- | --- | --- |
| Core Functionality | Autonomous fixes with validation | Suggestions requiring manual implementation | Manual triggering for specific issues |
| Validation/Guaranteed Fixes | Full CI pipeline validation | Validation may vary by tool | Validation varies; specifics depend on the setup |
| Environmental Context | Complete environment replication | Surface-level code analysis | Basic context; varies by tool |
| Platform Support | Cross-platform (GitHub, GitLab, CircleCI) | Primarily Git-provider focused | Support varies by tool |

Strategic Implementation: Integrating Gitar into Your Engineering Workflow for Maximum ROI

Using Configurable Modes to Build Trust

Gitar includes configurable modes so teams can ramp up automation safely. In conservative mode, the agent suggests fixes, and developers approve or edit them before merging. As confidence grows, teams can enable automatic commits with safeguards such as branch protections and required reviews.
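One way to picture the two ends of this trust range is as a small decision function. The sketch below is an assumption made for illustration only: the `TrustMode` names, the `next_action` function, and the rule that unvalidated fixes are discarded are hypothetical and do not describe Gitar's actual settings or API.

```python
from enum import Enum


class TrustMode(Enum):
    SUGGEST_ONLY = "suggest_only"  # post the fix; a developer approves or edits it
    AUTO_COMMIT = "auto_commit"    # commit the fix to the pull request branch


def next_action(mode: TrustMode, fix_validated: bool) -> str:
    """Decide what a hypothetical agent does with a candidate fix."""
    if not fix_validated:
        return "discard"           # assumed rule: unvalidated fixes are not surfaced
    if mode is TrustMode.AUTO_COMMIT:
        # Branch protections and required reviews still gate the merge itself.
        return "commit_to_branch"
    return "post_suggestion"
```

A team starting in conservative mode would run everything through `TrustMode.SUGGEST_ONLY` and switch selected repositories to `TrustMode.AUTO_COMMIT` once the suggestions have proven reliable.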

Connecting Gitar to Your Existing Dev Stack

Gitar integrates with common version control and CI platforms, including GitHub, GitLab, GitHub Actions, GitLab CI, CircleCI, and Buildkite. Setup usually involves authorizing a GitHub App or similar connection for selected repositories and configuring options in the web dashboard, without custom prompt engineering or fragile scripts.

Quantifying the Impact: Measuring ROI for Autonomous Pull Request Management

Clear ROI helps justify automation investment. A 20-developer team in which each developer spends one hour per day on CI and review issues loses about 5,000 hours per year (20 developers × 1 hour × roughly 250 working days). At a loaded cost of 200 dollars per hour, that is about 1 million dollars per year.

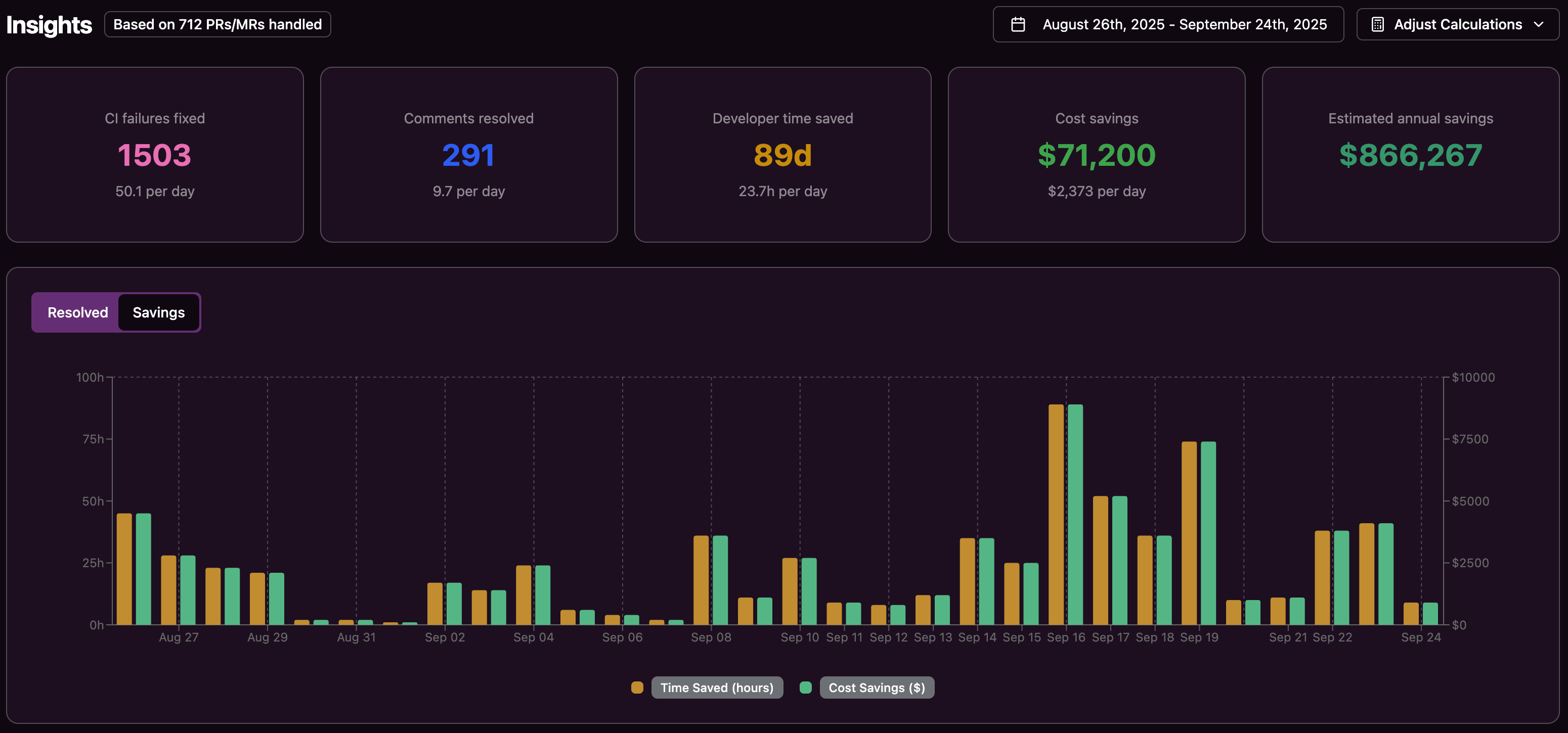

Even if automation removes only half of this toil, the savings are substantial, and leaders also see faster release cycles and better developer morale. Gitar reporting surfaces metrics such as CI failures fixed, comments resolved, and estimated time and cost savings so teams can track impact over time.
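The arithmetic behind these figures is easy to rerun with your own numbers. The sketch below assumes roughly 250 working days per year, which is what the 5,000-hour figure implies for a 20-developer team; the function name and parameters are illustrative, not part of any Gitar reporting API.

```python
def annual_toil_cost(developers, hours_per_day, working_days, loaded_rate):
    """Return (hours lost per year, cost per year) for CI and review toil."""
    hours = developers * hours_per_day * working_days
    return hours, hours * loaded_rate


# Figures from the example above; 250 working days is an assumption.
hours, cost = annual_toil_cost(
    developers=20, hours_per_day=1.0, working_days=250, loaded_rate=200.0
)
savings_if_halved = cost * 0.5  # automation removes half of the toil

print(f"{hours:,.0f} hours, ${cost:,.0f} per year, ${savings_if_halved:,.0f} saved")
```

Swapping in your own team size, daily toil estimate, and loaded rate gives a baseline to compare against the savings reported after rollout.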

Overcoming Strategic Pitfalls: Avoiding Common Errors in AI Agent Adoption

Thoughtful planning helps teams avoid common missteps when rolling out AI agents for CI and pull request automation.

- Environmental blind spots: Some teams expect general-purpose AI tools to work in complex CI environments without full integration. Reliable fixes require accurate replication of dependencies, SDK versions, and third-party scanners.

- Overreliance on suggestions: Relying only on suggestion engines still leaves developers with manual implementation and validation work, so CI remains a bottleneck.

- Change management gaps: Teams that skip education and gradual rollout often face skepticism. A phased approach that starts in suggestion mode and highlights early wins builds trust.

- Incomplete cost accounting: Many organizations track only build minutes, not the time lost to context switching, review delays, and missed deadlines, which hides the true cost of engineering toil.

Frequently Asked Questions About Reducing Engineering Toil with AI Agents

How does Gitar differ from existing AI reviewers?

Many AI reviewers focus on commenting on code or generating suggested patches that developers must apply and validate. Gitar instead acts as a healing engine that applies fixes, runs your CI workflows, and returns pull requests in a passing state whenever possible.

How can teams maintain trust in automated fixes?

Teams keep control over merges with Gitar policy settings. Organizations can require human review and approval before any AI-generated commit is merged, and they can tune where Gitar runs, such as on specific repositories or branches.

How does Gitar support complex CI environments?

Gitar is built to emulate real project environments, including language runtimes, frameworks, dependencies, and tools such as SonarQube and Snyk. This depth allows the agent to propose fixes that match the way your pipelines already run.

How does Gitar affect CI minutes and pipeline performance?

Gitar is designed to run alongside existing workflows without consuming additional customer CI minutes or slowing pipelines. The agent optimizes when it triggers work so developers see fewer failed runs and less repetition.

How does Gitar help distributed teams coordinate reviews?

Distributed teams can use Gitar to keep pull requests moving while teammates are offline. A reviewer can leave a comment addressed to Gitar, the agent implements the change and updates the pull request, and the original author comes back online to a pull request that is ready to merge.

Conclusion: Reclaiming Developer Productivity by Eliminating Engineering Toil

Engineering toil around CI failures and pull request reviews slows delivery and erodes developer satisfaction. Manual triage and repetitive fixes do not scale in a world of accelerating code generation and globally distributed teams.

Autonomous AI agents like Gitar introduce a healing layer into the development workflow. By diagnosing failures, applying and validating fixes, and respecting existing review policies, these agents reduce interruptions and free engineers to focus on design, architecture, and product impact.

Teams that adopt this model gain higher throughput, lower operational cost per feature, and a more sustainable developer experience. Request a Gitar demo to see how autonomous CI healing can reduce engineering toil and speed up time to merge.